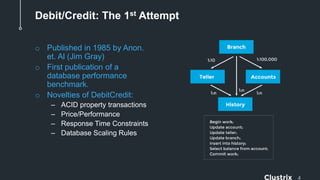

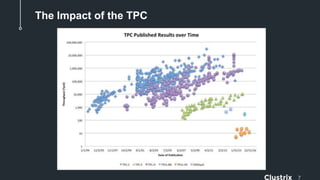

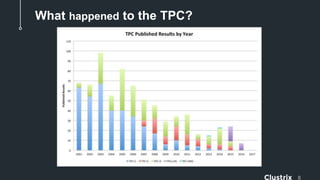

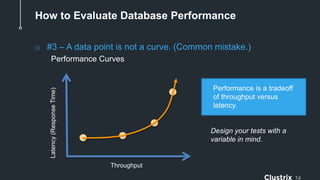

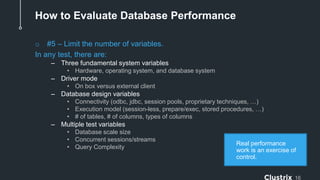

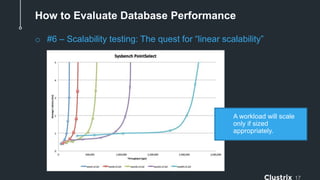

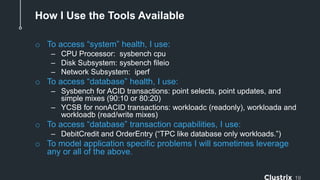

The document outlines the history of database benchmarks, covering the evolution of the Transaction Processing Performance Council (TPC) and its standards as well as open-source tools such as Sysbench and YCSB for evaluating database performance. It also gives best practices for assessing database performance: understanding the objectives, choosing an approach, and identifying bottlenecks. The author emphasizes that benchmarks, while valuable, do not always represent actual application performance.