This document discusses machine learning techniques for computer vision tasks, focusing on deep learning and neural networks. It describes how neural networks can learn complex image features without hand-engineering, achieving state-of-the-art results in tasks like image classification. While powerful, deep learning models require large labeled datasets and are difficult to tune. The document also introduces the technique of transfer learning, where features learned from one task can be reused as inputs for another task with less data.

![Machine Learning Specialization. Many hand-created features exist for finding interest points: SIFT [Lowe ’99]; Spin Images [Johnson & Hebert ’99]; Textons [Malik et al. ’99]; RIFT [Lazebnik ’04]; GLOH [Mikolajczyk & Schmid ’05]; HoG [Dalal & Triggs ’05]; … ©2015 Emily Fox & Carlos Guestrin](https://image.slidesharecdn.com/deeplearning-annotated-230401020645-b2ebbc4b/85/deeplearning-annotated-pdf-19-320.jpg)

![Many hand-created features exist for finding interest points (SIFT [Lowe ’99], Spin Images [Johnson & Hebert ’99], Textons [Malik et al. ’99], RIFT [Lazebnik ’04], GLOH [Mikolajczyk & Schmid ’05], HoG [Dalal & Triggs ’05], …), but hand-created features are very painful to design](https://image.slidesharecdn.com/deeplearning-annotated-230401020645-b2ebbc4b/85/deeplearning-annotated-pdf-21-320.jpg)
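To give a feel for what a hand-created feature involves, here is a minimal sketch of the core step behind HoG-style descriptors: a magnitude-weighted histogram of gradient orientations. It is a simplification, not the Dalal & Triggs pipeline (no cells, blocks, or normalization), and the function name `orientation_histogram` and the 9-bin default are illustrative assumptions rather than anything taken from the slides.

```python
import math

def orientation_histogram(image, n_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations (0-180 degrees).

    `image` is a list of rows of grayscale values. Only interior pixels are used,
    so border handling (one of many design decisions) is simply skipped here.
    """
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    height, width = len(image), len(image[0])
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Central differences approximate the horizontal and vertical gradients.
            gx = image[y][x + 1] - image[y][x - 1]
            gy = image[y + 1][x] - image[y - 1][x]
            magnitude = math.hypot(gx, gy)
            # Fold the direction into [0, 180) so opposite gradients share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            index = min(int(angle / bin_width), n_bins - 1)
            hist[index] += magnitude
    return hist

# Two toy 4x4 images: a vertical edge and a horizontal edge.
vertical_edge = [[0, 0, 1, 1] for _ in range(4)]
horizontal_edge = [[0] * 4, [0] * 4, [1] * 4, [1] * 4]

print(orientation_histogram(vertical_edge))
print(orientation_histogram(horizontal_edge))
```

The vertical edge puts all of its weight in the first bin (orientation near 0 degrees) and the horizontal edge in the middle bin (near 90 degrees). Every choice in the function, such as the bin count, the gradient filter, and the border rule, is a manual design decision, which is the pain point the slide refers to.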

![Deep learning implicitly learns features. Pipeline: Layer 1 → Layer 2 → Layer 3 → Prediction. Panels: example detectors learned; example interest points detected [Zeiler & Fergus ’13]](https://image.slidesharecdn.com/deeplearning-annotated-230401020645-b2ebbc4b/85/deeplearning-annotated-pdf-22-320.jpg)

![Sample results using deep neural networks: German traffic sign recognition benchmark, 99.5% accuracy (IDSIA team); house number recognition, 97.8% accuracy per character [Goodfellow et al. ’13]](https://image.slidesharecdn.com/deeplearning-annotated-230401020645-b2ebbc4b/85/deeplearning-annotated-pdf-24-320.jpg)

![Machine Learning Specialization

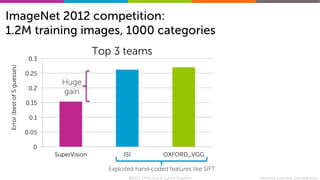

ImageNet 2012 competition:

1.2M training images, 1000 categories

©2015 Emily Fox & Carlos Guestrin

Winning entry: SuperVision

8 layers, 60M parameters [Krizhevsky et al. ’12]

Achieving these amazing results required:

• New learning algorithms

• GPU implementation](https://image.slidesharecdn.com/deeplearning-annotated-230401020645-b2ebbc4b/85/deeplearning-annotated-pdf-26-320.jpg)
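The "8 layers, 60M parameters" figure can be checked with simple arithmetic. The sketch below counts weights and biases per layer. The layer shapes are not on the slide: they are recalled from the architecture described by Krizhevsky et al. (2012), including the two-GPU split that halves the input depth of conv2, conv4, and conv5, so treat them as assumptions.

```python
# (name, number of filters or output units, inputs feeding each filter/unit)
# Shapes are assumed from the Krizhevsky et al. (2012) description, not from the slides.
LAYERS = [
    ("conv1", 96, 11 * 11 * 3),
    ("conv2", 256, 5 * 5 * 48),    # sees half of conv1's 96 maps (two-GPU split)
    ("conv3", 384, 3 * 3 * 256),
    ("conv4", 384, 3 * 3 * 192),   # half of conv3's 384 maps
    ("conv5", 256, 3 * 3 * 192),   # half of conv4's 384 maps
    ("fc6", 4096, 6 * 6 * 256),
    ("fc7", 4096, 4096),
    ("fc8", 1000, 4096),           # one output per ImageNet category
]

def count_parameters(layers):
    """Return {layer name: weights + biases}, with one bias per filter or unit."""
    return {name: units * fan_in + units for name, units, fan_in in layers}

counts = count_parameters(LAYERS)
total = sum(counts.values())

for name, n in counts.items():
    print(f"{name}: {n:,}")
print(f"total: {total:,} parameters in {len(LAYERS)} layers")
```

Under these assumed shapes the total lands just under 61 million, consistent with the slide's rounded 60M. Most of the parameters sit in the fully connected layers, not the convolutional ones.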

![Scene parsing with deep learning [Farabet et al. ’13]](https://image.slidesharecdn.com/deeplearning-annotated-230401020645-b2ebbc4b/85/deeplearning-annotated-pdf-28-320.jpg)

![Many tricks are needed to work well: different types of layers, connections, … are needed for high accuracy [Krizhevsky et al. ’12]](https://image.slidesharecdn.com/deeplearning-annotated-230401020645-b2ebbc4b/85/deeplearning-annotated-pdf-33-320.jpg)

![Careful where you cut: later layers may be too task-specific. Pipeline: Layer 1 → Layer 2 → Layer 3 → Prediction. Panels: example detectors learned; example interest points detected [Zeiler & Fergus ’13]. Annotations: "Too specific for new task" on the later layers, "Use these!" on the earlier layers](https://image.slidesharecdn.com/deeplearning-annotated-230401020645-b2ebbc4b/85/deeplearning-annotated-pdf-40-320.jpg)
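The idea of cutting a trained network and reusing its early layers can be sketched in a few lines. This is a toy illustration under loud assumptions: the "pretrained" layers are random stand-ins (a real network would load learned weights), the layer sizes, the cut point `CUT = 2`, and the toy task are all hypothetical, and the new classifier is a plain logistic regression trained only on the frozen features.

```python
import math
import random

def make_layer(rng, n_in, n_out):
    """Dense ReLU layer with random weights, standing in for pretrained weights."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    def forward(x):
        return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]
    return forward

def extract_features(layers, cut, x):
    """Run x through the first `cut` layers only; later layers are discarded."""
    for layer in layers[:cut]:
        x = layer(x)
    return x

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, b, f):
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)

def train_classifier(features, labels, epochs=100, lr=0.05):
    """Fit logistic regression on frozen features; the reused layers are never updated."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            g = predict(w, b, f) - y          # gradient of log loss w.r.t. the logit
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b

rng = random.Random(0)
# Layer 1 -> Layer 2 -> Layer 3, as on the slide (sizes are hypothetical).
pretrained = [make_layer(rng, 4, 8), make_layer(rng, 8, 6), make_layer(rng, 6, 3)]
CUT = 2  # keep layers 1-2, drop the task-specific layer 3

# Small labeled dataset for the new task (toy rule: is x[0] larger than x[1]?).
inputs = [[rng.random() for _ in range(4)] for _ in range(20)]
labels = [1 if x[0] > x[1] else 0 for x in inputs]

features = [extract_features(pretrained, CUT, x) for x in inputs]
w, b = train_classifier(features, labels)
print("feature size:", len(features[0]), "| P(label=1) for first input:", predict(w, b, features[0]))
```

Only the small classifier on top is trained, which is why this approach can work with far less labeled data than training the whole network. Moving `CUT` later reuses more of the original network but risks features that are too specific to the original task.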