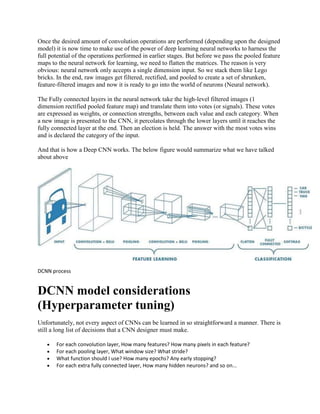

Deep convolutional neural networks (DCNNs) are a class of neural network commonly used to analyze visual imagery. Their convolutional layers extract features by sliding small filters across the input. Pooling layers then shrink the spatial dimensions of the resulting feature maps, which lowers the computational cost of later layers. A stack of convolutional and pooling layers is typically followed by fully connected layers that perform classification. Other key design elements include activation functions, dropout layers for regularization, hyperparameters such as filter size and number of layers, and training over many epochs with techniques such as early stopping to limit overfitting.
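To make the convolution and pooling steps concrete, here is a minimal pure-Python sketch of one filter sliding over a tiny image, followed by a ReLU activation and 2x2 max pooling. The function names (`conv2d_valid`, `relu`, `max_pool2d`), the toy image, and the edge filter are illustrative assumptions, not taken from any particular library. As in most deep learning frameworks, the "convolution" here is computed without flipping the filter (strictly, a cross-correlation), with stride 1 and no padding.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise ReLU activation: negative responses become 0."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical 5x5 grayscale image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hypothetical 2x2 filter that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

features = relu(conv2d_valid(image, kernel))  # 4x4 feature map
pooled = max_pool2d(features, size=2)         # 2x2 after pooling

print(features)  # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(pooled)    # [[2, 0], [2, 0]]
```

The filter fires only where the image changes from dark to bright, and pooling keeps that response while reducing the 4x4 feature map to 2x2. In a real DCNN the filter values are learned during training rather than set by hand, and each layer applies many filters in parallel.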