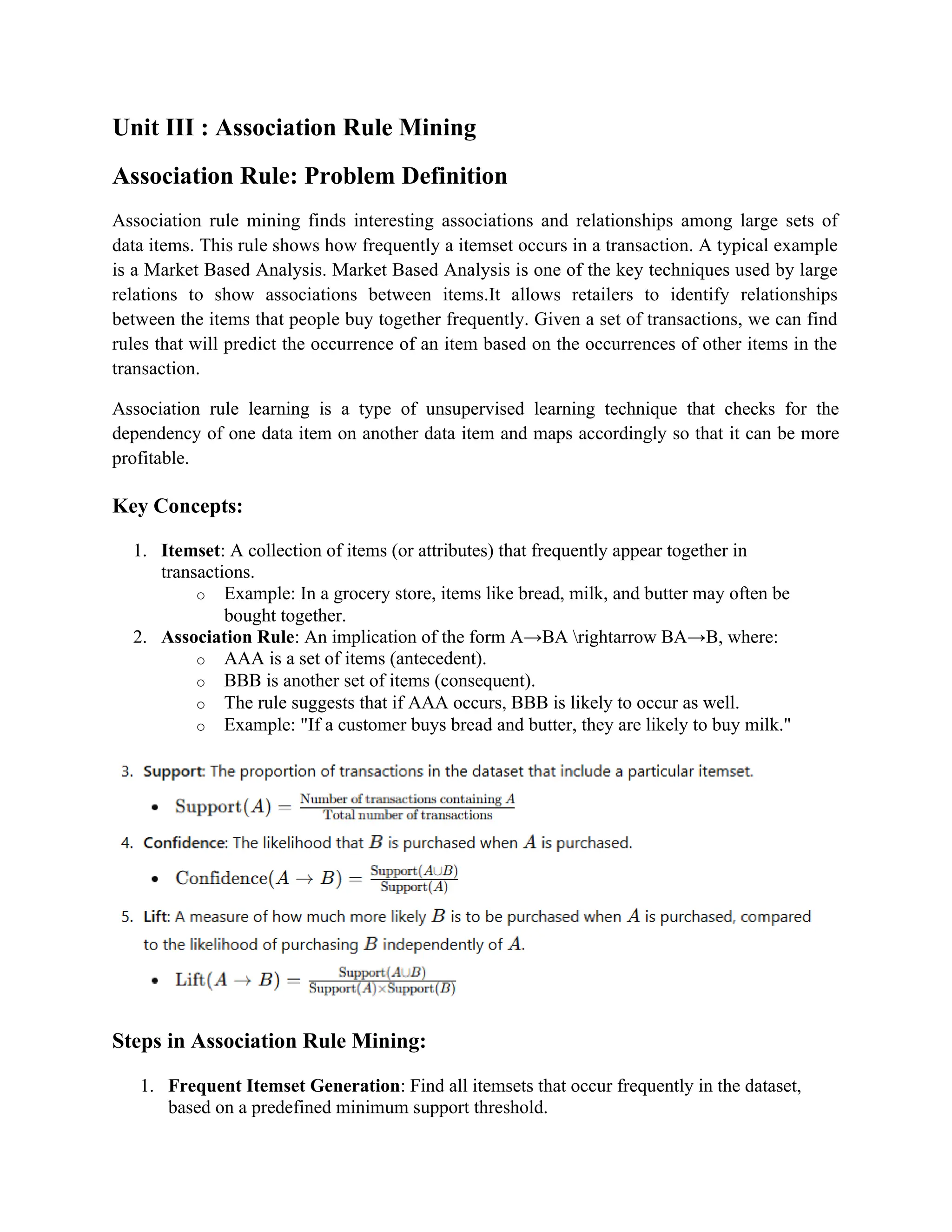

The document discusses association rule mining: finding interesting associations and relationships among items in large datasets, with market basket analysis as the main application. It explains key concepts such as itemsets and the three steps of the process: generating frequent itemsets, deriving rules from them, and evaluating those rules with metrics such as support and confidence. The Apriori algorithm is highlighted as the foundational method for discovering frequent itemsets. It relies on the principle that if an itemset is frequent, all of its subsets must also be frequent; equivalently, any superset of an infrequent itemset is itself infrequent, so such candidates can be discarded without counting them.
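The three steps can be illustrated with a minimal Python sketch. It is not taken from the document: the function names (`support`, `apriori`, `generate_rules`), the thresholds, and the toy baskets are all hypothetical, chosen only for illustration. It assumes support is the fraction of transactions containing an itemset, and that the confidence of a rule A → B is support(A ∪ B) / support(A).

```python
from itertools import combinations


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


def apriori(transactions, min_support):
    """Return {itemset: support} for every itemset meeting `min_support`."""
    transactions = [frozenset(t) for t in transactions]
    # Level 1 candidates: every distinct item as a one-element itemset.
    current = {frozenset([item]) for t in transactions for item in t}
    frequent = {}
    k = 1
    while current:
        # Count step: keep only the candidates that are frequent.
        level = {}
        for candidate in current:
            s = support(candidate, transactions)
            if s >= min_support:
                level[candidate] = s
        frequent.update(level)
        # Join step: merge frequent k-itemsets into (k+1)-item candidates.
        k += 1
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step: a candidate survives only if all of its
        # (k-1)-item subsets were frequent at the previous level.
        current = {
            c for c in candidates
            if all(frozenset(sub) in level for sub in combinations(c, k - 1))
        }
    return frequent


def generate_rules(frequent, min_confidence):
    """Return (antecedent, consequent, support, confidence) tuples."""
    rules = []
    for itemset, s in frequent.items():
        for size in range(1, len(itemset)):
            for antecedent in combinations(itemset, size):
                antecedent = frozenset(antecedent)
                # Every subset of a frequent itemset is also in `frequent`.
                confidence = s / frequent[antecedent]
                if confidence >= min_confidence:
                    rules.append((antecedent, itemset - antecedent, s, confidence))
    return rules


# Hypothetical toy data: five market baskets.
baskets = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

frequent_itemsets = apriori(baskets, min_support=0.5)
for antecedent, consequent, s, confidence in generate_rules(frequent_itemsets, 0.9):
    print(sorted(antecedent), "->", sorted(consequent), s, confidence)
```

On this toy data, four single items and four pairs meet the 0.5 support threshold. The candidate {bread, diapers, beer} is pruned without being counted because its subset {bread, beer} is infrequent. The only rule that reaches a confidence of 0.9 is beer → diapers. Recomputing support with a full scan for every candidate keeps the sketch short; a practical implementation would count all candidates of a level in a single pass over the data.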