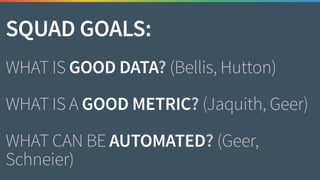

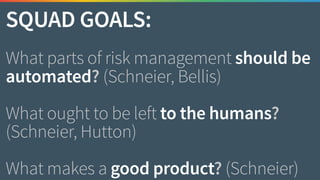

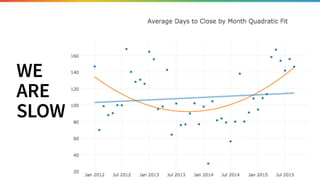

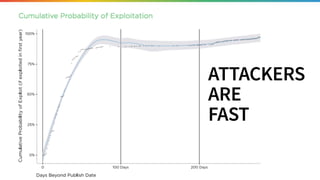

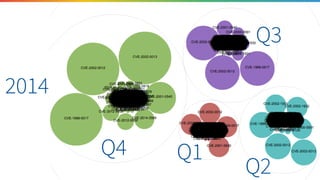

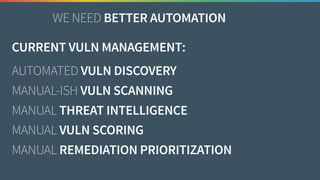

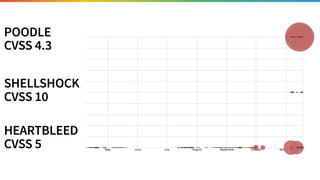

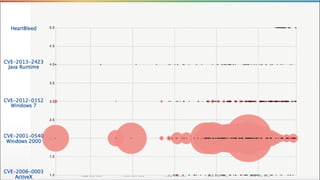

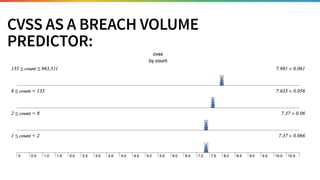

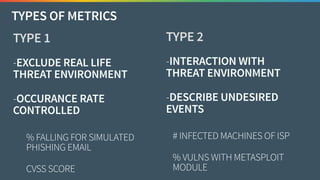

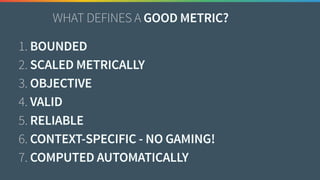

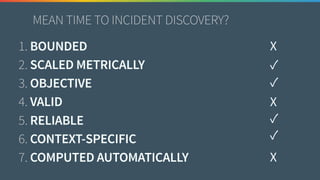

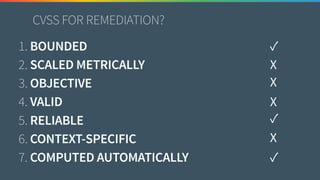

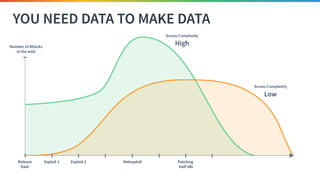

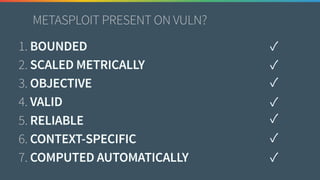

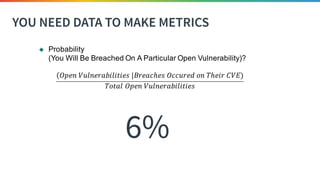

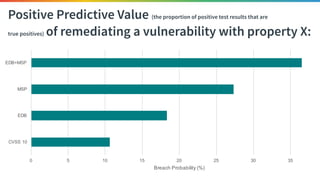

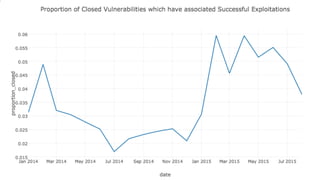

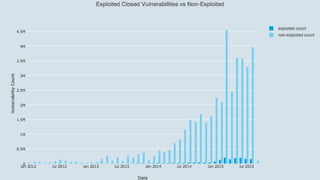

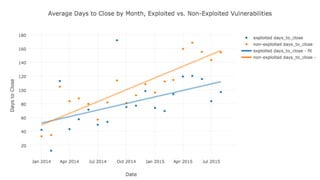

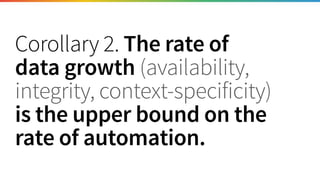

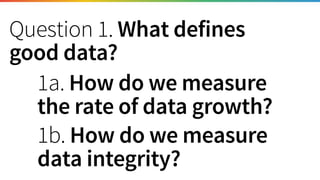

The document discusses the need for better data, metrics, and automation in security risk management, emphasizing that effective metrics are essential for both automation and decision-making. It outlines the characteristics of good metrics and good data, stressing context and reliability, and addresses the challenge posed by attackers who excel at automation. It also asks how good data and good metrics should be defined, and how those definitions can inform the automation of risk management processes.