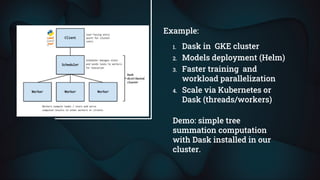

This document discusses Dask, an open-source parallel computing library for Python that scales existing libraries such as NumPy, pandas, and scikit-learn to larger datasets and to clusters. Dask can run workloads across multiple cores on a single machine or distribute them across a cluster, here one hosted on Google Cloud Platform. The document walks through an example that uses Dask on a Google Kubernetes Engine (GKE) cluster to parallelize machine learning workloads and speed up model training. It concludes that Dask is a practical open-source option that can be configured for machine learning projects, and that it lets workloads scale horizontally (more machines) or vertically (larger machines with more cores) to run faster.
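The sketch below illustrates the two scaling ideas mentioned above on a single machine. It is not the document's GKE example. The first part splits a NumPy-style array into chunks, and Dask processes each chunk as a separate task. The second part uses `dask.delayed` to run independent, training-like jobs in parallel. The function `fit_and_score` and its alpha values are hypothetical placeholders for real model fitting, and the array sizes are arbitrary.

```python
import dask
import dask.array as da

# 1) Scaling a NumPy-style computation: the array is split into chunks, and
#    each chunk becomes a separate task that can run on its own CPU core.
x = da.ones((10_000, 10_000), chunks=(1_000, 1_000))  # 100 chunks of 1,000 x 1,000
total = (x + 1).sum()  # lazy: builds a task graph, computes nothing yet


# 2) Parallelizing independent ML-style jobs with dask.delayed.
#    `fit_and_score` is a hypothetical stand-in for training and scoring
#    one model configuration.
@dask.delayed
def fit_and_score(alpha):
    # Placeholder "score"; replace with real model fitting and evaluation.
    return alpha * 2


scores = [fit_and_score(a) for a in (0.1, 1.0, 10.0)]

# dask.compute evaluates both task graphs together, using Dask's default
# local (threaded) scheduler across the machine's cores.
result_total, result_scores = dask.compute(total, scores)
print(result_total)   # 200000000.0
print(result_scores)  # [0.2, 2.0, 20.0]
```

To run the same code on a cluster such as one deployed on GKE, you would typically create a `dask.distributed.Client` pointed at the cluster's scheduler, for example `Client("tcp://<scheduler-address>:8786")`, where the address is a placeholder for your deployment. Once a client exists, subsequent `compute` calls run on the cluster's workers instead of local threads.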