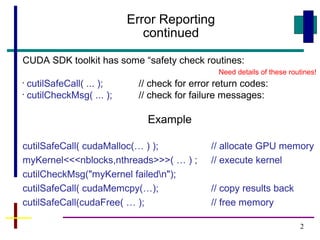

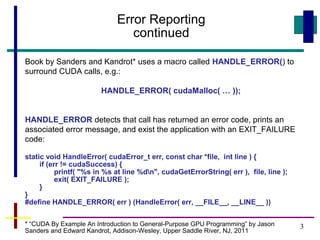

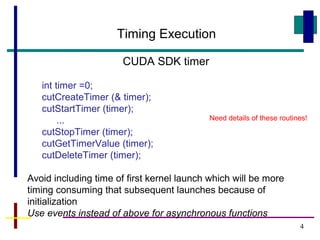

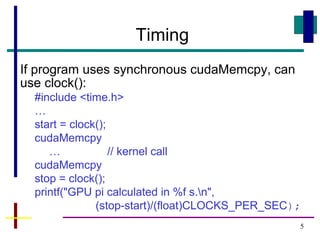

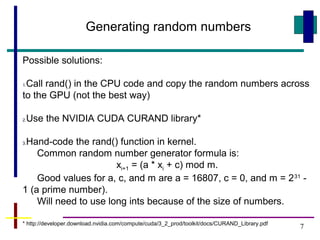

This document discusses error reporting, timing execution, and generating random numbers for CUDA programming. It describes the CUDA SDK safety check routines for error reporting, a macro called HANDLE_ERROR() that prints error messages and exits on failure, and using the clock() function or CUDA events to time execution. It also discusses generating pseudorandom numbers for Monte Carlo computations using the CURAND library, copying random numbers from CPU to GPU, or implementing a random number generator directly in kernels.