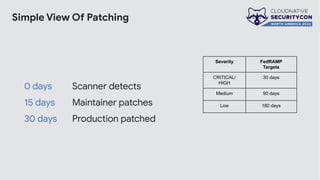

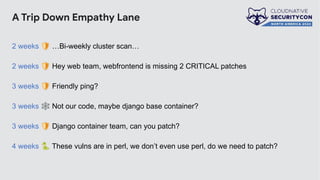

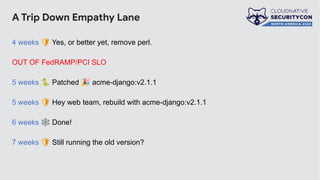

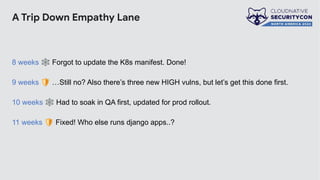

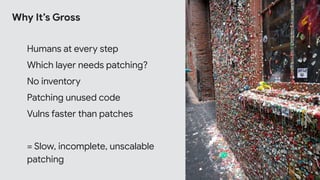

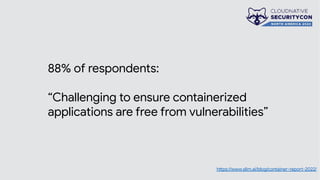

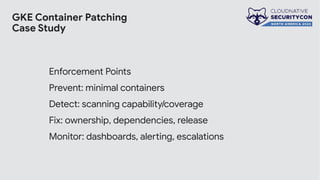

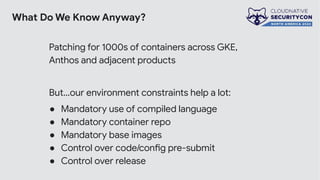

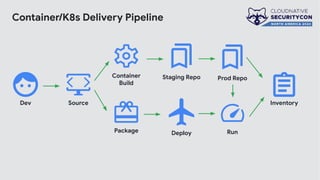

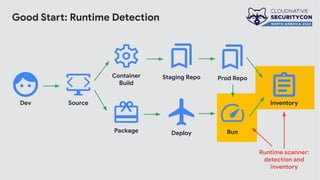

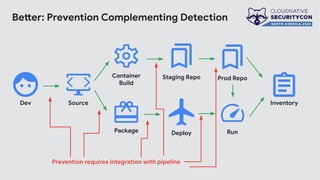

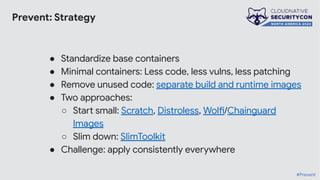

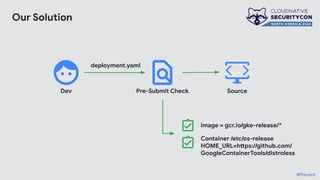

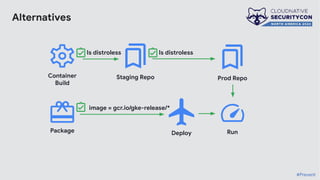

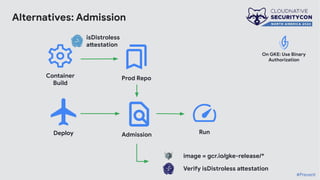

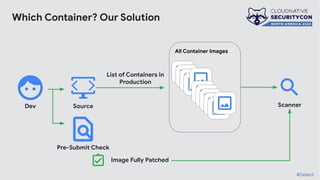

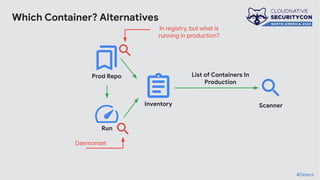

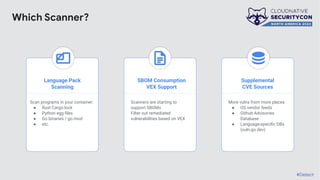

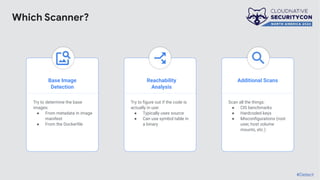

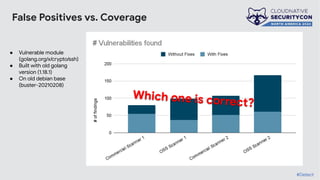

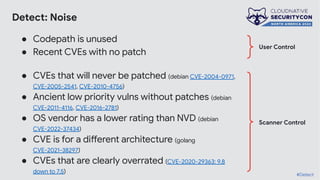

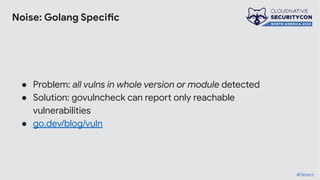

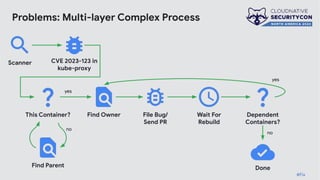

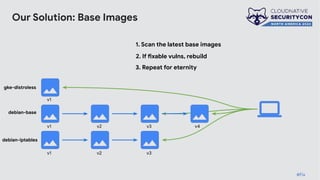

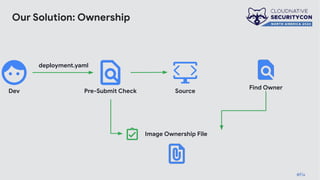

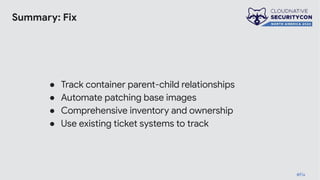

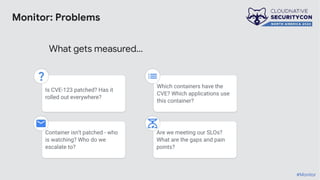

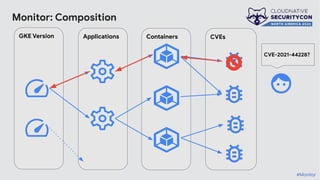

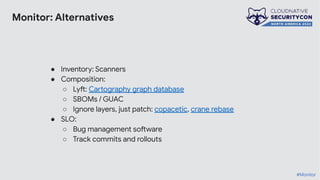

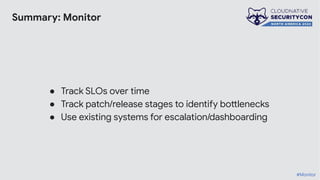

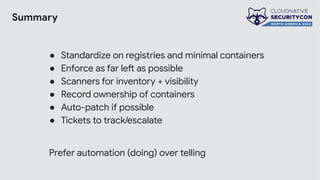

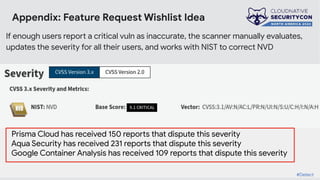

The document discusses the challenges and practices of container patching in Google Kubernetes Engine (GKE) environments, emphasizing the need to minimize vulnerabilities and standardize patching processes. It outlines strategies for preventing, detecting, and monitoring vulnerabilities, and presents case studies and recommendations for clear ownership and automation in patch management. The overall goal is to make container security practices for cloud-native applications more efficient and scalable.