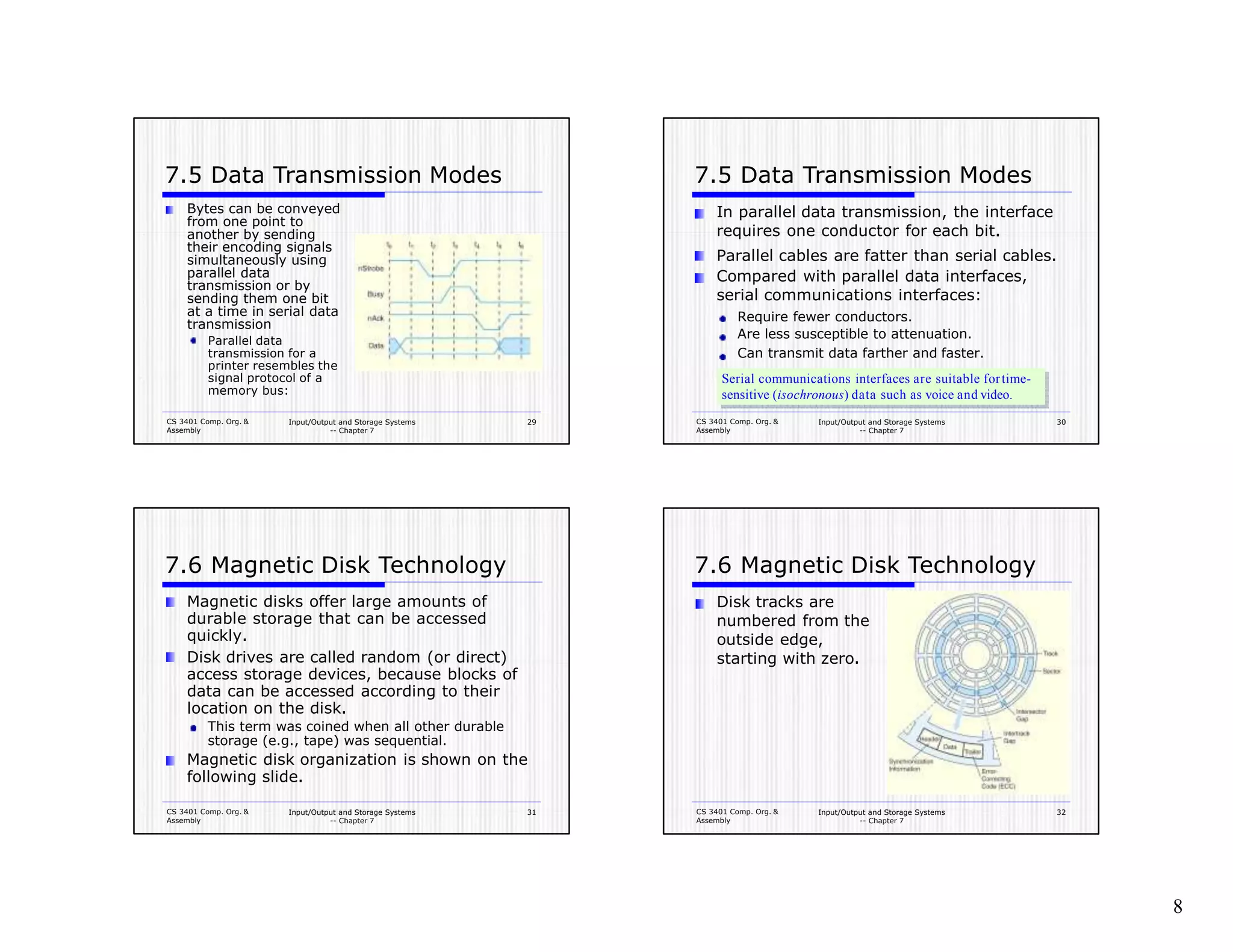

This document is a chapter on input/output and storage systems from a computer organization and assembly course. It discusses I/O architectures, including programmed I/O, interrupt-driven I/O, DMA, and channel I/O, and covers data transmission modes, magnetic disk technology, hard disks, floppy disks, and file allocation tables. Other key topics are storage media formats, RAID, and data compression algorithms.