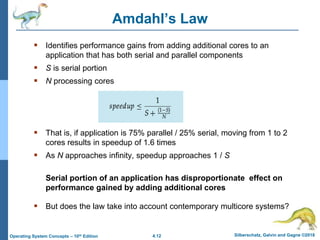

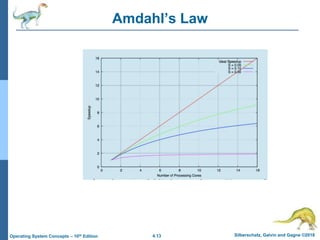

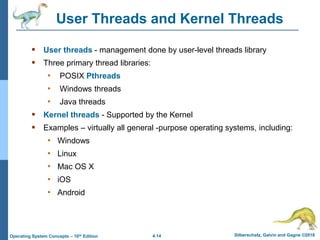

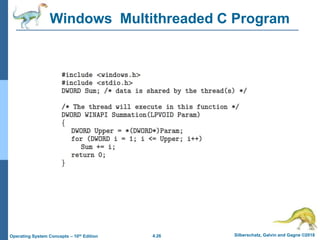

The document discusses threads and concurrency in operating systems. It covers multicore programming, multithreading models, thread libraries, implicit threading, and threading issues. It explains the many-to-one, one-to-one, and many-to-many multithreading models, and gives examples of threading in Windows and Linux and with the Pthreads and Java thread libraries. It also summarizes implicit threading techniques such as thread pools and fork-join parallelism, with code examples.
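
To make the two implicit threading techniques named above concrete, here is a minimal Java sketch. It is not taken from the document: the class name `ImplicitThreadingSketch`, the `THRESHOLD` cutoff, and the array-summing workload are assumptions chosen for illustration. It uses only the standard `java.util.concurrent` classes (`ExecutorService`, `ForkJoinPool`, `RecursiveTask`).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.Future;
import java.util.concurrent.RecursiveTask;

public class ImplicitThreadingSketch {
    // Hypothetical cutoff: ranges at or below this size are summed sequentially.
    static final int THRESHOLD = 1_000;

    // Fork-join: split the range in half until it is small enough to sum directly.
    static class SumTask extends RecursiveTask<Long> {
        private final int[] data;
        private final int lo; // inclusive
        private final int hi; // exclusive

        SumTask(int[] data, int lo, int hi) {
            this.data = data;
            this.lo = lo;
            this.hi = hi;
        }

        @Override
        protected Long compute() {
            if (hi - lo <= THRESHOLD) {
                long sum = 0;
                for (int i = lo; i < hi; i++) sum += data[i];
                return sum;
            }
            int mid = lo + (hi - lo) / 2;
            SumTask left = new SumTask(data, lo, mid);
            SumTask right = new SumTask(data, mid, hi);
            left.fork();                     // schedule the left half asynchronously
            long rightSum = right.compute(); // compute the right half in this thread
            return left.join() + rightSum;   // wait for the left half and combine
        }
    }

    static long forkJoinSum(int[] data) {
        return ForkJoinPool.commonPool().invoke(new SumTask(data, 0, data.length));
    }

    // Thread pool: a fixed set of worker threads runs one submitted task per chunk.
    static long threadPoolSum(int[] data, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            int chunk = (data.length + workers - 1) / workers; // ceiling division
            List<Future<Long>> parts = new ArrayList<>();
            for (int start = 0; start < data.length; start += chunk) {
                final int from = start;
                final int to = Math.min(start + chunk, data.length);
                parts.add(pool.submit(() -> {
                    long sum = 0;
                    for (int i = from; i < to; i++) sum += data[i];
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> part : parts) total += part.get(); // blocks until each chunk is done
            return total;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("chunk task failed", e);
        } finally {
            pool.shutdown(); // let the worker threads exit
        }
    }

    public static void main(String[] args) {
        int[] data = new int[10_000];
        for (int i = 0; i < data.length; i++) data[i] = i + 1; // 1..10000
        System.out.println(forkJoinSum(data));      // prints 50005000
        System.out.println(threadPoolSum(data, 4)); // prints 50005000
    }
}
```

In both versions the program describes tasks and leaves thread creation and scheduling to the runtime, which is the point of implicit threading. The fork-join version divides the work recursively, while the thread pool version hands a fixed number of chunks to a fixed number of reusable workers.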