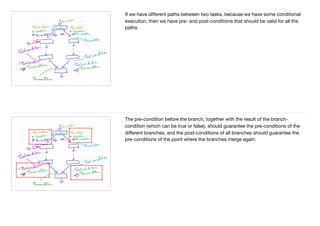

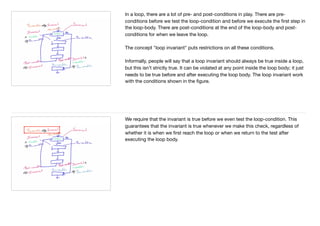

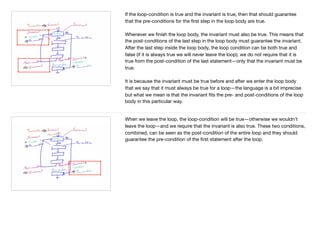

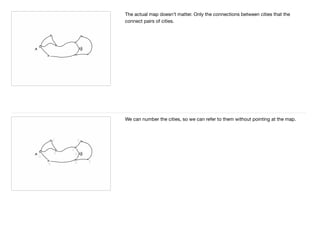

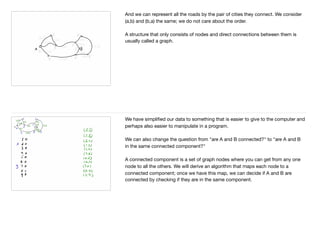

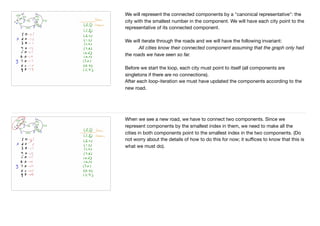

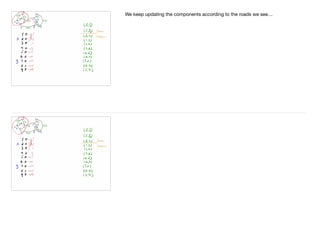

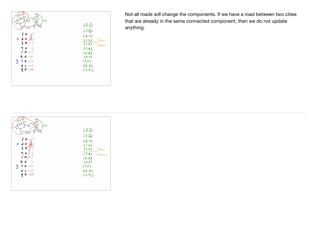

This document provides an introduction and definition of algorithms. It begins by discussing what algorithms are at a high level, noting they are sets of rules or steps to solve specific problems. It then provides a more formal definition, stating an algorithm is a finite sequence of unambiguous steps that solves a specific problem and always terminates. The document discusses the basic building blocks of algorithms, including sequential execution, branching, looping, and variables. It also covers designing algorithms by breaking large problems into smaller subproblems and using preconditions and postconditions. Finally, it provides an example algorithm to determine if two cities are connected on a map and discusses how to prove the correctness of algorithms.