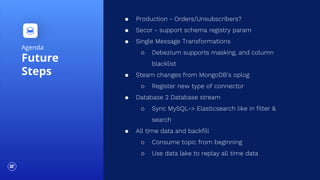

This document discusses change data capture (CDC) and its components. CDC is an approach that identifies, captures, and delivers changes made to enterprise data sources. It feeds these changes into a central data stream that can be combined with other data sources in real time. The document outlines Kafka Connect, Debezium, Schema Registry, and Apache Avro, which are the key parts of the CDC architecture. It also covers future steps, such as supporting additional databases and improving deployment, and open issues around performance and compatibility with certain databases.
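To make the idea of capturing and delivering changes concrete, here is a minimal sketch of a consumer that applies change events to an in-memory copy of a table. It assumes events shaped like Debezium's change event envelope, with an `op` code (`c` for create, `u` for update, `d` for delete, `r` for snapshot read) and `before`/`after` row images. The `apply_change` function, the `id` key, and the sample rows are hypothetical, and the Kafka transport and Avro deserialization are left out entirely.

```python
from typing import Any, Dict, Optional

Row = Dict[str, Any]


def apply_change(replica: Dict[Any, Row], event: Dict[str, Any], key: str = "id") -> None:
    """Apply one Debezium-style change event to an in-memory replica.

    `replica` maps primary-key values to the latest known row image.
    `key` is the (assumed) name of the primary-key column.
    """
    op = event["op"]
    before: Optional[Row] = event.get("before")
    after: Optional[Row] = event.get("after")

    if op in ("c", "r", "u"):
        # Create, snapshot read, and update all carry the new row image in `after`.
        replica[after[key]] = after
    elif op == "d":
        # Delete carries only the old row image in `before`.
        replica.pop(before[key], None)
    else:
        raise ValueError(f"unknown op: {op!r}")


# Hypothetical sample events for a table with columns `id` and `email`.
events = [
    {"op": "c", "before": None, "after": {"id": 1, "email": "a@example.com"}},
    {"op": "u", "before": {"id": 1, "email": "a@example.com"},
     "after": {"id": 1, "email": "b@example.com"}},
    {"op": "c", "before": None, "after": {"id": 2, "email": "c@example.com"}},
    {"op": "d", "before": {"id": 2, "email": "c@example.com"}, "after": None},
]

replica: Dict[Any, Row] = {}
for event in events:
    apply_change(replica, event)
```

After the four events are replayed in order, `replica` holds only row 1 with its updated email. Real events carry additional metadata (for example source and timestamp fields) that this sketch ignores.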