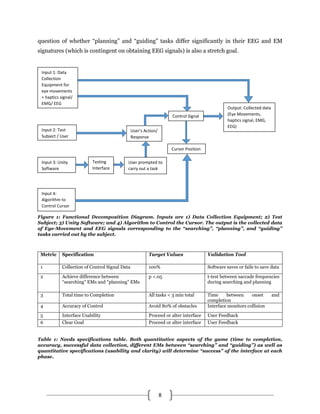

The document proposes developing an interface to study whether planning and guiding tasks in cursor movement have distinct signatures in eye movements and EEG signals. It will involve tasks of searching, planning a path, and guiding a cursor through a maze. The project has three phases: using haptics signals, then EMG signals, and finally EEG signals if subjects can control a BCI. The goal is to determine if signatures can distinguish planning from guiding and improve BCI control by recognizing user intent.

![3

Background and Significance

In healthy individuals, the sensorimotor system relays brain signals to control muscular movement; however, this function is compromised in the more than 11.5 million people suffering from neuromuscular disorders worldwide [1]. Brain-Computer Interfaces (BCIs) offer the potential to restore a level of sensorimotor control as they obtain control signals directly from the brain in order to guide movement. Individuals with amputations, surgeons performing remote robotic surgery, or pilots wanting to fly hands-free may also benefit from a BCI. Electroencephalography (EEG) signals offer the ability to infer a user’s intent and are popular for use in BCIs since EEG is well-characterized, low-cost, and provides high temporal resolution [2]. BCIs have been successfully used to map EEG signals to cursor movement on a screen, movement of robot arms, wheelchairs, and prosthetics, and to allow communication via a spelling device [3] [4]. However, there are significant limitations involved in EEG-based BCI control. Firstly, a BCI requires an explicitly defined mapping between each subset of neurons and the output movement of the device or cursor. Thus, the burden is placed on the subject to “learn” to control the device by activating these specific locations in the brain, a task which requires significant mental effort and which is not always successful [4]. Furthermore, BCIs can capture signals only from a small subset of the many neurons responsible, both directly and indirectly, for driving movement. Mimicking sensorimotor control with a BCI is also complicated by the highly nonlinear nature of brain-controlled arm movements; thus, these are frequently simplified to linear mappings for BCI control [5]. Low spatial resolution and artefacts caused by muscle and eye movement limit EEG accuracy [4]. EEG-based BCI control is thus limited in its ability to accurately mimic natural movement.

Tracking eye movements (EMs) presents an alternative strategy for controlling movement of a cursor or robotic arm. Saccadic eye movements – rapid changes in the location of focus – have been shown to be a rich source of information about an individual’s intended movements and constitute a potential control signal for cursor movement [6] [7]. For instance, individuals tend to look at an object before making a motion toward it, and duration of fixation on one object over another during a decision-making task is a good indicator of a person’s decision [8] [9]. However, using gaze to inform movement is problematic since it is not a natural sensorimotor connection: users are not naturally accustomed to moving or selecting items with their eyes. Eye tracking techniques are also highly susceptible to mistaking random eye motion (“searching” eye movements) for meaningful control signals (“intentional” eye movements).](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-3-320.jpg)

![4

The challenge of how to best control movement given a limited set of control signals obtained using BCI may be addressed through a combined approach that uses gaze and EEG signals as well as information about the signatures of different tasks. A BCI that can predict the user’s intended goal based on eye movement and EEG signals could, for instance, tailor its own control algorithm to better assist the user in carrying out that goal. A BCI might be able to infer whether the user is intending to select an object or simply note its location by recognizing specific task signatures in the user’s eye movements and EEG signals. The first step towards producing such a “smart” BCI is to determine whether such task-specific signatures exist in the combination of eye-movement and EEG signals. This project will focus on tasks involved in cursor movement, including planning a path before initiating movement and guiding the cursor along the pre-planned trajectory using BCI control. The goal of this project is to determine whether EEG and eye-movement data together provide a way to distinguish “planning” and “guiding” tasks, two tasks which utilize eye movements generally categorized as “intentional” eye movements.

Evaluation of Previous Approaches

Previous strategies for improving EEG-based control of BCI have focused on obtaining better spatial resolution of brain patterns using electrocorticography (ECoG), a technique which uses electrodes surgically implanted on or near the neocortex [3]. However, this method is not a viable long-term solution as it is highly invasive and has persistent problems with electrode contact and biocompatibility [3] [4].

Gaze-only BCI control offers a more intuitive and non-invasive approach to controlling movement, but has the drawback of being unable to distinguish between a casual glance at an object and an intention to move in that direction [3]. The possibility of using a blink to indicate intention of selection has been rejected because users are unable to prevent natural blinks, which are subsequently mistaken for an intention to select an object [10].

Hybrid EEG-gaze BCIs have recently been gaining interest [11]. For instance, previous studies have compared the efficiency of eye-tracking and EEG as control signals for cursor movement. EEG signals have been incorporated in hybrid BCIs to simulate the “selection” of an object, with gaze data controlling movement, to obtain better accuracy; however, this has been shown to be slower than traditional (solo) gaze-driven cursor movement [10]. Studies have also looked at “random” EMs (which occur, for instance, when a user is casually looking around a screen) compared to “intentional” EMs (when a user is directing a cursor using gaze information), as well as utilizing a combination of eye-tracking with EEG signals to obtain better control [3] [6]. These have certainly improved BCI control with regard to carrying out a single task more](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-4-320.jpg)

![5

accurately; however, little advancement has been made in exploring BCI control of multiple tasks in which the user’s eye movements are always “intentional” but the user is intending to carry out distinct tasks. For instance, both “planning” EMs (which occur prior to initiation of cursor movement) and “guiding” EMs (where the user is actively guiding the cursor using gaze information and imagined hand-movements) may be considered to be “intentional” EMs, but the distinction between these tasks is unclear.

This project aims to answer the question of whether eye movement and EEG signals can be combined in order to reliably distinguish between the “planning” and “guiding” stages of controlled cursor movement. If so, these signatures may be used to provide better control of a BCI by a user carrying out multiple tasks. In this project, the user will plan a route and guide an object through a maze designed in Unity, using a combination of EEG and gaze information, while data about the number and magnitude of saccades, dwell time (time spent focused on one area of the screen), and EEG amplitude and frequency will be collected. Analysis of these parameters will be carried out in order to determine whether the “planning” and “guiding” stages have different characteristic signatures.
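
As a rough illustration of how the saccade count, saccade magnitude, and dwell time mentioned above might be extracted from raw gaze samples, the sketch below applies a simple velocity threshold to consecutive samples. The proposal does not name a detection algorithm, so the velocity-threshold approach, the function names `classify_gaze` and `summarize`, the `(time, x, y)` sample format, and the threshold value are all assumptions made for illustration.

```python
import math


def classify_gaze(samples, velocity_threshold=1000.0):
    """Label each step between consecutive samples as 'saccade' or 'fixation'.

    samples: list of (t_seconds, x_pixels, y_pixels), with strictly increasing t.
    velocity_threshold: speed in pixels/second above which a step counts as a
    saccade (an illustrative value that would need tuning for a real tracker).
    """
    labels = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        speed = math.hypot(x1 - x0, y1 - y0) / (t1 - t0)
        labels.append("saccade" if speed > velocity_threshold else "fixation")
    return labels


def summarize(samples, velocity_threshold=1000.0):
    """Return saccade count, saccade amplitudes (pixels), and dwell times (seconds)."""
    labels = classify_gaze(samples, velocity_threshold)
    amplitudes = []
    dwell_times = []
    i = 0
    while i < len(labels):
        # Find the end of the current run of identically labeled steps.
        j = i
        while j < len(labels) and labels[j] == labels[i]:
            j += 1
        # Steps i..j-1 connect samples i..j.
        t_start, x_start, y_start = samples[i]
        t_end, x_end, y_end = samples[j]
        if labels[i] == "saccade":
            amplitudes.append(math.hypot(x_end - x_start, y_end - y_start))
        else:
            dwell_times.append(t_end - t_start)
        i = j
    return {
        "saccade_count": len(amplitudes),
        "saccade_amplitudes": amplitudes,
        "dwell_times": dwell_times,
    }
```

Summaries of this kind could be computed separately for the “planning” and “guiding” portions of each trial and then compared; a real pipeline would likely also need smoothing and blink handling, which this sketch omits.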

Consequences of Success

The ultimate goal in enhancing BCI control is to facilitate the interaction between user and machine. Ideally, a BCI should be minimally frustrating, non-invasive, and convenient, with additional criteria including how quickly a task is carried out, accuracy, and elimination of unintended movements. While BCI control has seen huge improvements following the advent of hybrid systems combining EEG and eye-movement information, significant gaps remain in the knowledge of the different stages of movement-related tasks [10] [11]. “Planning” versus “guiding” eye movements have not been studied in concert with EEG for the purpose of enhancing BCI control, and this represents a potential improvement in current hybrid eye-tracking BCIs. Successful incorporation of “planning” and “guiding” phase signatures into BCI control will lead to easier and more intuitive control of motion by allowing the BCI to dynamically respond to task signatures and tailor its control algorithm to improve speed and accuracy of movement tasks. Improved BCIs have the potential to greatly benefit individuals with amputations or who suffer from neuromuscular diseases. BCI technology is also relevant for healthy individuals, for instance to allow for control of a video game by thought, piloting of an airplane hands-free, or performance of a remote surgery using a robotic arm.](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-5-320.jpg)

![6

Constraints

Economic

Limitations in the practicality and applicability of this research include economic constraints, since brain-computer interfaces are expensive and require extensive training to learn to use. One goal in improving BCI control is to facilitate motor control and communication as well as develop better prosthetic arms and other devices; however, this technology may be prohibitively expensive for many individuals. Thus, improving BCI technology may only benefit the wealthiest individuals, who are able to afford these high prices.

Ethical

Several ethical issues come to mind when considering the implications of brain-computer interface research. Firstly, learning to control BCIs with EEG is quite difficult and can be impossible to master depending on a patient’s cognitive challenges [12]. It is plausible that patients and/or their caregivers may have unreasonably high expectations of how much control a BCI will provide, resulting in psychological harm. Additionally, a natural application of BCI is in allowing locked-in patients – who are fully aware though paralyzed – to communicate. A potential issue in this situation would arise around communicating the patient’s wish to continue or discontinue life-support, since there is no clear answer as to what level of communication a BCI could offer concerning life or death decisions or whether the patient is mentally capable of making that decision. In these two situations, using BCI should be approached with extreme caution and all parties clearly informed about the limitations of BCI in restoring mobility or communication.

Social

The issues of “mind-reading” and the invasiveness of certain EEG techniques (such as ECoG) contribute to social issues surrounding brain-computer interfaces. Resistance to BCI is often due to misperceptions about technology that has the potential for combining human and machine, so education about BCI use is important, especially for subjects involved in BCI research. Precautions will be taken to preclude any possible spread of misinformation which could contribute to public resistance to BCI research.

Legal

Because BCIs obtain signals directly from the brain, there are distinct possibilities of legal and privacy issues arising from BCI research and popular use. Brain signals contain intimate information about an individual’s intentions, emotions, thoughts, and interests, and these could](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-6-320.jpg)

![7

potentially be mishandled or deliberately misused. For instance, BCIs can be used maliciously to infer an individual’s familiarity with certain faces, religious beliefs, and sexual preference, as well as information including name and PIN, by presenting a list of random names and numbers [13]. The issue of limiting such “brain spyware” will likely be a significant problem in the near future and should be addressed through open discussion; being aware of these privacy issues is a crucial first step.

Specific Phases

Overview of Phases

This project aims to develop an interface for studying EEG and eye-tracking signals to investigate whether the “planning” and “guiding” stages of a movement-control task have different characteristic EEG and eye-movement signatures. Functionally, the interface will present the subject with a simple game that incorporates “searching”, “planning”, and “guiding” stages to move a cursor on a screen, while collecting eye-movement and EEG signals (see Figure 1 for a Functional Decomposition Diagram). Incorporating “searching” provides a method to verify eye-movement data since “searching” EMs are well-characterized [6]. In order to collect this data, I will develop an interface in Unity and iterate on the design in each phase by testing it against the specifications found in Table 1. At minimum, the interface must achieve an 80% success rate on all three tasks within three minutes as well as statistically significant differences in eye movements between the “searching” and “planning” phases. Qualitative aspects of the interface will also be tested, including clarity of the goal (to eliminate subject confusion) and whether the subject has enough time to complete each task without becoming distracted. This will validate that the interface is collecting data primarily focused on the signatures in “searching”, “planning”, and “guiding” tasks. I will start with a simple and easily-obtained control signal from the Phantom Omni haptics device (which provides touch feedback of virtual forces) in order to initially develop and test the interface.
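
The two minimum criteria above (an 80% success rate and a statistically significant difference between “searching” and “planning” eye movements) could be checked along the following lines. This is only a sketch: the proposal does not specify which statistical test will be used, so the exact permutation test on the difference of means, the function names, and the idea of recording each trial as a True/False outcome are assumptions chosen for illustration.

```python
from itertools import combinations


def meets_success_criterion(outcomes, required_rate=0.80):
    """outcomes: list of booleans, True if a trial was completed within the time limit."""
    return sum(outcomes) / len(outcomes) >= required_rate


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test on the absolute difference of group means.

    Enumerates every way of splitting the pooled values into groups of the
    original sizes, so it is only practical for small samples.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    n_b = len(pooled) - n_a
    total = sum(pooled)

    def abs_mean_diff(sum_a):
        return abs(sum_a / n_a - (total - sum_a) / n_b)

    observed = abs_mean_diff(sum(group_a))
    extreme = 0
    count = 0
    for indices in combinations(range(len(pooled)), n_a):
        count += 1
        # Small tolerance so relabelings that tie with the observed split count as extreme.
        if abs_mean_diff(sum(pooled[i] for i in indices)) >= observed - 1e-12:
            extreme += 1
    return extreme / count
```

For example, `group_a` and `group_b` might hold per-trial mean dwell times from the “searching” and “planning” stages; a small p-value would suggest the two stages differ on that measure.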

This project will consist of three phases, each characterized by increased complexity in the type of control signal used. First, and as a proof-of-concept of the testing interface, I will implement signals from the Omni haptics device along with eye movement signals. Next, I will replace the haptics signals with EMG (electromyography) signals, which provide intermediate complexity as a control signal. Finally, and as a stretch goal, I will replace EMG signals with EEG signals. The last phase is a stretch goal since it is not guaranteed that subjects will successfully learn to control the BCI, and so obtaining EEG signals is not guaranteed. Thus, answering the](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-7-320.jpg)

![13

Furthermore, I hope to show that the characteristic eye-movement and EEG signals differ significantly from stage to stage, and that these “signatures” can be implemented in a smart BCI that can respond to the intended task of its user. Non-invasive scalp EEG signals will be obtained in phase III along with eye-movement data and used to control the cursor’s movement. Electrodes will be placed according to the international 10-20 system [14]. First, the interface will be improved through design iterations until three test subjects are able to average an 80% success rate. Next, eye-movement and EEG signals will be analyzed for consistent differences between the “searching”, “planning”, and “guiding” stages of the Unity game. The EEG signals will be analyzed for characteristic fluctuations in power associated with different frequency bands: for instance, the Mu rhythm at 9-13 Hz is typically desynchronized during planning and execution of hand movement while it is synchronized when real or imagined hand-movement is suppressed [15]. Eye-movement data will be analyzed for characteristic dwell times (time spent focusing on a single location) and saccade frequency.
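
To make the band-power analysis above concrete, the sketch below estimates power in the 9-13 Hz Mu band with a plain discrete Fourier transform and reports the relative change between a baseline window and a task window, where a negative value indicates desynchronization. It is a minimal illustration under stated assumptions: the function names, the single-channel list-of-samples input, and the use of an unwindowed DFT (rather than whatever spectral estimator the final MATLAB analysis would use) are not taken from the proposal.

```python
import cmath
import math


def band_power(signal, sample_rate, low_hz, high_hz):
    """Sum of squared, length-normalized DFT magnitudes for bins within [low_hz, high_hz]."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the DC offset
    power = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * sample_rate / n
        if low_hz <= freq <= high_hz:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * i / n)
                for i, x in enumerate(centered)
            )
            power += abs(coeff) ** 2 / n ** 2
    return power


def mu_desynchronization(baseline, task, sample_rate, band=(9.0, 13.0)):
    """Relative change in Mu-band power from baseline to task.

    Negative values mean band power dropped (desynchronization);
    positive values mean it rose (synchronization).
    """
    p_base = band_power(baseline, sample_rate, band[0], band[1])
    p_task = band_power(task, sample_rate, band[0], band[1])
    return (p_task - p_base) / p_base
```

With real recordings, the baseline window might be taken from a rest period and the task window from the “planning” or “guiding” stage, computed per electrode over the sensorimotor area.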

Deliverables:

In this phase I aim to integrate EEG signals into the control system of the Unity game and use eye movements and EEG to verify the interface according to Figure 2. Furthermore, I will analyze eye-movements and EEG signals to determine whether there are significant differences that distinguish the “planning” and “guiding” tasks.

Anticipated Outcome:

This phase is likely to be the most challenging since it relies on the ability of the subjects to learn to control a BCI in order to use EEG as a control signal. If subjects are unable to learn to control the BCI sufficiently well, my fall-back option is to use EMG as the control signal and analyze EMG + eye-movement signals to determine whether characteristic events occur in the three stages. I hypothesize that there are characteristic differences between the “planning” and “guiding” tasks that will be revealed in the user’s eye movements and EEG signals.

Engineering Design Standards:

The design portion of this project involves designing a testing interface in Unity in order to obtain eye-movement data and haptics/EMG/EEG data (as appropriate, for each phase). Since the testing interface is computerized, the potential for safety issues associated with this design is very low. This project contains very few potential safety risks, as EMG is completely non-invasive and the type of EEG we will be using is also non-invasive. A standard electrode placement will be used (see Figure 4) and all subjects’ voluntary consent will be obtained before collecting their EMG and](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-13-320.jpg)

![14

EEG signals while they test the design interface in Unity. EEG recordings must follow standards set out by the International Federation of Clinical Neurophysiology [14]. This involves labeling basic patient information including name, date of birth, date of the test, laboratory numbers, current medication, and additional comments. Further, any computer analysis of EEG recordings should not stand alone but should be accompanied by human visual analysis. While we are not engaging in clinical EEG, we also plan to follow these labeling practices.

Figure 4: Standard EEG electrode placement [14]

Key Personnel:

Margaret Thompson and Andrew Haddock will oversee my project and advise me on at least a weekly basis. Margaret Thompson is a graduate student working with BCIs and has knowledge about the process involved in learning to use a BCI. Andrew Haddock is a graduate student working on modeling and control of Dynamic Neural Systems and will oversee my use of the eye-tracker. I have been meeting with Margaret and Andrew on a weekly basis. Professor Howard Chizeck will meet with me for regular progress reports, no less than monthly, and will assign me my grade for the 402 project.

Facilities, Equipment, and Resources

The BioRobotics Lab is located in the Electrical Engineering building on the UW campus, and has the resources necessary to conduct BCI and eye-tracking research. The BioRobotics Lab will provide all equipment including the MyGaze Eye-Tracker and software, EMG equipment, EEG equipment, Omni haptics device, and computer for designing the Unity interface and signal processing. I will use both MATLAB and Unity, which I have downloaded on my own computer, for work both inside and outside of lab.](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-14-320.jpg)

![15

Appendix:

Timeline: My anticipated timeline is as follows. I will begin work during June 2016 and plan to complete each phase in approximately 3 months, leaving me 2 months as a buffer in the event of unforeseen circumstances, which will also give me time to write my report.

Additional Figures

Figure 5: Three possible testing interfaces developed in Unity to induce “searching”, “planning”, and “guiding” tasks. A) A center-out reaching control scheme [16]. B) A maze containing various obstacles. C) A random word search.](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-15-320.jpg)

![17

References

[1] J. Deenen et al., "The Epidemiology of Neuromuscular Disorders: A Comprehensive Overview of the Literature," Journal of Neuromuscular Diseases, vol. 2, pp. 73-85, 2015.

[2] T. K. C. Zander, "Toward Passive Brain-Computer Interfaces: Applying Brain-Computer Interface Technology to Human-Machine Systems in General," J. Neural Eng., vol. 8, no. 2, 2011.

[3] B. L. A. S. B. Huang, "Integrating EEG Information Improves Performance of Gaze Based Cursor Control," in 6th International IEEE/EMBS Conference on Neural Engineering (NER), San Diego, 2013.

[4] M. Gerven et al., "The Brain-Computer Interface Cycle," J. Neural Eng., vol. 6, no. 4, pp. 1-10, 2009.

[5] M. C. S. B. A. Y. B. Golub, "Brain-Computer Interfaces for Dissecting Cognitive Processes Underlying Sensorimotor Control," Current Opinion in Neurobiology, vol. 37, pp. 53-58, 2016.

[6] S. Lee et al., "Effects of Search Intent on Eye-Movement Patterns in a Change Detection Task," Journal of Eye Movement Research, vol. 8, no. 2, pp. 5,1-10, 2015.

[7] E. A. Corbett et al., "Real-Time Evaluation of a Noninvasive Neuroprosthetic Interface for Control of Reach," IEEE Transactions on Neural Systems and Rehabilitation Engineering, vol. 21, no. 4, pp. 674-682, 2013.

[8] G. Bird et al., "The Role of Eye Movements in Decision Making and the Prospect of Exposure Effects," Vision Research, vol. 60, pp. 16-21, 2012.

[9] S. B. H. Neggers, "Coordinated Control of Eye and Hand Movements in Dynamic Reaching," Human Movement Science, vol. 21, no. 3, pp. 349-376, 2002.

[10] Z. T. Vilimek R., "BCI: combining eye-gaze input with brain–computer interaction," in Conference on Universal Access in Human–Computer Interaction, San Diego, 2009.

[11] G. Pfurtscheller et al., "The Hybrid BCI," Front Neurosci., vol. 4, no. 42, pp. 593-602, 2010.

[12] W. Glannon, "Ethical Issues with Brain-Computer Interfaces," Front Syst Neurosci, vol. 8, no. 136, 2014.

[13] T. Bonaci and H. Chizeck, "Privacy by Design in Brain-Computer Interfaces," UW Dept. Elec. Eng., Seattle, 2013.

[14] M. Nuwer et al., "IFCN Guidelines for Topographic and Frequency Analysis of EEGs and EPs. Report of an IFCN Committee," Electroencephalogr Clin Neurophysiol Suppl, Los Angeles, 1994.

[15] G. Pfurtscheller et al., "Mu Rhythm (de)Synchronization and EEG Single-Trial Classification of Different Motor Imagery Tasks," NeuroImage, vol. 31, pp. 153-159, 2006.](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-17-320.jpg)

![18

[16] A. Hewitt et al., "Representation of Limb Kinematics in Purkinje Cells Simple Spike Discharge is Conserved Across Multiple Tasks," Journal of Neurophysiology, vol. 106, no. 5, pp. 2232-2247, 2011.](https://image.slidesharecdn.com/42676b97-d5d0-48d0-94b5-f64f194b739d-160825220239/85/Capstone-Proposal-18-320.jpg)