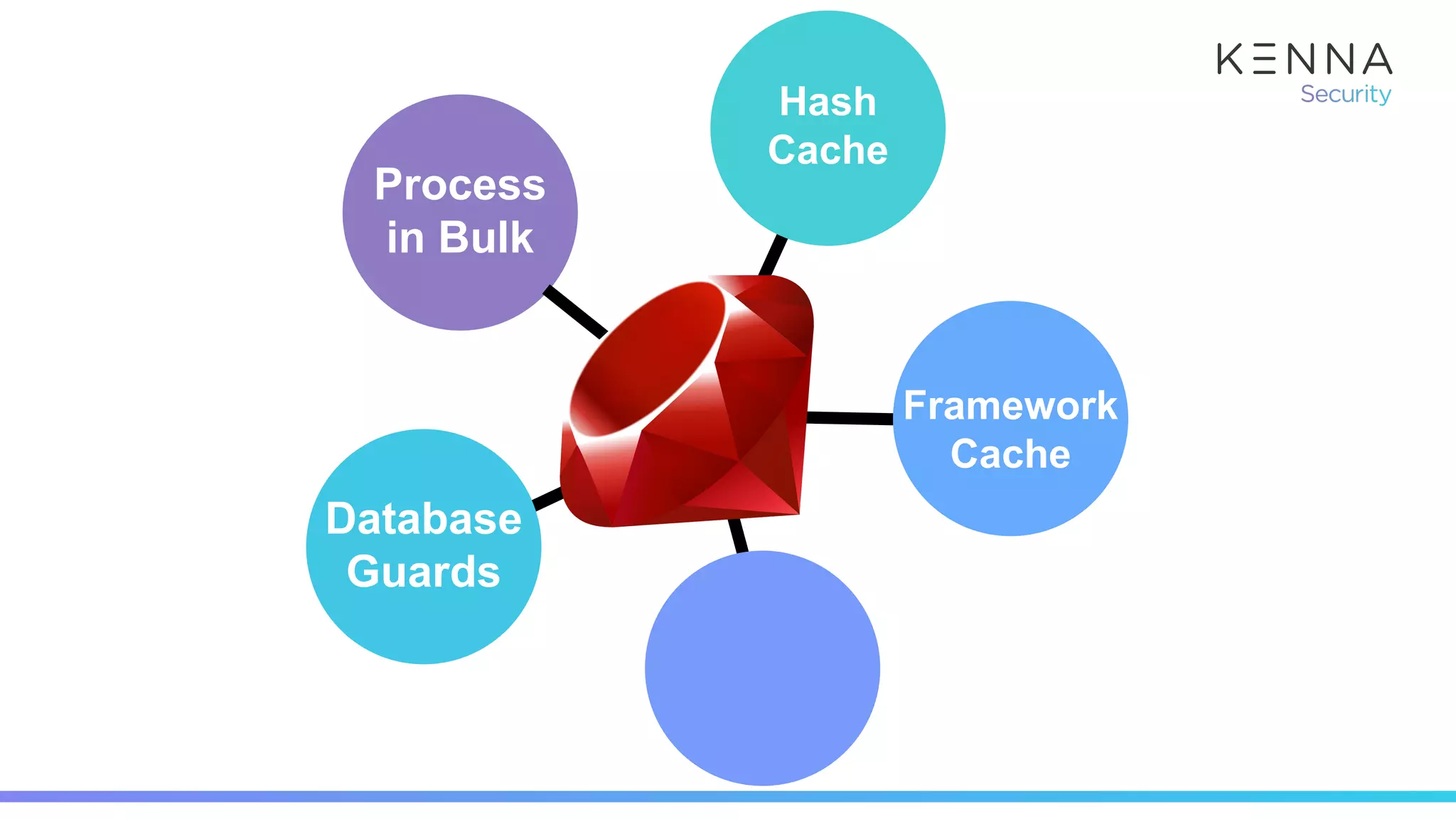

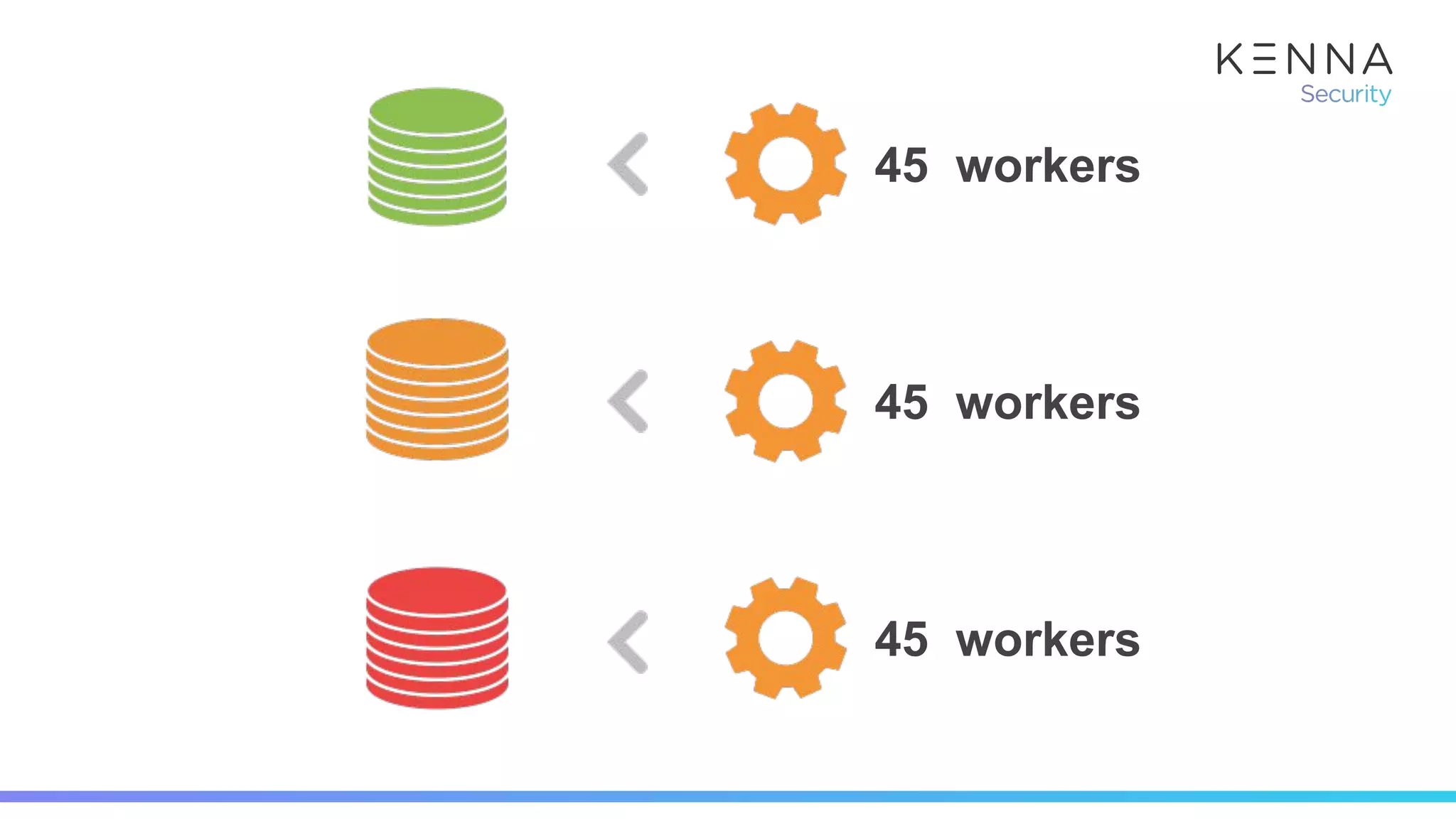

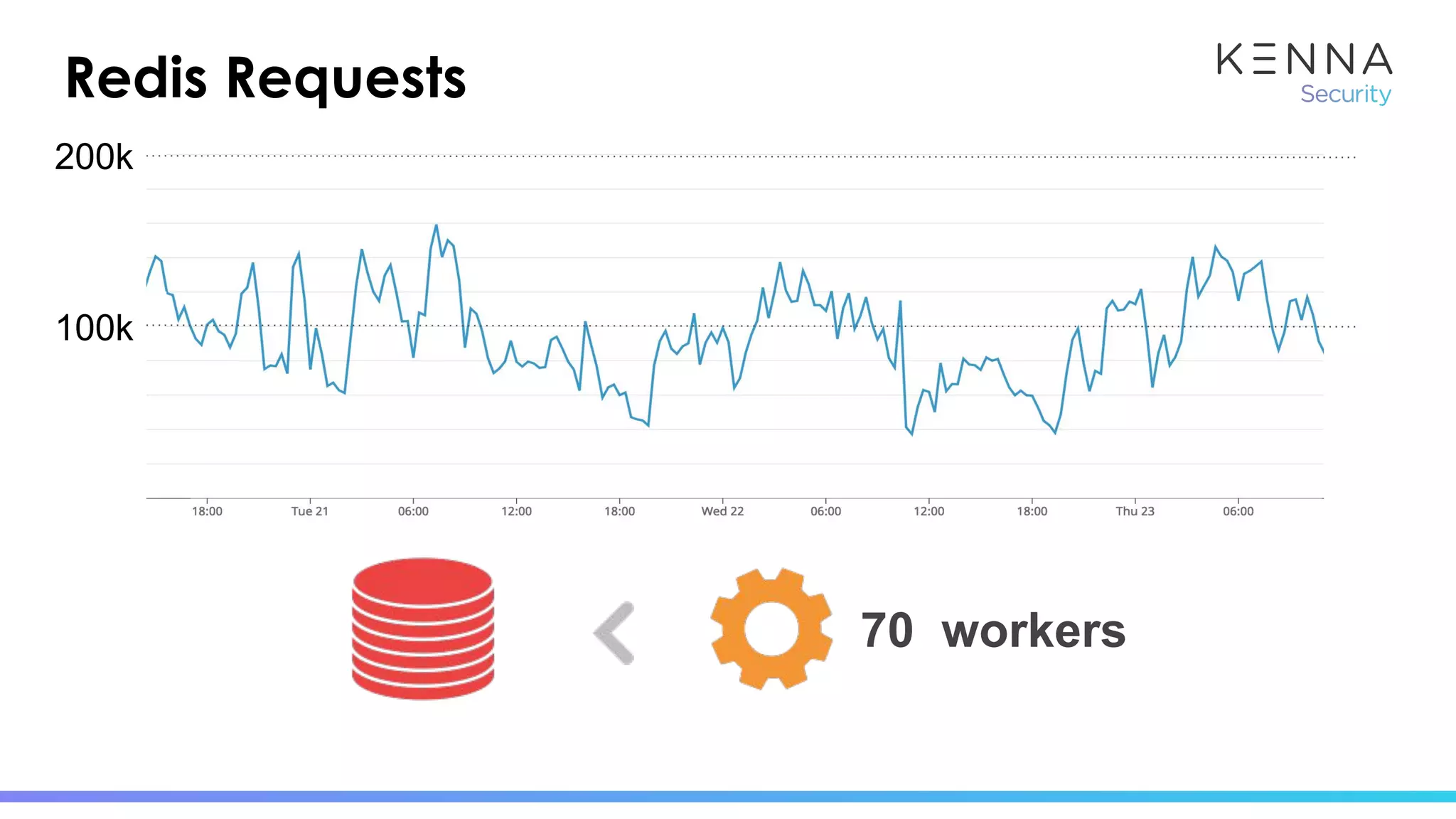

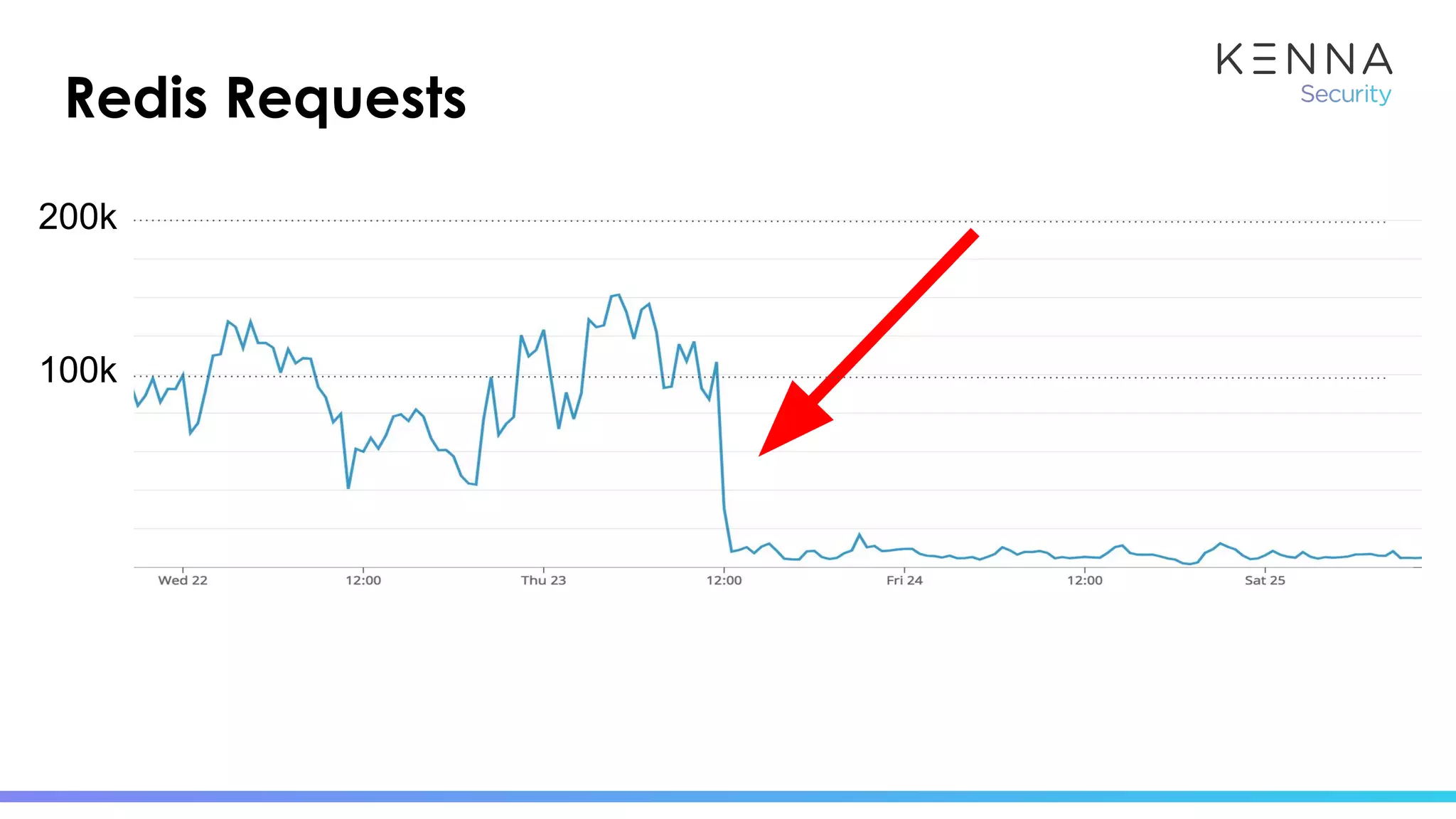

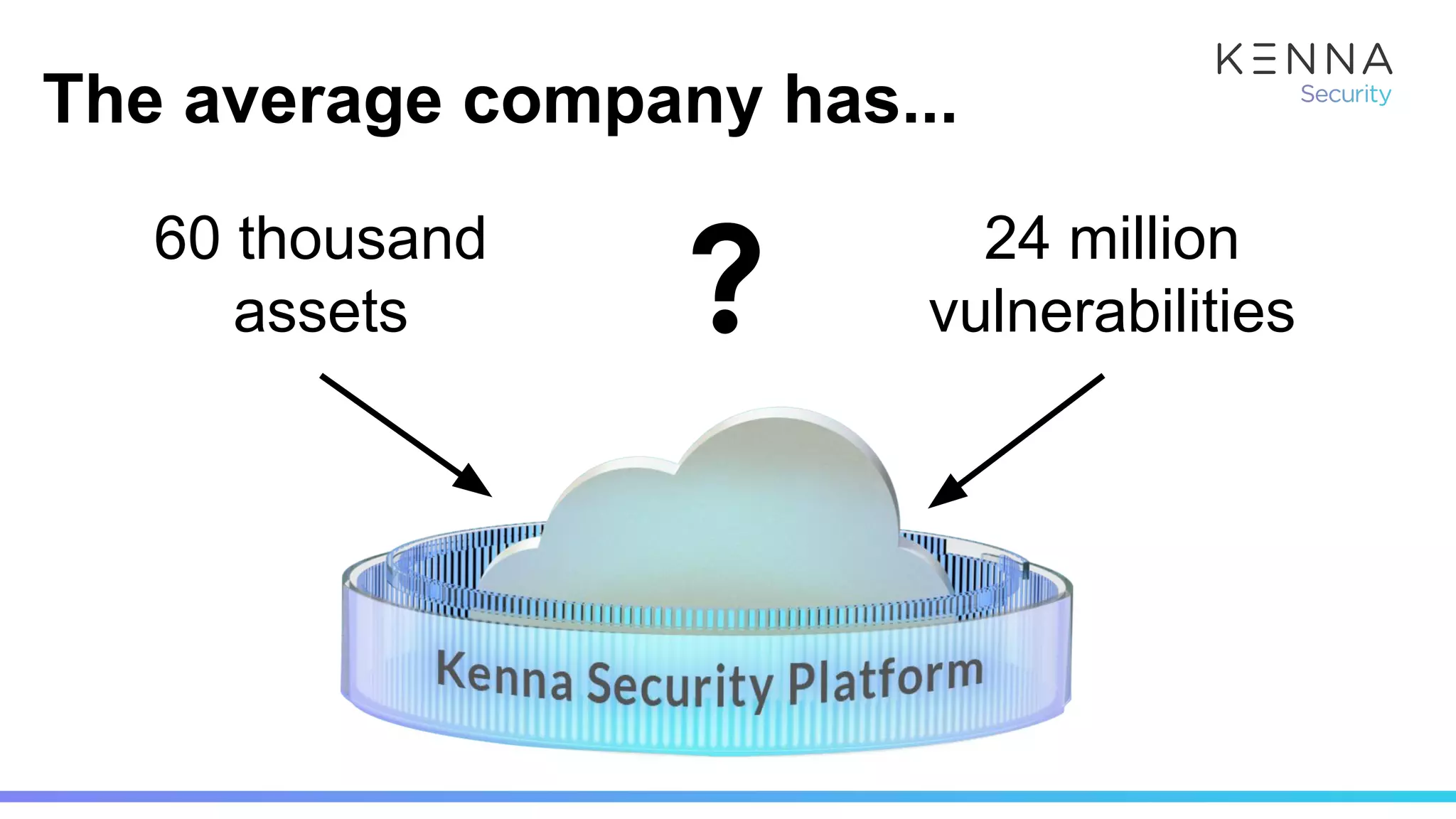

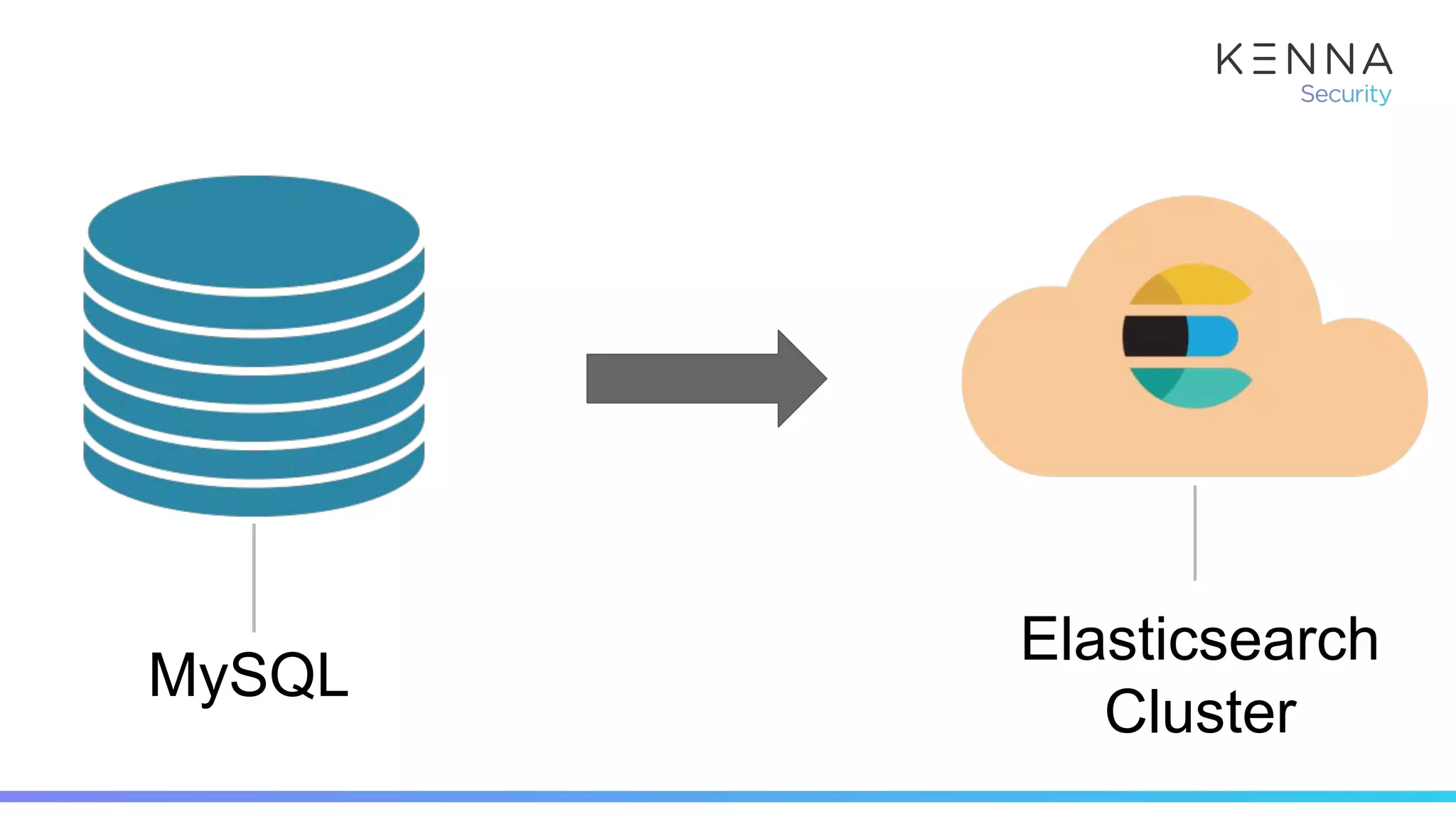

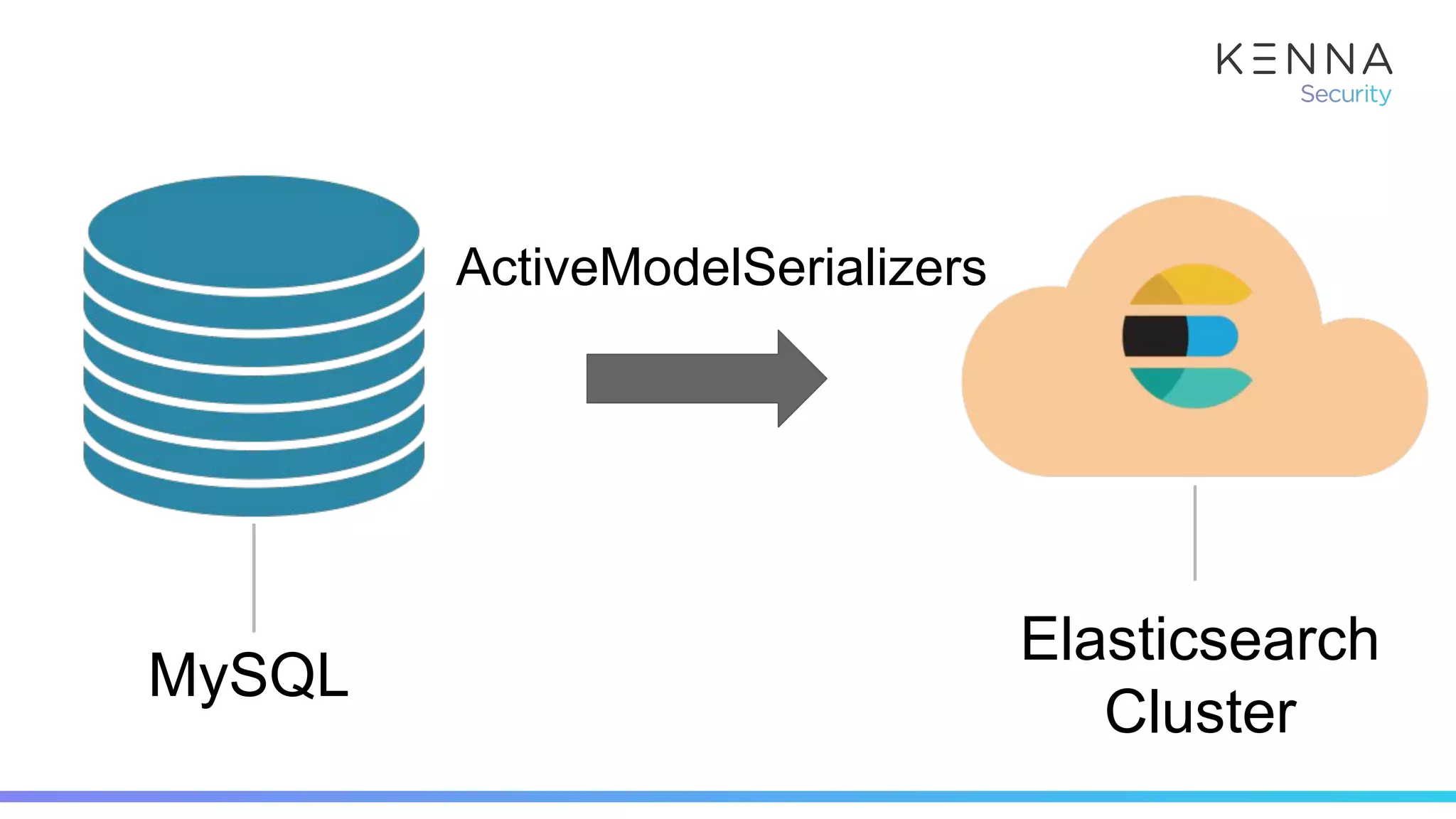

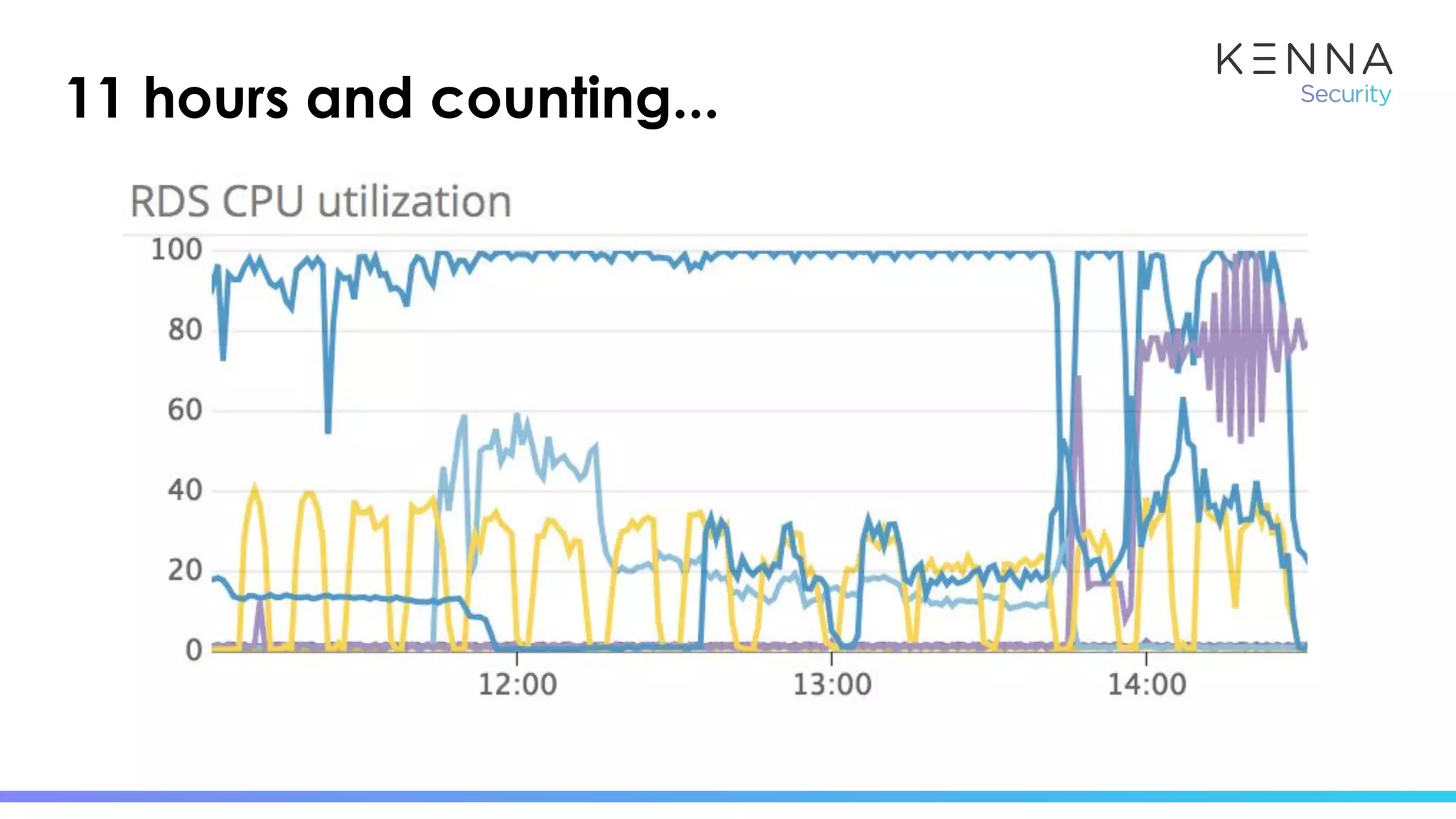

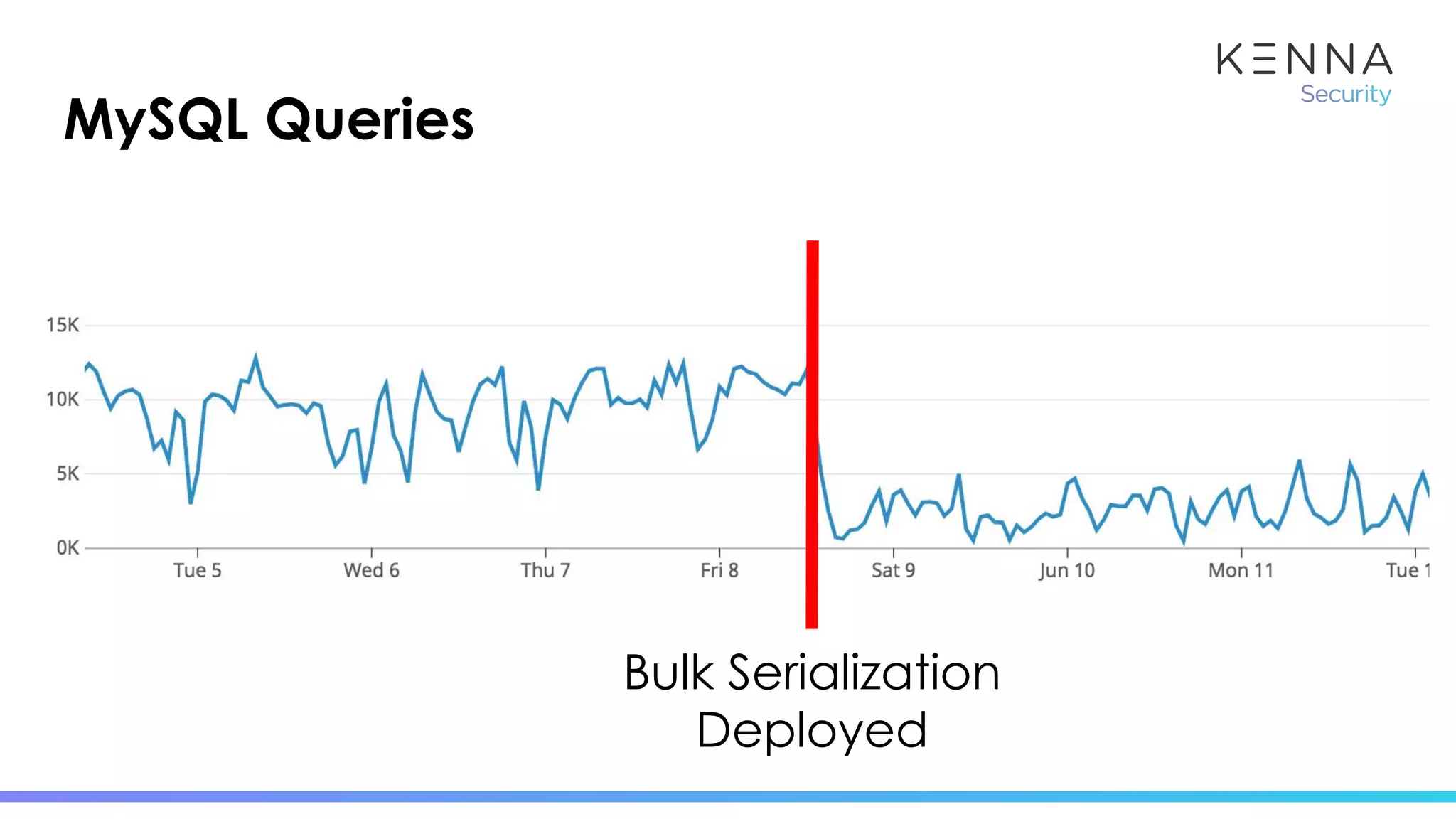

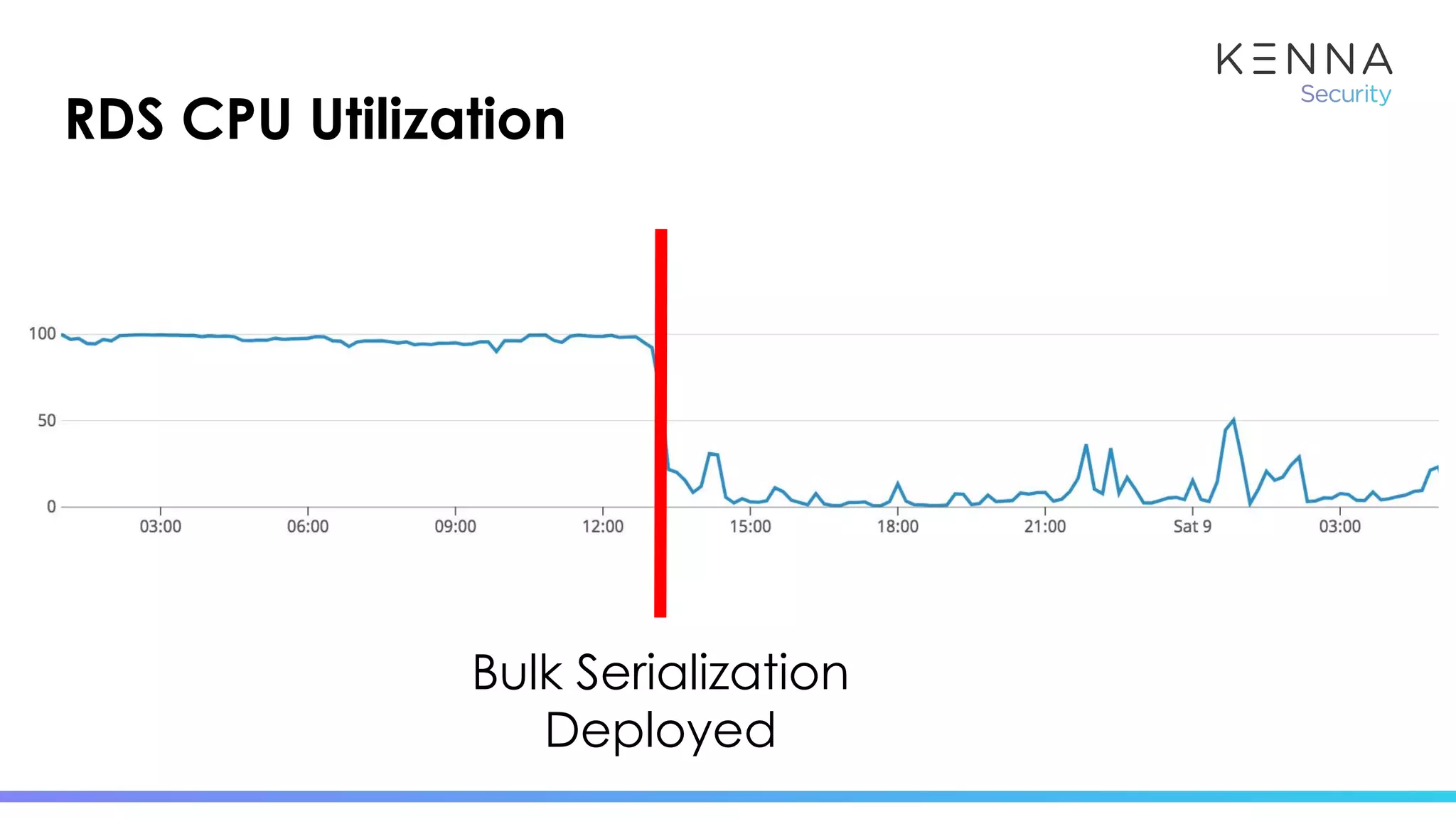

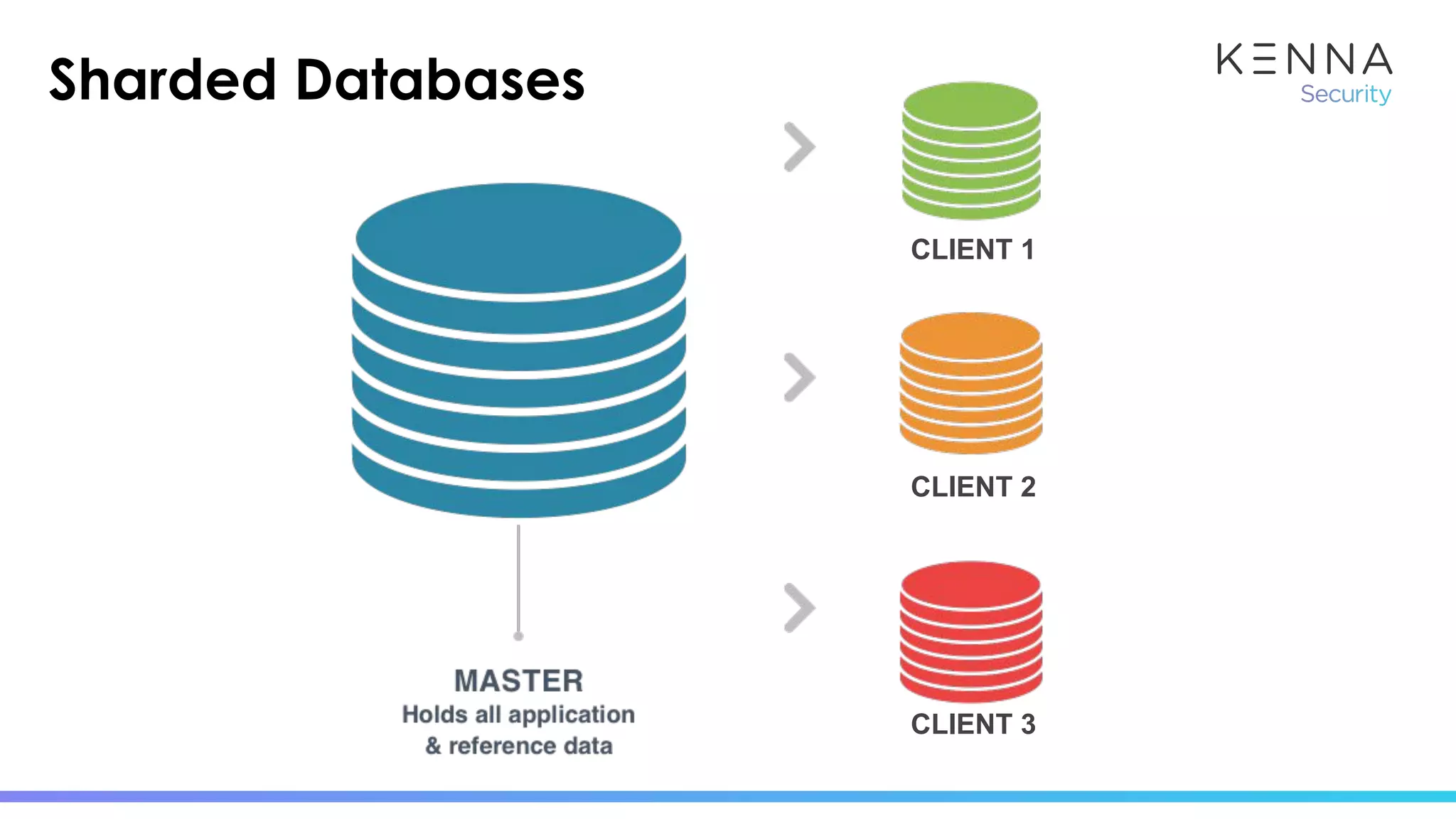

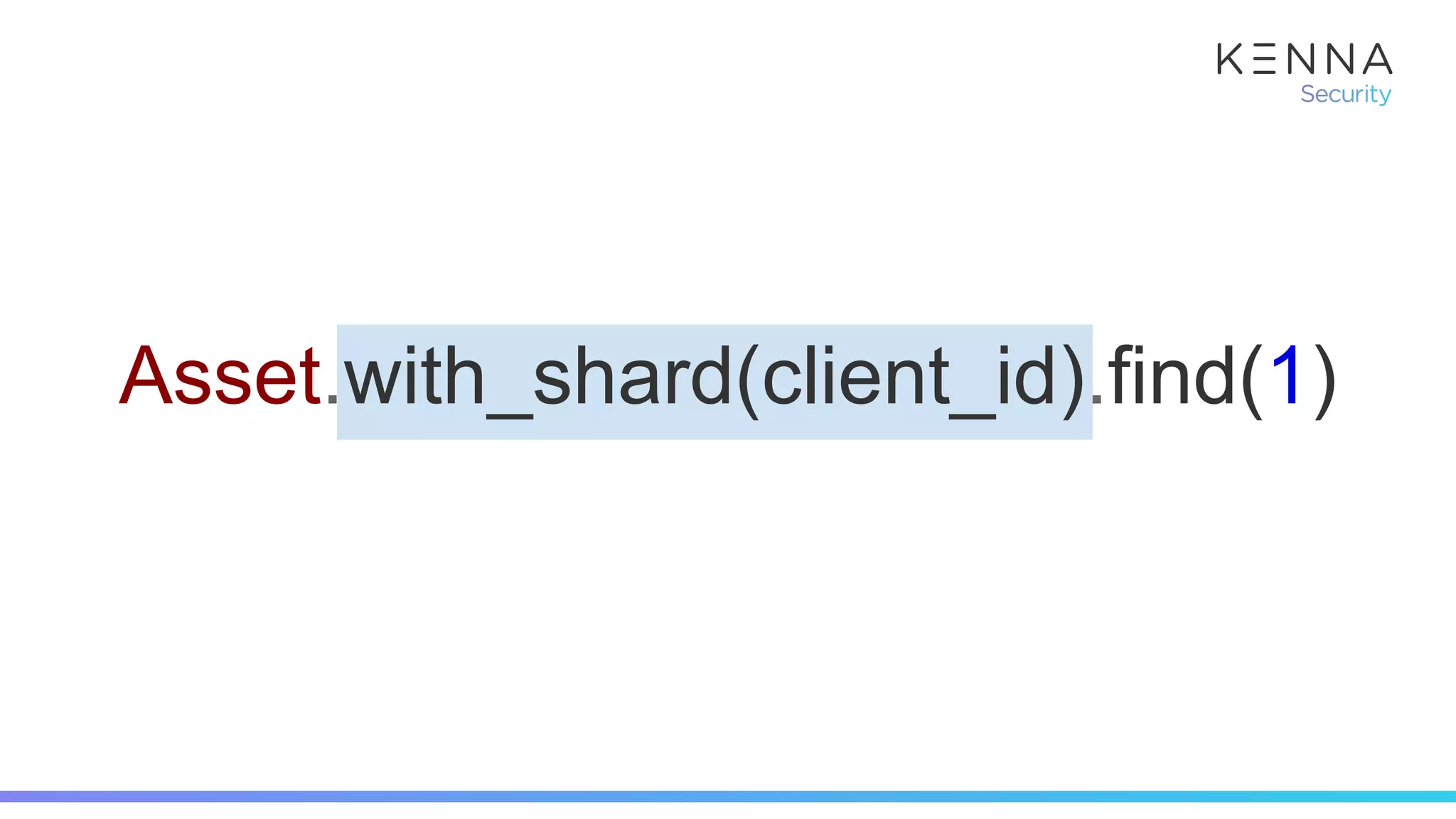

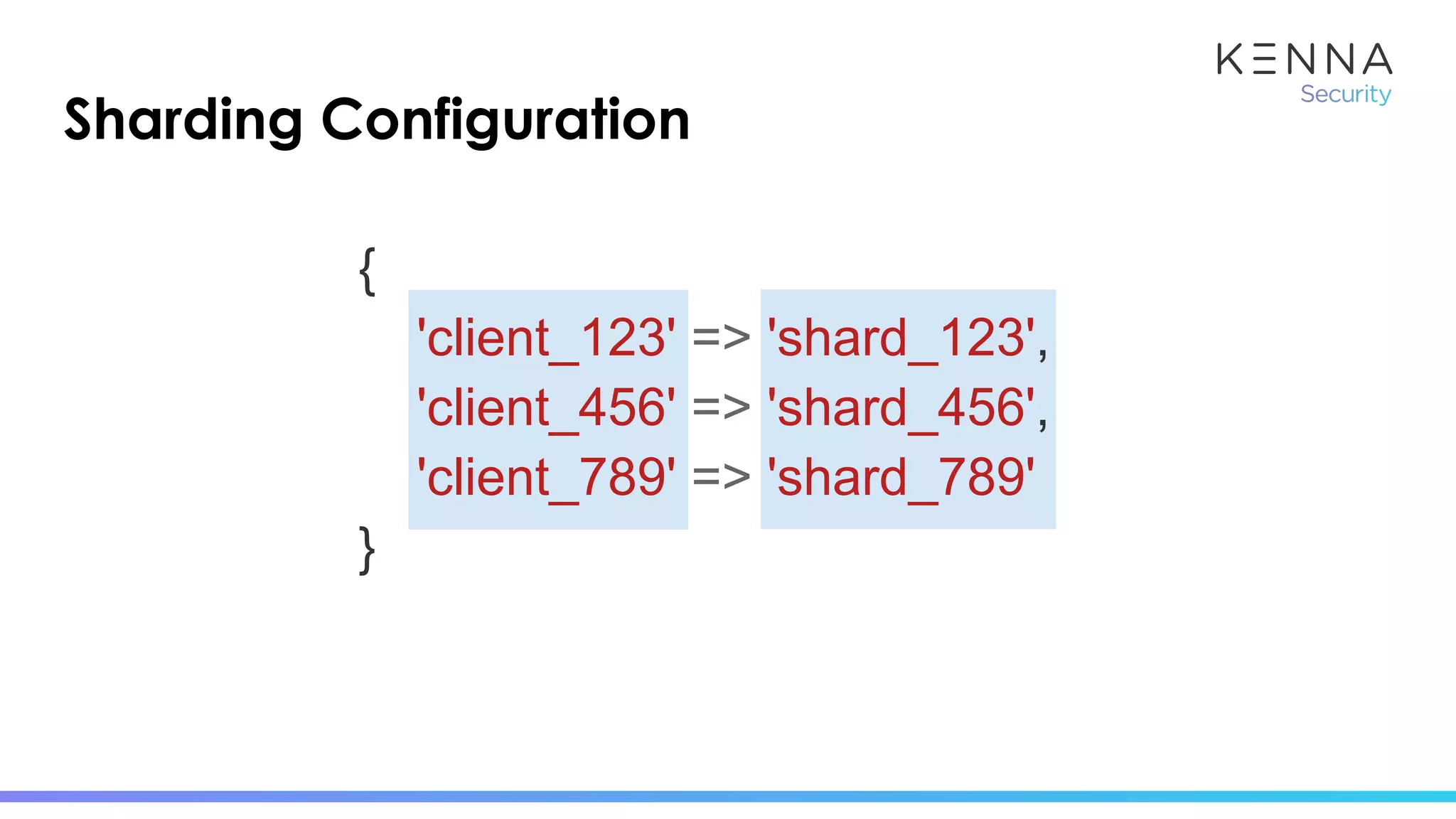

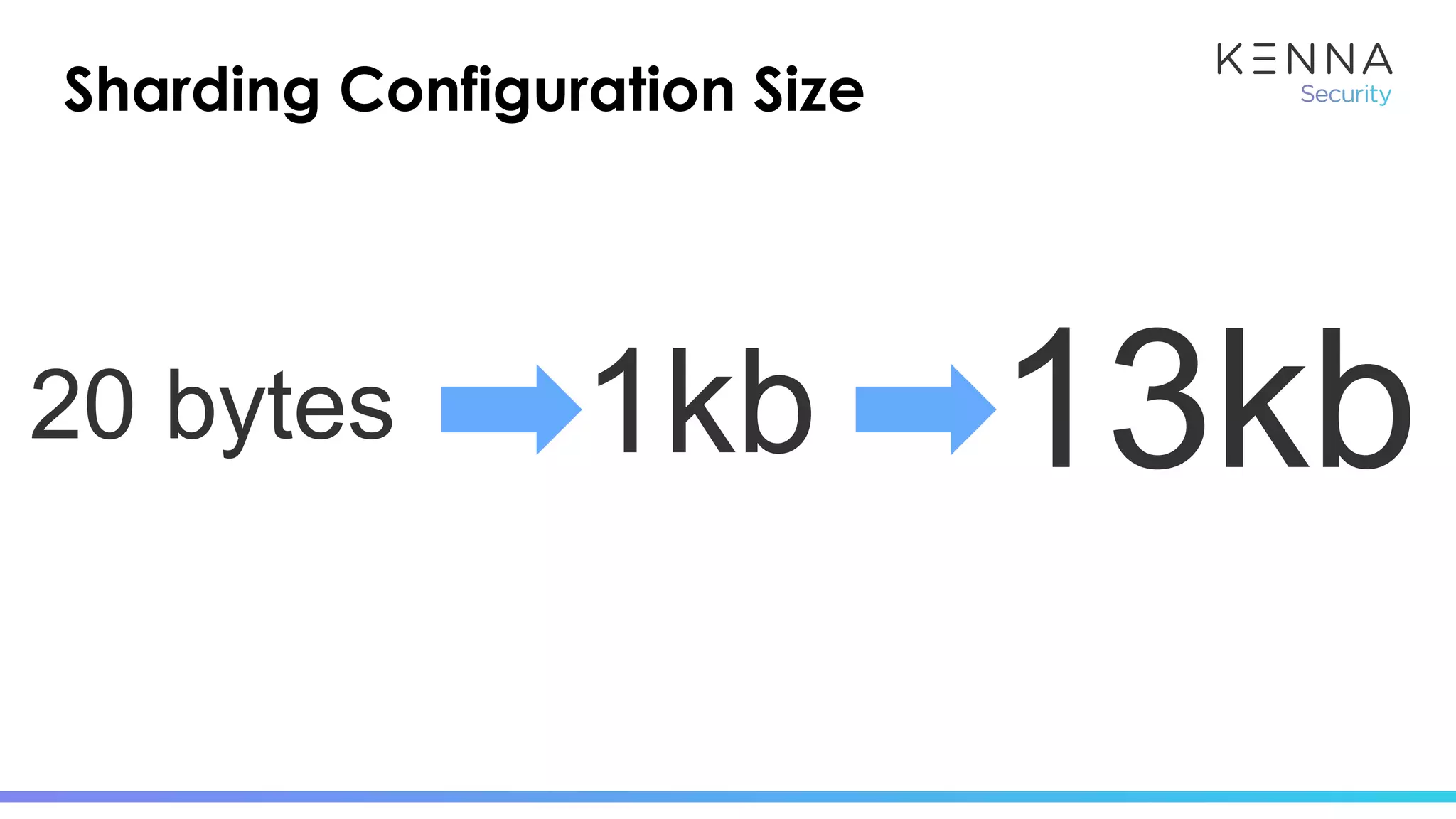

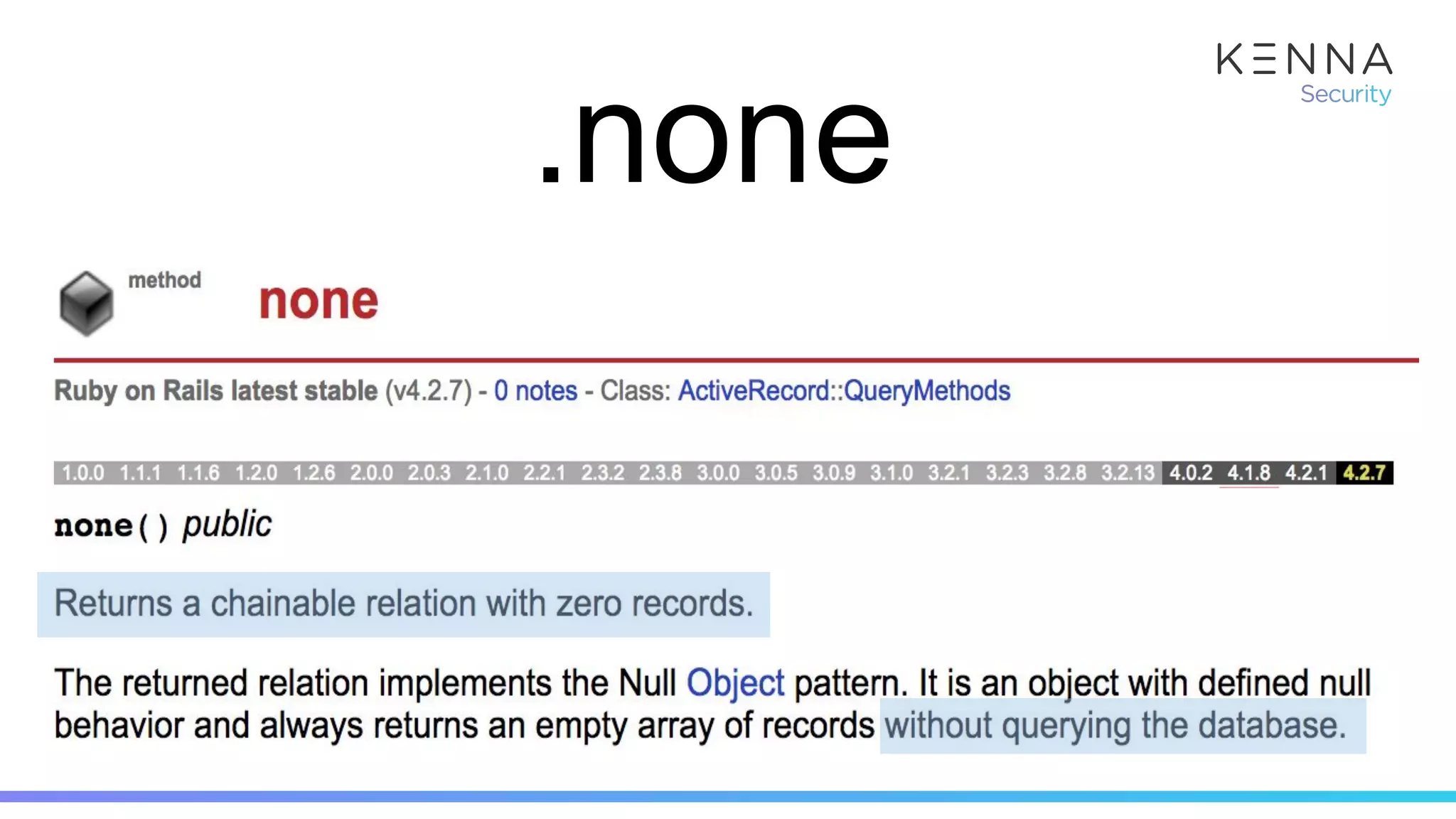

The document discusses various strategies for optimizing database performance and processing efficiency in Ruby applications, focusing on techniques like bulk serialization, caching, and reducing unnecessary database hits. It highlights the importance of leveraging caching mechanisms such as Redis and Elasticsearch to streamline workflows, particularly in scenarios with a large number of assets and vulnerabilities. Additionally, it emphasizes the role of background workers and sharding configurations in enhancing application responsiveness and resource utilization.

![The Result...

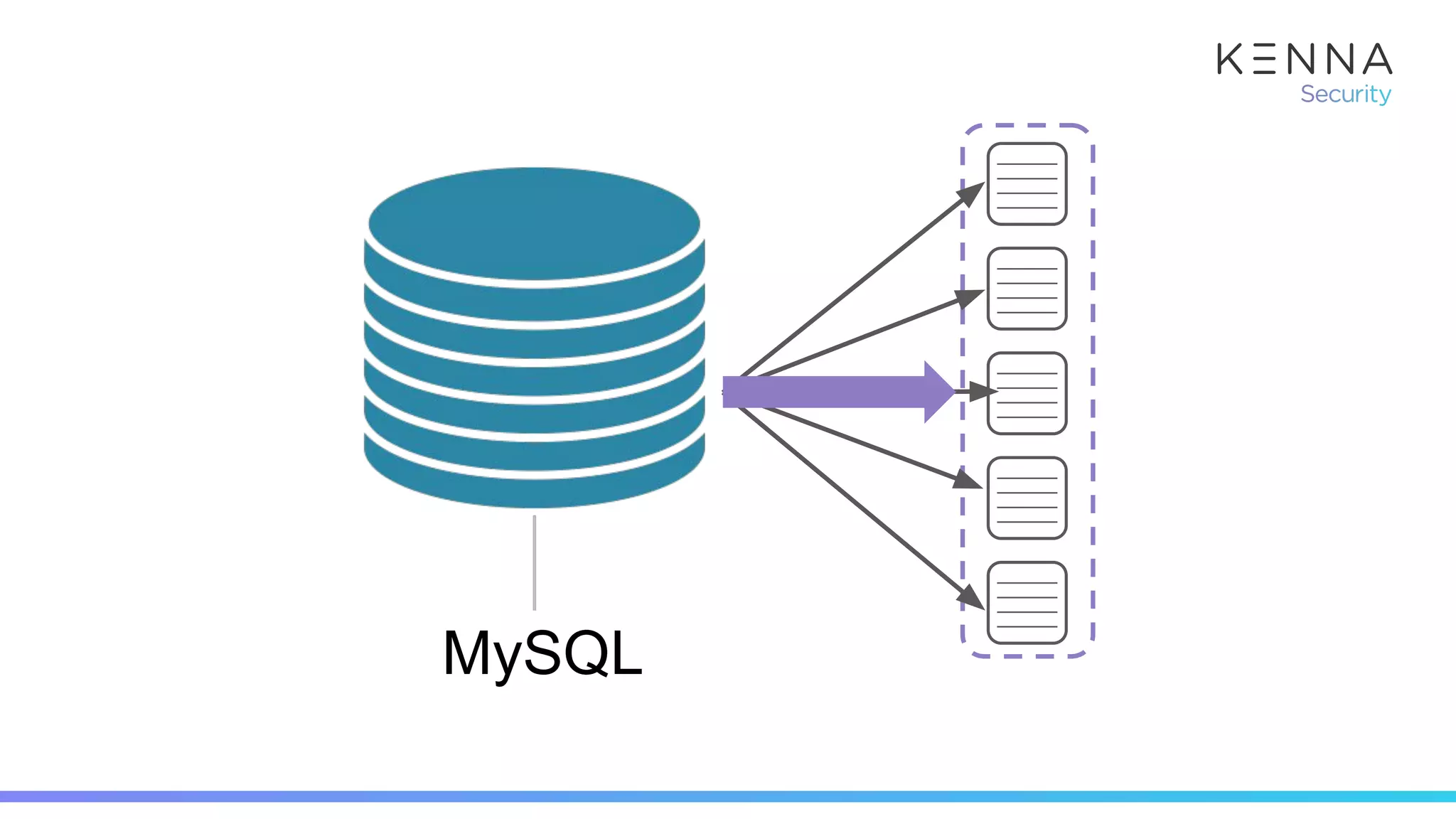

(pry)> vulns = Vulnerability.limit(300);

(pry)> Benchmark.realtime { vulns.each(&:serialize) }

=> 6.022452222998254

(pry)> Benchmark.realtime do

> BulkVulnerability.new(vulns, [], client).serialize

> end

=> 0.7267019419959979](https://image.slidesharecdn.com/cacheisking2-181111171545/75/Cache-is-King-Get-the-Most-Bang-for-Your-Buck-From-Ruby-27-2048.jpg)
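The roughly 8x speed-up above comes from serializing records in bulk instead of one at a time. The slides do not show the internals of `BulkVulnerability`, so the following is only a minimal sketch of the general idea, assuming a hypothetical in-memory `FakeDB` that counts lookups and a hypothetical `Vuln` struct: fetch the related rows once for the whole batch, then build each hash in memory.

```ruby
# Hypothetical in-memory stand-in for a database; it counts every lookup
# so the difference between the two approaches is visible.
class FakeDB
  attr_reader :queries

  def initialize(assets)
    @assets = assets # { asset_id => name }
    @queries = 0
  end

  # One round trip per call, returning a single asset name.
  def find_asset(id)
    @queries += 1
    @assets[id]
  end

  # One round trip for a whole batch of ids.
  def find_assets(ids)
    @queries += 1
    @assets.slice(*ids)
  end
end

# Hypothetical record type, not the talk's Vulnerability model.
Vuln = Struct.new(:id, :asset_id)

# Per-record serialization: each record triggers its own lookup.
def serialize_each(vulns, db)
  vulns.map { |v| { id: v.id, asset: db.find_asset(v.asset_id) } }
end

# Bulk serialization: fetch all related rows once, then build hashes in memory.
def serialize_bulk(vulns, db)
  assets = db.find_assets(vulns.map(&:asset_id).uniq)
  vulns.map { |v| { id: v.id, asset: assets[v.asset_id] } }
end

assets = { 1 => "web-01", 2 => "db-01" }
vulns = [Vuln.new(10, 1), Vuln.new(11, 2), Vuln.new(12, 1)]

slow_db = FakeDB.new(assets)
fast_db = FakeDB.new(assets)
slow = serialize_each(vulns, slow_db)
fast = serialize_bulk(vulns, fast_db)
puts "per-record: #{slow_db.queries} queries, bulk: #{fast_db.queries} query"
```

Both methods return the same hashes; only the number of round trips differs, and that count grows with the batch size in the per-record version.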

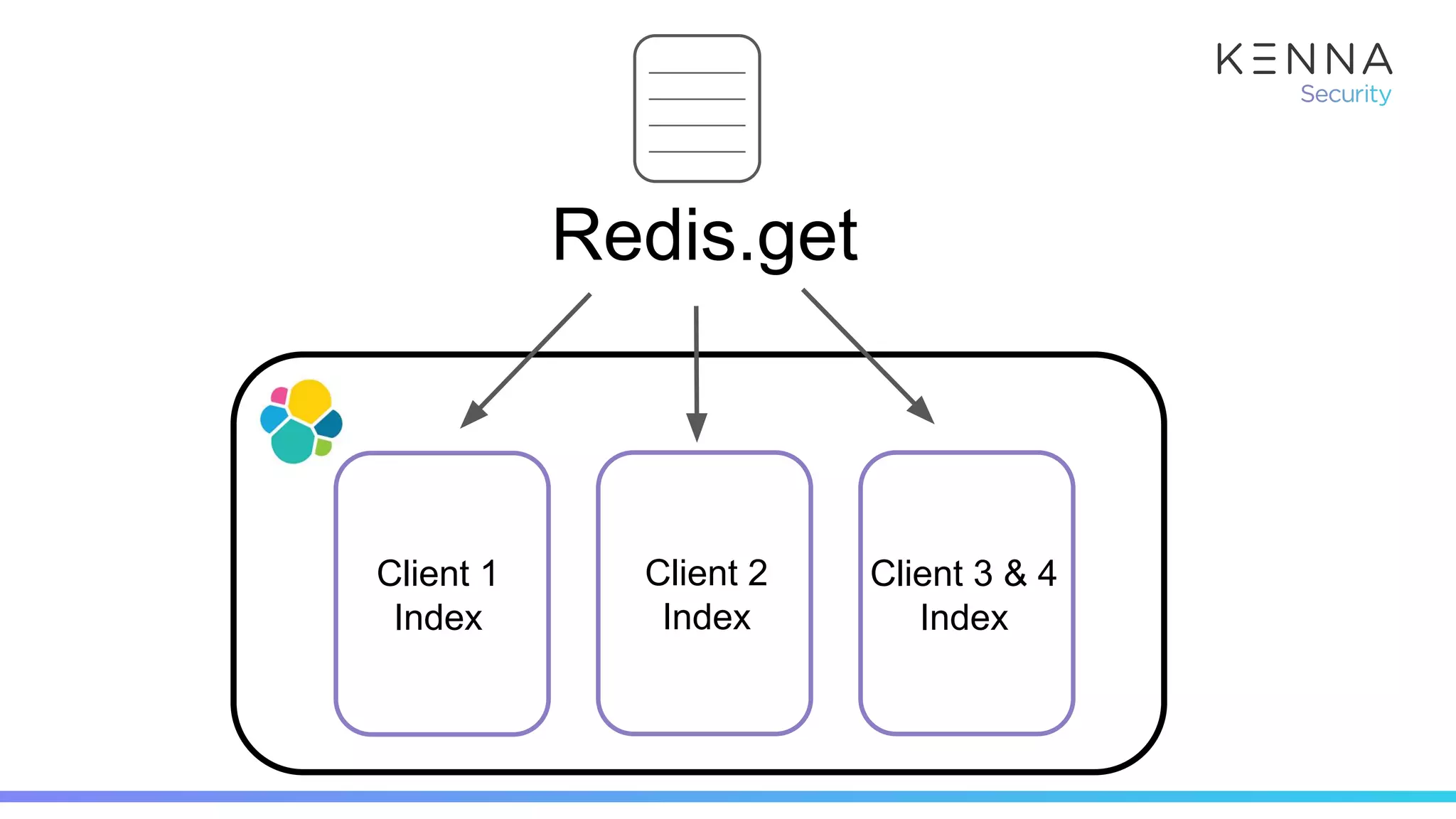

![indexing_hashes = vulnerability_hashes.map do |hash|
  {
    :_index => Redis.get("elasticsearch_index_#{hash[:client_id]}"),
    :_type => hash[:doc_type],
    :_id => hash[:id],
    :data => hash[:data]
  }
end](https://image.slidesharecdn.com/cacheisking2-181111171545/75/Cache-is-King-Get-the-Most-Bang-for-Your-Buck-From-Ruby-38-2048.jpg)

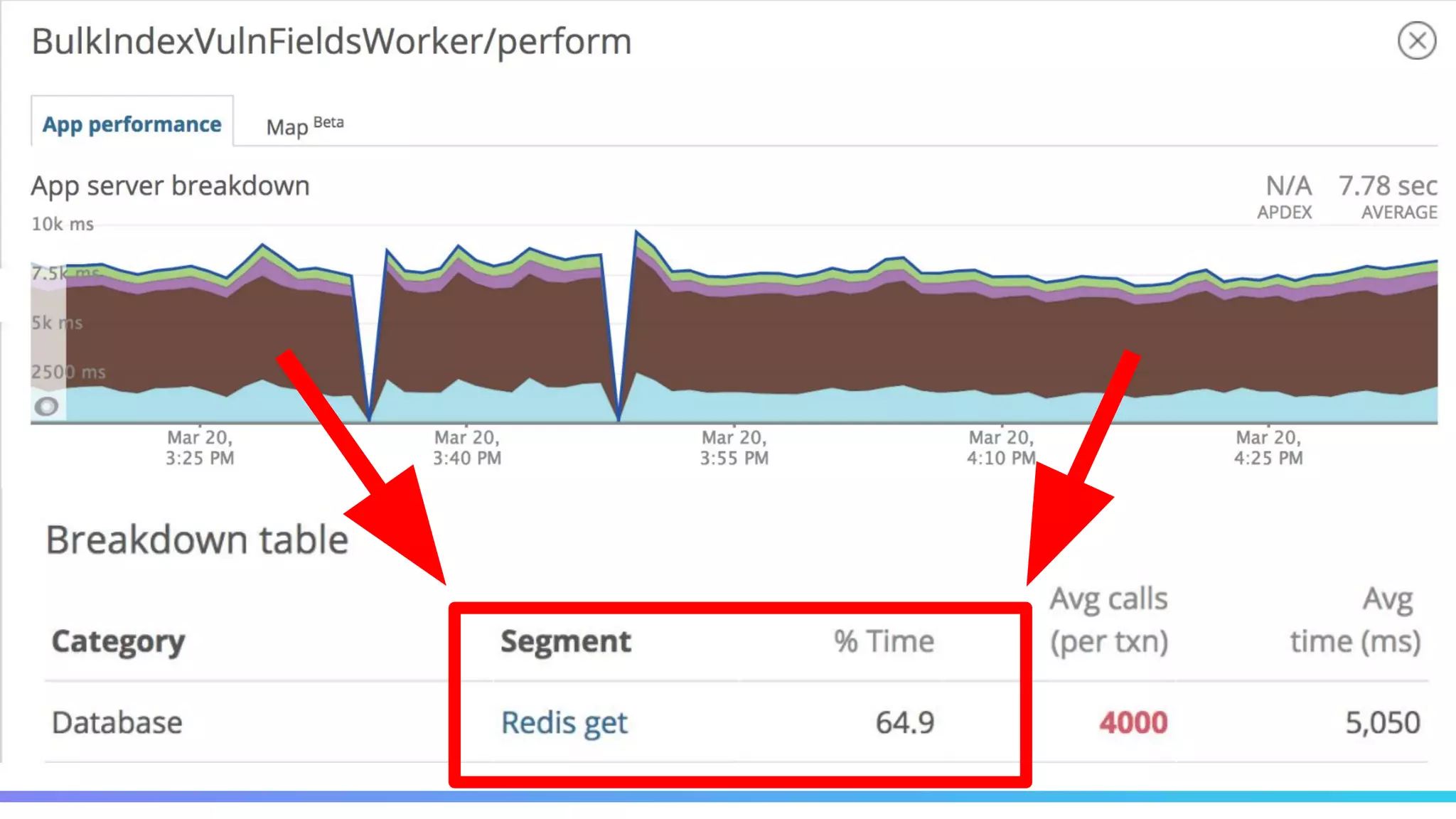

![(pry)> index_name = Redis.get("elasticsearch_index_#{client_id}")
DEBUG -- : [Redis] command=GET args="elasticsearch_index_1234"
DEBUG -- : [Redis] call_time=1.07 ms](https://image.slidesharecdn.com/cacheisking2-181111171545/75/Cache-is-King-Get-the-Most-Bang-for-Your-Buck-From-Ruby-40-2048.jpg)

![client_indexes = Hash.new do |h, client_id|
  h[client_id] = Redis.get("elasticsearch_index_#{client_id}")
end](https://image.slidesharecdn.com/cacheisking2-181111171545/75/Cache-is-King-Get-the-Most-Bang-for-Your-Buck-From-Ruby-43-2048.jpg)

![indexing_hashes = vuln_hashes.map do |hash|
  {
    :_index => client_indexes[hash[:client_id]],
    :_type => hash[:doc_type],
    :_id => hash[:id],
    :data => hash[:data]
  }
end](https://image.slidesharecdn.com/cacheisking2-181111171545/75/Cache-is-King-Get-the-Most-Bang-for-Your-Buck-From-Ruby-44-2048.jpg)
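To see why the memoizing hash helps, here is a small self-contained sketch. It assumes a hypothetical `FakeRedis` class that counts `get` calls in place of a real Redis client, and made-up index names; the `Hash.new` block is the same pattern as on the slides.

```ruby
# Hypothetical stand-in for a Redis client; it counts GET calls.
class FakeRedis
  attr_reader :get_calls

  def initialize(data)
    @data = data
    @get_calls = 0
  end

  def get(key)
    @get_calls += 1
    @data[key]
  end
end

redis = FakeRedis.new({
  "elasticsearch_index_1" => "index_a",
  "elasticsearch_index_2" => "index_b"
})

# The block runs only when a key is missing and stores the result,
# so each distinct client_id costs exactly one GET.
client_indexes = Hash.new do |h, client_id|
  h[client_id] = redis.get("elasticsearch_index_#{client_id}")
end

vuln_hashes = [
  { client_id: 1, id: 100 },
  { client_id: 1, id: 101 },
  { client_id: 2, id: 102 },
  { client_id: 1, id: 103 }
]

indexes = vuln_hashes.map { |hash| client_indexes[hash[:client_id]] }
puts "#{vuln_hashes.size} lookups, #{redis.get_calls} Redis GETs"
```

With a call time of about 1 ms per GET, as in the debug log earlier, the savings scale with how many hashes share a client. The cache lives only as long as the local hash, so staleness is bounded by the lifetime of the batch.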

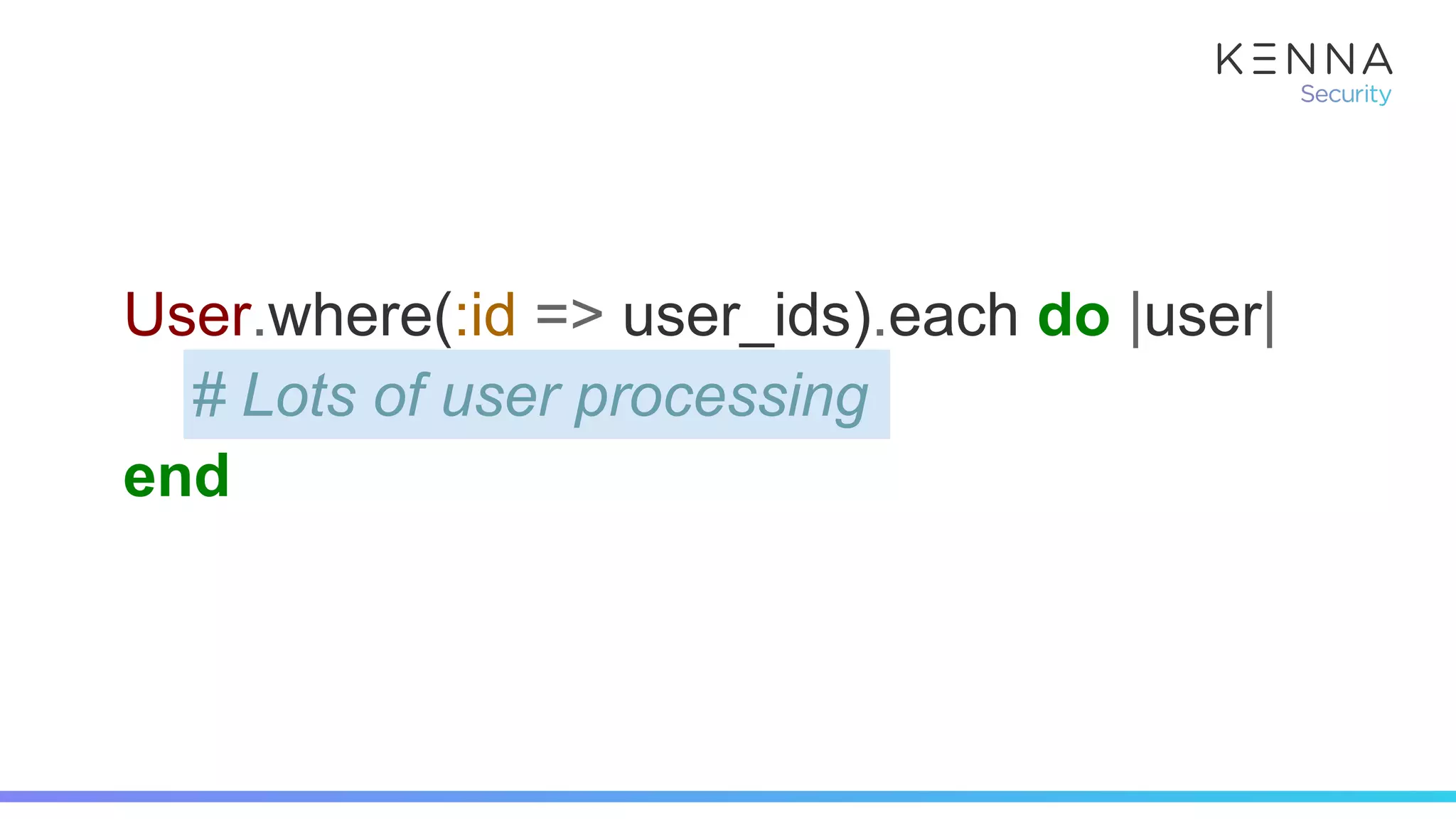

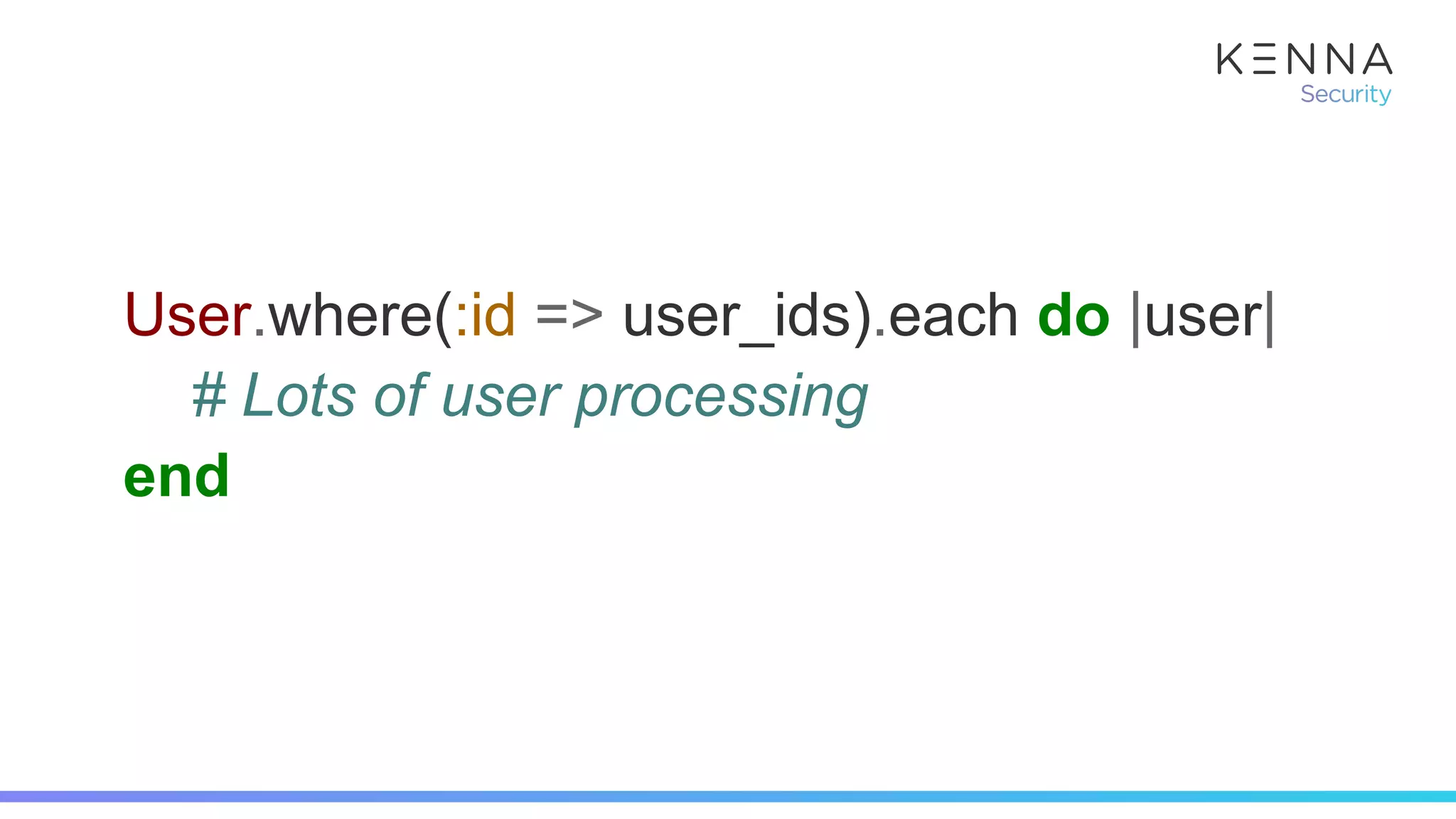

![(pry)> User.where(:id => [])
User Load (1.0ms) SELECT `users`.* FROM `users` WHERE 1=0
=> []](https://image.slidesharecdn.com/cacheisking2-181111171545/75/Cache-is-King-Get-the-Most-Bang-for-Your-Buck-From-Ruby-66-2048.jpg)

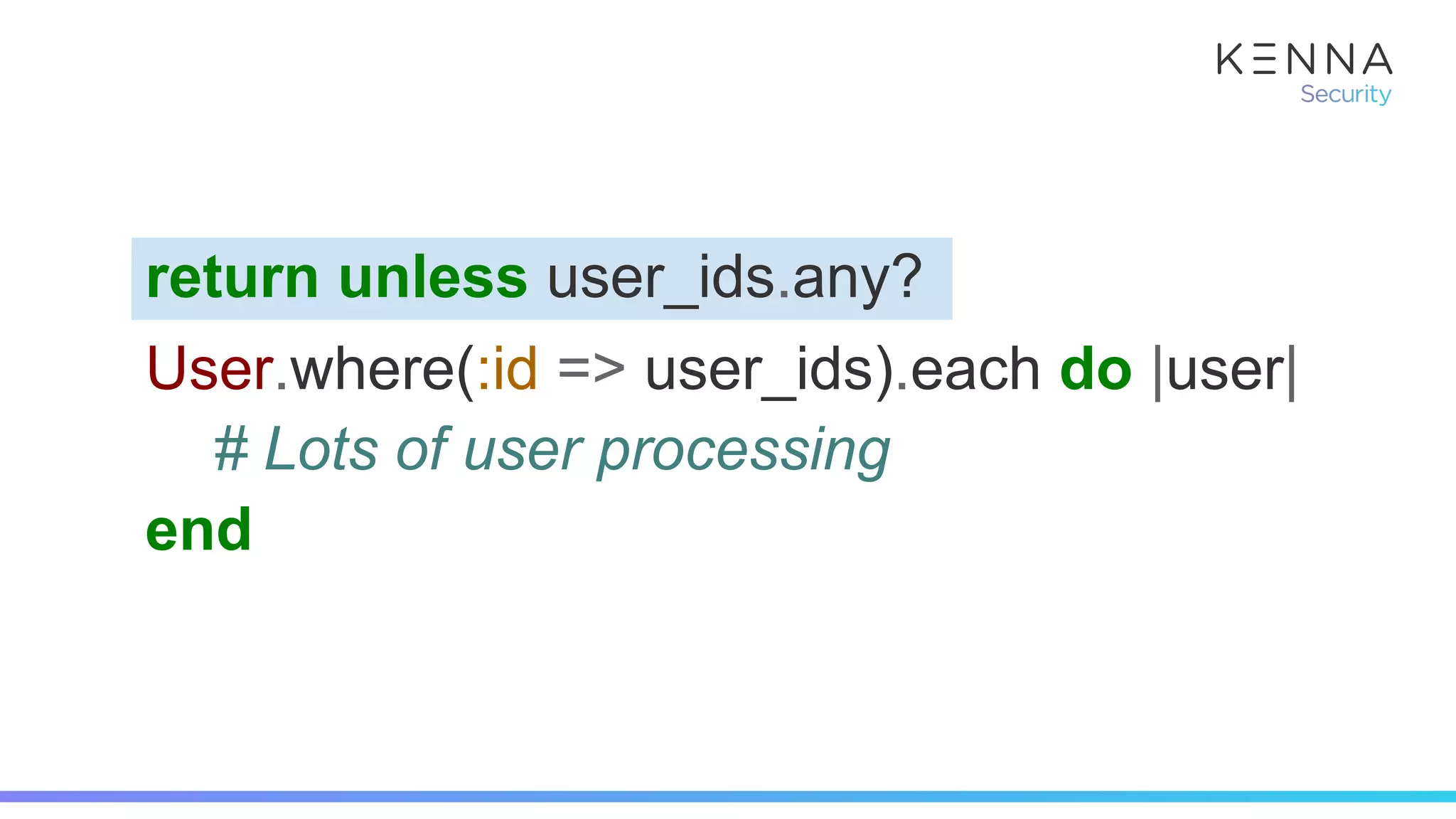

![(pry)> Benchmark.realtime do
>   10_000.times { User.where(:id => []) }
> end
=> 0.5508159045130014
(pry)> Benchmark.realtime do
>   10_000.times do
>     next unless ids.any?
>     User.where(:id => [])
>   end
> end
=> 0.0006368421018123627](https://image.slidesharecdn.com/cacheisking2-181111171545/75/Cache-is-King-Get-the-Most-Bang-for-Your-Buck-From-Ruby-69-2048.jpg)

![(pry)> Benchmark.realtime do
>   10_000.times { User.where(:id => []) }
> end
=> 0.5508159045130014
“Ruby is slow”
Hitting the database is slow!](https://image.slidesharecdn.com/cacheisking2-181111171545/75/Cache-is-King-Get-the-Most-Bang-for-Your-Buck-From-Ruby-70-2048.jpg)
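The guard from the benchmark can be wrapped in a small helper so callers cannot forget it. This is a sketch under stated assumptions: `FakeUserTable` and `users_for` are hypothetical names, and the fake table only simulates the fact that `User.where(:id => ids)` costs a round trip even when `ids` is empty.

```ruby
# Hypothetical stand-in for a model class; it counts simulated queries.
class FakeUserTable
  attr_reader :queries

  def initialize(rows)
    @rows = rows
    @queries = 0
  end

  # Simulates a lookup by id list: always costs a round trip,
  # even when ids is empty and nothing can match.
  def where_ids(ids)
    @queries += 1
    @rows.select { |row| ids.include?(row[:id]) }
  end
end

# Returns early for an empty id list so the table is never touched.
def users_for(table, ids)
  return [] if ids.empty?

  table.where_ids(ids)
end

table = FakeUserTable.new([{ id: 1, name: "Ann" }, { id: 2, name: "Bo" }])

empty_result = users_for(table, [])
queries_after_empty = table.queries

found = users_for(table, [2])
puts "after empty lookup: #{queries_after_empty} queries, after real lookup: #{table.queries}"
```

The early return trades one cheap in-memory check for a skipped round trip, which is where the difference between the two benchmark timings comes from.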

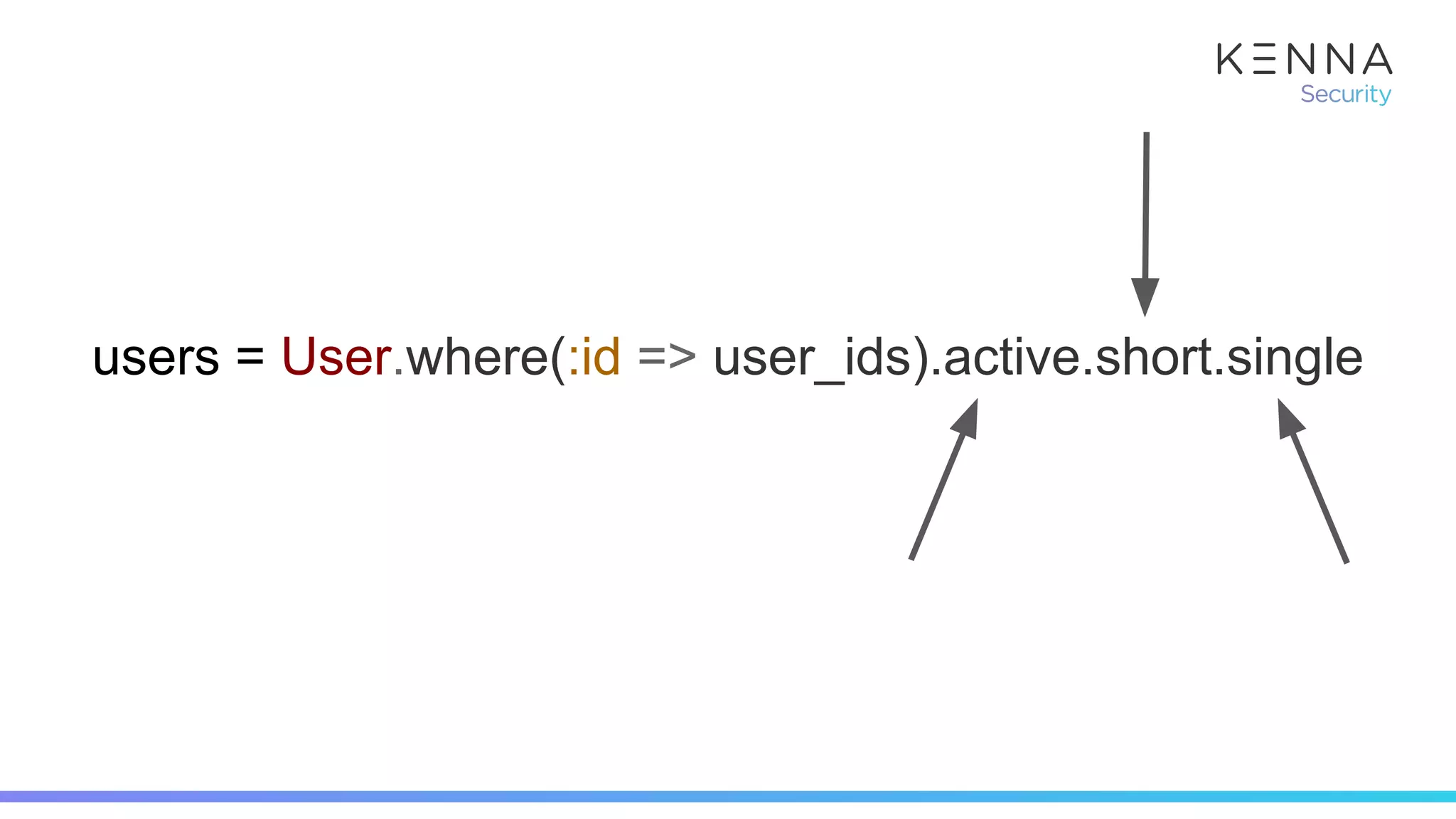

![(pry)> User.where(:id => []).active.tall.single
User Load (0.7ms) SELECT `users`.* FROM `users` WHERE 1=0 AND `users`.`active` = 1 AND `users`.`short` = 0 AND `users`.`single` = 1
=> []
(pry)> User.none.active.tall.single
=> []
.none in action...](https://image.slidesharecdn.com/cacheisking2-181111171545/75/Cache-is-King-Get-the-Most-Bang-for-Your-Buck-From-Ruby-74-2048.jpg)
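`.none` follows the null object pattern: it returns something that accepts the same chained calls as a normal relation but never reaches the database. The sketch below illustrates that pattern in plain Ruby. `FakeRelation` and `NullRelation` are hypothetical classes written for this example and are not how ActiveRecord implements `none`.

```ruby
# Hypothetical chainable relation: collects filters and "queries" on to_a.
class FakeRelation
  def initialize(rows, log, filters = [])
    @rows = rows
    @log = log # shared array recording each simulated query
    @filters = filters
  end

  # Returns a new relation with one more filter; nothing runs yet.
  def where(&filter)
    FakeRelation.new(@rows, @log, @filters + [filter])
  end

  # Switches the chain to the null object.
  def none
    NullRelation.new
  end

  # Running the relation is the only step that costs a query.
  def to_a
    @log << :query
    @rows.select { |row| @filters.all? { |f| f.call(row) } }
  end
end

# Null object: accepts the same chain but never records a query.
class NullRelation
  def where(&_filter)
    self
  end

  def none
    self
  end

  def to_a
    []
  end
end

log = []
users = FakeRelation.new([{ id: 1, active: true }, { id: 2, active: false }], log)

active = users.where { |u| u[:active] }.to_a
nothing = users.none.where { |u| u[:active] }.to_a
puts "queries run: #{log.size}"
```

Because the null object answers every scope with itself, calling code can keep chaining without special-casing the empty result.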