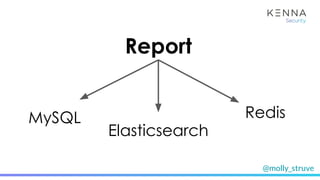

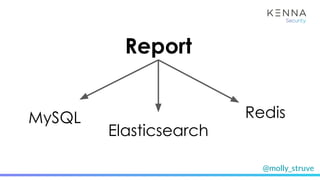

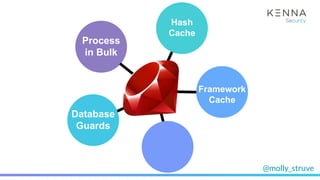

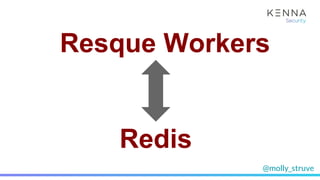

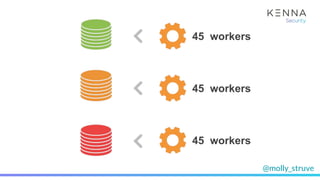

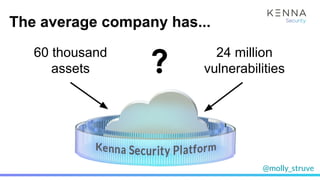

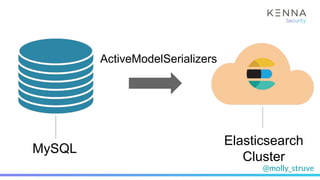

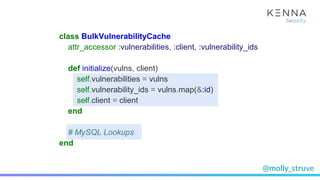

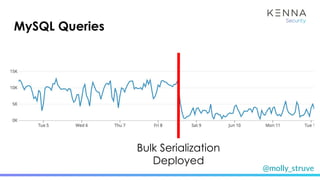

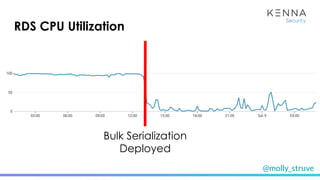

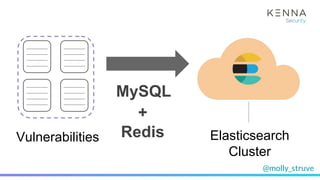

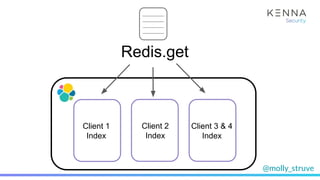

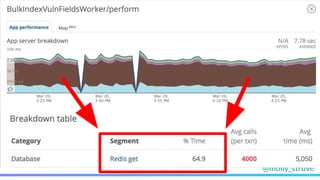

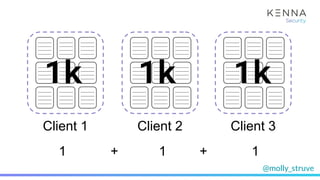

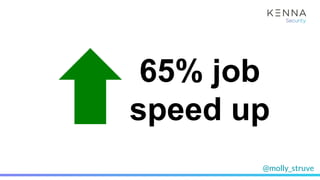

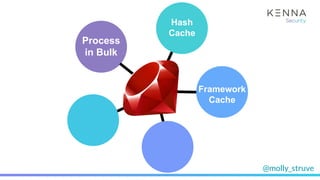

This deck discusses strategies for improving data-handling performance in Ruby, focusing on techniques such as bulk serialization, caching, and reducing database queries. It presents implementation examples and their benefits, highlighting a significant reduction in processing times. It also mentions tools such as Redis and Elasticsearch for more efficient access to and management of vulnerabilities in a site reliability engineering setting.

![@molly_struve

The Result...

(pry)> vulns = Vulnerability.limit(300);

(pry)> Benchmark.realtime { vulns.each(&:serialize) }

=> 6.022452222998254

(pry)> Benchmark.realtime do

> BulkVulnerability.new(vulns, [], client).serialize

> end

=> 0.7267019419959979](https://image.slidesharecdn.com/cacheisking-rubyhack-190404233353/85/Cache-is-King-RubyHACK-2019-27-320.jpg)
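The benchmark above compares per-record serialization with a bulk serializer. The slides do not show how `BulkVulnerability` works internally, so the following is only a minimal sketch of the general idea, assuming the savings come from replacing one lookup per record with a single shared lookup. `FakeStore`, `Vuln`, `serialize_one`, and `serialize_bulk` are hypothetical names invented for illustration.

```ruby
# Hypothetical stand-in for a data store; it only counts round trips.
class FakeStore
  attr_reader :calls

  def initialize(rows)
    @rows = rows # { vuln_id => scanner name }
    @calls = 0
  end

  # One round trip per id.
  def fetch(id)
    @calls += 1
    @rows[id]
  end

  # One round trip for any number of ids.
  def fetch_many(ids)
    @calls += 1
    @rows.slice(*ids)
  end
end

# Hypothetical record type, not the Vulnerability model from the slides.
Vuln = Struct.new(:id, :title)

# Per-record serialization: every record performs its own lookup.
def serialize_one(vuln, store)
  { id: vuln.id, title: vuln.title, scanner: store.fetch(vuln.id) }
end

# Bulk serialization: a single lookup is shared by all records.
def serialize_bulk(vulns, store)
  scanners = store.fetch_many(vulns.map(&:id))
  vulns.map { |v| { id: v.id, title: v.title, scanner: scanners[v.id] } }
end

vulns = (1..300).map { |i| Vuln.new(i, "vuln #{i}") }
rows  = vulns.to_h { |v| [v.id, "scanner #{v.id % 3}"] }

individual_store = FakeStore.new(rows)
individual = vulns.map { |v| serialize_one(v, individual_store) }

bulk_store = FakeStore.new(rows)
bulk = serialize_bulk(vulns, bulk_store)

puts "individual round trips: #{individual_store.calls}"
puts "bulk round trips:       #{bulk_store.calls}"
```

Both paths produce the same output; only the number of round trips differs.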

![@molly_struve

indexing_hashes = vulnerability_hashes.map do |hash|

{

:_index => Redis.get("elasticsearch_index_#{hash[:client_id]}"),

:_type => hash[:doc_type],

:_id => hash[:id],

:data => hash[:data]

}

end](https://image.slidesharecdn.com/cacheisking-rubyhack-190404233353/85/Cache-is-King-RubyHACK-2019-38-320.jpg)

![@molly_struve

(pry)> index_name = Redis.get("elasticsearch_index_#{client_id}")

DEBUG -- : [Redis] command=GET args="elasticsearch_index_1234"

DEBUG -- : [Redis] call_time=1.07 ms

GET](https://image.slidesharecdn.com/cacheisking-rubyhack-190404233353/85/Cache-is-King-RubyHACK-2019-40-320.jpg)

![@molly_struve

client_indexes = Hash.new do |h, client_id|

h[client_id] = Redis.get("elasticsearch_index_#{client_id}")

end](https://image.slidesharecdn.com/cacheisking-rubyhack-190404233353/85/Cache-is-King-RubyHACK-2019-43-320.jpg)
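The hash above uses Ruby's `Hash.new` default block as a local cache: the block runs only when a key is missing, and storing the result in `h[client_id]` means later lookups for the same client never reach Redis. Here is a minimal runnable sketch of that behaviour. `FakeRedis` and the sample data are hypothetical stand-ins so the example can run without a Redis server.

```ruby
# Hypothetical stand-in for Redis that counts GET calls.
class FakeRedis
  attr_reader :gets

  def initialize(data)
    @data = data
    @gets = 0
  end

  def get(key)
    @gets += 1
    @data[key]
  end
end

redis = FakeRedis.new(
  "elasticsearch_index_1" => "index_a",
  "elasticsearch_index_2" => "index_b"
)

# The block runs only on a cache miss and stores the fetched value.
client_indexes = Hash.new do |h, client_id|
  h[client_id] = redis.get("elasticsearch_index_#{client_id}")
end

# Sample data, invented for illustration.
vuln_hashes = [
  { :client_id => 1, :id => 10, :doc_type => "vuln", :data => {} },
  { :client_id => 1, :id => 11, :doc_type => "vuln", :data => {} },
  { :client_id => 2, :id => 12, :doc_type => "vuln", :data => {} },
  { :client_id => 1, :id => 13, :doc_type => "vuln", :data => {} }
]

indexing_hashes = vuln_hashes.map do |hash|
  {
    :_index => client_indexes[hash[:client_id]],
    :_type  => hash[:doc_type],
    :_id    => hash[:id],
    :data   => hash[:data]
  }
end

puts "records: #{indexing_hashes.length}, Redis GETs: #{redis.gets}"
```

Four records across two clients cost two GETs instead of four. The cache lives only as long as the hash, so in this sketch it is rebuilt for each batch and does not go stale between batches.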

![@molly_struve

indexing_hashes = vuln_hashes.map do |hash|

{

:_index => client_indexes[hash[:client_id]],

:_type => hash[:doc_type],

:_id => hash[:id],

:data => hash[:data]

}

end](https://image.slidesharecdn.com/cacheisking-rubyhack-190404233353/85/Cache-is-King-RubyHACK-2019-44-320.jpg)

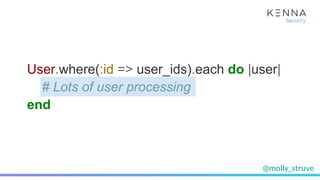

![@molly_struve

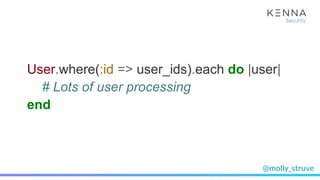

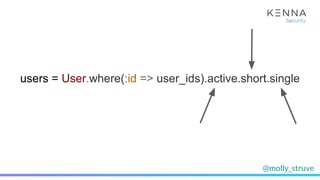

(pry)> User.where(:id => [])

User Load (1.0ms) SELECT `users`.* FROM `users` WHERE 1=0

=> []](https://image.slidesharecdn.com/cacheisking-rubyhack-190404233353/85/Cache-is-King-RubyHACK-2019-66-320.jpg)

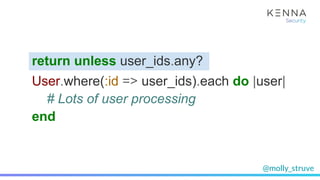

![@molly_struve

(pry)> Benchmark.realtime do

> 10_000.times { User.where(:id => []) }

> end

=> 0.5508159045130014

(pry)> Benchmark.realtime do

> 10_000.times do

> next unless ids.any?

> User.where(:id => [])

> end

> end

=> 0.0006368421018123627](https://image.slidesharecdn.com/cacheisking-rubyhack-190404233353/85/Cache-is-King-RubyHACK-2019-69-320.jpg)
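The second benchmark skips the query whenever the id list is empty. One way to package that guard is a small helper that returns early, sketched below. `FakeUserTable` and `find_users` are hypothetical names; the fake table only counts how often it is queried, so the example runs without a database.

```ruby
# Hypothetical stand-in for a users table that counts queries.
class FakeUserTable
  attr_reader :queries

  def initialize(rows)
    @rows = rows
    @queries = 0
  end

  # Each call represents one database round trip.
  def where_ids(ids)
    @queries += 1
    @rows.select { |row| ids.include?(row[:id]) }
  end
end

# Guard clause: an empty id list can never match, so skip the query.
def find_users(table, ids)
  return [] if ids.empty?

  table.where_ids(ids)
end

table = FakeUserTable.new([{ :id => 1, :name => "a" }, { :id => 2, :name => "b" }])

empty_result = nil
10_000.times { empty_result = find_users(table, []) }
puts "queries after 10,000 empty lookups: #{table.queries}"

found = find_users(table, [2])
puts "queries after one real lookup: #{table.queries}"
```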

![@molly_struve

(pry)> Benchmark.realtime do

> 10_000.times { User.where(:id => []) }

> end

=> 0.5508159045130014

“Ruby is slow” Hitting the database is slow!](https://image.slidesharecdn.com/cacheisking-rubyhack-190404233353/85/Cache-is-King-RubyHACK-2019-70-320.jpg)

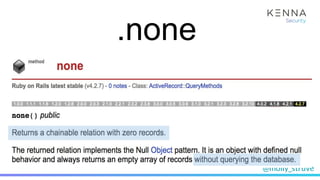

![@molly_struve

(pry)> User.where(:id => []).active.tall.single

User Load (0.7ms) SELECT `users`.* FROM `users` WHERE 1=0 AND

`users`.`active` = 1 AND `users`.`short` = 0 AND `users`.`single` = 1

=> []

(pry)> User.none.active.tall.single

=> []

.none in action...](https://image.slidesharecdn.com/cacheisking-rubyhack-190404233353/85/Cache-is-King-RubyHACK-2019-74-320.jpg)
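`User.none` behaves like a null object: it accepts the same chained scopes as a normal relation but never sends a query. The following plain-Ruby sketch illustrates that pattern only and is not how ActiveRecord implements it. `LoggingRelation` and `NullRelation` are hypothetical classes invented for illustration.

```ruby
# Hypothetical relation that logs the query it would send when loaded.
class LoggingRelation
  def initialize(log, conditions = [])
    @log = log
    @conditions = conditions
  end

  # Chaining returns a new relation; nothing runs yet.
  def where(condition)
    LoggingRelation.new(@log, @conditions + [condition])
  end

  # Loading is the step that costs a round trip.
  def to_a
    @log << "SELECT * FROM users WHERE #{@conditions.join(' AND ')}"
    []
  end
end

# Null object: accepts the same chain but never touches the log.
class NullRelation
  def where(_condition)
    self
  end

  def to_a
    []
  end
end

log = []
queried = LoggingRelation.new(log).where("1=0").where("active = 1").to_a
skipped = NullRelation.new.where("active = 1").where("single = 1").to_a

puts "queries logged: #{log.length}"
```

Both chains return an empty array, but only the first one records a query.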