- Logstash is a log management tool that parses and enriches logs and sends them to destinations such as Elasticsearch or Graphite.

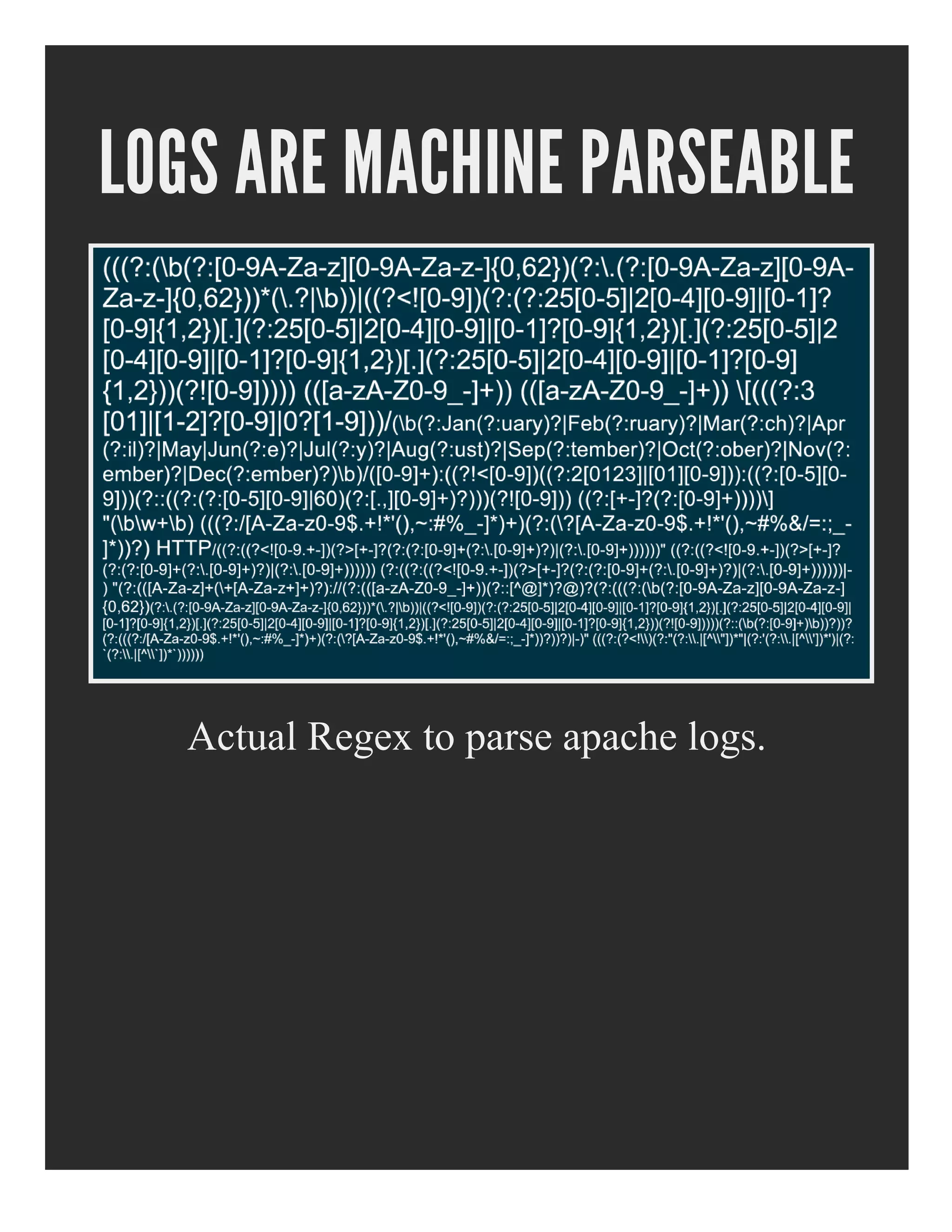

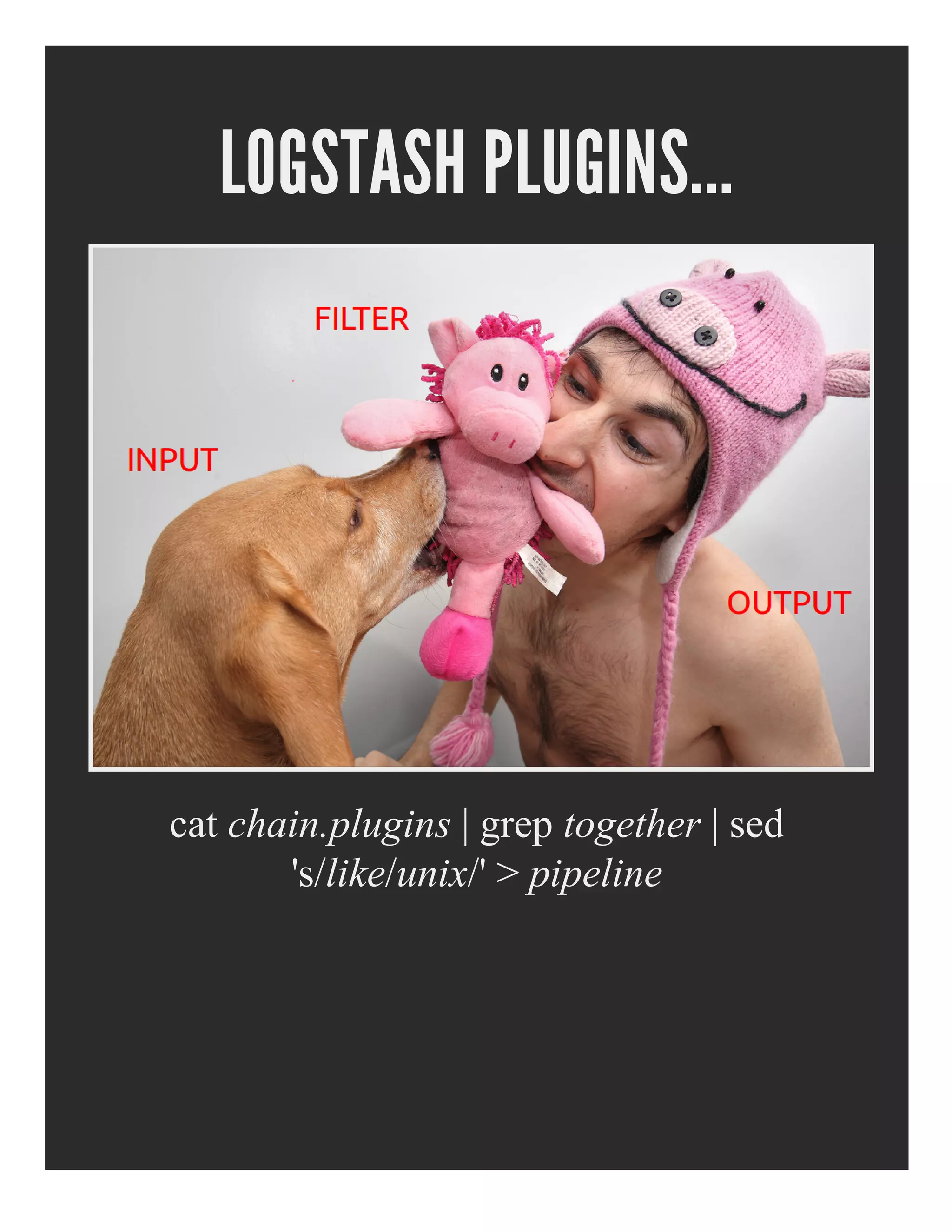

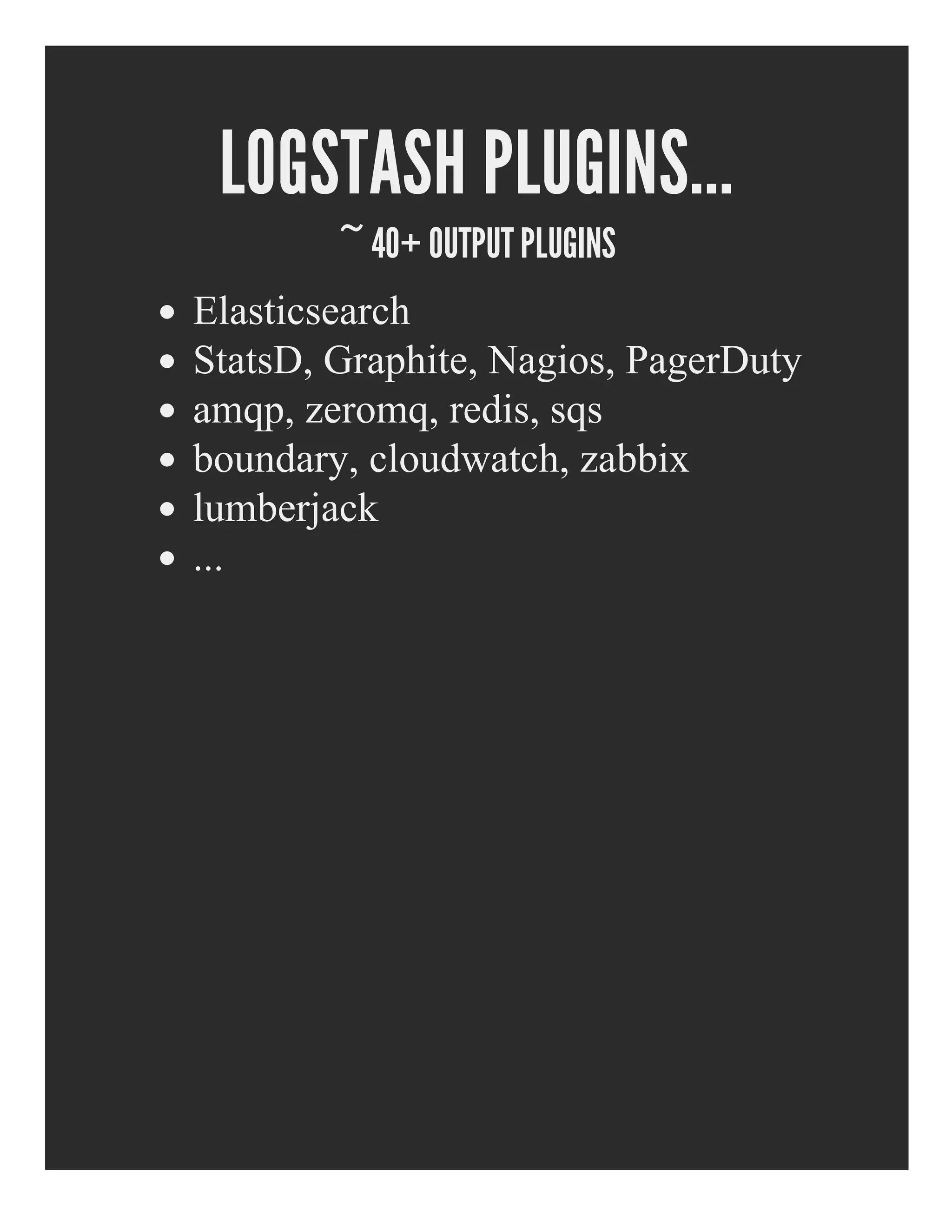

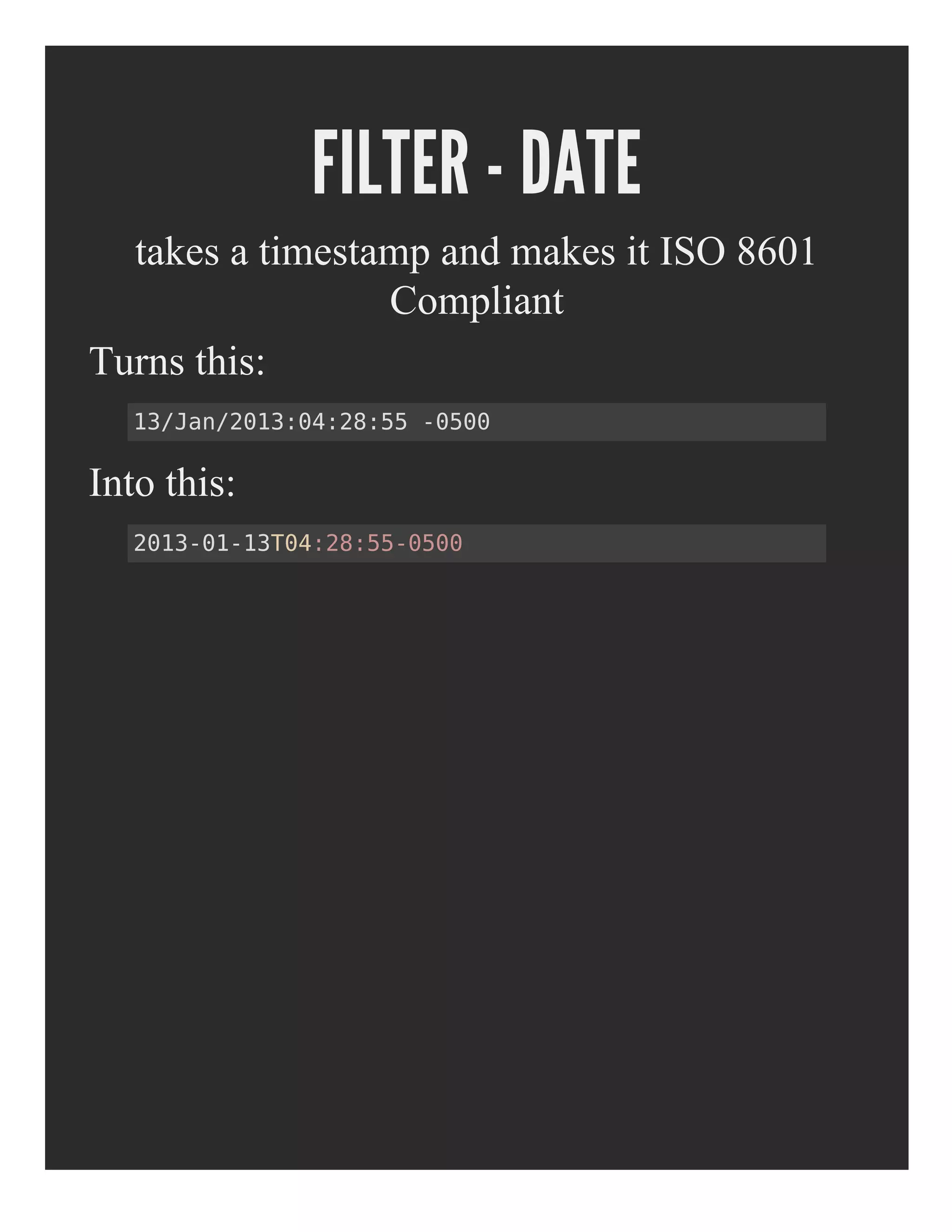

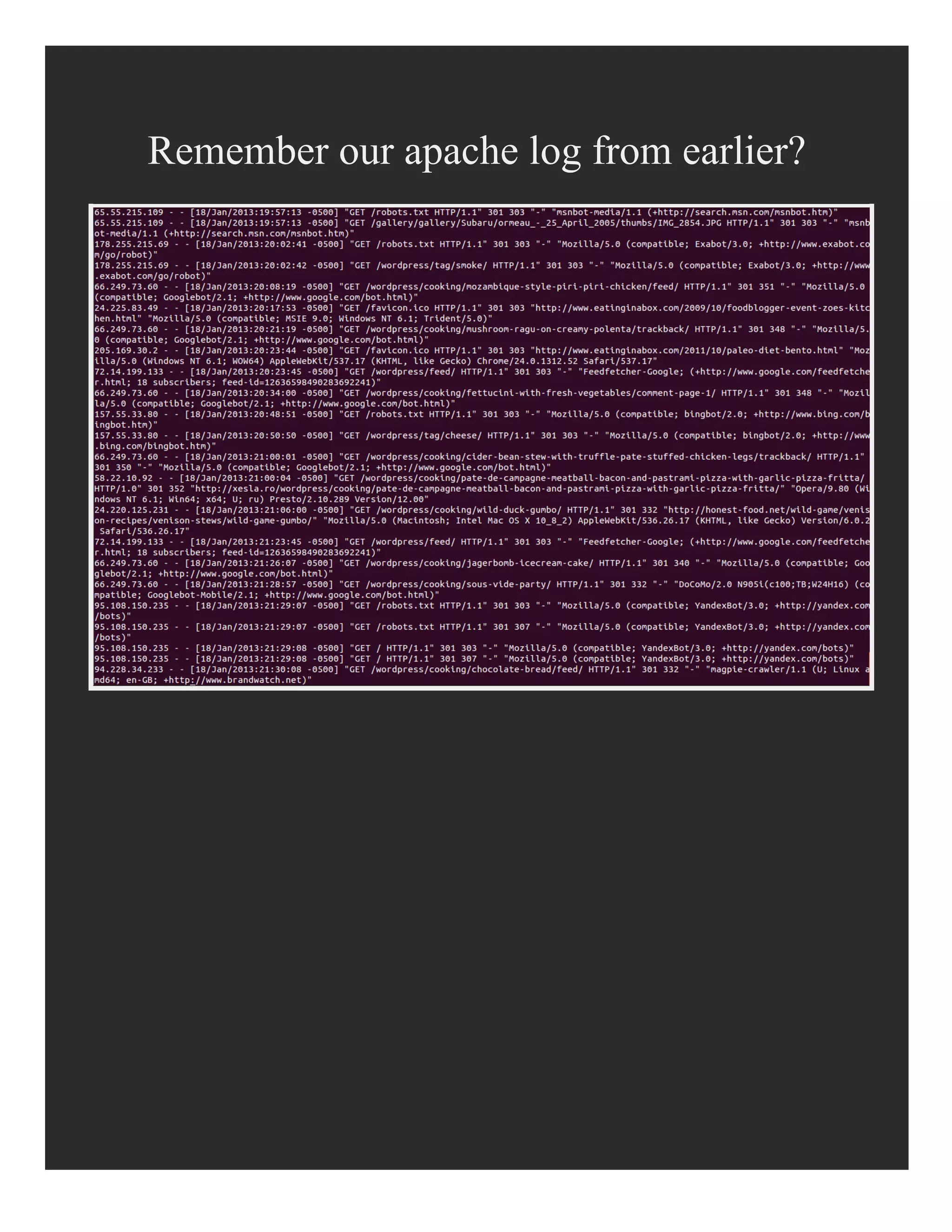

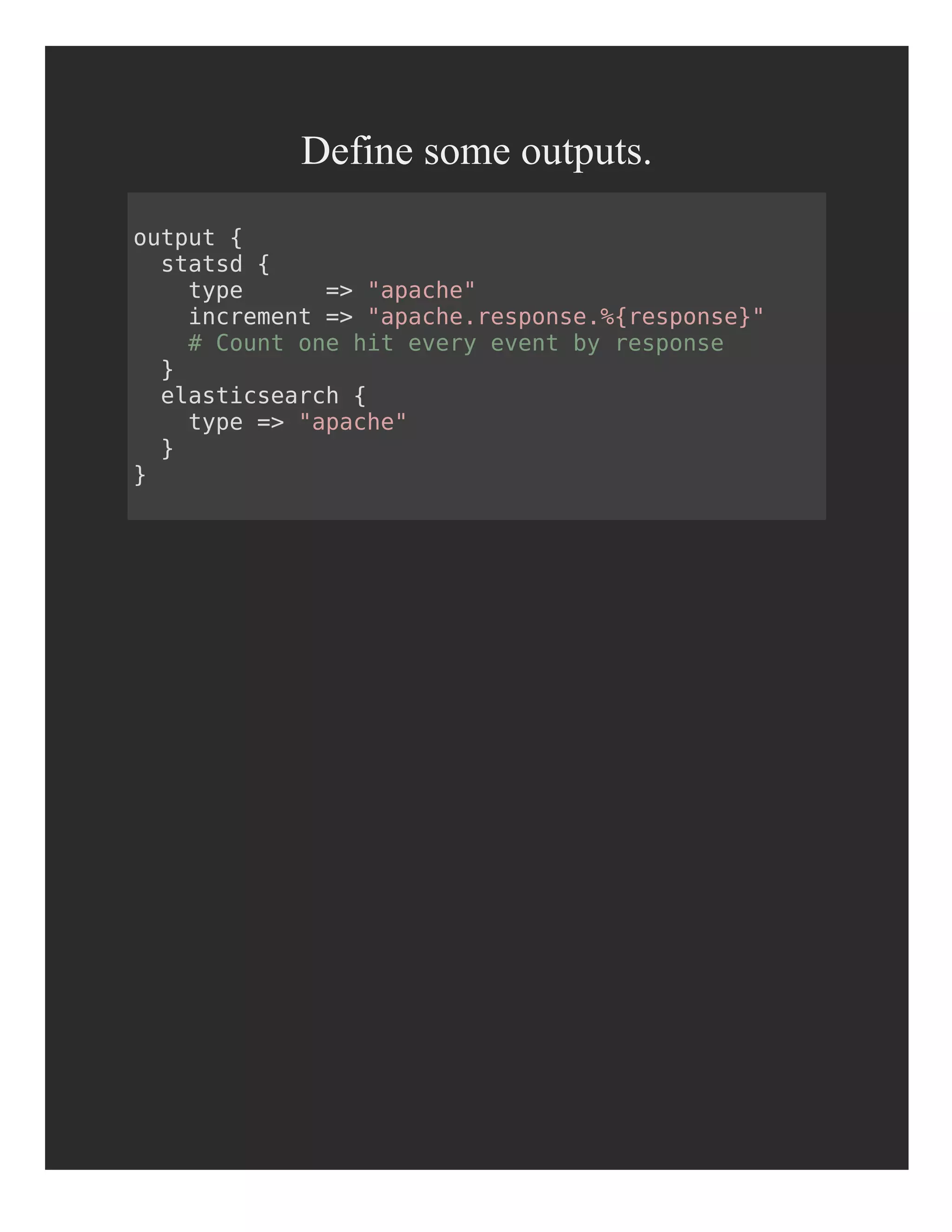

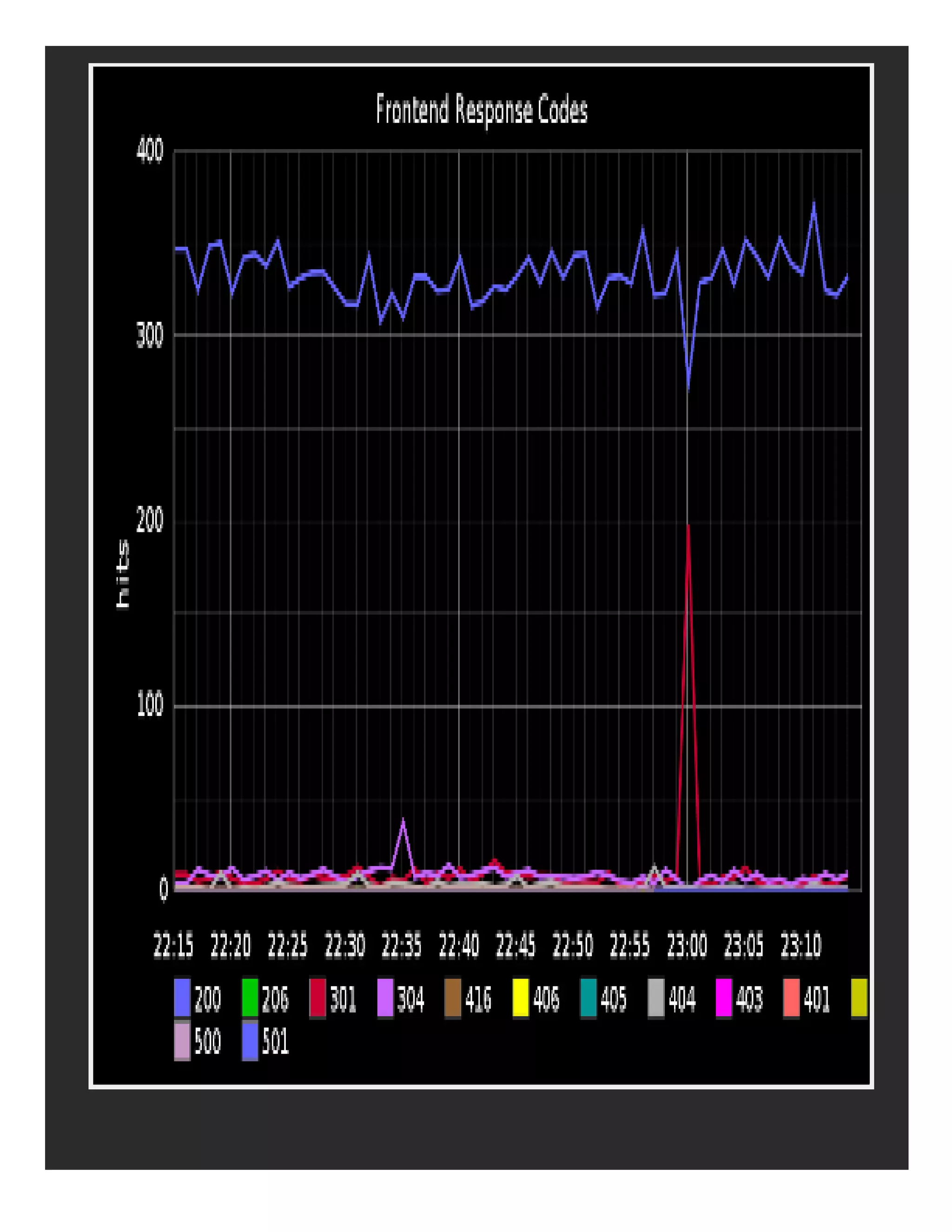

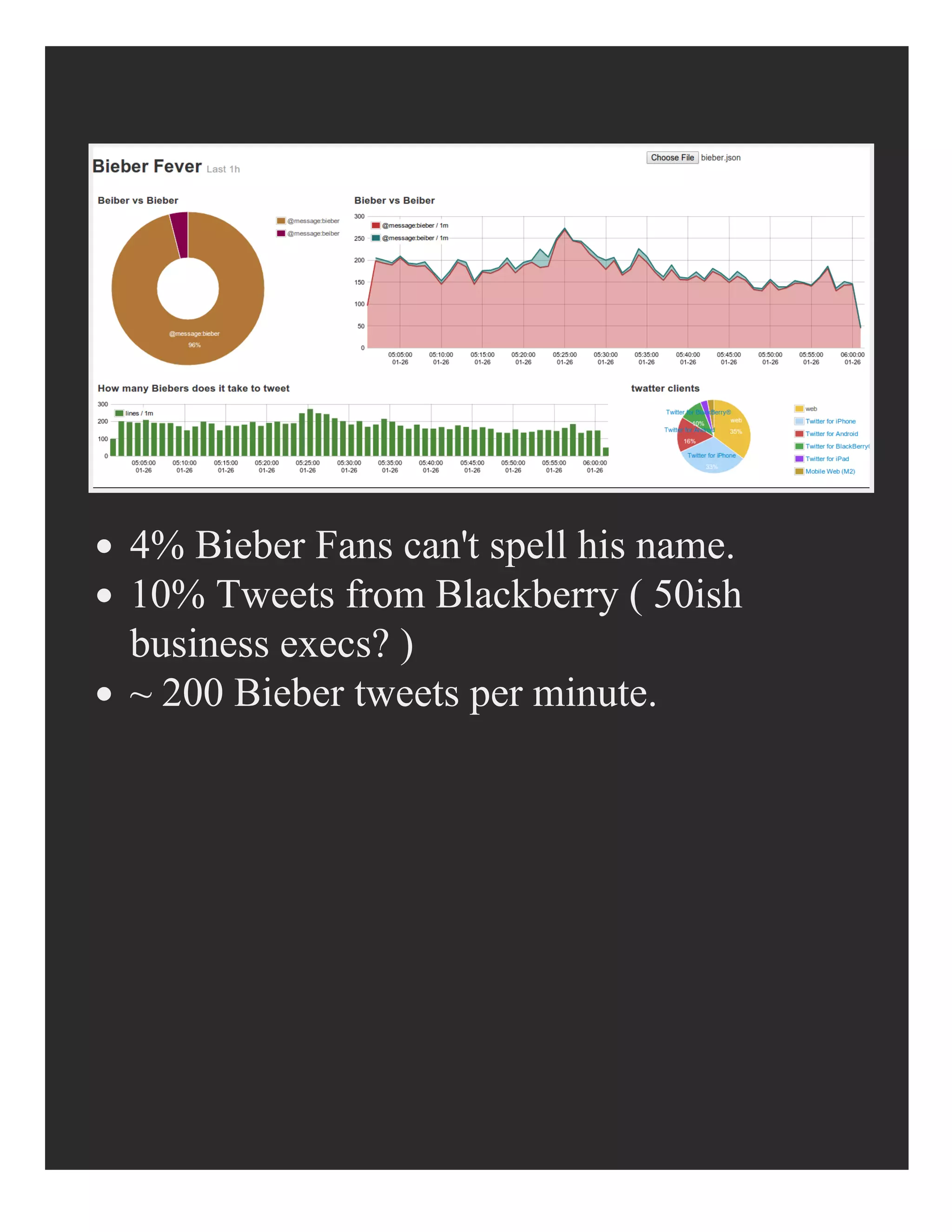

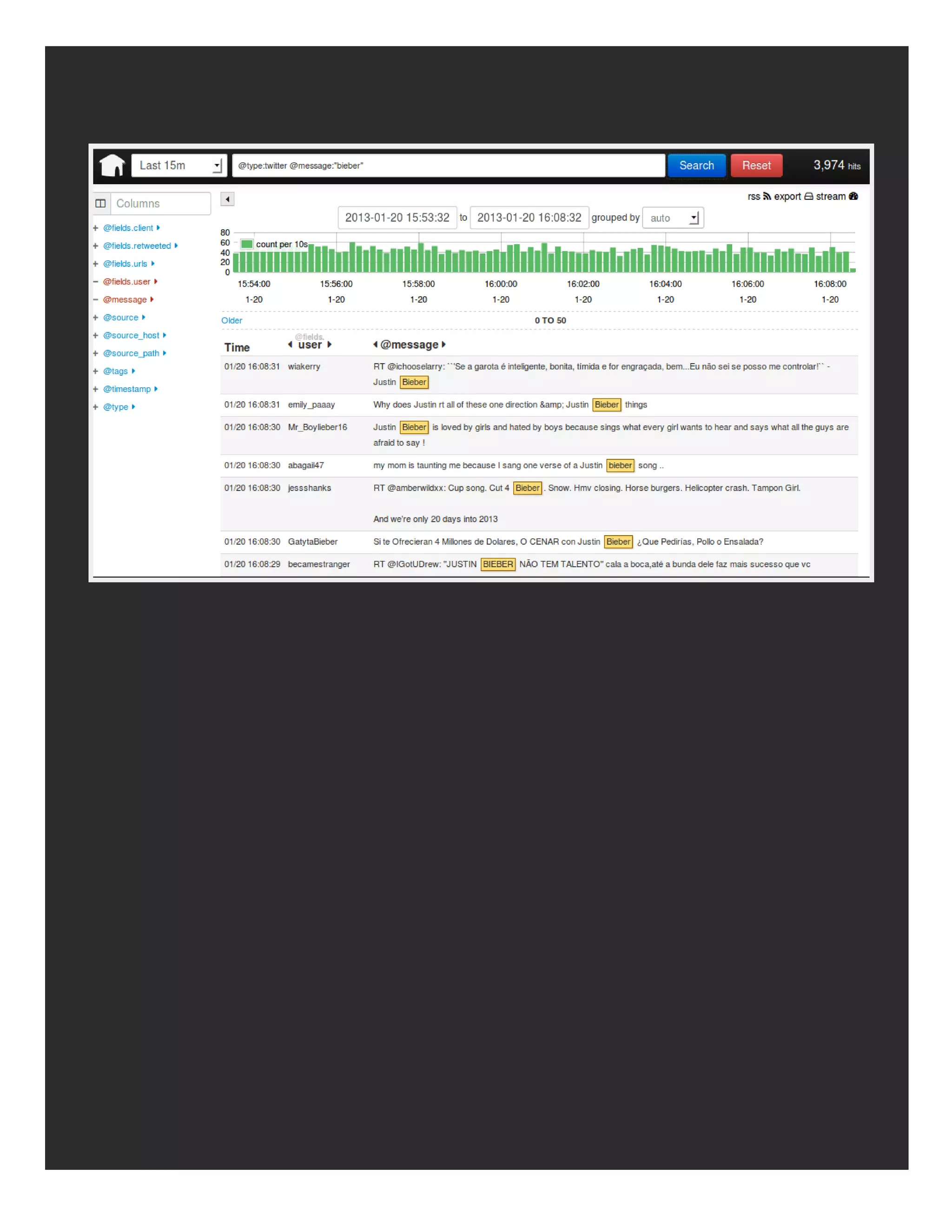

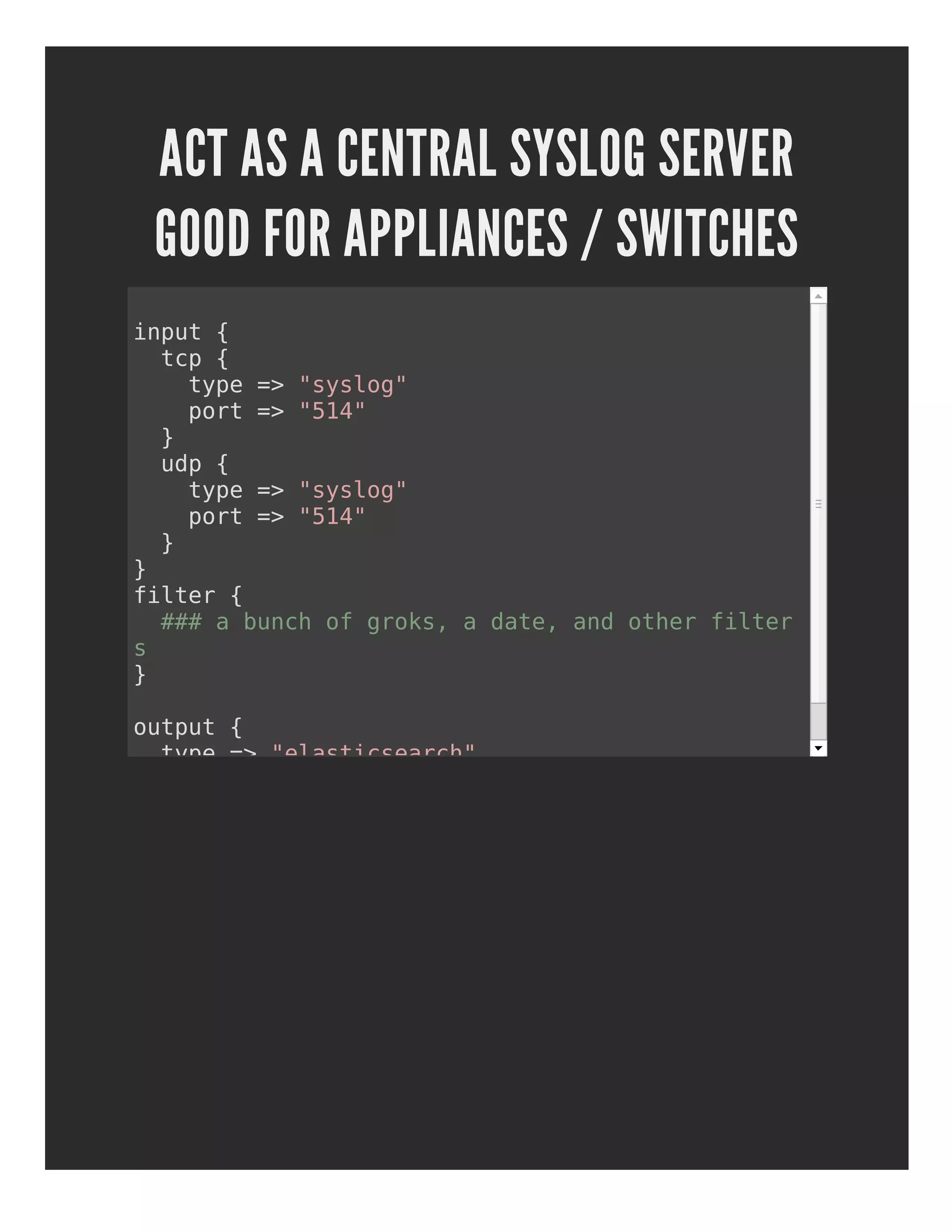

- It uses plugins to handle the input, filtering, and output of logs. Common inputs include files, TCP, and Twitter streams. Filter plugins such as grok and date parse and structure log data. Outputs include Elasticsearch, StatsD, and cloud services.
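The input, filter, output flow can be pictured as three chained stages. The following is a minimal Python sketch of that idea, not Logstash's actual implementation; the function names (`read_input`, `add_length`, `collect_output`, `run_pipeline`) and the event dictionary layout are assumptions made for illustration.

```python
from typing import Dict, Iterable, Iterator, List

Event = Dict[str, object]


def read_input(lines: Iterable[str], event_type: str) -> Iterator[Event]:
    """Input stage: wrap each raw line in an event (hypothetical layout)."""
    for line in lines:
        yield {"type": event_type, "message": line.rstrip("\n")}


def add_length(events: Iterable[Event]) -> Iterator[Event]:
    """Filter stage: enrich each event with a derived field."""
    for event in events:
        enriched = dict(event)
        enriched["length"] = len(str(event["message"]))
        yield enriched


def collect_output(events: Iterable[Event]) -> List[Event]:
    """Output stage: collect events; a real output would ship them elsewhere."""
    return list(events)


def run_pipeline(lines: Iterable[str]) -> List[Event]:
    """Chain the three stages, mirroring input -> filter -> output."""
    return collect_output(add_length(read_input(lines, "demo")))
```

Each stage only sees events, so stages can be swapped independently, which is roughly what the plugin model offers.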

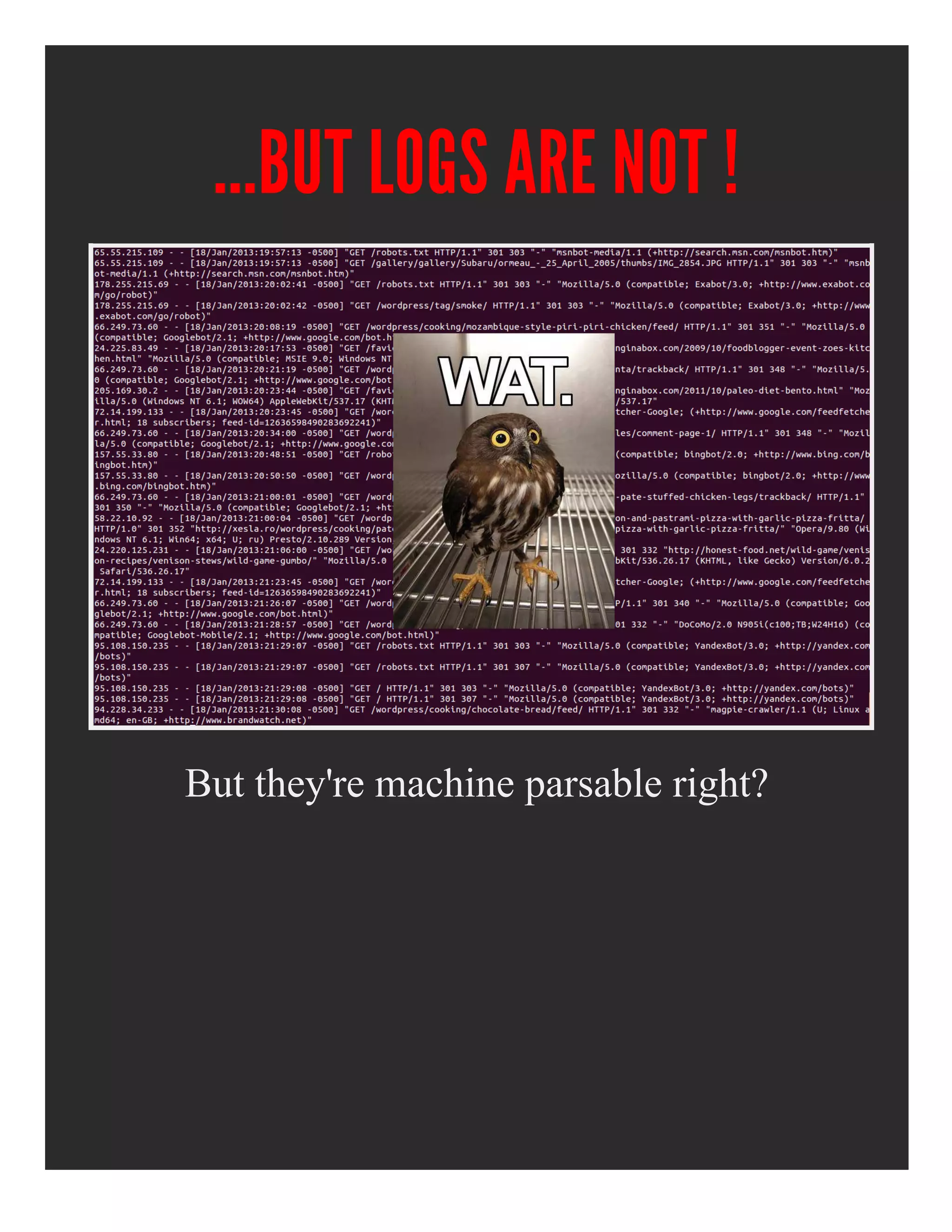

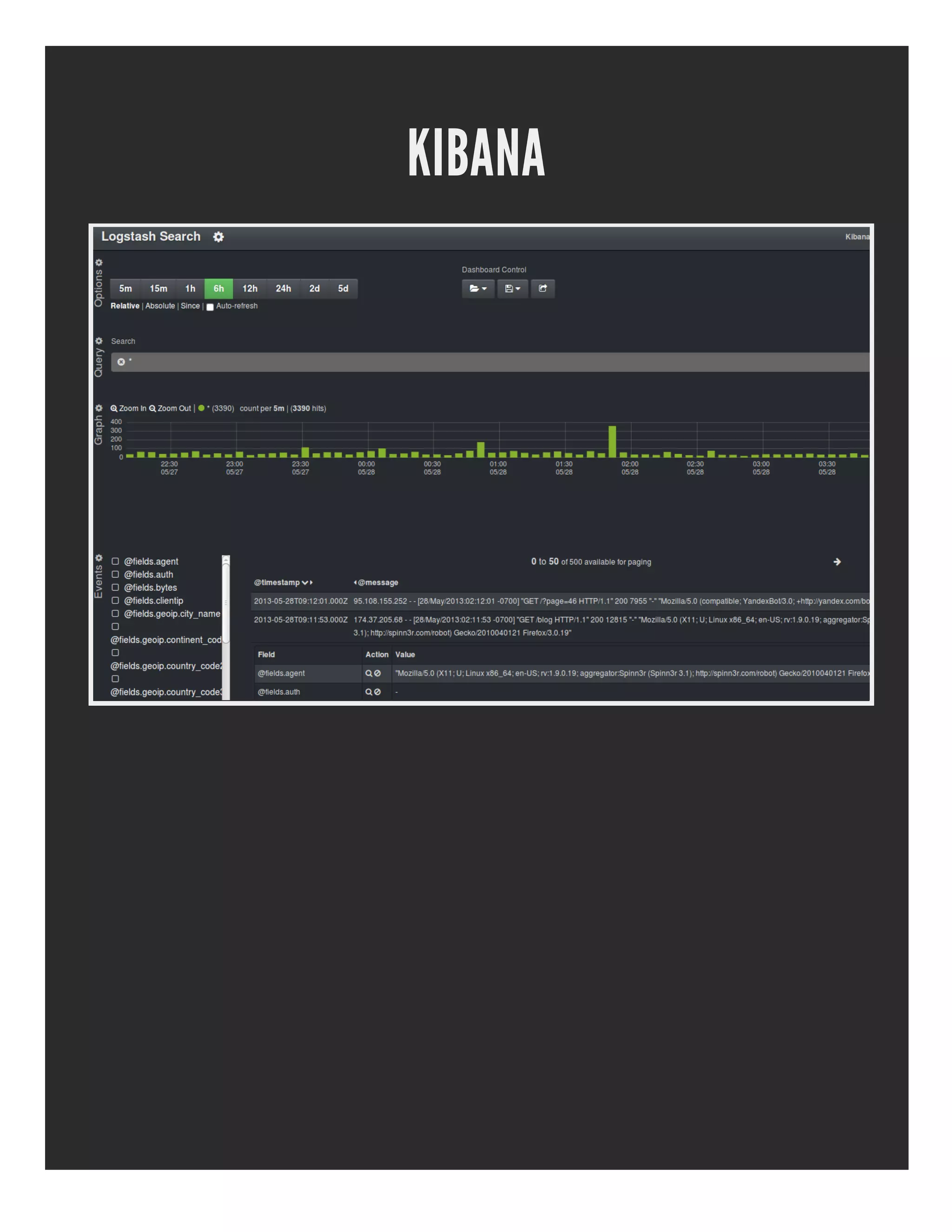

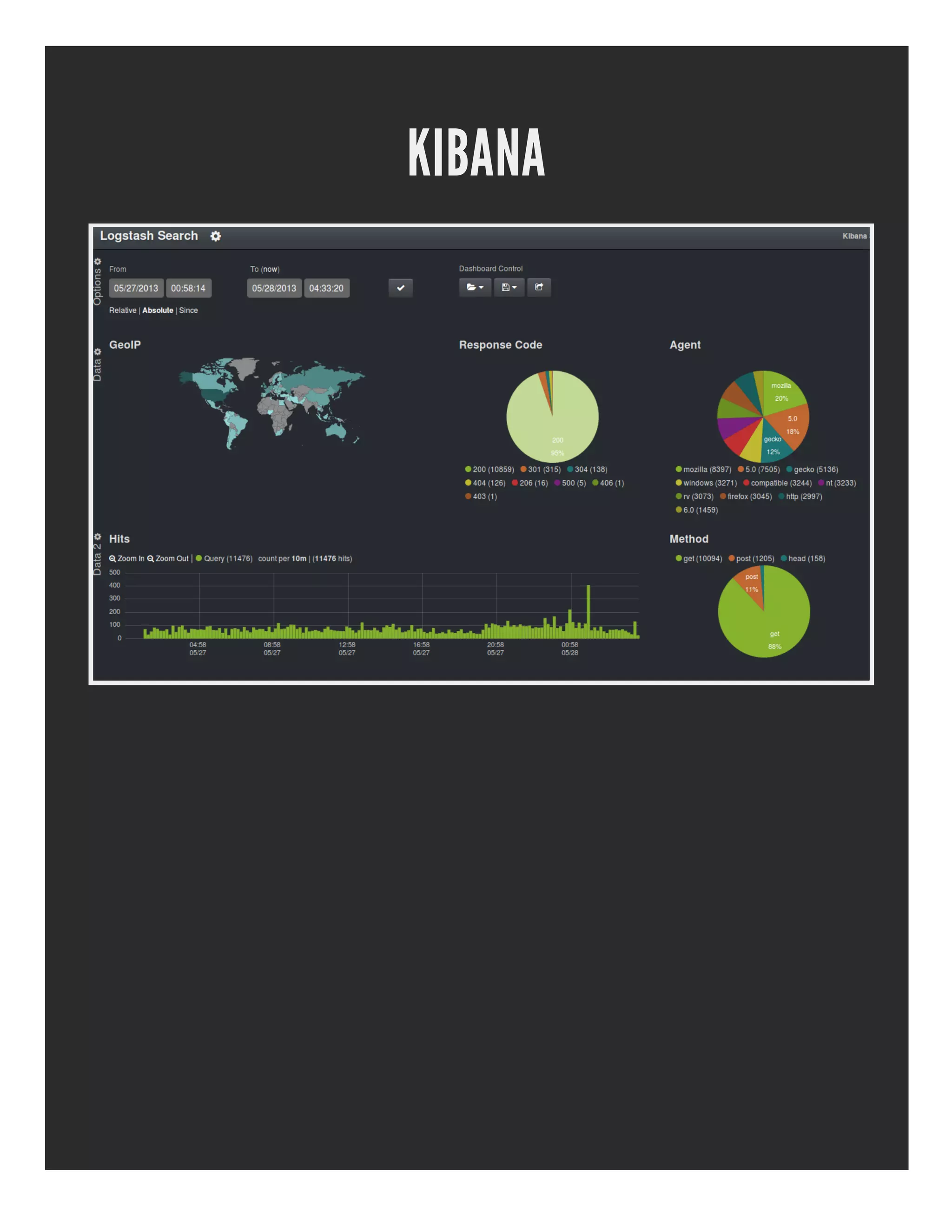

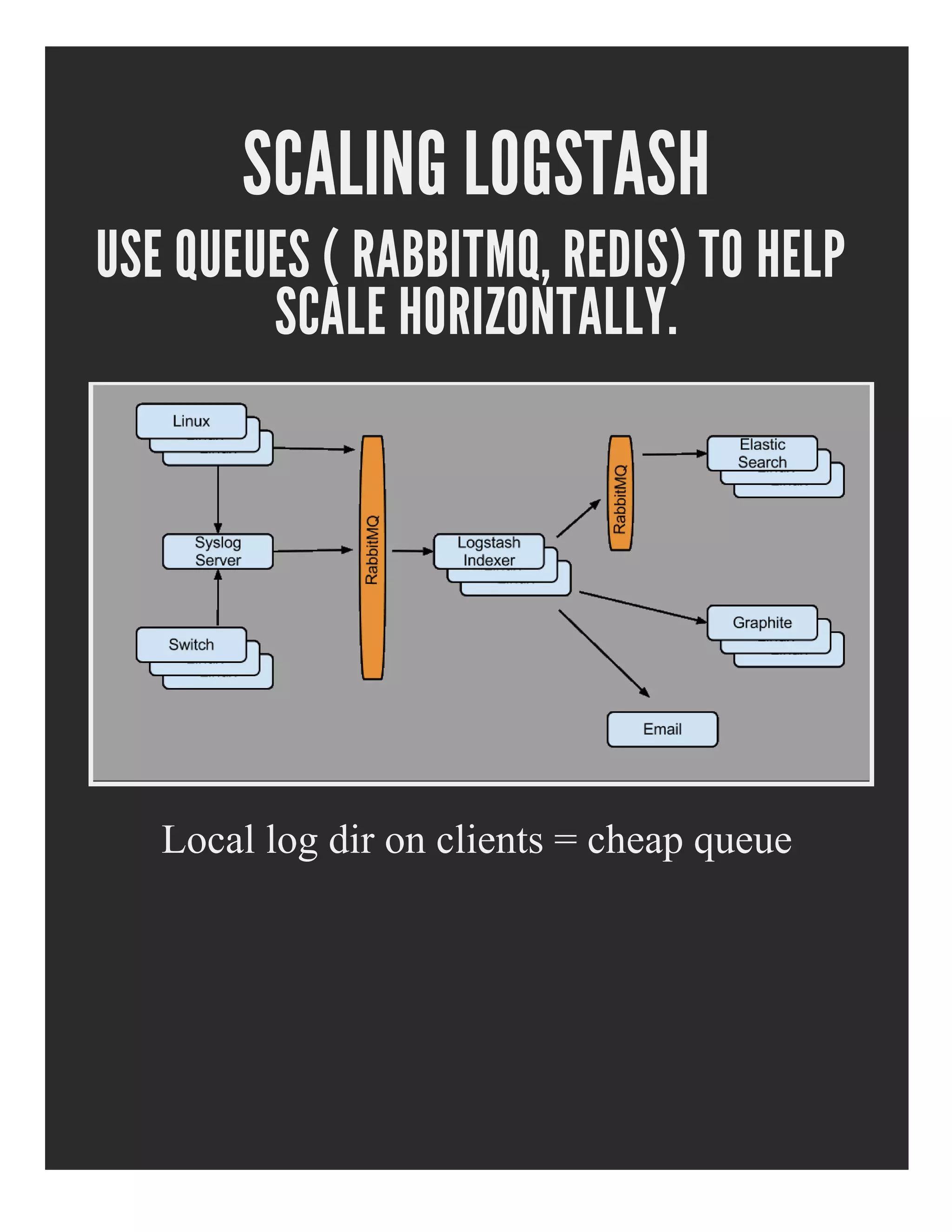

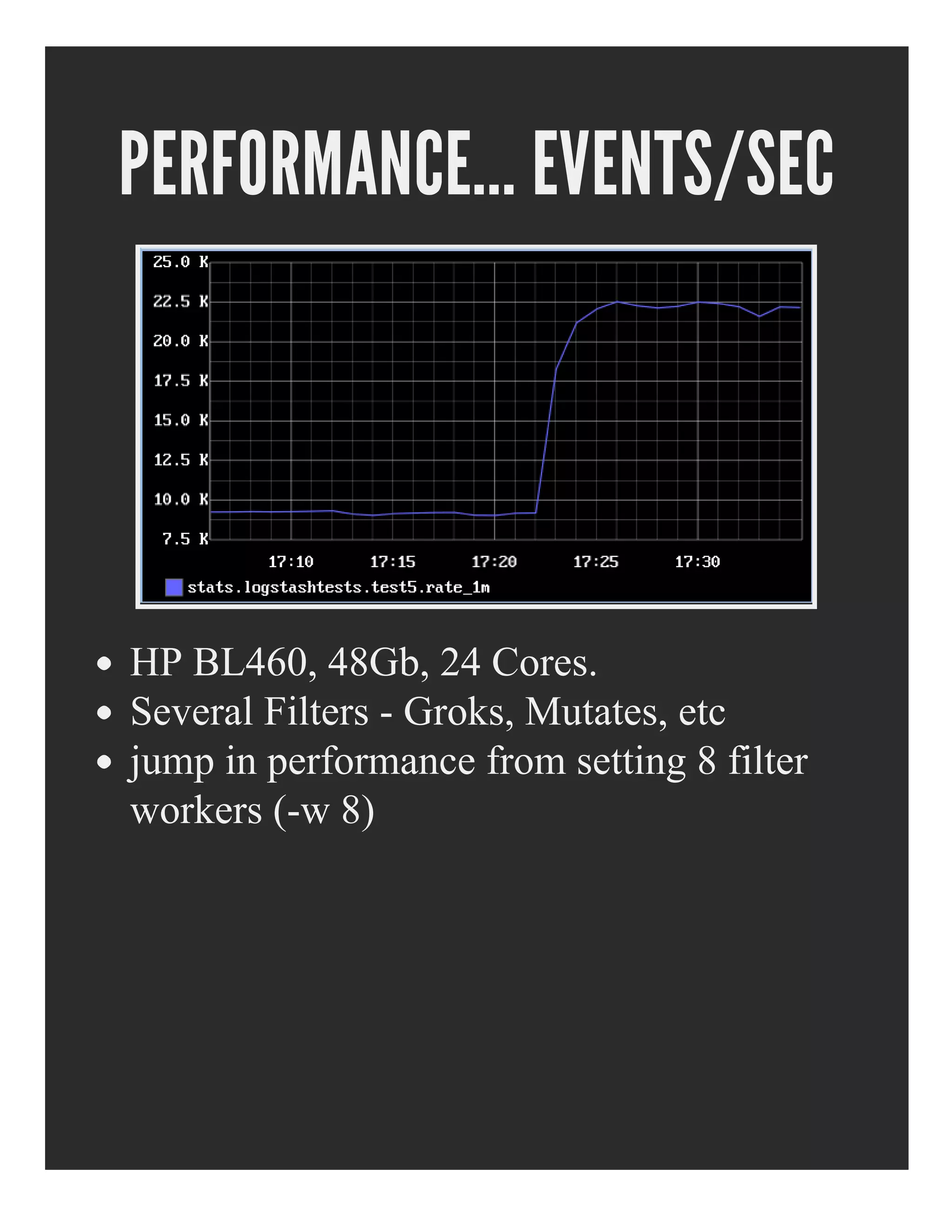

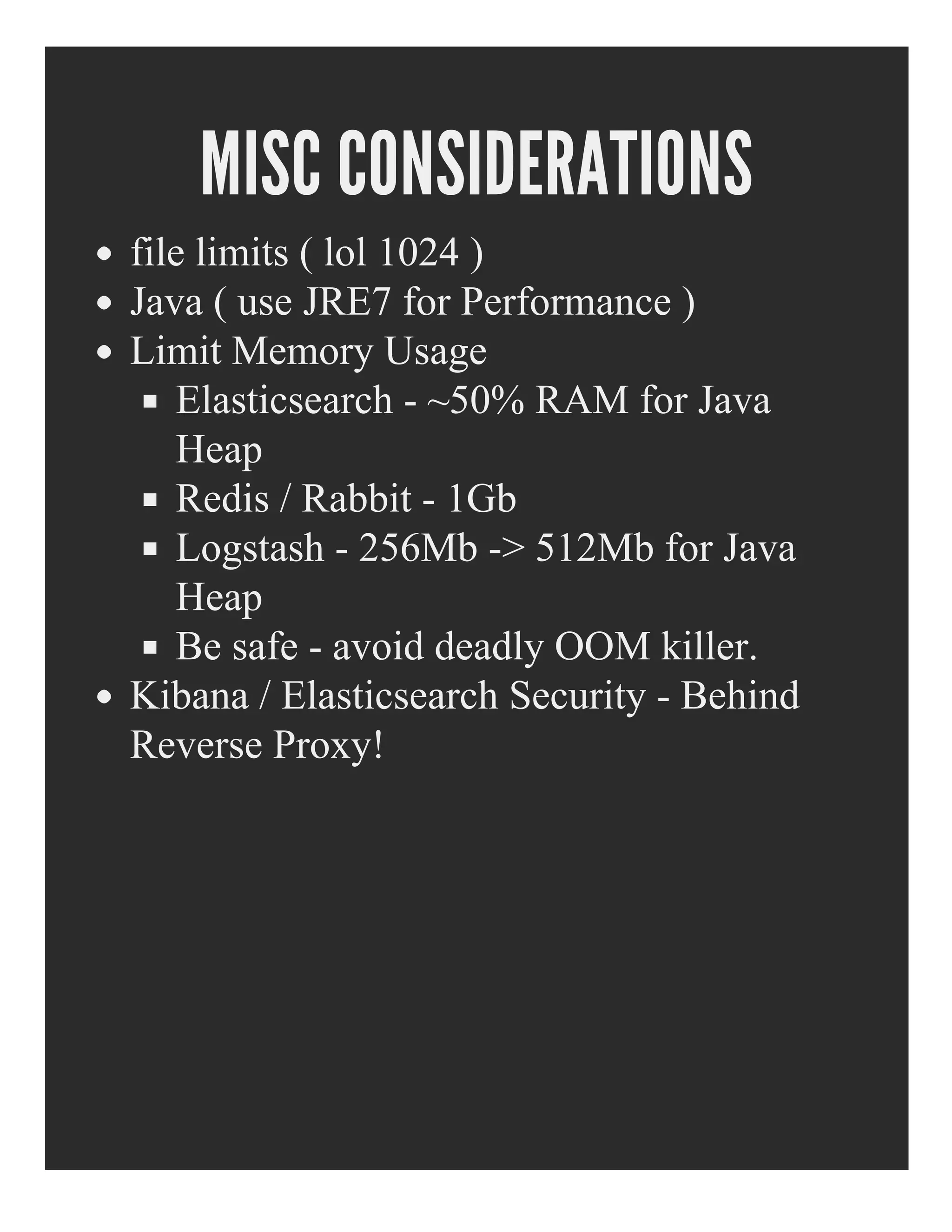

- Logstash can be used to centralize logging from diverse systems, analyze social media streams, and capture system metrics. It makes logs easier for machines to parse and enables capabilities such as visualization with Kibana. Scaling Logstash horizontally requires techniques such as queues, which let several instances share the processing load.
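The queue-based scaling idea can be illustrated in-process. This is only a sketch under stated assumptions: a thread-safe `queue.Queue` stands in for an external queue, worker threads stand in for separate Logstash instances, and `process_with_workers` is a hypothetical name. It assumes at least one worker.

```python
import queue
import threading
from typing import List


def process_with_workers(lines: List[str], worker_count: int) -> List[str]:
    """Fan lines out to several workers through one shared queue."""
    work = queue.Queue()
    results: List[str] = []
    lock = threading.Lock()

    def worker() -> None:
        while True:
            line = work.get()
            if line is None:  # sentinel: no more work for this worker
                break
            processed = line.upper()  # stand-in for parsing and enrichment
            with lock:
                results.append(processed)

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()
    for line in lines:
        work.put(line)
    for _ in threads:
        work.put(None)  # one sentinel per worker
    for thread in threads:
        thread.join()
    return results
```

Results arrive in no guaranteed order, which is the usual trade-off when work is spread across several consumers.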

![WHAT IS A LOG?
A log is a human readable, machine parsable representation of an event.
LOG = TIMESTAMP + DATA
Jan 19 13:01:13 paulczlaptop anacron[7712]: Normal exit (0 jobs run)
120607 14:07:00 InnoDB: Starting an apply batch of log records to the database...
[1225306053] SERVICE ALERT: FTPSERVER;FTP SERVICE;OK;SOFT;2;FTP OK 0.029 second response time on port 21 [220 ProFTPD 1.3.1 Server ready.]
[Sat Jan 19 01:04:25 2013] [error] [client 78.30.200.81] File does not exist: /opt/www/vhosts/crappywebsite/html/robots.txt](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-4-2048.jpg)
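The "LOG = TIMESTAMP + DATA" idea can be shown on the first sample line. This is a minimal sketch that only understands the syslog-style prefix (`Jan 19 13:01:13`); the name `split_log` and the regular expression are illustrative assumptions, and the other sample formats on the slide would each need their own pattern.

```python
import re
from typing import Optional, Tuple

# Matches a syslog-style prefix such as "Jan 19 13:01:13", then the rest.
SYSLOG_LINE = re.compile(
    r"^(?P<timestamp>[A-Z][a-z]{2}\s+\d{1,2} \d{2}:\d{2}:\d{2}) (?P<data>.*)$"
)


def split_log(line: str) -> Optional[Tuple[str, str]]:
    """Return (timestamp, data), or None if the prefix is not recognised."""
    match = SYSLOG_LINE.match(line)
    if match is None:
        return None
    return match.group("timestamp"), match.group("data")
```

That one pattern per format is needed is exactly the maintenance burden the later slides complain about.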

![A LOG IS HUMAN READABLE...
“A human readable, machine parsable representation of an event.”
208.115.111.74 - - [13/Jan/2013:04:28:55 -0500] "GET /robots.txt HTTP/1.1" 301 303 "-" "Mozilla/5.0 (compatible; Ezooms/1.0; ezooms.bot@gmail.com)"](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-6-2048.jpg)

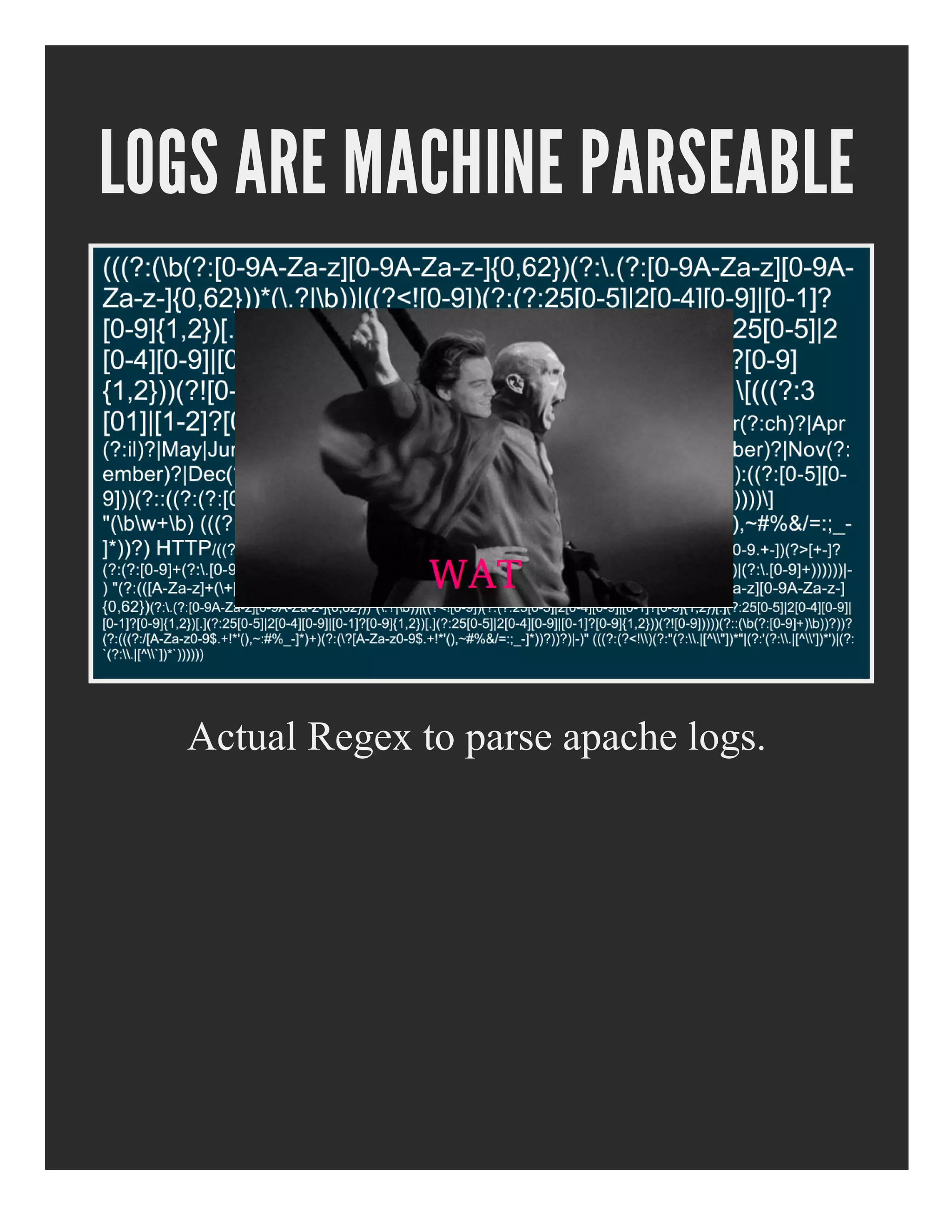

![LOGS ARE MACHINE PARSEABLE
208.115.111.74 - - [13/Jan/2013:04:28:55 -0500] "GET /robots.txt HTTP/1.1" 301 303 "-" "Mozilla/5.0 (compatible; Ezooms/1.0; ezooms.bot@gmail.com)"](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-9-2048.jpg)

![LOGS ARE MACHINE PARSEABLE
Users will now call PERL Ninja to solve every problem they have: Hero Syndrome.
Does it work for every possible log line?
Who's going to maintain that shit?
Is it even useful without being surrounded by [bad] sysadmin scripts?](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-12-2048.jpg)

![SO WE AGREE ... THIS IS BAD.
208.115.111.74 - - [13/Jan/2013:04:28:55 -0500] "GET /robots.txt HTTP/1.1" 301 303 "-" "Mozilla/5.0 (compatible; Ezooms/1.0; ezooms.bot@gmail.com)"](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-13-2048.jpg)

![LOGSTASH TURNS THIS
208.115.111.74 - - [13/Jan/2013:04:28:55 -0500] "GET /robots.txt HTTP/1.1" 301 303 "-" "Mozilla/5.0 (compatible; Ezooms/1.0; ezooms.bot@gmail.com)"
INTO THAT
{
  "client address": "208.115.111.74",
  "user": null,
  "timestamp": "2013-01-13T04:28:55-0500",
  "verb": "GET",
  "path": "/robots.txt",
  "query": null,
  "http version": 1.1,
  "response code": 301,
  "bytes": 303,
  "referrer": null,
  "user agent": "Mozilla/5.0 (compatible; Ezooms/1.0; ezooms.bot@gmail.com)"
}](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-14-2048.jpg)
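To make the "this into that" transformation concrete, here is a rough Python sketch that turns the sample access-log line into a structured record. It is not how Logstash does it internally. The regular expression, the function name `parse_access_line`, and the chosen field names are assumptions for illustration, and the sketch only handles lines shaped like the sample.

```python
import re
from datetime import datetime
from typing import Dict, Optional

# Hand-written pattern for lines shaped like the sample (an assumption,
# not the pattern Logstash ships).
ACCESS_LINE = re.compile(
    r'^(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"$'
)


def dash_to_none(value: str) -> Optional[str]:
    """Access logs use "-" for missing values; map that to None."""
    return None if value == "-" else value


def parse_access_line(line: str) -> Optional[Dict[str, object]]:
    """Return a structured record, or None if the line does not match."""
    match = ACCESS_LINE.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    when = datetime.strptime(fields["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    return {
        "clientip": fields["clientip"],
        "user": dash_to_none(fields["auth"]),
        "timestamp": when.isoformat(),
        "verb": fields["verb"],
        "path": fields["request"],
        "httpversion": fields["httpversion"],
        "response": int(fields["response"]),
        "bytes": None if fields["bytes"] == "-" else int(fields["bytes"]),
        "referrer": dash_to_none(fields["referrer"]),
        "agent": fields["agent"],
    }
```

Passing the result to `json.dumps` would give output close to the JSON on the slide, with `None` rendered as `null`.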

![FILTER - GROK
Grok parses arbitrary text and structures it.
Makes complex regex patterns simple.
USERNAME [a-zA-Z0-9_-]+
USER %{USERNAME}
INT (?:[+-]?(?:[0-9]+))
MONTH \b(?:Jan(?:uary)?|Feb(?:ruary)?|Mar(?:ch)?|Apr(?:il)?|May|Jun(?:e)?|Jul(?:y)?|Aug(?:ust)?|Sep(?:tember)?|Oct(?:ober)?|Nov(?:ember)?|Dec(?:ember)?)\b
DAY (?:Mon(?:day)?|Tue(?:sday)?|Wed(?:nesday)?|Thu(?:rsday)?|Fri(?:day)?|Sat(?:urday)?|Sun(?:day)?)
COMBINEDAPACHELOG %{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|-)" %{NUMBER:response} (?:%{NUMBER:bytes}|-) %{QS:referrer} %{QS:agent}](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-21-2048.jpg)
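The way named patterns compose can be sketched in a few lines of Python. This is a toy expander, not the real grok library: `expand`, `PATTERNS`, and `REFERENCE` are hypothetical names, only three of the patterns from the slide are included, and there is no protection against patterns that reference themselves.

```python
import re
from typing import Dict

# A small subset of the pattern definitions shown on the slide.
PATTERNS: Dict[str, str] = {
    "USERNAME": r"[a-zA-Z0-9_-]+",
    "USER": r"%{USERNAME}",
    "INT": r"(?:[+-]?(?:[0-9]+))",
}

# Matches %{NAME} and %{NAME:field} references.
REFERENCE = re.compile(r"%\{(?P<name>\w+)(?::(?P<field>\w+))?\}")


def expand(pattern: str, patterns: Dict[str, str] = PATTERNS) -> str:
    """Recursively replace %{NAME:field} references with plain regex."""

    def replace(match: "re.Match") -> str:
        body = expand(patterns[match.group("name")], patterns)
        field = match.group("field")
        if field:
            return f"(?P<{field}>{body})"  # named capture for the field
        return f"(?:{body})"  # no field name: non-capturing group

    return REFERENCE.sub(replace, pattern)
```

A usage example: `re.compile(expand("%{USER:user} sent %{INT:count}"))` yields a regex whose named groups are `user` and `count`, which is the sense in which grok "makes complex regex patterns simple".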

![Define Inputs and Filters.
input {
  file {
    type => "apache"
    path => ["/var/log/httpd/httpd.log"]
  }
}
filter {
  grok {
    type => "apache"
    pattern => "%{COMBINEDAPACHELOG}"
  }
  date {
    type => "apache"
  }
  geoip {
    type => "apache"
  }
}](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-23-2048.jpg)

![LOGSTASH - TWITTER INPUT
input {
  twitter {
    type => "twitter"
    keywords => ["bieber","beiber"]
    user => "username"
    password => "*******"
  }
}
output {
  elasticsearch {
    type => "twitter"
  }
}](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-31-2048.jpg)

![ALREADY HAVE CENTRAL RSYSLOG/SYSLOG-NG SERVER?
input {
  file {
    type => "syslog"
    path => ["/data/rsyslog/**/*.log"]
  }
}
filter {
  ### a bunch of groks, a date, and other filters
}
output {
  elasticsearch { }
}](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-35-2048.jpg)

![IN CLOUD? DON'T OWN NETWORK?
Use an encrypted Transport
Logstash Agent:
input { file { ... } }
output {
  lumberjack {
    hosts => ["logstash-indexer1", "logstash-indexer2"]
    ssl_certificate => "/etc/ssl/logstash.crt"
  }
}
Logstash Indexer:
input {
  lumberjack {
    ssl_certificate => "/etc/ssl/logstash.crt"
    ssl_key => "/etc/ssl/logstash.key"
  }
}
output { elasticsearch {} }](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-37-2048.jpg)

![SYSTEM METRICS?
input {
  exec {
    type => "system-loadavg"
    command => "cat /proc/loadavg | awk '{print $1,$2,$3}'"
    interval => 30
  }
}
filter {
  grok {
    type => "system-loadavg"
    pattern => "%{NUMBER:load_avg_1m} %{NUMBER:load_avg_5m} %{NUMBER:load_avg_15m}"
    named_captures_only => true
  }
}
output {
  graphite {
    host => "10.10.10.10"
    port => 2003
    type => "system-loadavg"
    metrics => [ "hosts.%{@source_host}.load_avg.1m", "%{load_avg_1m}",
                 "hosts.%{@source_host}.load_avg.5m", "%{load_avg_5m}",
                 "hosts.%{@source_host}.load_avg.15m", "%{load_avg_15m}" ]
  }
}](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-38-2048.jpg)
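For readers unfamiliar with what ends up on the wire, the sketch below builds the kind of lines Graphite's plaintext protocol expects (`metric.path value timestamp`) from the text of `/proc/loadavg`. It is an illustration under assumptions, not the graphite output plugin itself: `graphite_lines` is a hypothetical helper, the host name is passed in by the caller, and no socket is opened.

```python
from typing import List


def graphite_lines(host: str, loadavg_text: str, timestamp: int) -> List[str]:
    """Build one plaintext-protocol line per load average window."""
    # /proc/loadavg starts with the 1, 5 and 15 minute load averages.
    one, five, fifteen = loadavg_text.split()[:3]
    windows = [("1m", one), ("5m", five), ("15m", fifteen)]
    return [
        f"hosts.{host}.load_avg.{window} {float(value)} {timestamp}"
        for window, value in windows
    ]
```

Note that a host name containing dots would create extra levels in the metric path, so real setups often replace the dots first.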

![UNIQUE PROBLEM TO SOLVE?
write a logstash module!
Input Snmptrap
Filter Translate
Can do powerful things with [ boilerplate + ] a few lines of ruby](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-39-2048.jpg)

![FURTHER READING
http://www.logstash.net/
http://www.logstashbook.com/ [James Turnbull]
https://github.com/paulczar/vagrantlogstash
http://jujucharms.com/charms/precise/logstash
indexer
Logstash Puppet (github/electrical)
Logstash Chef (github/lusis)](https://image.slidesharecdn.com/brisdevops-logstash-130530213448-phpapp02/75/Brisbane-DevOps-Meetup-Logstash-44-2048.jpg)