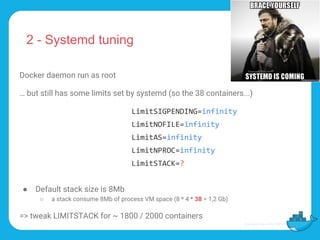

The document summarizes how three people set out to break the RPiDocker challenge: running as many Docker containers as possible on a single Raspberry Pi 2. They took a methodical approach, measuring performance and automating the setup. Key steps included tuning systemd and Docker, using a highly optimized web server, and working around the RPi2's network namespace limit. By collaborating and sharing ideas, they eventually started 2499 containers. At that point the Docker daemon crashed on the Go runtime's 10000-thread limit, even though RAM was not yet exhausted. Working around that limit showed that nearly 2740 containers could run before memory ran out.

![Slide: Challenge completed](https://image.slidesharecdn.com/breakingtherpidockerchallenge1-151116230728-lva1-app6891/85/Breaking-the-RpiDocker-challenge-18-320.jpg)

Challenge completed:

- We started 2499 containers!
- RAM on the RPi2 was not exhausted, but the Docker daemon crashed with `docker[307]: runtime: program exceeds 10000-thread limit`.
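The crash message on the slide is a fatal error from the Go runtime. By default, the runtime aborts a program once it uses more than 10000 OS threads. The summary does not say how the authors worked around this. As a sketch only, the Go standard library exposes the cap through `runtime/debug.SetMaxThreads`. The helper name `raiseThreadLimit` and the value 20000 are hypothetical, and this is not necessarily what was changed in the Docker daemon used for the challenge.

```go
package main

import (
	"fmt"
	"runtime/debug"
)

// raiseThreadLimit is a hypothetical helper: it sets the Go runtime's cap on
// OS threads (10000 by default) to n and returns the previous cap.
// A program that exceeds the cap is killed with
// "runtime: program exceeds N-thread limit".
func raiseThreadLimit(n int) int {
	return debug.SetMaxThreads(n)
}

func main() {
	// 20000 is an illustrative value, not one taken from the challenge.
	prev := raiseThreadLimit(20000)
	fmt.Println("previous thread limit:", prev)
}
```

Raising the cap only moves the ceiling. Each extra thread still costs memory for its stack, which is consistent with the summary's observation that memory became the next limit at around 2740 containers.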