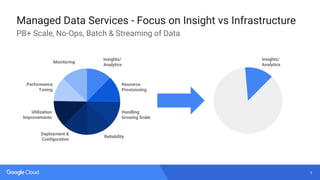

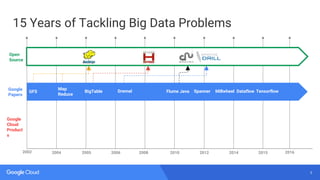

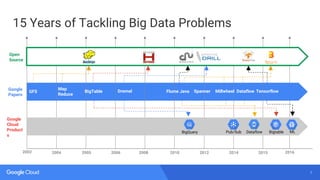

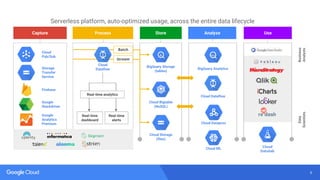

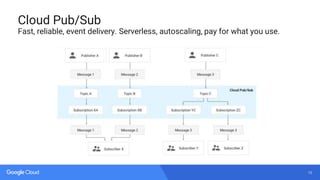

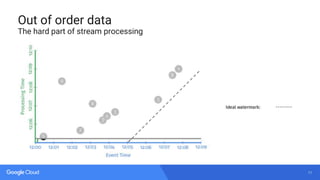

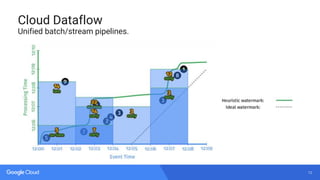

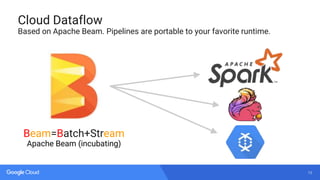

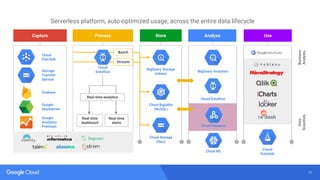

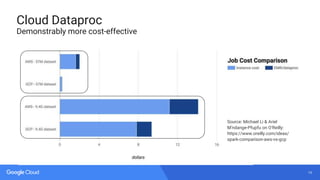

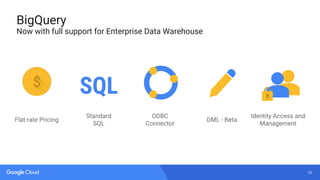

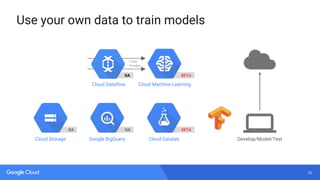

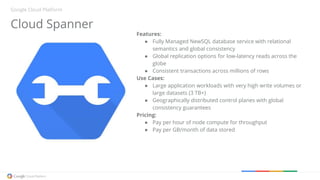

Google provides a suite of data and analytics services that help businesses manage large datasets and derive insights from them. These include BigQuery for data warehousing and SQL analytics, Cloud Dataproc for running Apache Spark and Hadoop batch workloads, Cloud Dataflow for stream and batch data processing, Cloud Pub/Sub for event ingestion and delivery, and Cloud Bigtable as a large-scale NoSQL database. Google built these services on more than 15 years of its own experience managing big data.