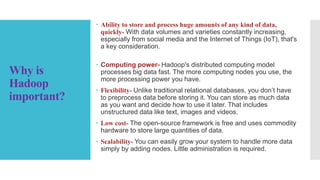

Hadoop is an open-source software framework for storing and processing large data sets across clusters of commodity hardware. Its main advantages are low cost, scalability, and flexibility, but it also poses challenges in data management, governance, and security. Hadoop is increasingly integrated with data warehouses and is important for industries that manage very large volumes of data, including data generated by IoT applications.
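
To make "processing across distributed computing environments" more concrete, here is a minimal sketch of the map, shuffle, and reduce pattern that Hadoop's MapReduce model is built around. It runs in a single Python process and does not use any Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) and the sample input are assumptions made for illustration only. In a real cluster, each chunk would be a block of a file stored on a different node, and the phases would run in parallel.

```python
from collections import defaultdict


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of input."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(pairs):
    """Group all emitted values by key, as the framework would between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Combine the values for each key into a single count."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(chunks):
    """Run map over every chunk, then shuffle and reduce the results."""
    pairs = [pair for chunk in chunks for pair in map_phase(chunk)]
    return reduce_phase(shuffle(pairs))


# Hypothetical input: each string stands in for a block held on a separate node.
chunks = ["big data big clusters", "data on commodity hardware"]
counts = word_count(chunks)
print(counts)
```

Because each call to `map_phase` depends only on its own chunk, the map work can be spread across many inexpensive machines, which is the property that lets this style of processing scale by adding hardware.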