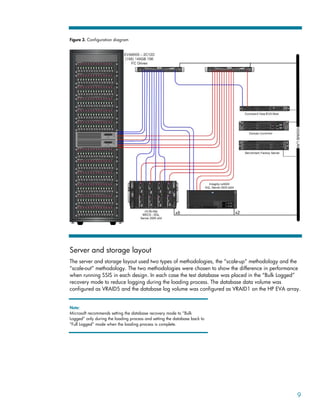

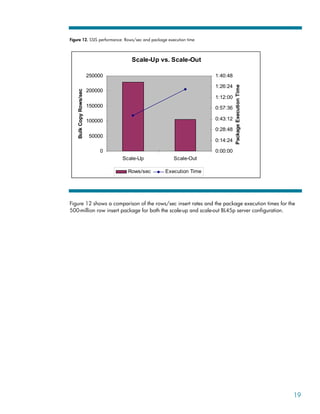

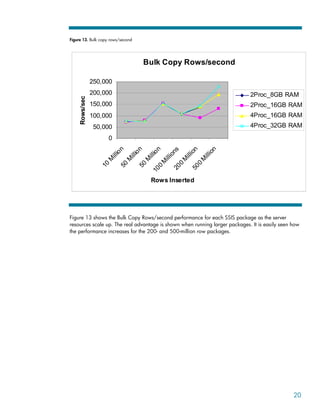

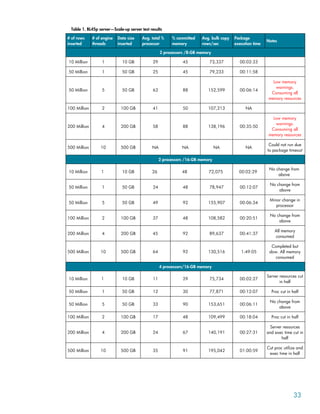

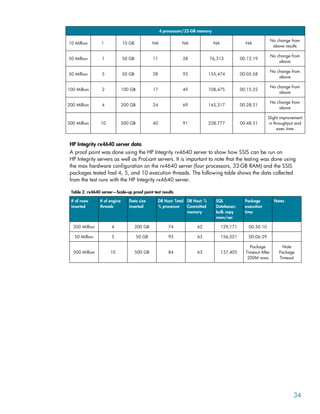

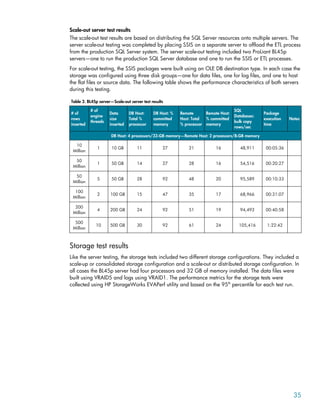

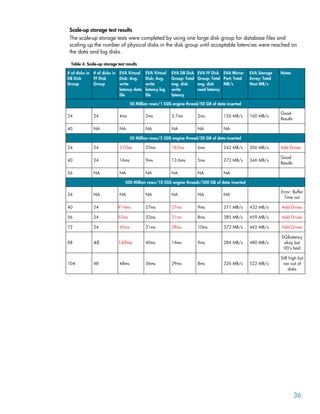

This document outlines best practices for managing Microsoft SQL Server 2005 databases on HP servers and storage, with a focus on SQL Server Integration Services (SSIS). It describes the factors that affect SSIS performance, the testing methodology used, and the configurations that optimize SSIS execution, including the choice between scaling up and scaling out. The key finding is that tailoring the server configuration and managing storage appropriately significantly improve SSIS performance. Together, these results offer practical guidance for database administrators.