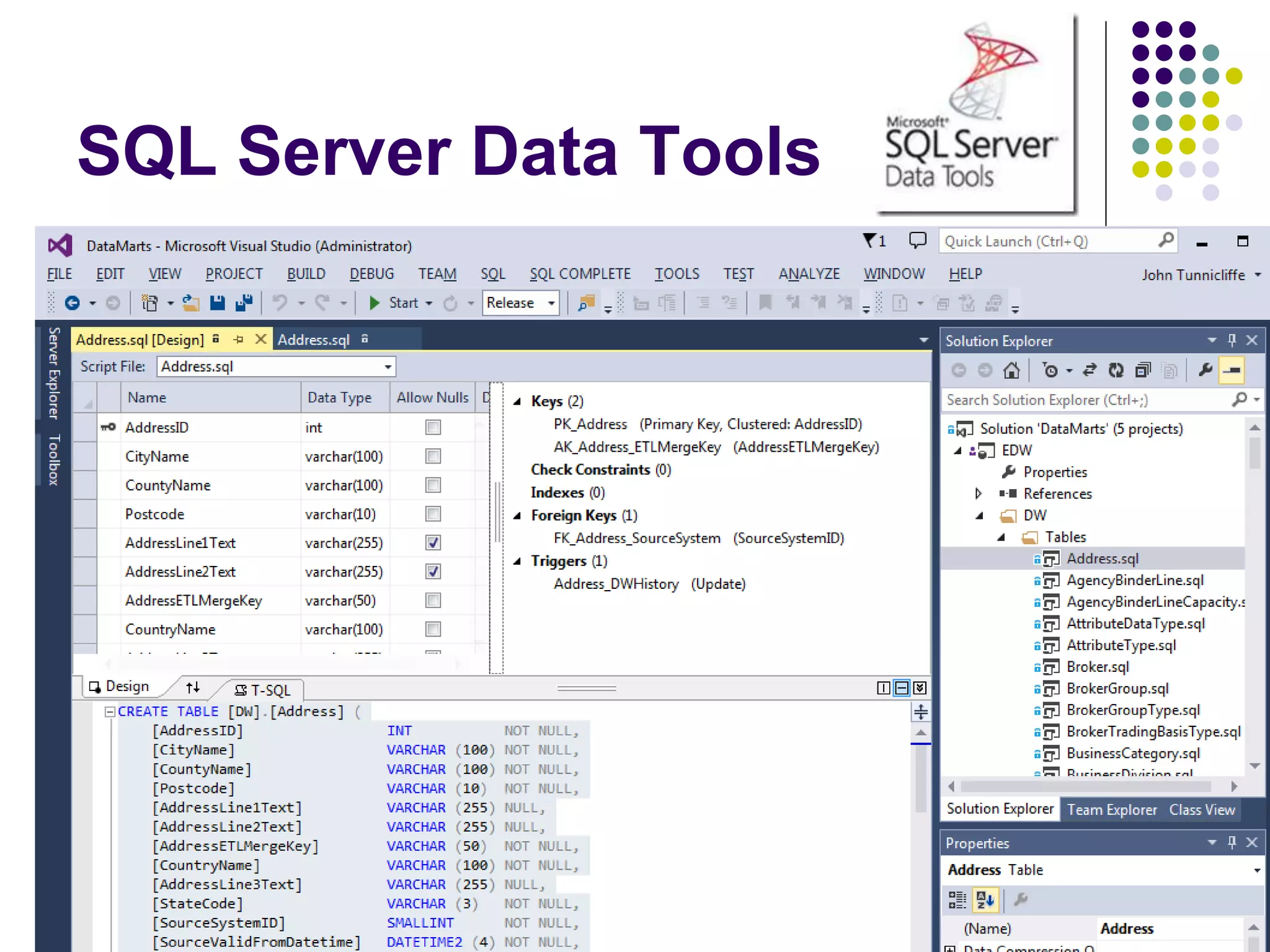

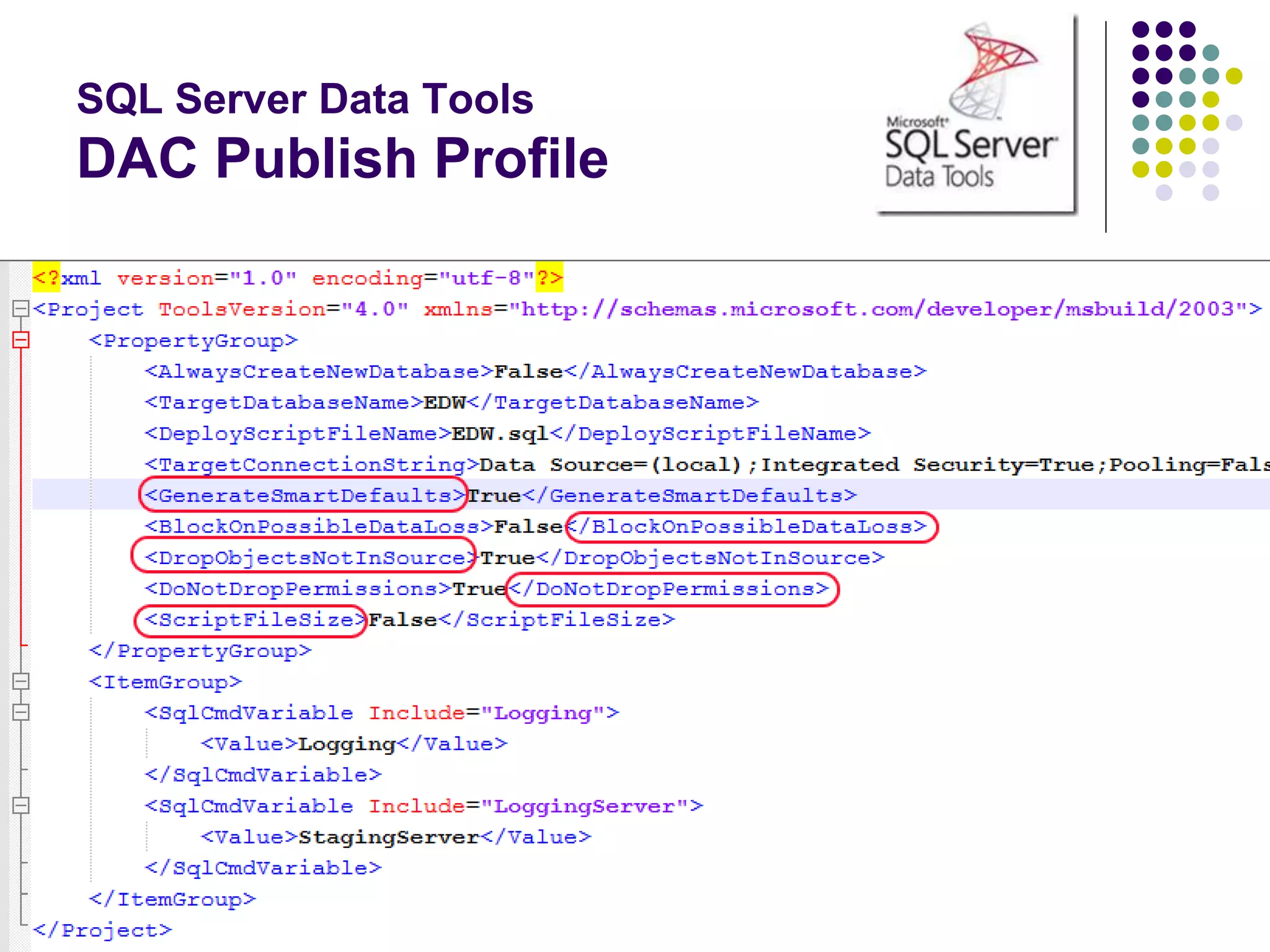

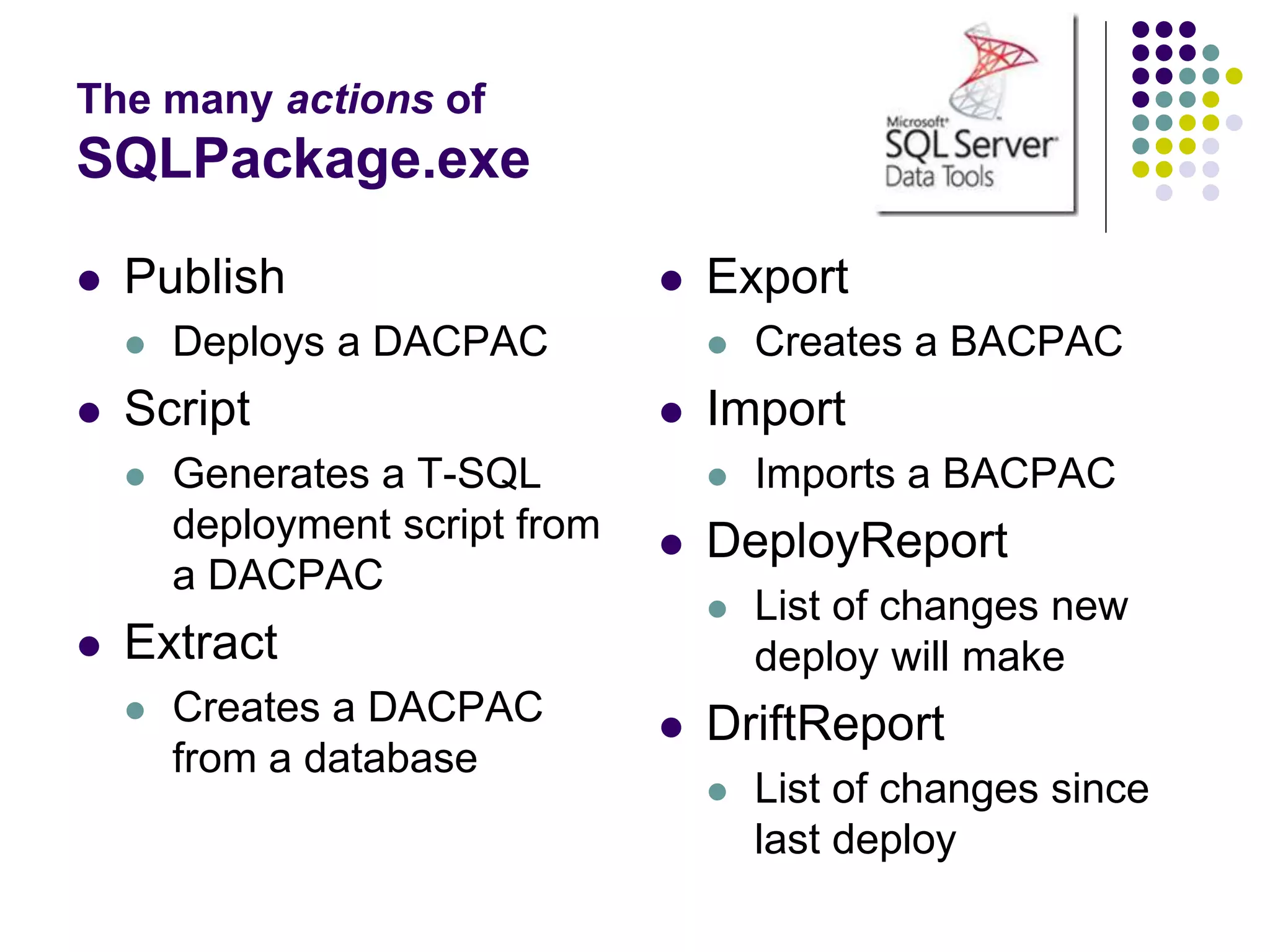

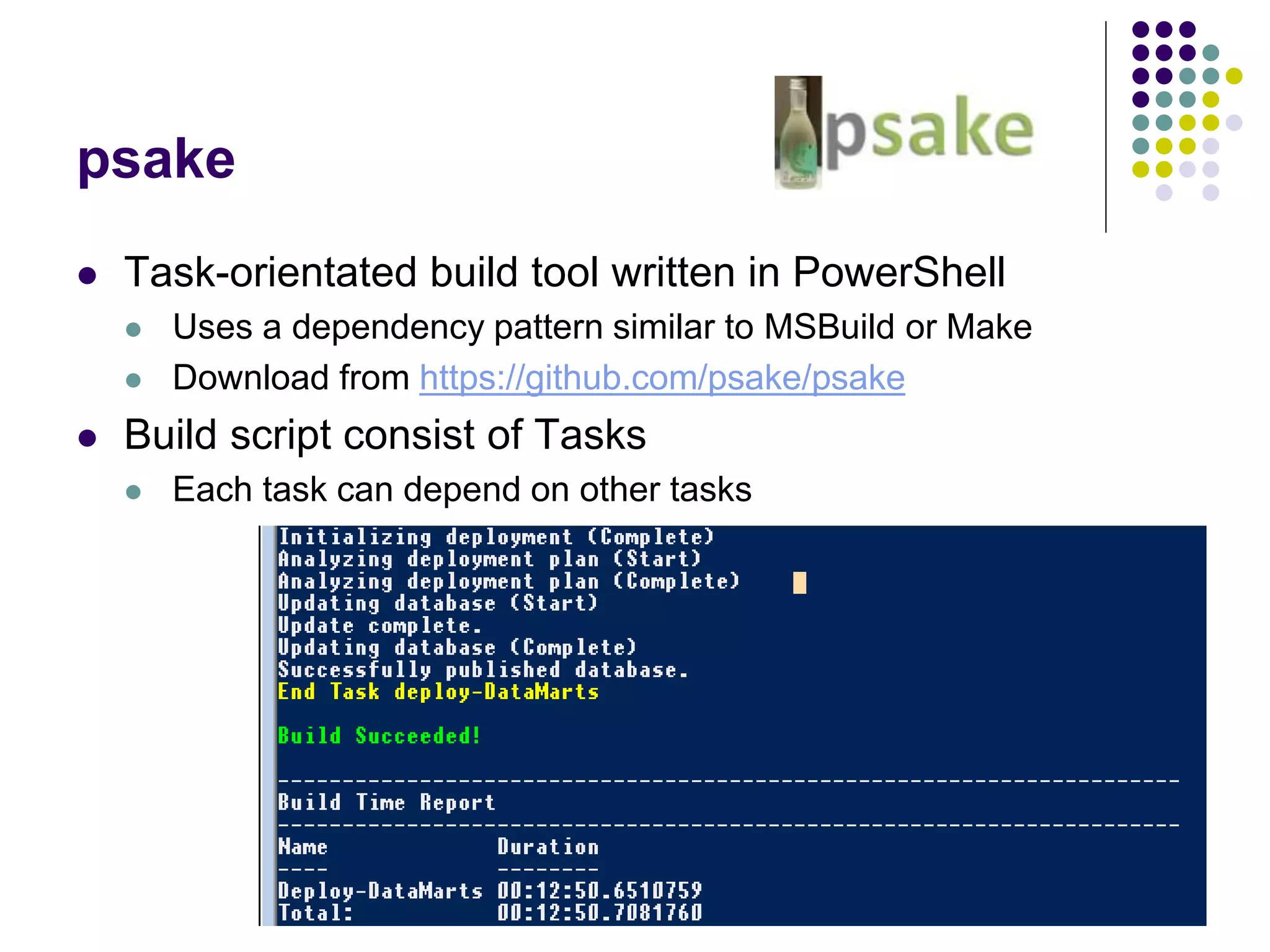

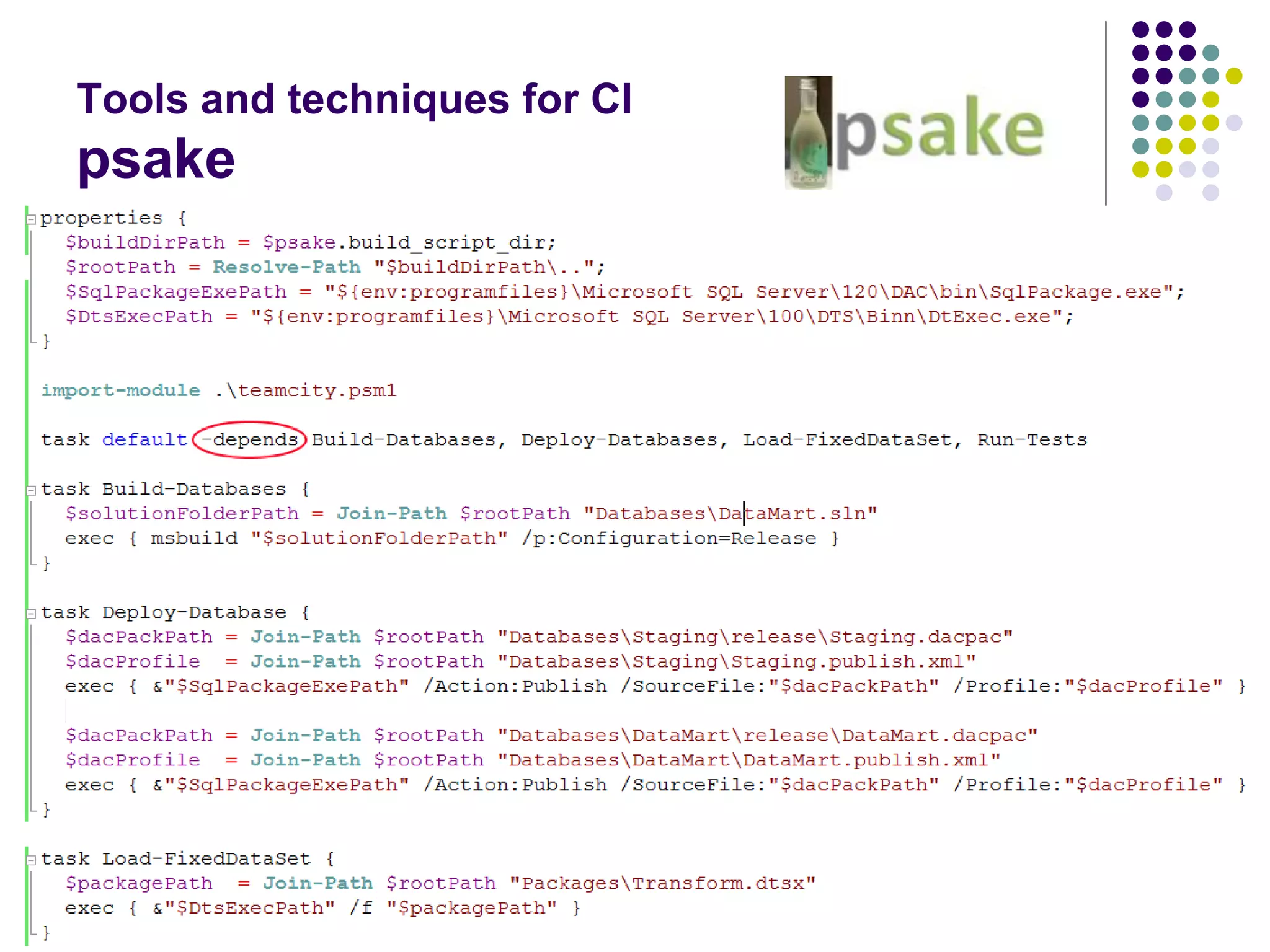

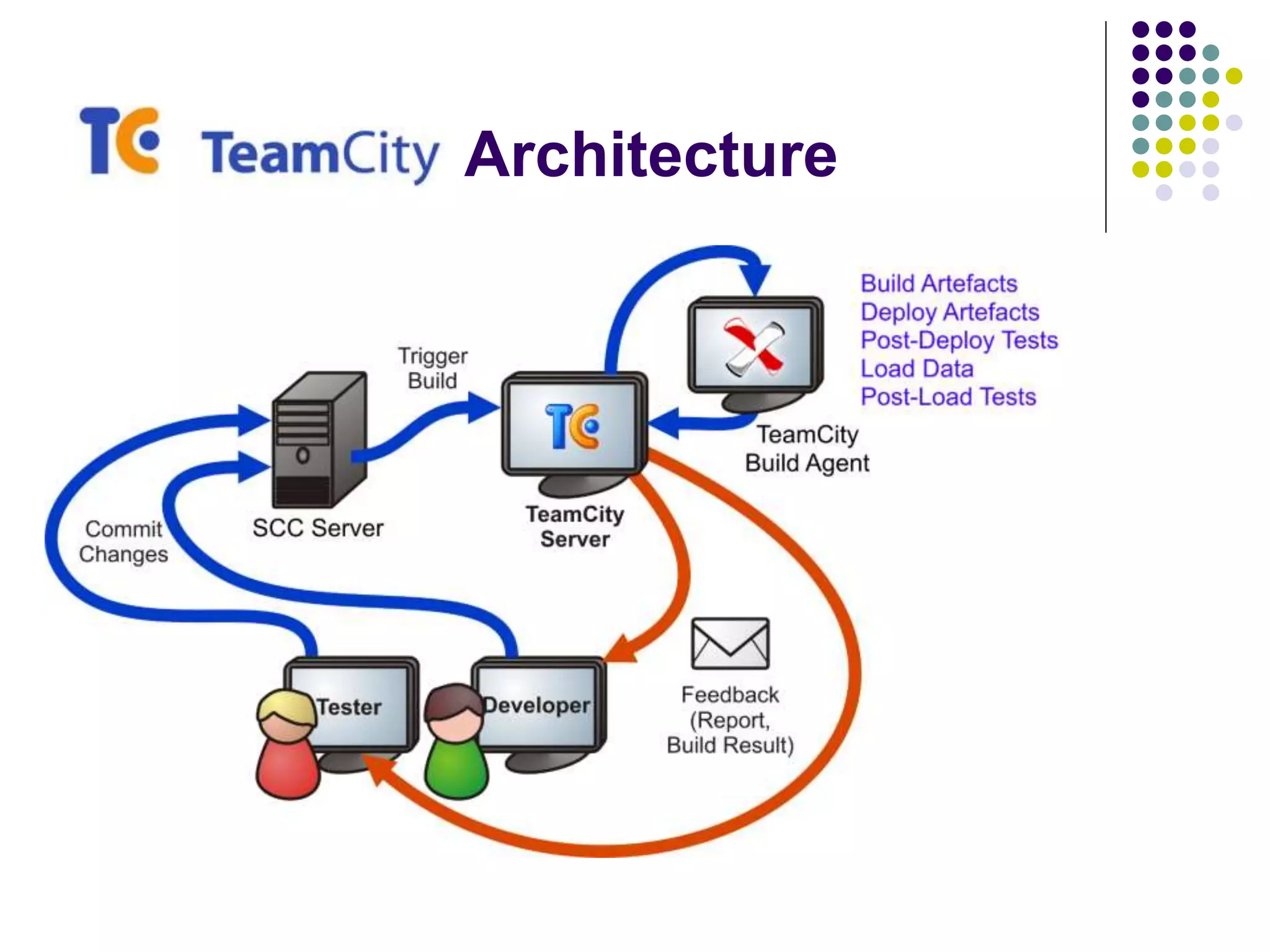

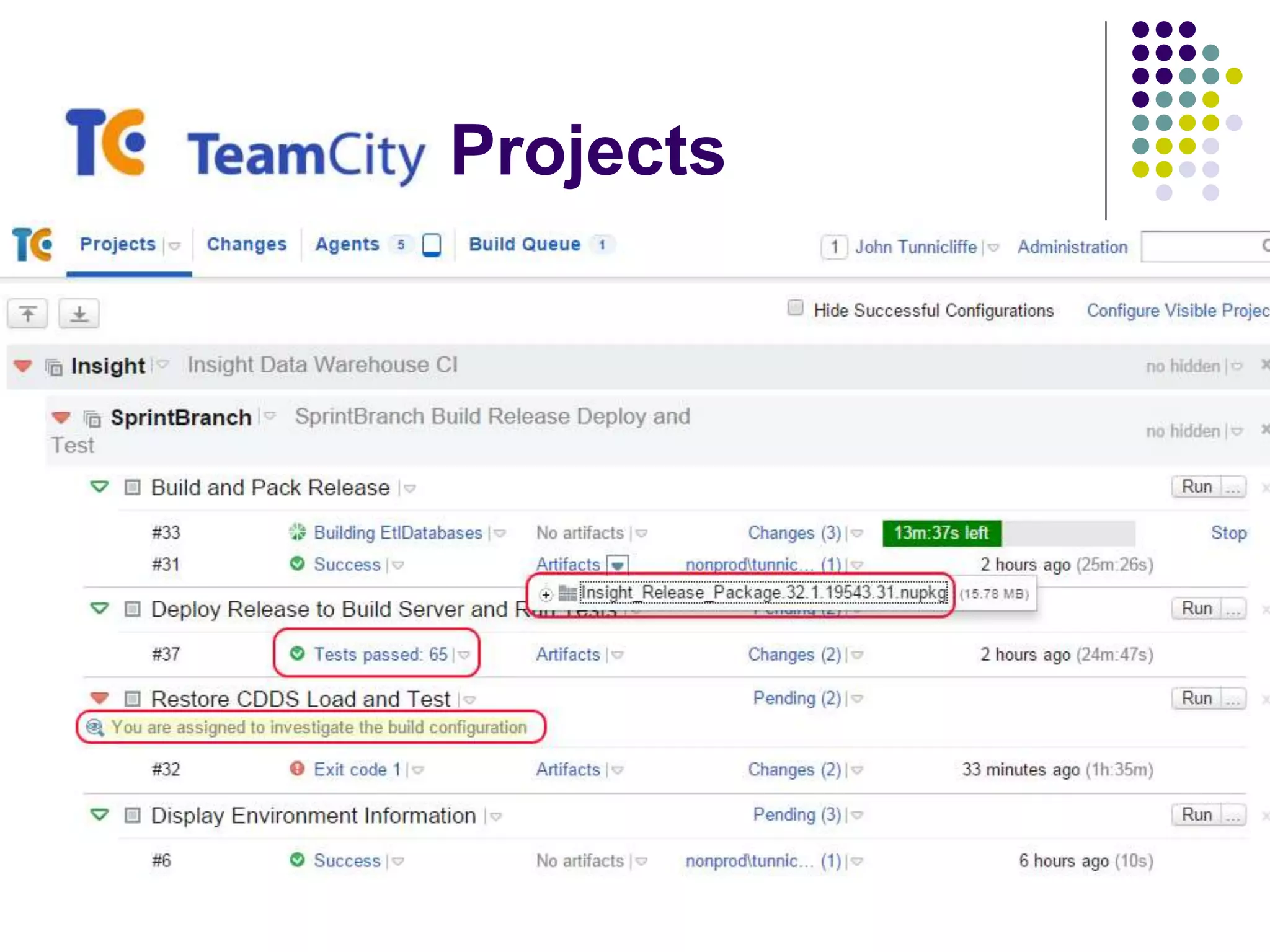

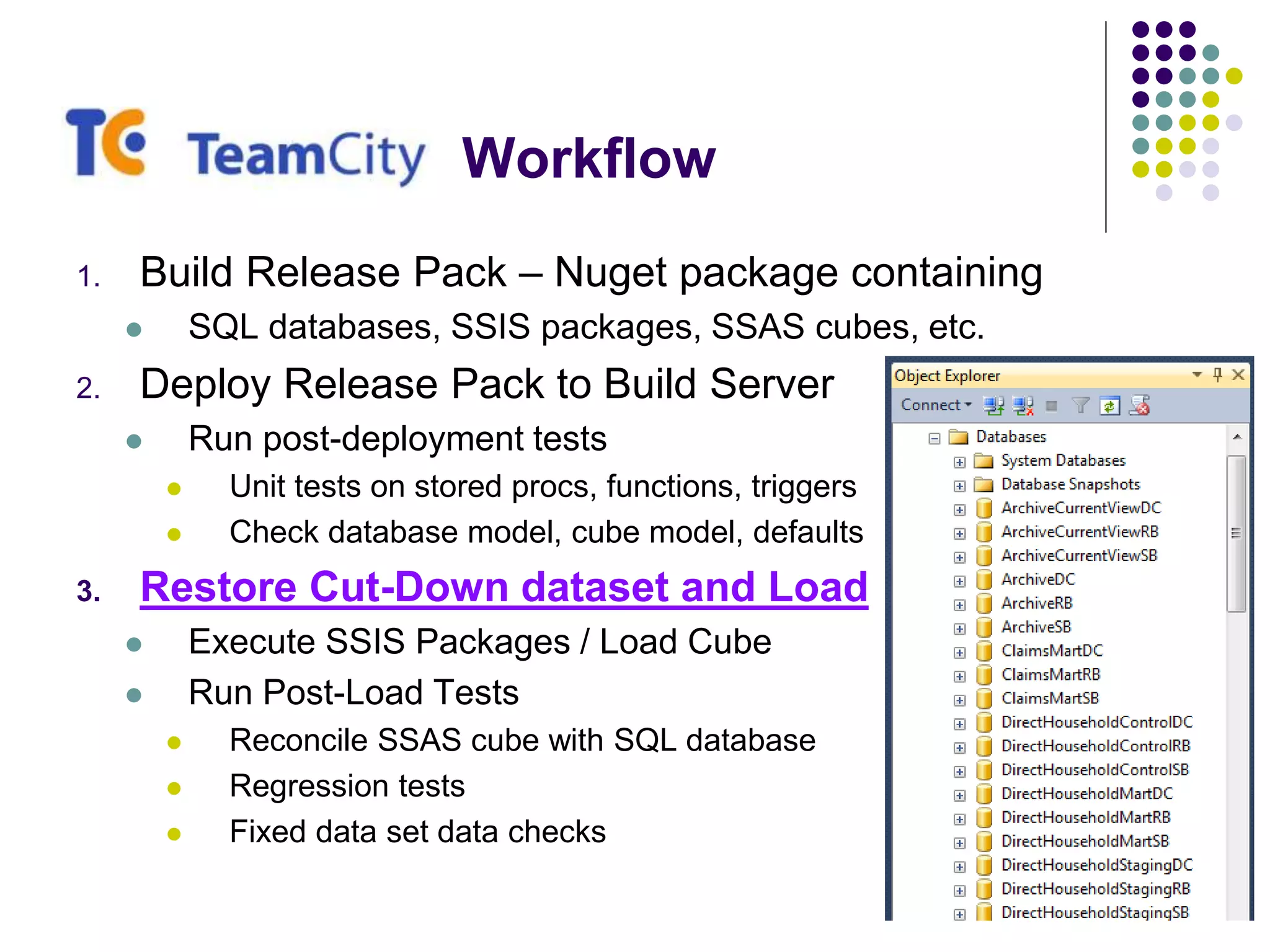

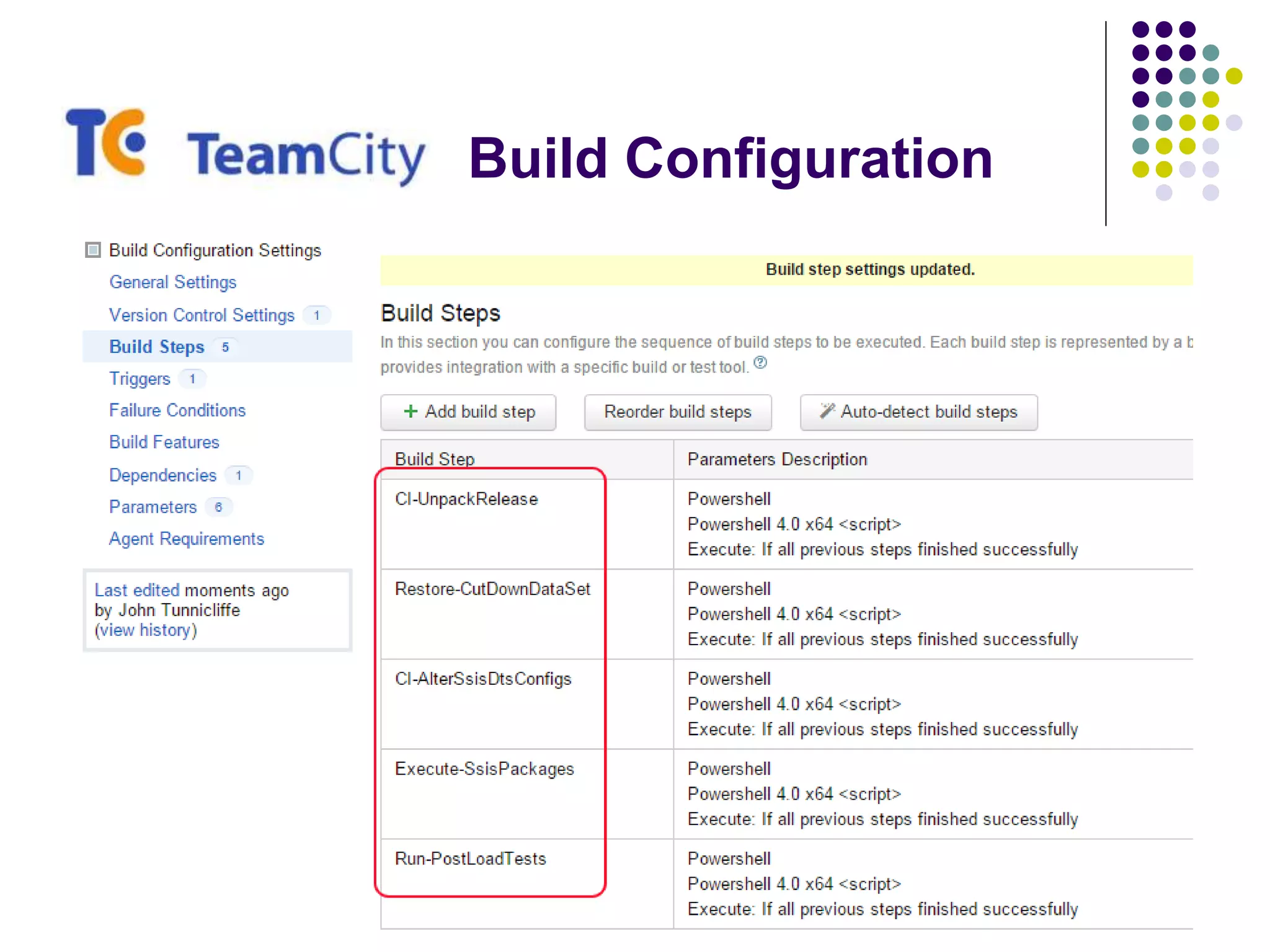

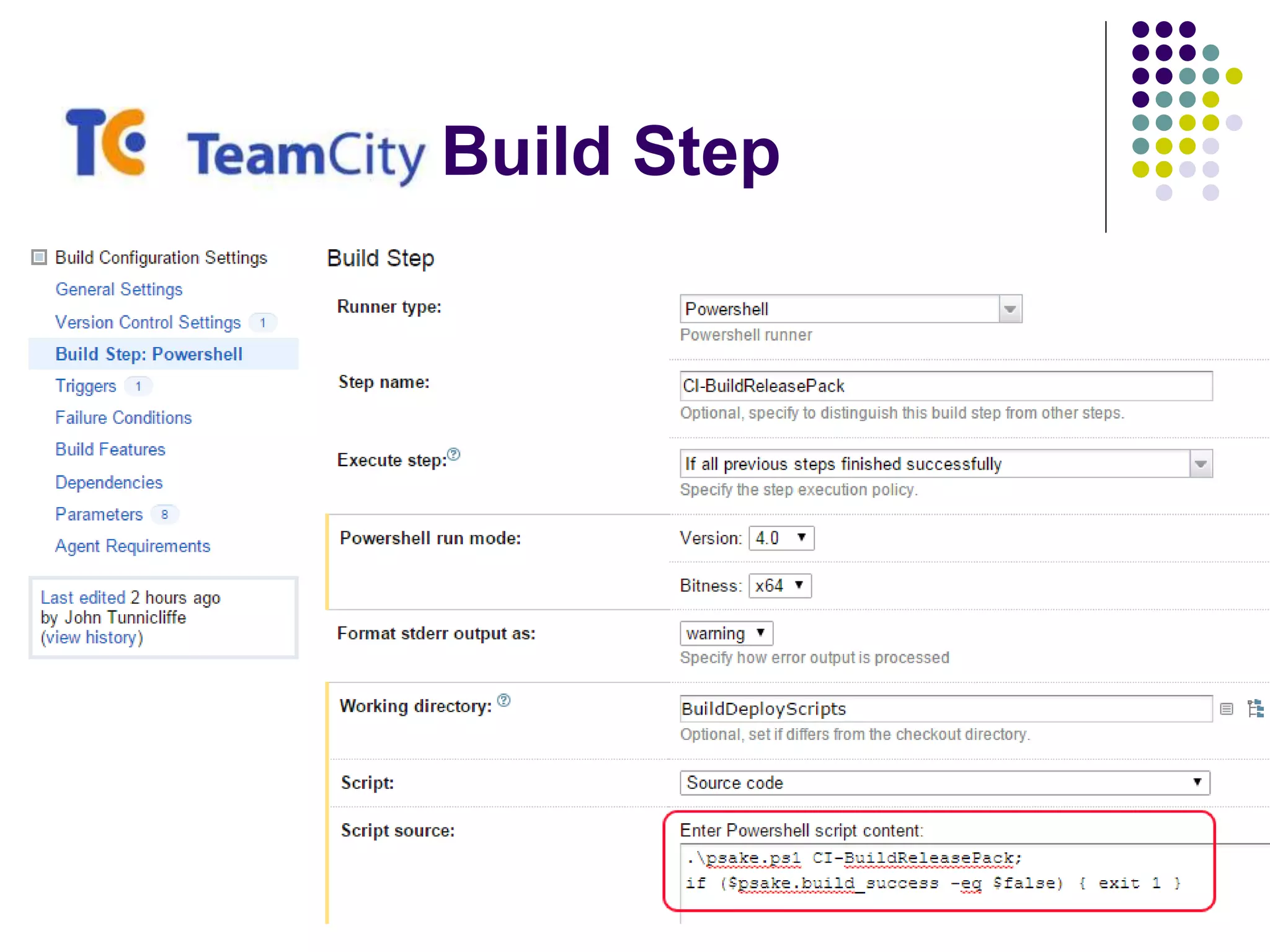

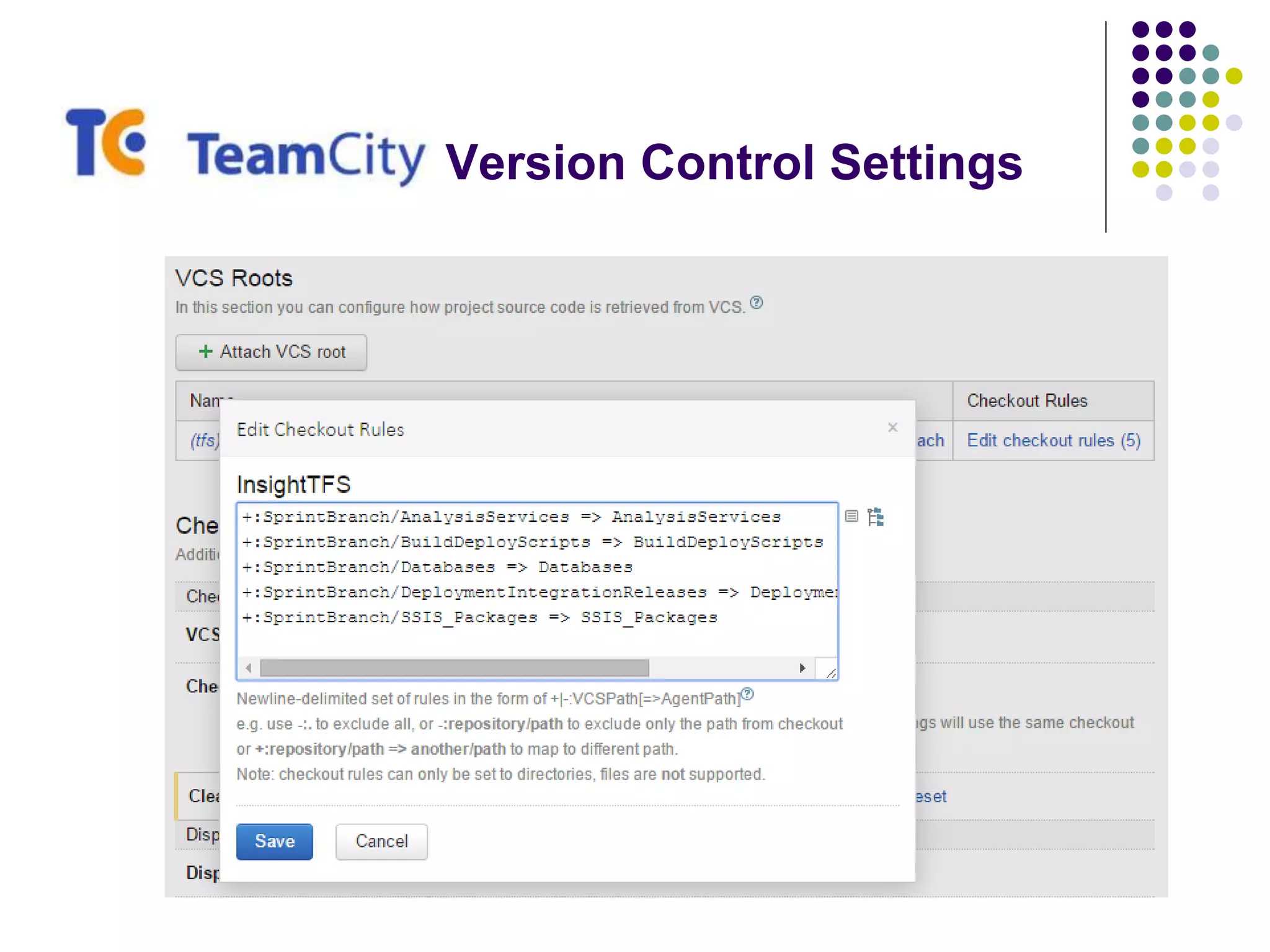

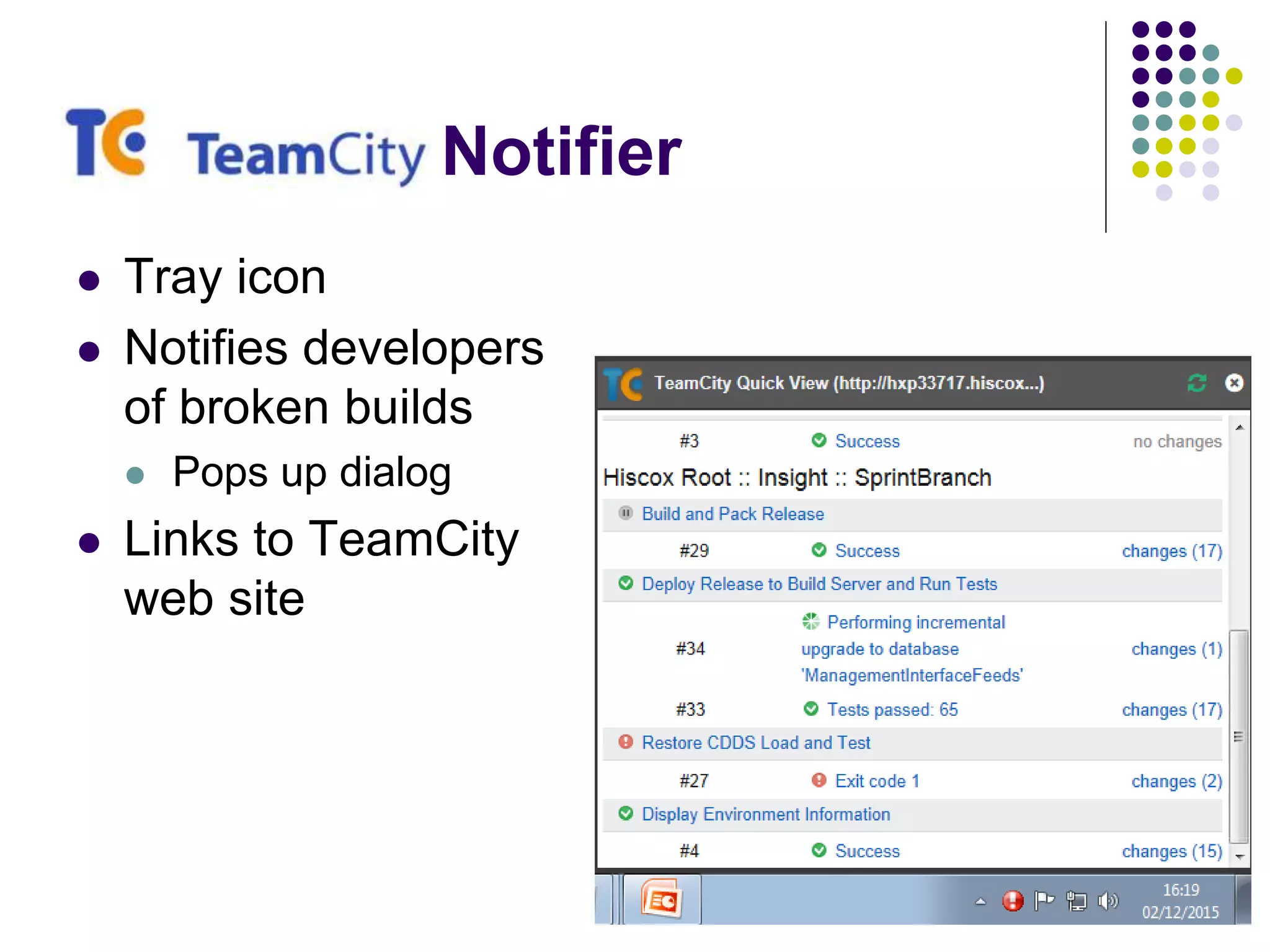

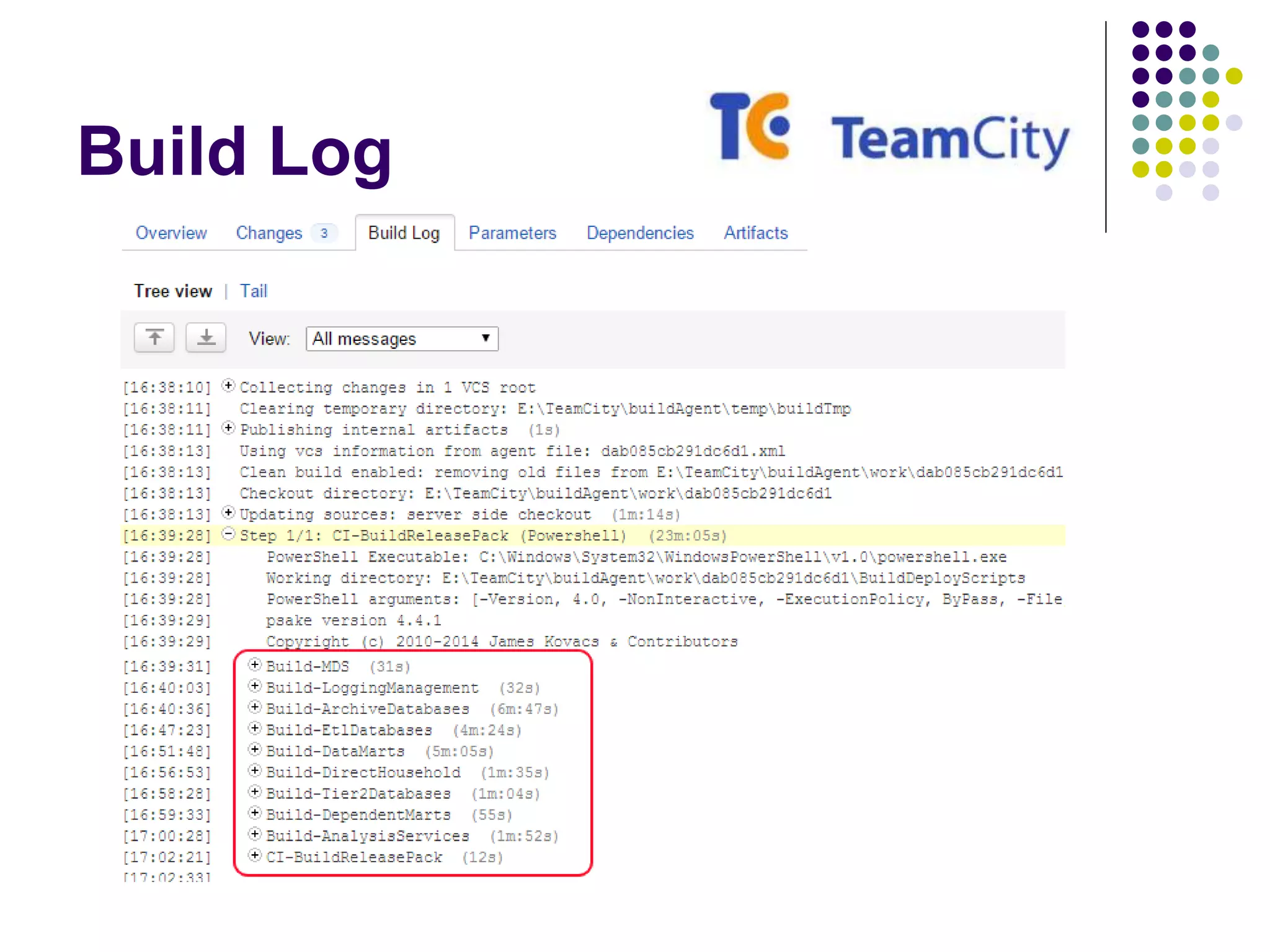

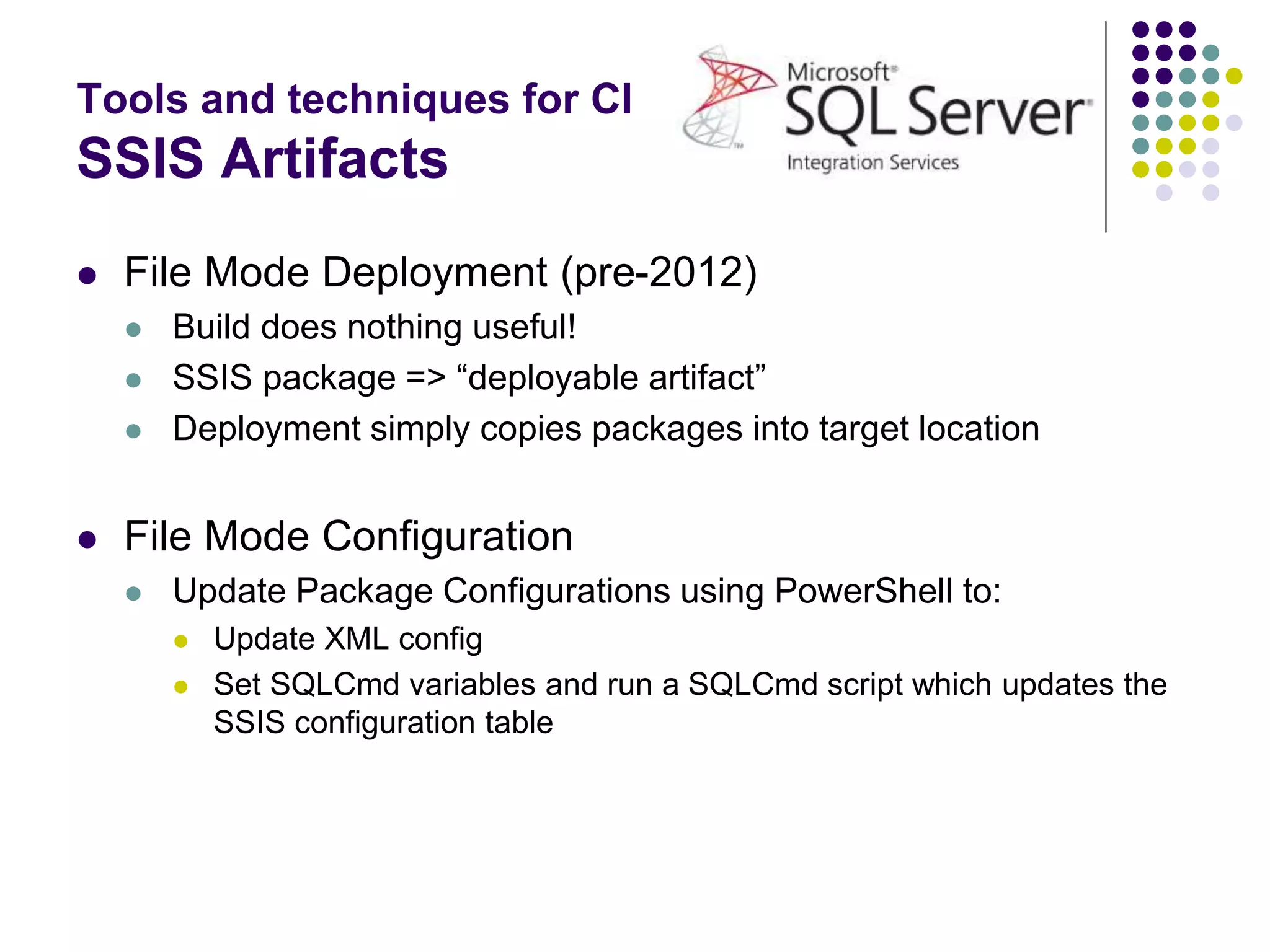

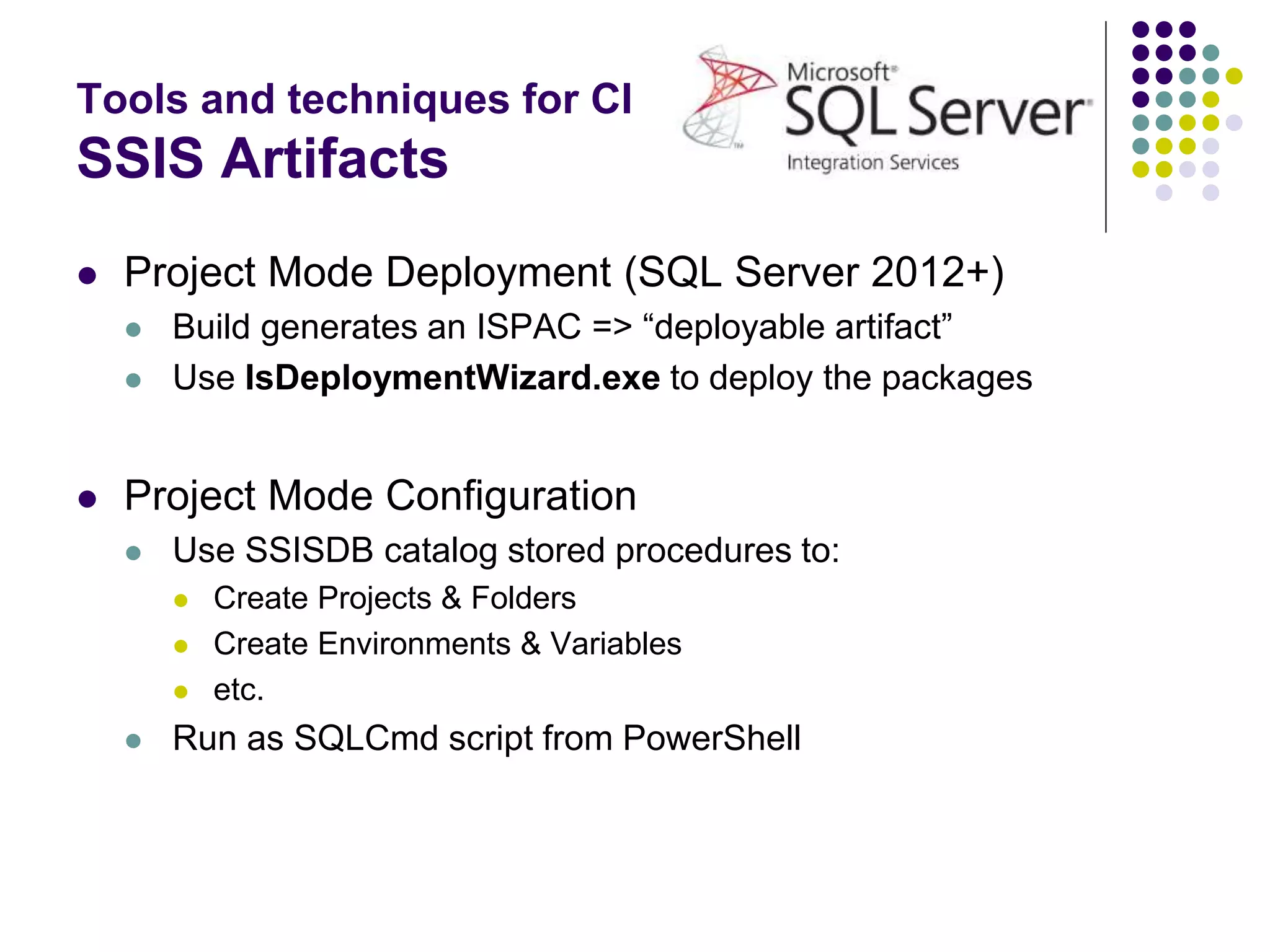

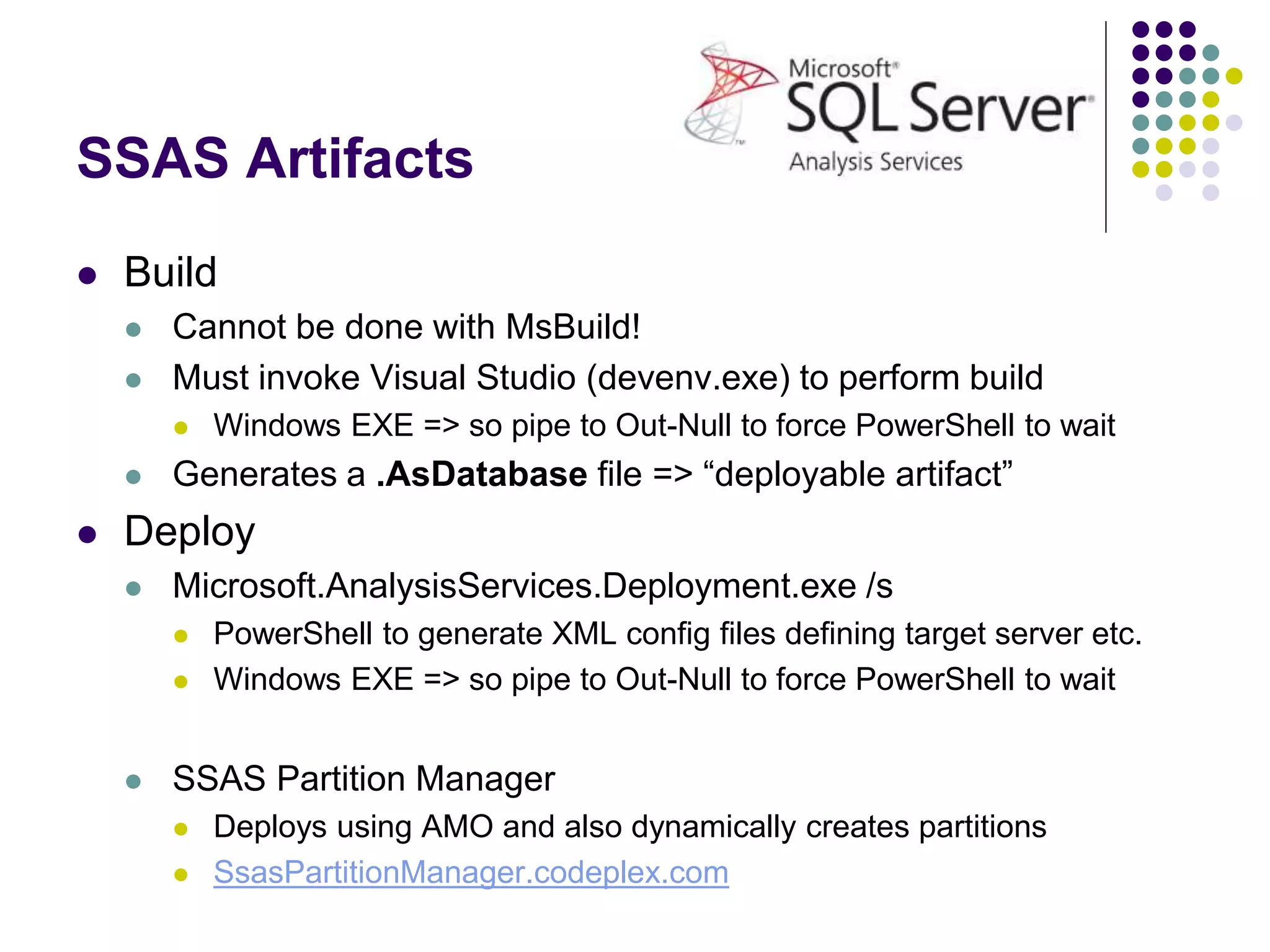

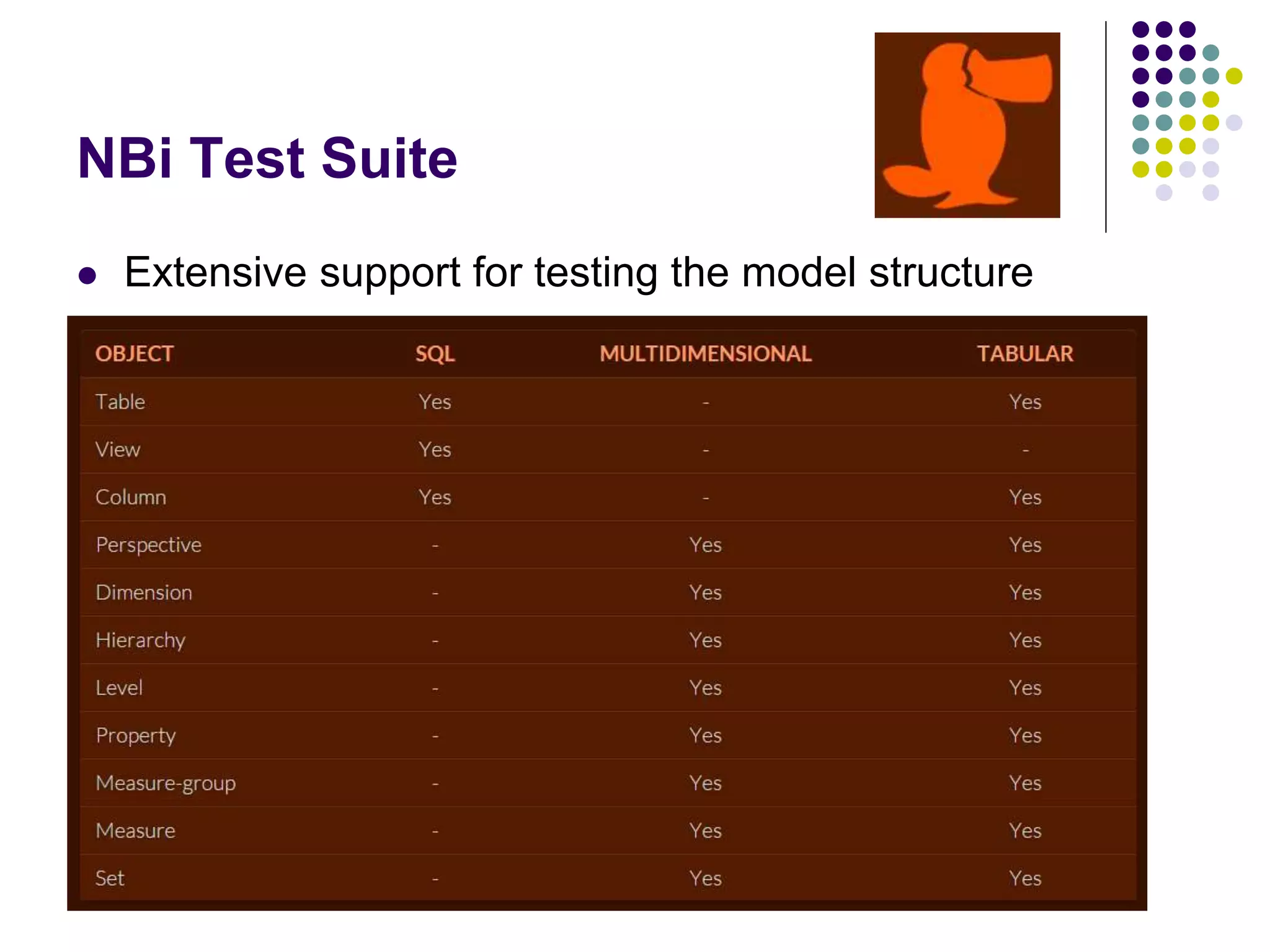

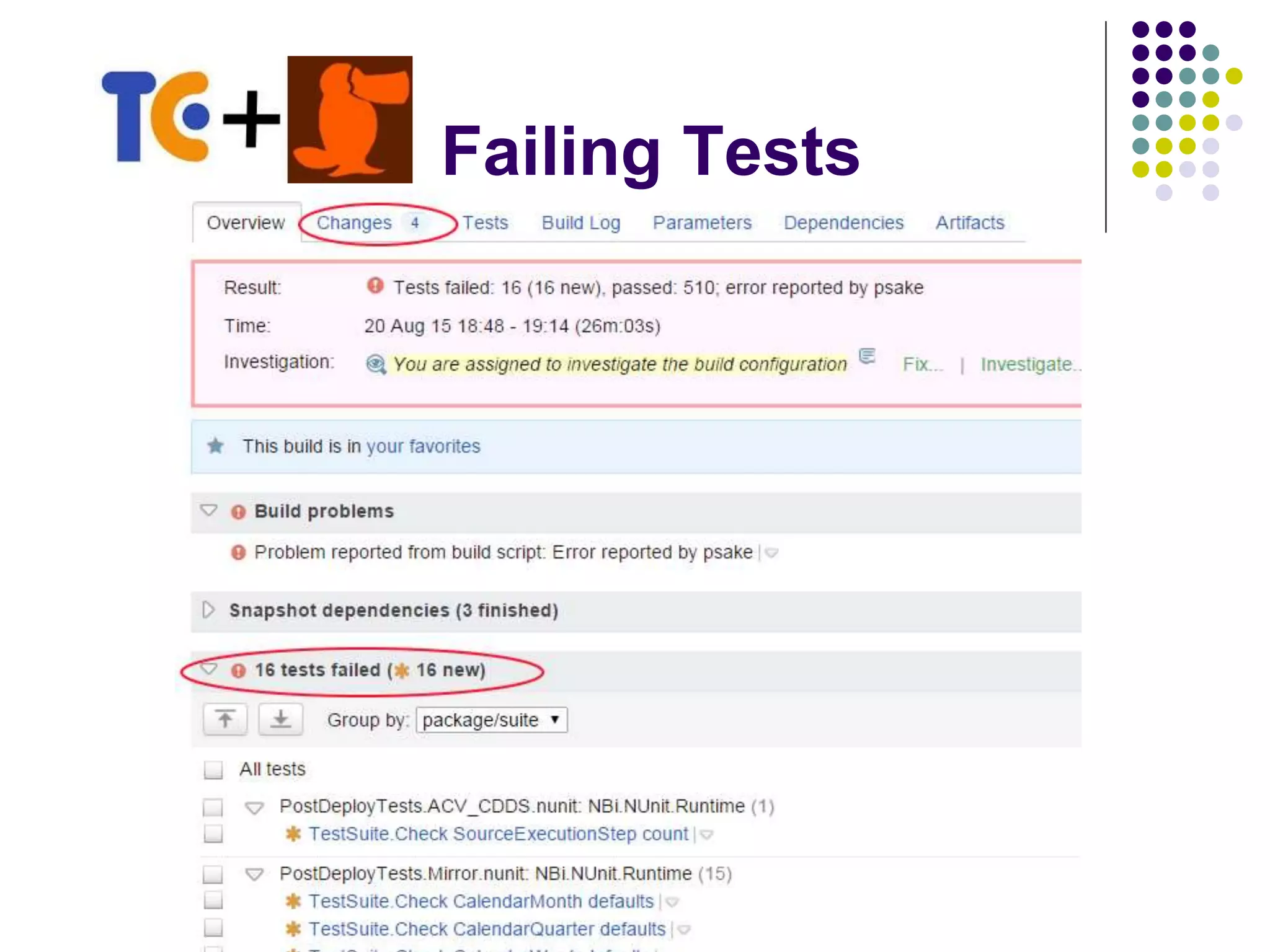

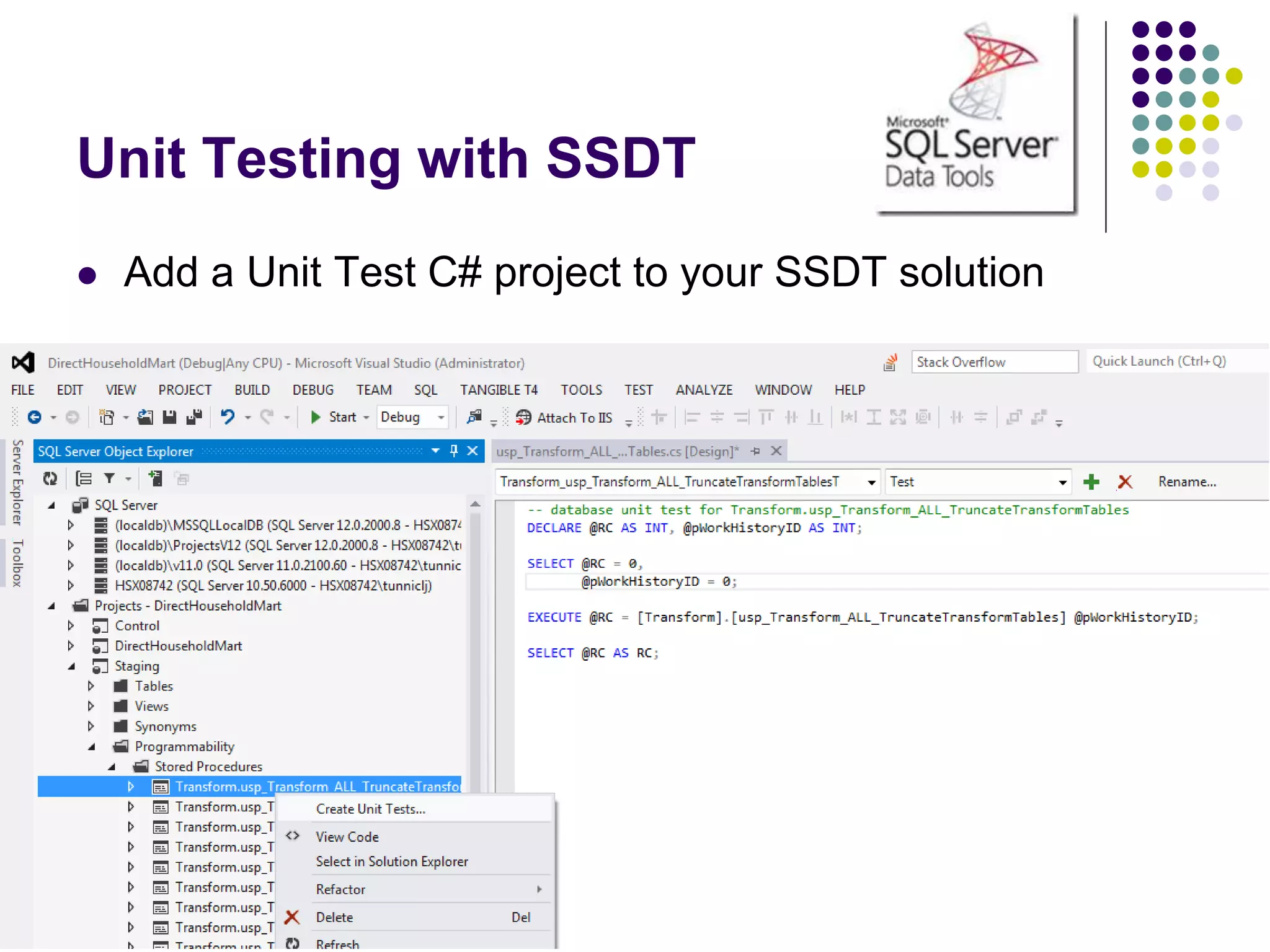

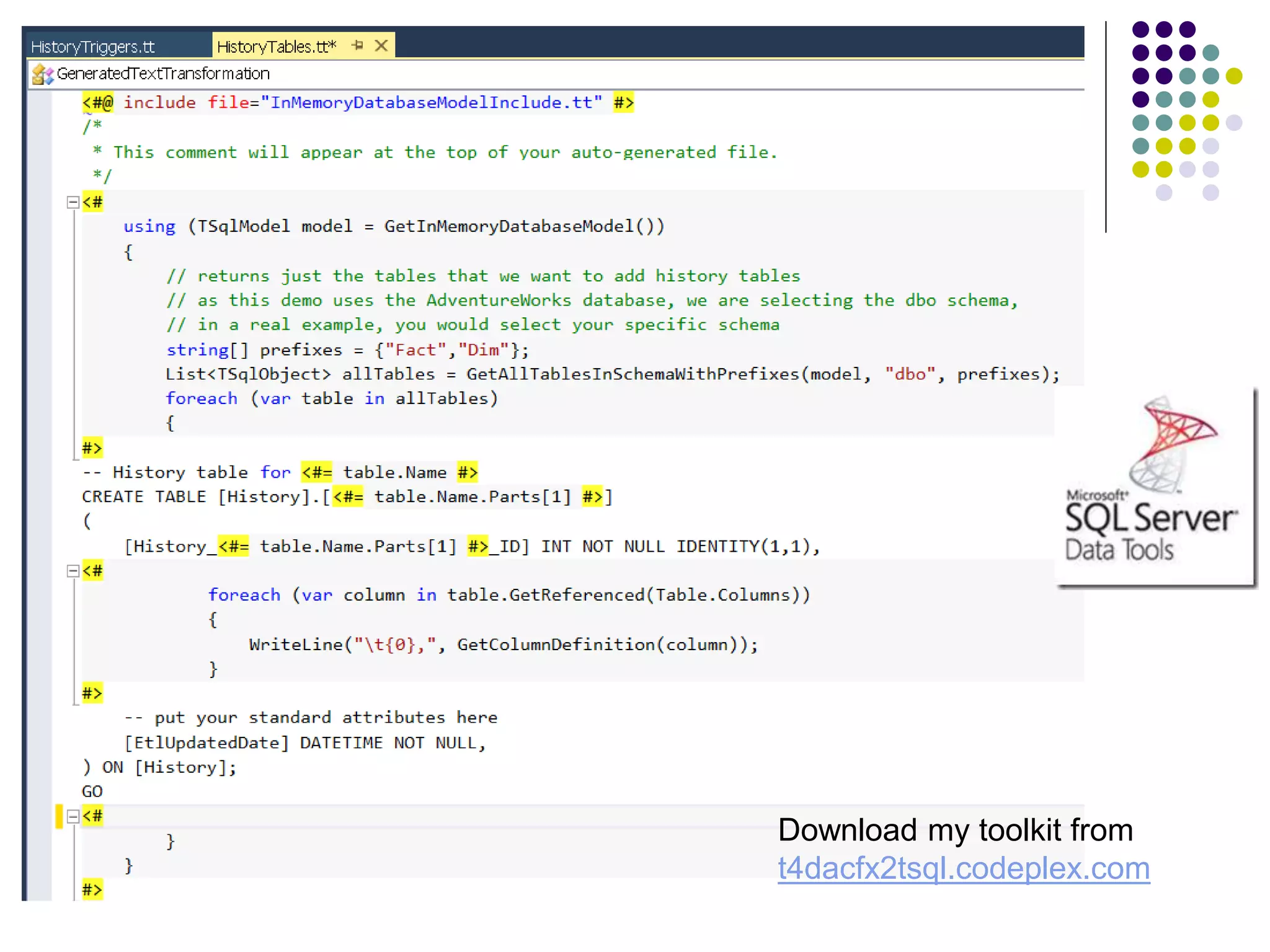

The document discusses implementing Continuous Integration (CI) in data warehouse development, highlighting its role in automating code integration, deployment, and testing. It details the challenges BI developers face in adopting CI practices, including unfamiliarity with the tools and the complexity of the environments involved. It also outlines the tools and techniques needed for successful CI, including SQL Server Data Tools (SSDT), PowerShell scripts, and various testing frameworks.