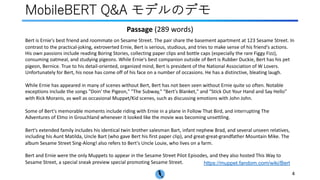

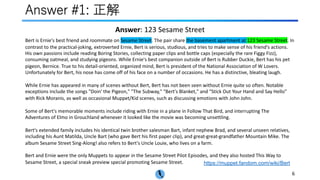

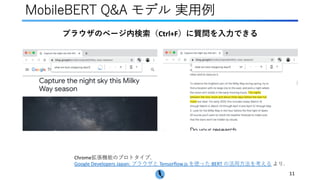

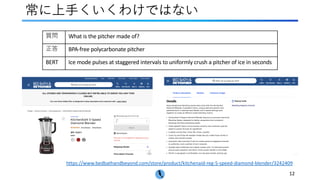

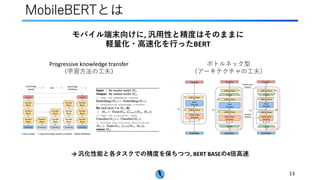

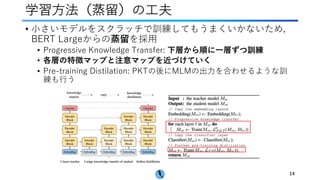

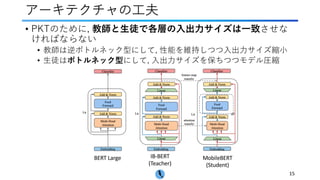

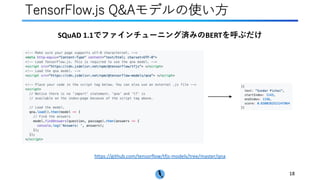

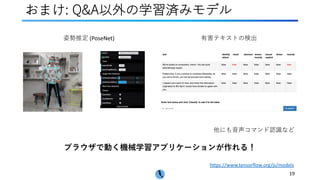

The deck covers running BERT models in the browser with MobileBERT and TensorFlow.js. It first introduces MobileBERT, a compact, task-agnostic variant of BERT designed for resource-limited devices such as smartphones, which achieves its small size through changes to both the architecture and the training method. It then demonstrates a TensorFlow.js question answering model built on MobileBERT and fine-tuned on SQuAD, deployed as a browser extension that answers questions about the content of the current page. Although the results are not perfect, the demo shows the potential of running NLP models directly in the browser.
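A SQuAD-style question answering model does not generate text. It scores every token of the passage as a possible answer start and as a possible answer end, and the answer is the highest-scoring valid span. The sketch below shows that selection step in isolation. It is a simplified illustration, not the code used by the tfjs-models/qna package [4], which wraps tokenization, inference, and answer selection behind its own API. `bestSpan`, `Span`, and the `maxAnswerLength` default of 30 are hypothetical names and values chosen for this example.

```typescript
// Hypothetical sketch of extractive-QA answer selection (SQuAD style).
// Assumption: startLogits[i] / endLogits[i] are the model's scores for token i
// being the first / last token of the answer. Real pipelines also skip
// question and special tokens; that filtering is omitted here.
interface Span {
  start: number; // index of the first answer token
  end: number; // index of the last answer token (inclusive)
  score: number; // startLogits[start] + endLogits[end]
}

function bestSpan(
  startLogits: number[],
  endLogits: number[],
  maxAnswerLength = 30,
): Span | null {
  if (startLogits.length !== endLogits.length) {
    throw new Error("startLogits and endLogits must have the same length");
  }
  let best: Span | null = null;
  for (let i = 0; i < startLogits.length; i++) {
    // A valid span ends at or after its start and is at most maxAnswerLength tokens.
    const lastEnd = Math.min(endLogits.length - 1, i + maxAnswerLength - 1);
    for (let j = i; j <= lastEnd; j++) {
      const score = startLogits[i] + endLogits[j];
      if (best === null || score > best.score) {
        best = { start: i, end: j, score };
      }
    }
  }
  return best;
}

// Usage with made-up scores: map the winning token span back to text.
const tokens = ["the", "model", "runs", "in", "the", "browser"];
const span = bestSpan([0.1, 0.2, 0.0, 0.3, 1.5, 0.4], [0.0, 0.1, 0.2, 0.1, 0.3, 2.0]);
if (span) {
  console.log(tokens.slice(span.start, span.end + 1).join(" ")); // "the browser"
}
```

Scoring a span as the sum of its start and end scores keeps the search to a simple double loop over the passage, and the length cap prevents the model from returning implausibly long answers.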

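![Self-introduction
• Shion Honda (本田 志温)
• Doing data-scientist-like work at Recruit Lifestyle
• Until March, researched AI-driven drug discovery at the Graduate School of the University of Tokyo
• Has presented at [cv/nl]paper.challenge several times before
• Blog: https://hippocampus-garden.com/](https://image.slidesharecdn.com/bertjs-200515041126/85/BERT-MobileBERT-TensorFlow-js-3-320.jpg)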

![References and credits
[1] Zhiqing Sun, Hongkun Yu, Xiaodan Song, Renjie Liu, Yiming Yang, Denny Zhou. "MobileBERT: a Compact Task-Agnostic BERT for Resource-Limited Devices." ACL. 2020.
[2] Google Developers Japan: "ブラウザと Tensorflow.js を使った BERT の活用方法を考える" (Exploring ways to use BERT with the browser and TensorFlow.js).
[3] MobileBERT Q&A model demo.
[4] tfjs-models/qna at master · tensorflow/tfjs-models.
[5] Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova. "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding." NAACL. 2019.
[6] Avatar: Ultraman Mebius by sousaguilherme5.](https://image.slidesharecdn.com/bertjs-200515041126/85/BERT-MobileBERT-TensorFlow-js-21-320.jpg)