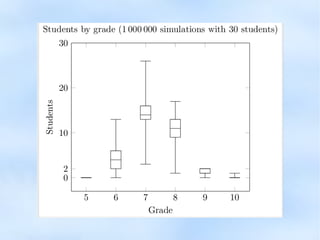

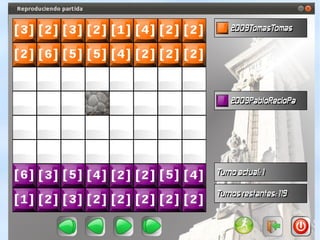

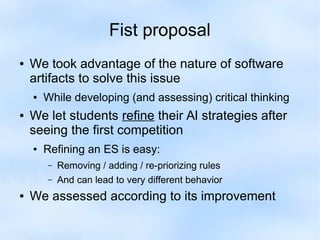

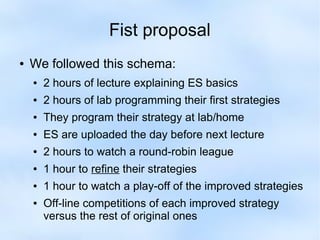

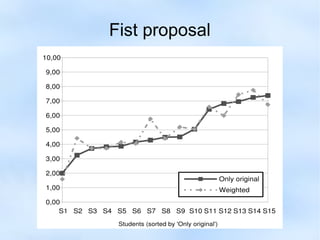

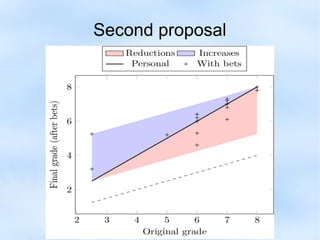

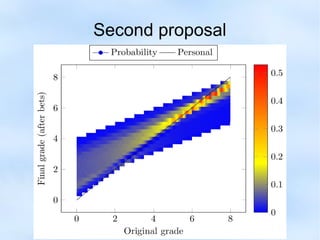

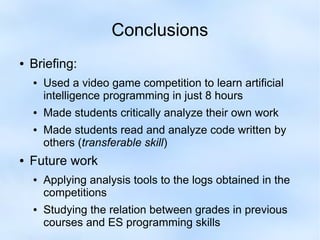

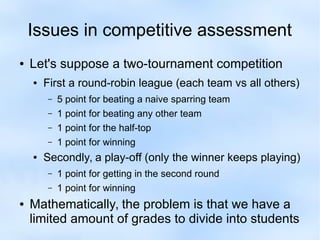

The document discusses the complexities of competitive assessment in education, weighing its motivational effects against its potential to harm student morale. It examines case studies and proposes ways to improve learning outcomes through refined AI strategy competitions. These include a betting system that encourages students to critically analyze both their own strategies and those of their peers. The conclusions suggest that this approach is effective at fostering critical thinking and collaborative learning among students.

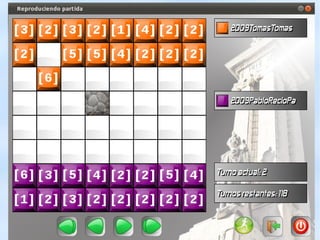

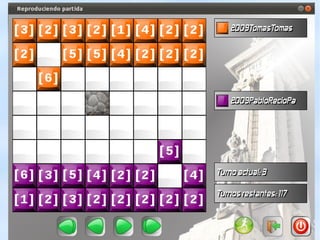

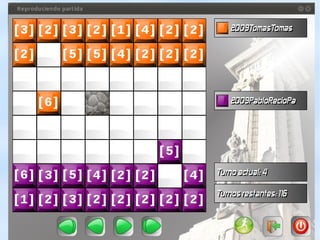

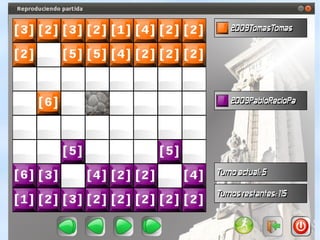

![Slide text (partially recovered): labels WL 8, ML 7, DO 6, DS 5, WP 8, WLRP 9, RP 7, MLRP 8, WLWP 10, MLWP 9; ranges [n, n], [n - 2^l, 2^l] where l = floor(log2(n)), [1, 1] and [floor(n/2), n]; 1 unique token per student](https://image.slidesharecdn.com/assessingcompetitions-141130042752-conversion-gate02/85/Assessment-in-programming-competitive-assignments-8-320.jpg)
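The slide lists two ranges that depend on n: [n - 2^l, 2^l] with l = floor(log2(n)), and [floor(n/2), n]. The Python sketch below evaluates both bounds for a given n. It assumes n is a positive integer, since the extracted slide text does not say what n counts. The function names `power_of_two_split` and `half_range` are hypothetical and used only for illustration.

```python
def power_of_two_split(n: int) -> tuple[int, int]:
    """Hypothetical helper: return (n - 2**l, 2**l) with l = floor(log2(n)).

    Assumes n is a positive integer (not stated on the slide).
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    # For n >= 1, n.bit_length() - 1 equals floor(log2(n)) exactly,
    # which avoids floating-point rounding from math.log2 on large n.
    l = n.bit_length() - 1
    return (n - 2 ** l, 2 ** l)


def half_range(n: int) -> tuple[int, int]:
    """Hypothetical helper: return (floor(n/2), n)."""
    return (n // 2, n)


if __name__ == "__main__":
    for n in (5, 8, 13):
        print(n, power_of_two_split(n), half_range(n))
```

For n = 13, l = 3, so the first range is [5, 8] and the second is [6, 13]. Note that 2^l is the largest power of two that does not exceed n, so n - 2^l is always smaller than 2^l.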