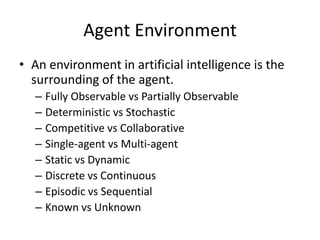

Artificial intelligence and machine learning agents can be classified by their architecture and characteristics. The document discusses several types of agents: simple reflex agents, model-based reflex agents, goal-based agents, utility-based agents, learning agents, multi-agent systems, and hierarchical agents. It also covers two inference methods for rule-based reasoning: forward chaining, which is data-driven, and backward chaining, which is goal-driven.
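To make the difference between the two reasoning methods concrete, here is a minimal sketch that assumes propositional rules of the form "if all premises hold, the conclusion holds." The rule base (`RULES`) and the function names `forward_chain` and `backward_chain` are hypothetical and exist only for illustration. Forward chaining starts from known facts and keeps firing rules until nothing new is derived. Backward chaining starts from a goal and works back through the rules that could prove it.

```python
from typing import List, Optional, Set, Tuple

# Hypothetical rule representation: (premises, conclusion).
# If every premise is known, the conclusion may be inferred.
Rule = Tuple[List[str], str]

# Hypothetical toy rule base, for illustration only.
RULES: List[Rule] = [
    (["has_feathers"], "is_bird"),
    (["is_bird", "can_fly"], "can_migrate"),
    (["can_migrate", "is_cold"], "flies_south"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Data-driven: fire every rule whose premises are all known,
    repeating until a full pass derives no new facts."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


def backward_chain(goal: str, facts: Set[str], rules: List[Rule],
                   visiting: Optional[Set[str]] = None) -> bool:
    """Goal-driven: a goal holds if it is a known fact, or if some rule
    concludes it and every premise of that rule can itself be proven."""
    if goal in facts:
        return True
    visiting = set() if visiting is None else visiting
    if goal in visiting:  # already trying to prove this goal: avoid cycles
        return False
    visiting = visiting | {goal}
    for premises, conclusion in rules:
        if conclusion == goal and all(
            backward_chain(p, facts, rules, visiting) for p in premises
        ):
            return True
    return False


if __name__ == "__main__":
    facts = {"has_feathers", "can_fly", "is_cold"}
    # Forward: derive everything that follows from the facts.
    print(sorted(forward_chain(facts, RULES) - facts))
    # -> ['can_migrate', 'flies_south', 'is_bird']
    # Backward: check only whether one specific goal can be proven.
    print(backward_chain("flies_south", facts, RULES))            # -> True
    print(backward_chain("flies_south", {"has_feathers"}, RULES))  # -> False
```

The trade-off shows up in the usage: forward chaining computes every consequence of the facts, which suits monitoring or simple reflex-style rule firing, while backward chaining only explores the rules relevant to the goal being asked about.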