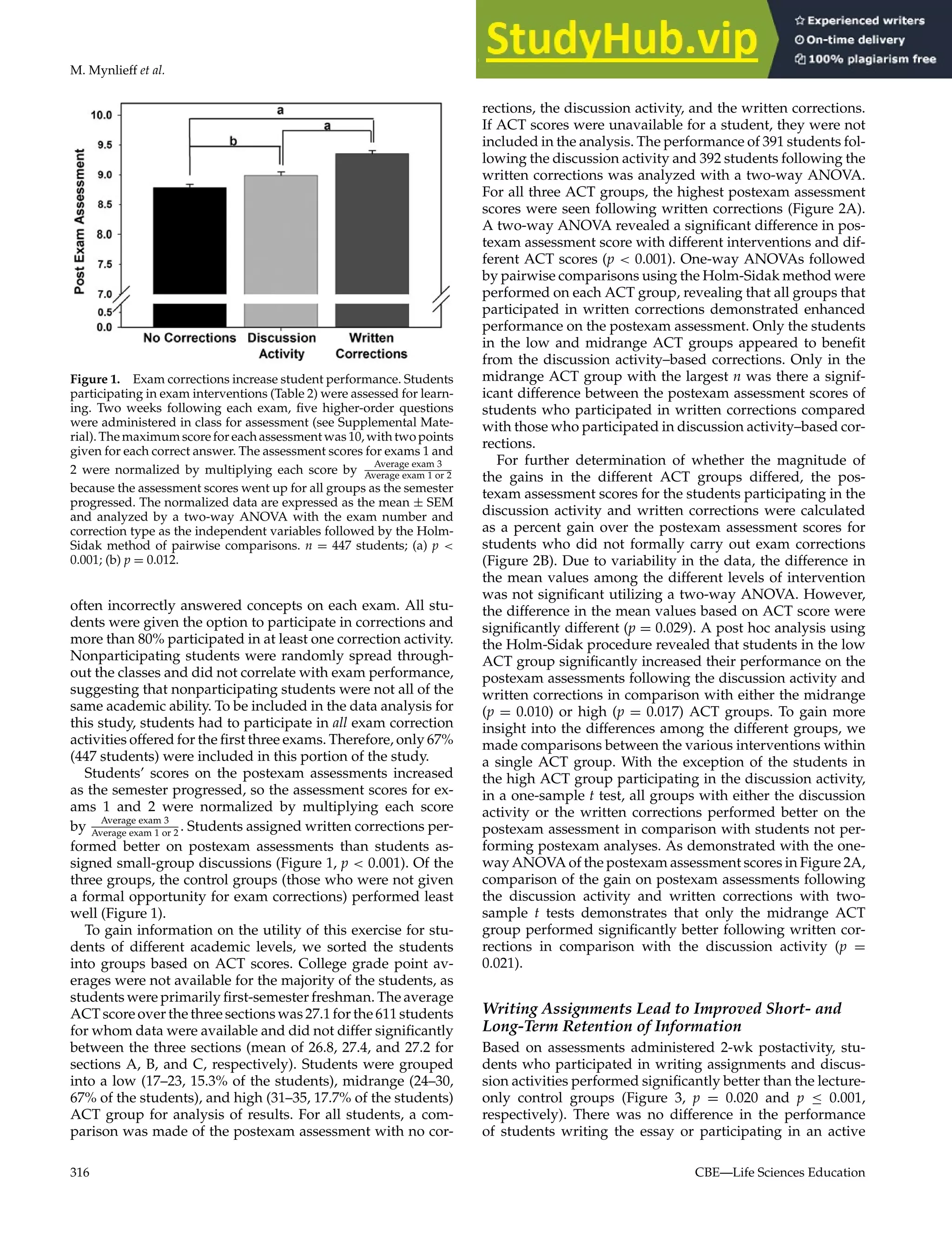

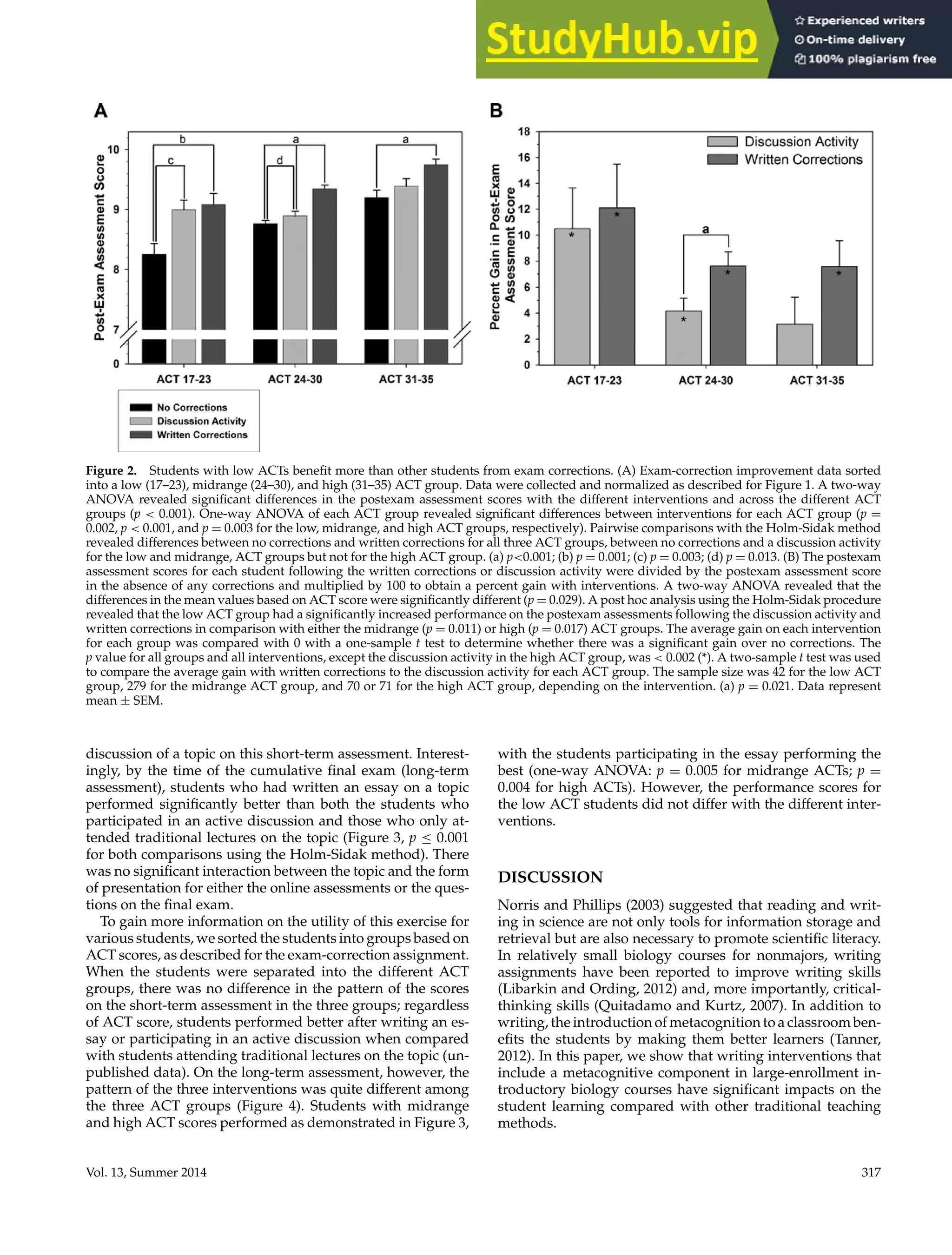

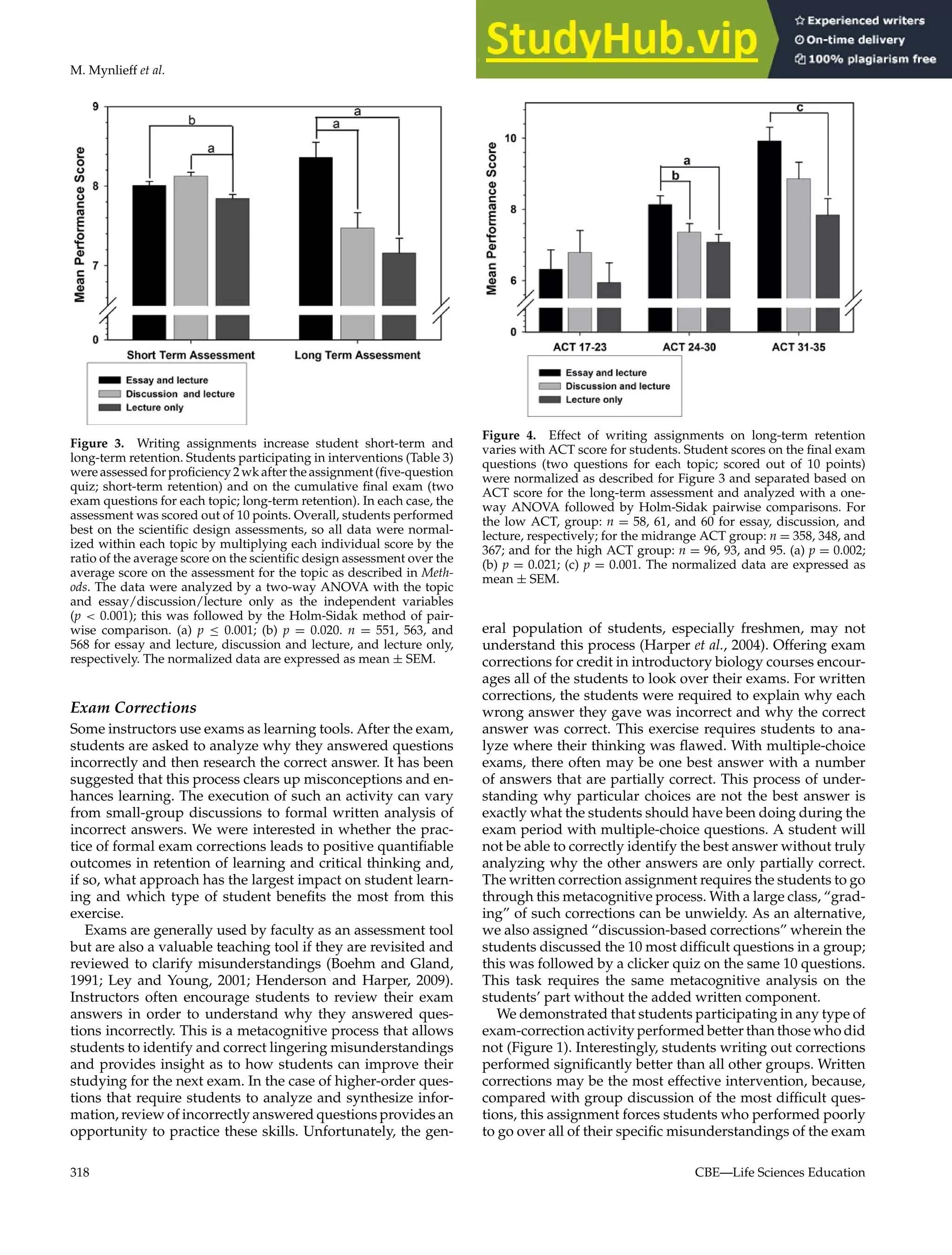

This document describes two writing assignments used in a large introductory biology course to enhance student learning: exam corrections and peer-reviewed writing assignments using Calibrated Peer Review (CPR). Students who corrected exam questions improved significantly on subsequent assessments compared with students who did not participate. Students also scored higher on topics learned through the CPR writing assignments than on topics learned through classroom discussion or lecture. However, students with lower ACT scores benefited less from the CPR assignments. The writing assignments promoted metacognition and higher-order thinking skills.