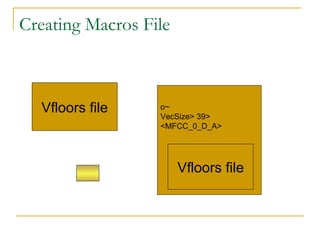

The document discusses Arabic optical character recognition (AOCR). It introduces AOCR and its challenges, then describes the preprocessing steps: image rotation, segmentation, and enhancement. It explains the feature extraction process and the features selected, and details the implementation of an AOCR system using Hidden Markov Models (HMMs) in HTK, covering data preparation, model creation, and recognition. Finally, it presents experimental results on isolated character recognition with variations in font, font size, and length. Recognition accuracy was highest when vertical-histogram features were used and each character was modeled individually.
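As a rough illustration of the vertical-histogram feature mentioned above, the following Python sketch counts the black pixels in each column of a binarized glyph image and slices the histogram into frames, one observation vector per frame. This produces the kind of frame sequence an HMM toolkit such as HTK consumes. The function names, the `window`/`step` parameters, and the right-to-left scan (chosen because Arabic is written right to left) are assumptions for illustration, not the presentation's exact settings.

```python
from typing import List

# Hypothetical representation: a binary image as rows of 0/1 values, 1 = black (ink) pixel.
Image = List[List[int]]


def vertical_histogram(image: Image) -> List[int]:
    """Return the number of black pixels in each pixel column, left to right."""
    if not image:
        return []
    width = len(image[0])
    return [sum(row[col] for row in image) for col in range(width)]


def sliding_window_features(
    image: Image, window: int, step: int, right_to_left: bool = True
) -> List[List[int]]:
    """Cut the vertical histogram into frames of `window` columns, advancing
    `step` columns each time. Each frame is one observation vector.

    Assumption: columns are scanned right to left by default, following the
    writing direction of Arabic script.
    """
    hist = vertical_histogram(image)
    if right_to_left:
        hist = hist[::-1]
    frames = []
    # Stop so that every frame except possibly a too-short image's single frame
    # contains exactly `window` columns.
    for start in range(0, max(len(hist) - window, 0) + 1, step):
        frames.append(hist[start:start + window])
    return frames


if __name__ == "__main__":
    glyph = [
        [0, 1, 1, 0],
        [1, 1, 0, 0],
        [0, 1, 0, 1],
    ]
    print(vertical_histogram(glyph))
    print(sliding_window_features(glyph, window=2, step=1))
```

For the small glyph above, the column counts are `[1, 3, 1, 1]`; scanning right to left with a two-column window and a one-column step yields the frames `[1, 1]`, `[1, 3]`, `[3, 1]`. In a real system the frame width and overlap would be tuned experimentally.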

![(2) Baseline Count: calculates the number of black pixels above the baseline (as a positive value) and the number of black pixels below the baseline (as a negative value) in each slide.](https://image.slidesharecdn.com/aocrhmmpresentation-124593740014-phpapp01/85/Aocr-Hmm-Presentation-35-320.jpg)
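The Baseline Count feature described in the slide above can be sketched as follows. The sketch assumes a binarized image stored as rows of 0/1 values (1 = black, row 0 at the top), a known baseline row index, and slides defined by hypothetical `window` and `step` parameters. The slide does not say whether pixels lying exactly on the baseline are counted, so this sketch ignores them. Treat it as an illustration under those assumptions, not the presentation's implementation.

```python
from typing import List, Tuple

# Hypothetical representation: rows of 0/1 values, 1 = black pixel, row 0 at the top.
Image = List[List[int]]


def baseline_count(
    image: Image, baseline: int, window: int, step: int
) -> List[Tuple[int, int]]:
    """For each slide of `window` columns (advancing `step` columns), return
    (+above, -below): black pixels above the baseline row as a positive count
    and black pixels below it as a negative count.

    Assumption: pixels on the baseline row itself are not counted.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if width == 0:
        return []
    features = []
    for start in range(0, max(width - window, 0) + 1, step):
        cols = range(start, min(start + window, width))
        # Rows 0 .. baseline-1 lie above the baseline.
        above = sum(image[r][c] for r in range(0, baseline) for c in cols)
        # Rows baseline+1 .. height-1 lie below the baseline.
        below = sum(image[r][c] for r in range(baseline + 1, height) for c in cols)
        features.append((above, -below))
    return features


if __name__ == "__main__":
    glyph = [
        [0, 1, 0, 0],
        [1, 1, 0, 1],
        [1, 1, 1, 1],  # baseline row (index 2)
        [0, 0, 1, 0],
        [0, 1, 1, 0],
    ]
    print(baseline_count(glyph, baseline=2, window=2, step=2))
```

For this glyph, the two slides give `(3, -1)` and `(1, -2)`. The sign convention keeps ascender-heavy and descender-heavy slides distinguishable within a single feature vector.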