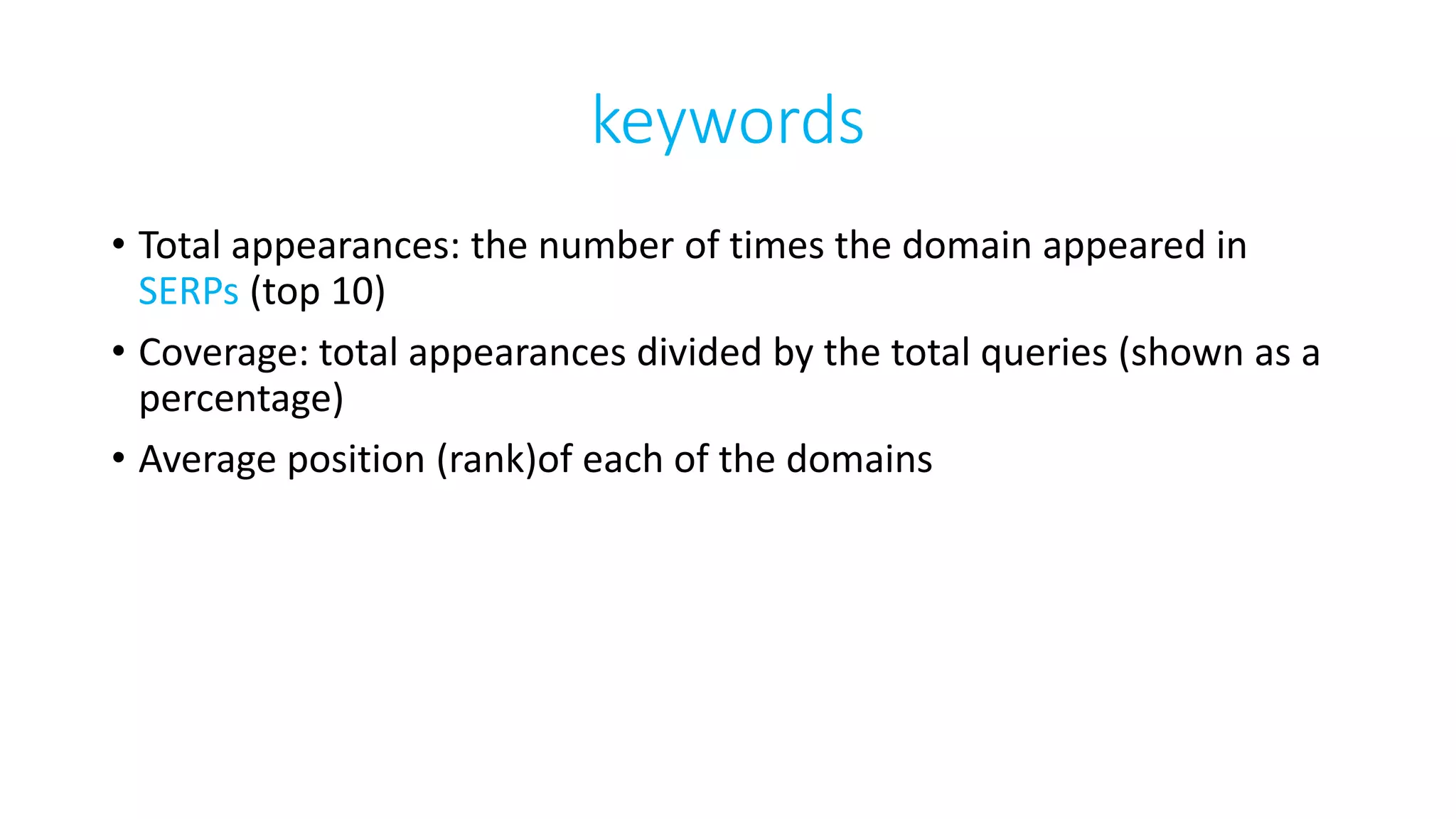

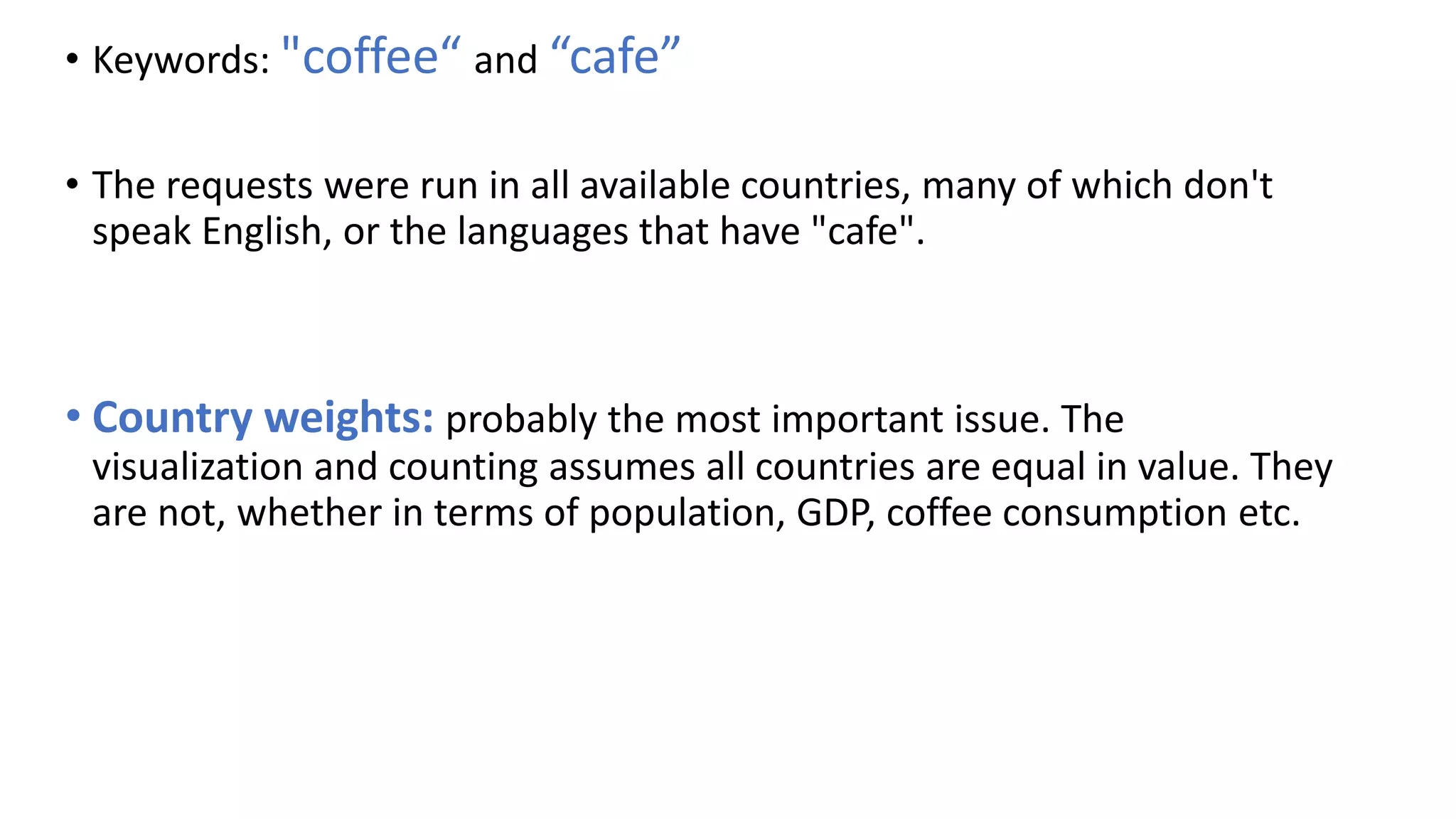

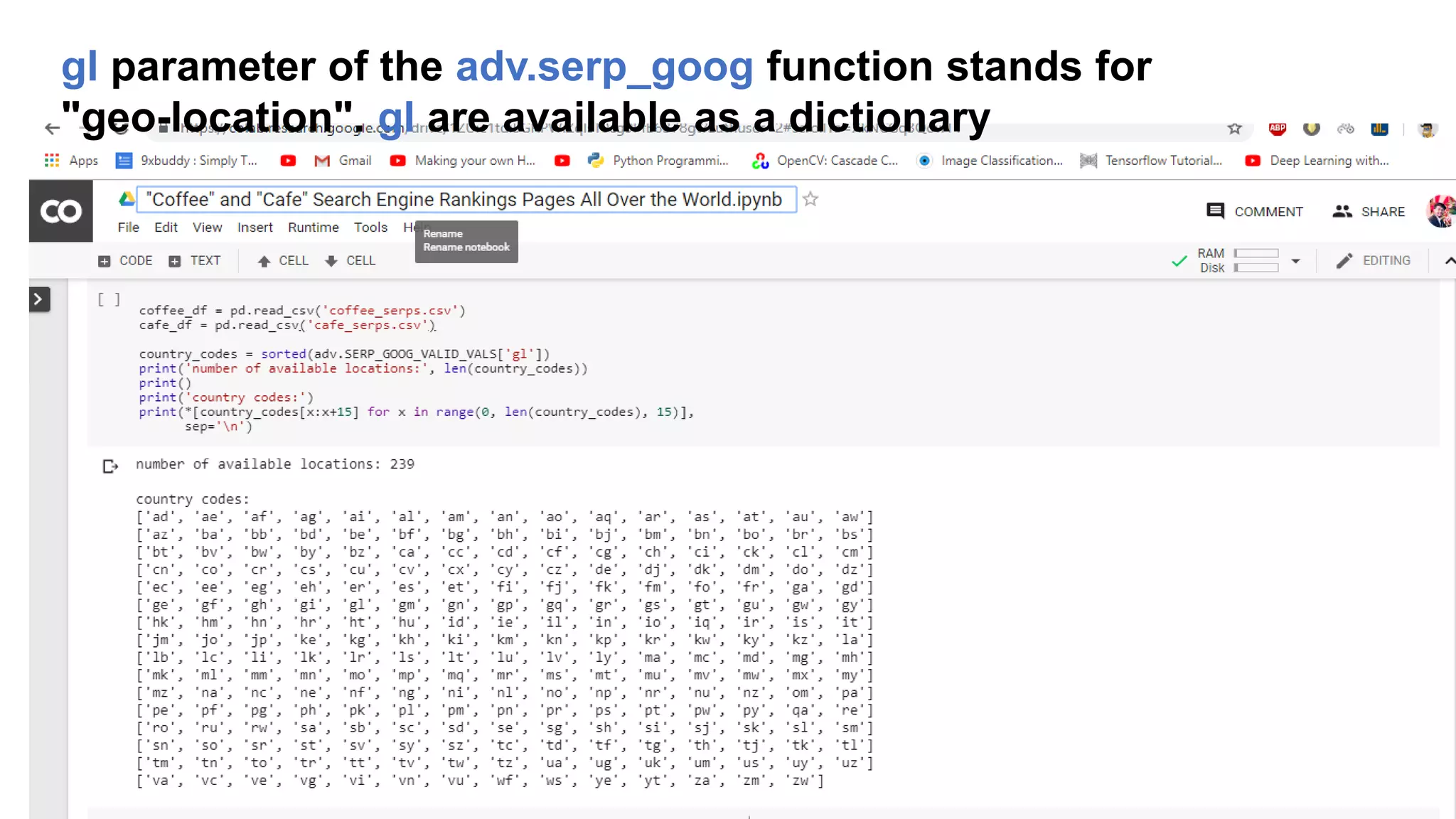

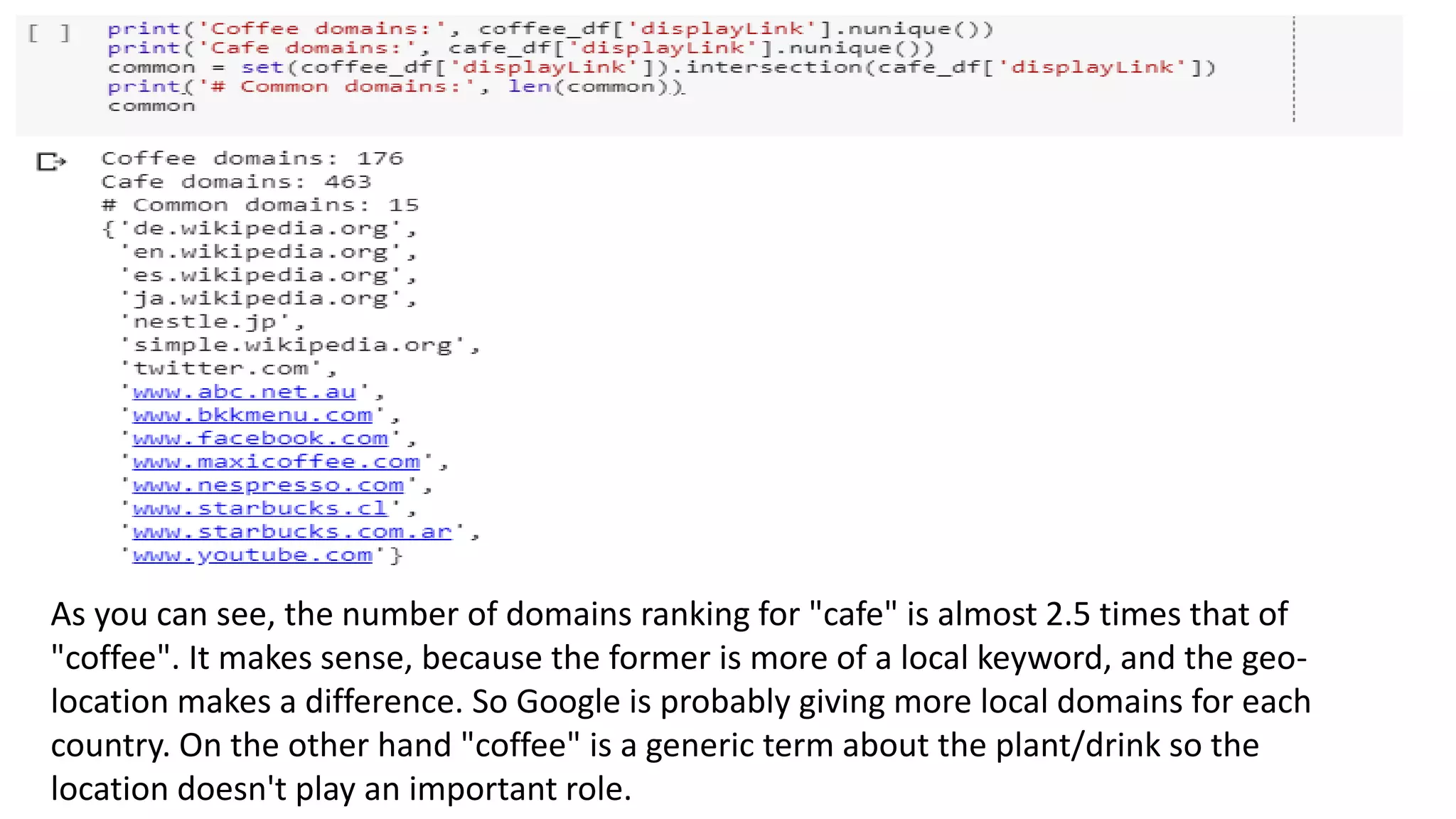

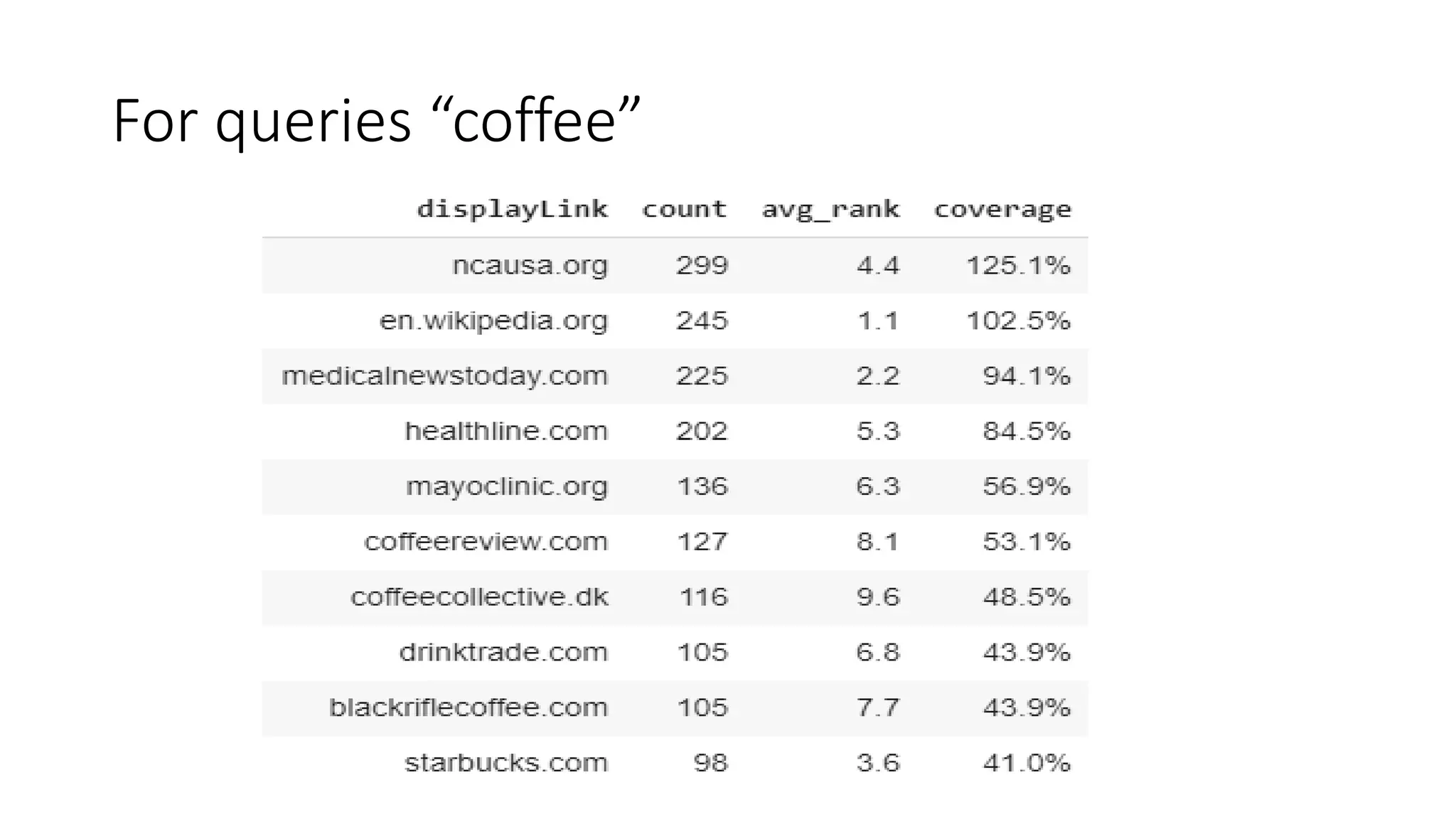

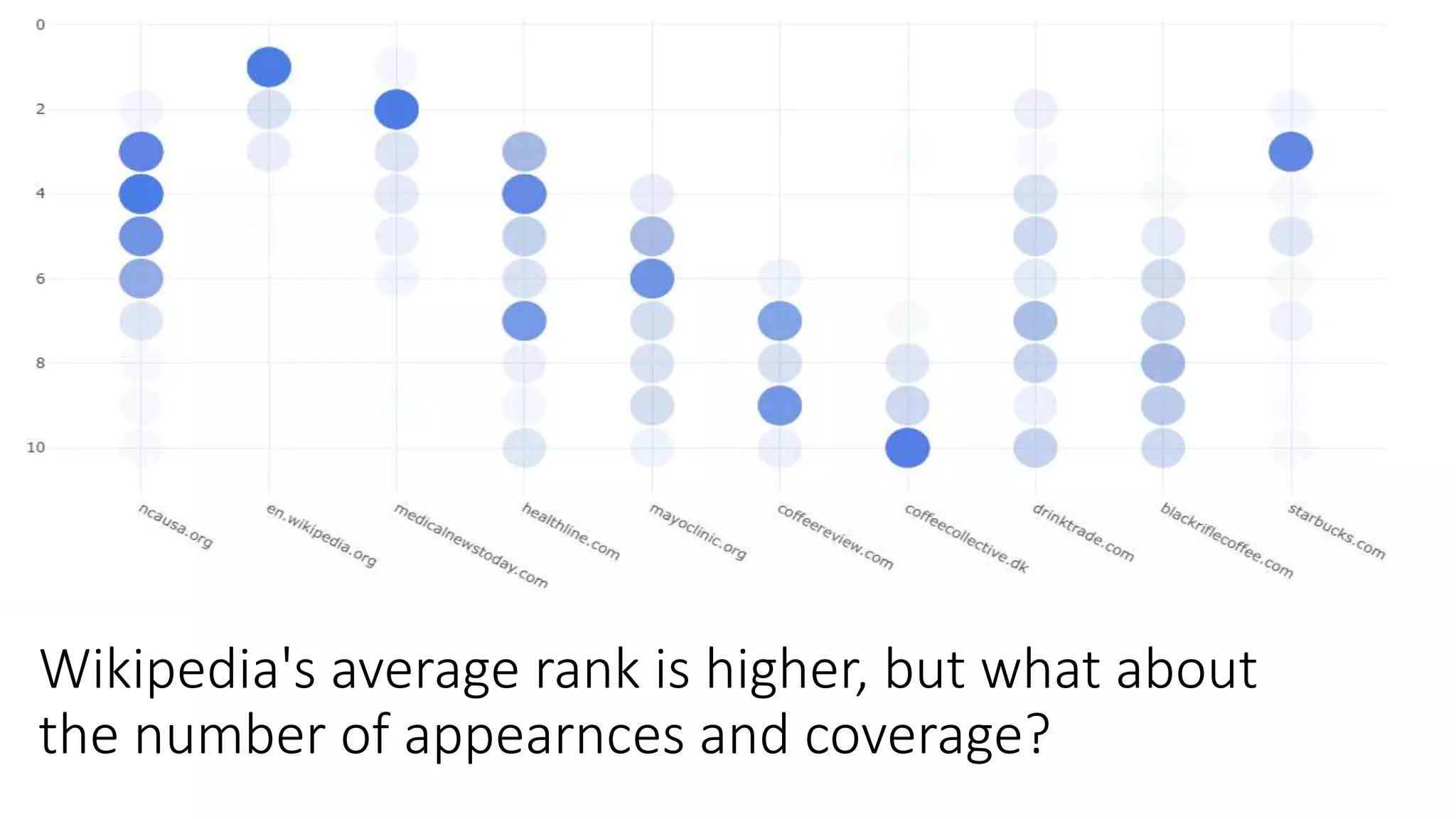

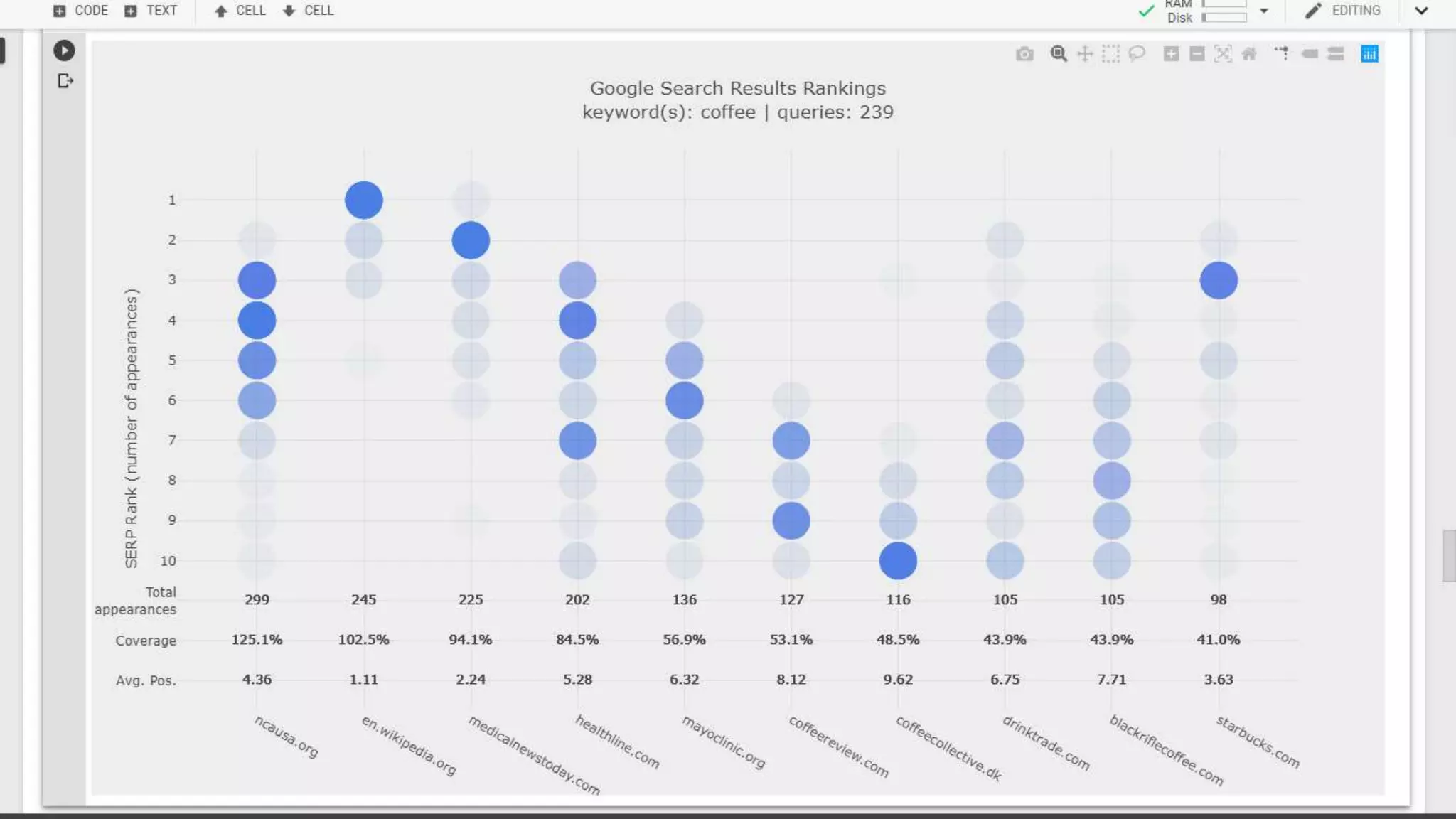

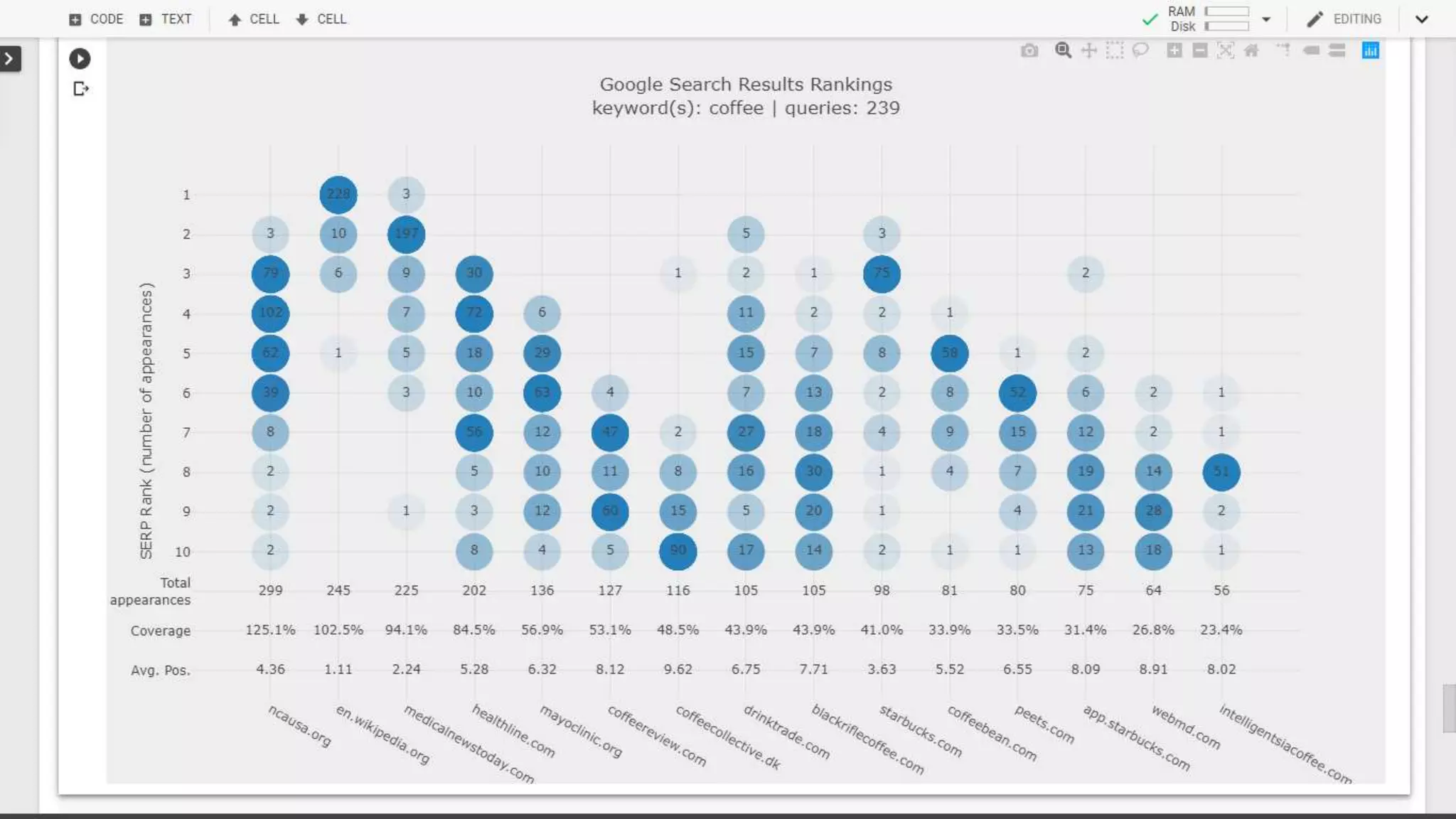

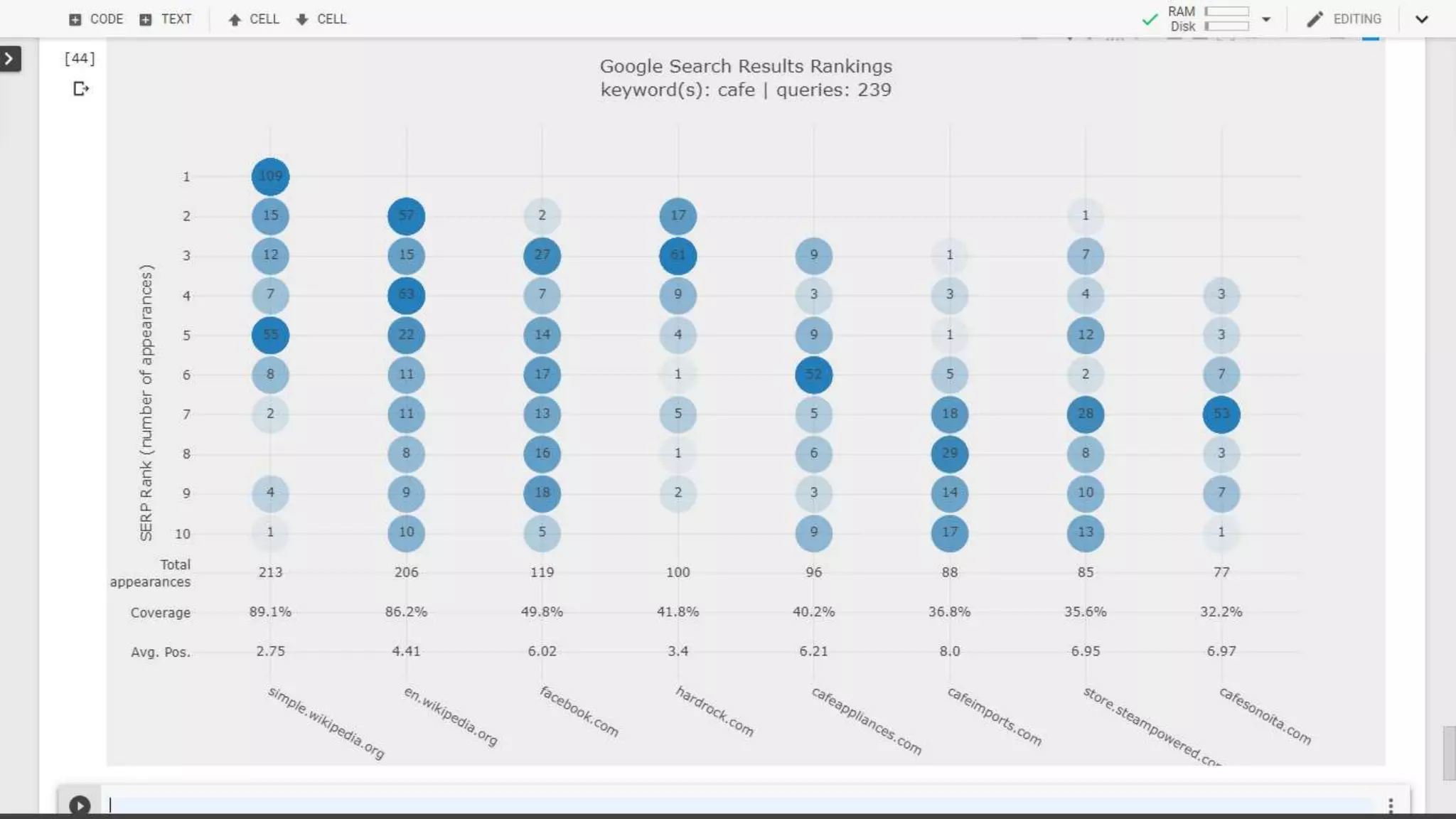

The document traces the evolution of search engine ranking techniques and stresses the need for frameworks that let search implementations make effective use of big data. It covers the factors that shape the quality of search engine results pages (SERPs): organic and sponsored listings, metrics for evaluating web pages, and the role of geo-location in keyword searches. It also points out that valuable external data remains underused in search algorithms because no inclusive framework exists to incorporate it.

![Closing slide: Have any queries?](https://image.slidesharecdn.com/analyzingsearchengineresultspages-190717182834/75/Analyzing-search-engine-results-pages-SERPs-All-over-the-worlds-21-2048.jpg)

Have any queries? Don’t ask me. Search yourself.

References:

- [1] https://www.semrush.com/blog/analyzing-search-engine-results-pages/
- [2] https://www.kaggle.com/eliasdabbas/coffee-and-cafe-search-engine-rankings-on-google