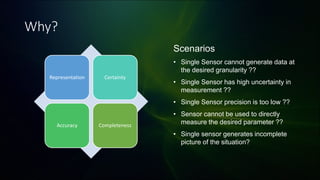

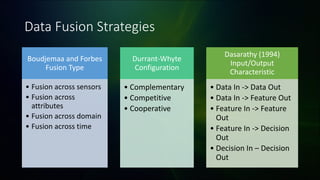

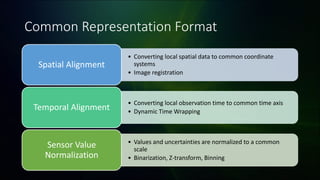

The document introduces sensor fusion, motivating it by the limitations of relying on a single sensor, such as high uncertainty and low precision. It discusses several data fusion strategies, including fusion across sensors, attributes, domains, and time, and considers the input and output characteristics of the fusion process. It also outlines a common representation format, which prepares data for fusion by normalizing values and aligning measurements in space and time.
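
To make the idea of a common representation format more concrete, the following is a minimal sketch in Python of two of its steps: value normalization and temporal alignment. The function names (`normalize`, `align`), the min-max scaling, the linear interpolation, and the sample readings are all assumptions chosen for illustration and do not come from the document. Spatial alignment is not covered here.

```python
from bisect import bisect_left


def normalize(values, lo, hi):
    """Min-max scale raw readings into [0, 1].

    `lo` and `hi` are the sensor's measurement range, assumed to be known.
    """
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return [(v - lo) / (hi - lo) for v in values]


def align(timestamps, values, common_times):
    """Resample one sensor's samples onto a shared time base.

    Uses linear interpolation between neighbouring samples and holds the
    first or last value outside the sampled interval. `timestamps` must be
    strictly increasing.
    """
    aligned = []
    for t in common_times:
        i = bisect_left(timestamps, t)
        if i == 0:
            aligned.append(values[0])
        elif i == len(timestamps):
            aligned.append(values[-1])
        else:
            t0, t1 = timestamps[i - 1], timestamps[i]
            v0, v1 = values[i - 1], values[i]
            aligned.append(v0 + (v1 - v0) * (t - t0) / (t1 - t0))
    return aligned


# Hypothetical readings: two sensors with different units and sample times.
common_times = [0.5, 1.0, 1.5]

a_times, a_raw = [0.0, 1.0, 2.0], [20.0, 30.0, 40.0]  # range assumed 0..100
b_times, b_raw = [0.5, 1.5], [256.0, 512.0]            # range assumed 0..1024

a_common = align(a_times, normalize(a_raw, 0.0, 100.0), common_times)
b_common = align(b_times, normalize(b_raw, 0.0, 1024.0), common_times)

# Both series now share one scale and one time base, so they can be
# compared or combined sample by sample.
for t, a, b in zip(common_times, a_common, b_common):
    print(f"t={t:.1f}  a={a:.3f}  b={b:.3f}")
```

Once both series share the same scale and the same timestamps, a fusion step can operate on them element-wise. The choice of min-max scaling and linear interpolation is only one option; other normalizations or resampling schemes may suit a given sensor better.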