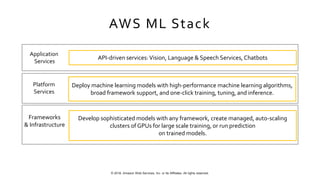

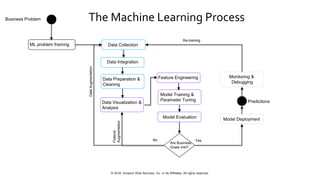

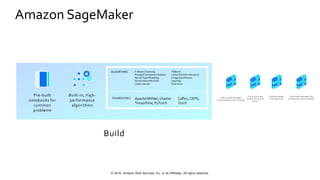

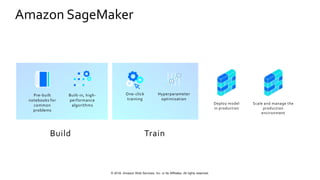

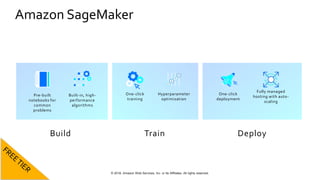

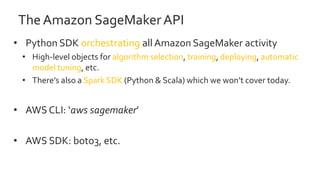

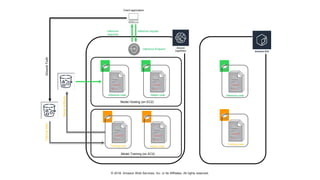

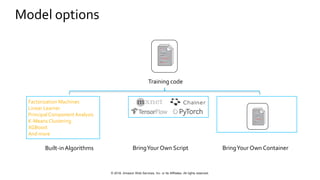

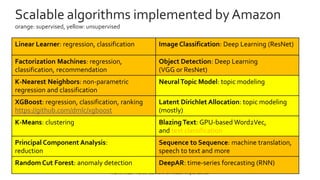

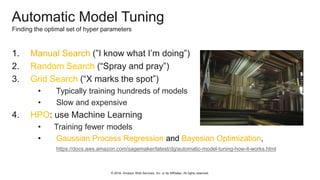

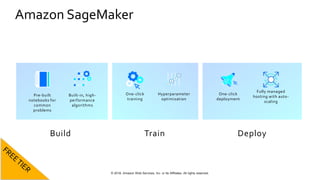

This document introduces Amazon SageMaker, a fully managed platform for building, training, and deploying machine learning models. SageMaker provides several key capabilities: built-in algorithms for common ML tasks, support for bringing your own algorithms or training scripts, hyperparameter tuning to optimize models, and managed hosting for deploying trained models. The document outlines the ML workflow that SageMaker aims to simplify and includes demos of XGBoost and BlazingText applied to text classification on SageMaker.
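As a small illustration of the text classification demo mentioned above: BlazingText in supervised mode reads training data as plain text, one example per line, with each label prefixed by `__label__` and followed by space-separated tokens. The sketch below shows one way to produce that format. The helper names and sample rows are hypothetical, and the lowercasing and whitespace tokenization are simplifying assumptions rather than the preprocessing used in the original demo.

```python
from typing import Iterable, List, Tuple


def to_blazingtext_line(label: str, text: str) -> str:
    """Format one example as '__label__<label> tok1 tok2 ...'.

    Assumption: lowercase + whitespace split stands in for a real tokenizer.
    """
    # Labels must be a single token, so replace inner spaces with underscores.
    clean_label = label.strip().replace(" ", "_")
    tokens = text.lower().split()
    return f"__label__{clean_label} " + " ".join(tokens)


def to_blazingtext_lines(rows: Iterable[Tuple[str, str]]) -> List[str]:
    """Convert (label, text) pairs into BlazingText supervised-mode lines."""
    return [to_blazingtext_line(label, text) for label, text in rows]


if __name__ == "__main__":
    # Hypothetical sample data, for illustration only.
    sample = [
        ("positive", "Great product, works well"),
        ("negative", "Stopped working after a week"),
    ]
    for line in to_blazingtext_lines(sample):
        print(line)
```

The resulting lines would typically be written to a text file and uploaded to Amazon S3 as the training channel for a BlazingText training job.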