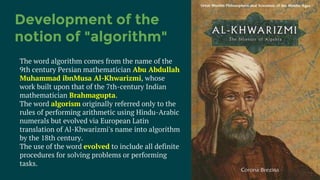

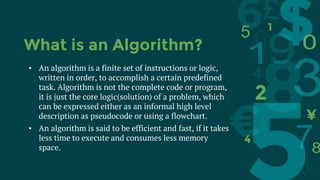

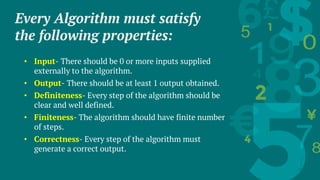

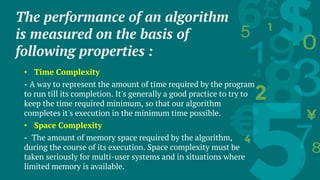

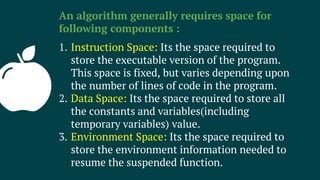

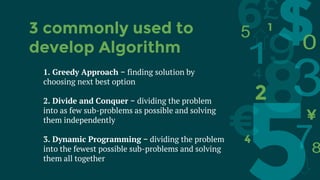

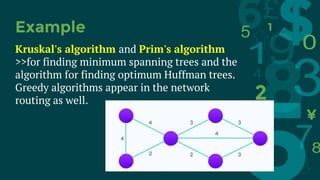

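This document discusses algorithms. It begins by defining an algorithm and its key properties: an algorithm takes inputs, produces outputs, follows well-defined steps, terminates after a finite number of steps, and is correct, producing the right output for every valid input. It then explains how algorithm performance is measured through time complexity (how running time grows with input size) and space complexity (how memory use grows with input size). It also introduces common algorithm design approaches: greedy algorithms make the locally optimal choice at each step; divide-and-conquer algorithms break a problem into smaller subproblems, solve them recursively, and combine the results; and dynamic programming avoids redundant recursive computation by storing the solutions to overlapping subproblems. Examples are provided for each approach.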
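As an illustration of the greedy approach (a standard textbook example, not necessarily the one used in the slides), here is a minimal sketch of activity selection. At each step it takes the compatible activity that finishes earliest. For this particular problem that locally optimal choice is known to yield a maximum-size set of non-overlapping activities. The function name `select_activities` and the sample data are hypothetical.

```python
def select_activities(intervals):
    """Greedy activity selection (hypothetical helper).

    intervals: list of (start, end) pairs.
    Returns a maximum-size list of mutually non-overlapping intervals.
    """
    chosen = []
    last_end = float("-inf")
    # Consider activities in order of finish time; picking the earliest
    # finisher is the locally optimal (greedy) choice.
    for start, end in sorted(intervals, key=lambda iv: iv[1]):
        if start >= last_end:  # compatible with every activity chosen so far
            chosen.append((start, end))
            last_end = end
    return chosen


activities = [(1, 4), (3, 5), (0, 6), (5, 7), (3, 9), (5, 9),
              (6, 10), (8, 11), (8, 12), (2, 14), (12, 16)]
print(select_activities(activities))  # [(1, 4), (5, 7), (8, 11), (12, 16)]
```

Sorting dominates the cost, so the sketch runs in O(n log n) time. A greedy strategy is not optimal for every problem. Its correctness has to be argued separately for each problem it is applied to.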
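Divide-and-conquer can be illustrated with merge sort (again a common example, assumed here rather than taken from the slides). The list is split in half, each half is sorted recursively, and the two sorted halves are merged in linear time. The result is O(n log n) time and O(n) extra space, which ties back to the time and space complexity measures described above.

```python
def merge_sort(items):
    """Divide and conquer: split, sort each half recursively, then merge."""
    # Base case: a list of zero or one element is already sorted.
    if len(items) <= 1:
        return list(items)

    # Divide: split the input into two halves and conquer each recursively.
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])

    # Combine: merge the two sorted halves in linear time.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:  # "<=" keeps equal elements in order (stable)
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # At most one of these still has elements left.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # [3, 9, 10, 27, 38, 43, 82]
```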
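Dynamic programming can be sketched with Fibonacci numbers (an assumed illustration, not necessarily the slides' example). Plain recursion recomputes the same subproblems exponentially many times. Storing each subproblem's solution, either top-down with a cache or bottom-up with a running table, reduces the cost to O(n) time. The function names below are hypothetical.

```python
from functools import lru_cache


def fib_naive(n):
    # Plain recursion: fib_naive(n - 2) is recomputed inside fib_naive(n - 1),
    # so the number of calls grows exponentially with n.
    return n if n < 2 else fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n):
    # Top-down DP (memoization): same recurrence, but each fib_memo(k)
    # is computed once and then served from the cache, giving O(n) time.
    return n if n < 2 else fib_memo(n - 1) + fib_memo(n - 2)


def fib_table(n):
    # Bottom-up DP: build solutions from the base cases upward.
    # Only the last two values are needed, so extra space is O(1).
    prev, curr = 0, 1
    for _ in range(n):
        prev, curr = curr, prev + curr
    return prev


print(fib_naive(20))  # 6765
print(fib_memo(50))   # 12586269025
print(fib_table(50))  # 12586269025
```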