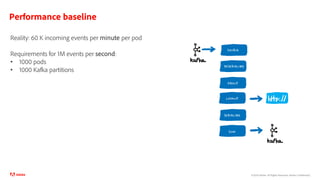

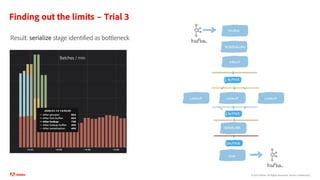

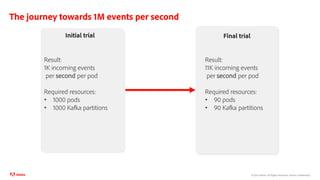

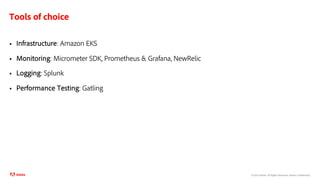

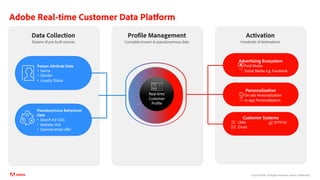

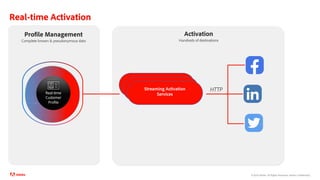

The document discusses Adobe's implementation of Akka Streams for real-time customer data processing, emphasizing the importance of handling high throughput while managing resources and errors effectively. It describes the architecture and workflows for processing data, including the integration of various sources and sinks, as well as performance tuning strategies to achieve significant scaling. A performance case study highlights the challenges and results of optimizing the system to handle 1 million events per second.

![©2020 Adobe. All Rights Reserved. Adobe Confidential.

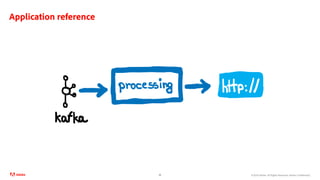

SOURCE[Message, NotUsed]

FLOW[Message, ProfileUpdate, NotUsed]

SINK[ProfileUpdate, Future[Done]]](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-12-320.jpg)

![

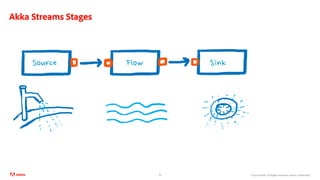

SOURCE[Message, NotUsed]

SINK[ProfileUpdate, Future[Done]]

FLOW[Message, Either[ValidationError, ProfileUpdate], NotUsed]

SINK[ValidationError, NotUsed]](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-13-320.jpg)

![

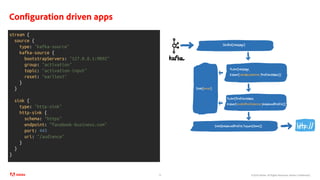

SOURCE[Message]

FLOW[Message, Either[ValidationError, ProfileUpdate]]

SINK[Error]

FLOW[ProfileUpdate, Either[InvalidProfileError, EnhancedProfile]]

SINK[EnhancedProfile]](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-14-320.jpg)

![©2020 Adobe. All Rights Reserved. Adobe Confidential.

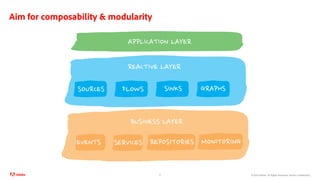

From whiteboard to code

15

def stream(source: Source[M, NotUsed],
           deserialize: Flow[M, Either[E, P], NotUsed],
           enhance: Flow[P, Either[E, EP], NotUsed],
           sinkError: Sink[E, Future[Done]],
           httpSink: Sink[EP, Future[Done]]): Future[Done] = {
  // Each stage sends its Left values to sinkError and passes Right values downstream.
  val stream: RunnableGraph[Future[Done]] =
    source
      .via(deserialize via divertErrors(sinkError))
      .via(enhance via divertErrors(sinkError))
      .toMat(httpSink)(Keep.right)

  RunnableGraph.fromGraph(stream).run()
}](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-15-320.jpg)
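
The slide calls `divertErrors(sinkError)` without showing its definition. The following is a minimal sketch of how such a helper could be written with Akka Streams' `divertTo`, and not necessarily how the speakers implemented it. The object name, the `parse` flow and the sample input are assumptions made for illustration.

```scala
import akka.NotUsed
import akka.actor.ActorSystem
import akka.stream.scaladsl.{Flow, Sink, Source}
import scala.concurrent.Await
import scala.concurrent.duration._
import scala.util.Try

object DivertErrorsSketch {
  // Hypothetical helper: routes every Left to errorSink and lets Right values continue.
  def divertErrors[E, A](errorSink: Sink[E, Any]): Flow[Either[E, A], A, NotUsed] = {
    // Adapt the error sink so it accepts the Either and unwraps the Left side.
    val lefts: Sink[Either[E, A], NotUsed] =
      Flow[Either[E, A]].collect { case Left(e) => e }.to(errorSink)
    Flow[Either[E, A]]
      .divertTo(lefts, _.isLeft)
      .collect { case Right(a) => a }
  }

  // Runs a tiny pipeline and returns (valid values, diverted errors).
  def run(): (Seq[Int], Seq[String]) = {
    implicit val system: ActorSystem = ActorSystem("divert-errors-sketch")
    try {
      // Stand-in for the deserialize flow: parse a string or report an error.
      val parse: Flow[String, Either[String, Int], NotUsed] =
        Flow[String].map(s => Try(s.toInt).toEither.left.map(_ => s"not a number: $s"))
      // Pre-materialize the error sink so its collected elements can be read back.
      val (errors, errorSink) = Sink.seq[String].preMaterialize()
      val values = Source(List("1", "x", "3"))
        .via(parse.via(divertErrors[String, Int](errorSink)))
        .runWith(Sink.seq)
      (Await.result(values, 5.seconds), Await.result(errors, 5.seconds))
    } finally system.terminate()
  }
}
```

With this shape, each stage's return type still advertises that it can fail (`Either[E, A]`), while everything after `divertErrors` only sees successfully processed elements.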

![©2020 Adobe. All Rights Reserved. Adobe Confidential.

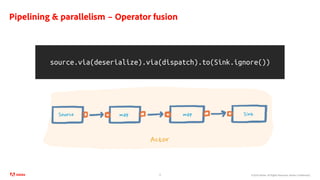

Pipelining & parallelism– mapAsync(parallelism: Int)

21

source.via(deserialize).async.via(dispatch).to(Sink.ignore())

Source map mapAsync(2) Sink

Actor 1 Actor 2

Future[T]](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-21-320.jpg)
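
As a rough illustration of the slide's two ideas, the sketch below places an `.async` boundary after the deserialization step, so that the stages before and after it run in separate actors, and then uses `mapAsync(2)` to keep up to two futures in flight. The `deserialize` and `dispatch` definitions are stand-ins invented for this example.

```scala
import akka.NotUsed
import akka.actor.ActorSystem
import akka.stream.scaladsl.{Flow, Sink, Source}
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object PipeliningSketch {
  def run(): Seq[String] = {
    implicit val system: ActorSystem = ActorSystem("pipelining-sketch")
    import system.dispatcher
    try {
      // Hypothetical stand-ins for the talk's deserialize and dispatch steps.
      val deserialize: Flow[String, Int, NotUsed] = Flow[String].map(_.trim.toInt)
      def dispatch(n: Int): Future[String] = Future(s"sent-$n")

      val results = Source(List(" 1", "2 ", " 3 "))
        .via(deserialize)
        .async                               // stages above and below run in different actors
        .mapAsync(parallelism = 2)(dispatch) // at most two futures in flight, order preserved
        .runWith(Sink.seq)
      Await.result(results, 5.seconds)
    } finally system.terminate()
  }
}
```

The async boundary gives pipelining (deserialization of the next element overlaps with dispatch of the previous one), while `mapAsync` gives parallelism within the dispatch step itself.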

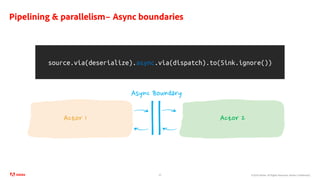

![Pipelining & parallelism: mapAsync vs mapAsyncUnordered

mapAsync(3)

Future[T]

(diagram: elements 1 to 9 passing through the stage)](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-22-320.jpg)

![Pipelining & parallelism: mapAsync vs mapAsyncUnordered

mapAsync(3)

Future[T]

mapAsyncUnordered(3)

Future[T]

(diagram: elements 1 to 9 passing through each stage; in the mapAsyncUnordered row, 5 appears ahead of 4)](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-23-320.jpg)
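
The difference between the two operators can be sketched with a call whose latency varies per element. `mapAsync` emits results in upstream order even if a later future finishes first, whereas `mapAsyncUnordered` emits each result as soon as its future completes. The delays and the `slowCall` function below are made up for the example.

```scala
import akka.actor.ActorSystem
import akka.pattern.after
import akka.stream.scaladsl.{Sink, Source}
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object OrderingSketch {
  // Returns (results via mapAsync, results via mapAsyncUnordered).
  def run(): (Seq[Int], Seq[Int]) = {
    implicit val system: ActorSystem = ActorSystem("ordering-sketch")
    import system.dispatcher
    try {
      // Hypothetical latencies: element 1 is by far the slowest call.
      val delays = Map(1 -> 500.millis, 2 -> 50.millis, 3 -> 150.millis)
      def slowCall(n: Int): Future[Int] =
        after(delays(n), system.scheduler)(Future.successful(n))

      // Upstream order is kept: 2 and 3 wait behind 1.
      val ordered = Source(List(1, 2, 3)).mapAsync(3)(slowCall).runWith(Sink.seq)
      // Completion order is kept: the slow element 1 typically arrives last.
      val unordered = Source(List(1, 2, 3)).mapAsyncUnordered(3)(slowCall).runWith(Sink.seq)

      (Await.result(ordered, 5.seconds), Await.result(unordered, 5.seconds))
    } finally system.terminate()
  }
}
```

When downstream does not depend on ordering, `mapAsyncUnordered` avoids one slow call holding back results that are already available.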

![Pipelining & parallelism: Internal buffers

mapAsync(3)

Future[T]

# Default max size of buffers used in stream elements
max-input-buffer-size = 16

// mapAsync expects a function that returns a Future, not a Future value.
val dispatch: T => Future[T] = ???

Flow[T]
  .buffer(bufferSize, OverflowStrategy.backpressure)
  .mapAsync(parallelism)(dispatch)](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-24-320.jpg)
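
A hedged sketch of the two buffering knobs the slide touches on: an explicit `buffer` stage placed in front of `mapAsync`, and the per-section input buffer that applies at an async boundary, set here through `Attributes.inputBuffer` instead of the global `max-input-buffer-size` setting. The sizes and the `dispatch` function are illustrative assumptions, not the values used in the talk.

```scala
import akka.NotUsed
import akka.actor.ActorSystem
import akka.stream.{Attributes, OverflowStrategy}
import akka.stream.scaladsl.{Flow, Sink, Source}
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object BufferSketch {
  def run(): Seq[Int] = {
    implicit val system: ActorSystem = ActorSystem("buffer-sketch")
    import system.dispatcher
    try {
      // Hypothetical asynchronous call.
      val dispatch: Int => Future[Int] = n => Future(n * 2)

      val dispatchSection: Flow[Int, Int, NotUsed] =
        Flow[Int]
          // Explicit buffer: absorbs bursts, then backpressures upstream when full.
          .buffer(64, OverflowStrategy.backpressure)
          .mapAsync(4)(dispatch)
          // Run this section in its own actor and size its input buffer explicitly.
          .async
          .addAttributes(Attributes.inputBuffer(initial = 16, max = 16))

      val results = Source(1 to 5).via(dispatchSection).runWith(Sink.seq)
      Await.result(results, 5.seconds)
    } finally system.terminate()
  }
}
```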

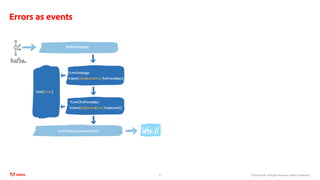

![Errors as events: is it enough?

def source: Source[Either[E, I], NotUsed] =
  Source.fromGraph(new GraphStage[SourceShape[Either[E, I]]] {
    // Simplified for the slide: a complete GraphStage also defines shape and createLogic.
    override def onPull(): Unit = kafkaConsumer.fetch() match {
      case Success(i) => push(out, Right(i))
      case Failure(t) => push(out, Left(PipelineSourceError))
    }
  })](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-26-320.jpg)
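
The slide abbreviates the custom stage. For reference, here is one way the full `GraphStage` boilerplate could look, as a sketch under assumptions: the `Consumer` trait, the `PipelineSourceError` case class and the scripted fetch results are hypothetical stand-ins for the real Kafka consumer.

```scala
import akka.actor.ActorSystem
import akka.stream.{Attributes, Outlet, SourceShape}
import akka.stream.scaladsl.{Sink, Source}
import akka.stream.stage.{GraphStage, GraphStageLogic, OutHandler}
import scala.concurrent.Await
import scala.concurrent.duration._
import scala.util.{Failure, Success, Try}

object ErrorsAsEventsSketch {
  // Hypothetical consumer abstraction standing in for the real client.
  trait Consumer[I] { def fetch(): Try[I] }
  final case class PipelineSourceError(cause: Throwable)

  // Source stage that reports fetch failures as Left elements instead of failing.
  final class EitherSource[I](consumer: Consumer[I])
      extends GraphStage[SourceShape[Either[PipelineSourceError, I]]] {
    val out: Outlet[Either[PipelineSourceError, I]] = Outlet("EitherSource.out")
    override val shape: SourceShape[Either[PipelineSourceError, I]] = SourceShape(out)

    override def createLogic(attrs: Attributes): GraphStageLogic =
      new GraphStageLogic(shape) {
        setHandler(out, new OutHandler {
          // Called whenever downstream signals demand.
          override def onPull(): Unit = consumer.fetch() match {
            case Success(i) => push(out, Right(i))
            case Failure(t) => push(out, Left(PipelineSourceError(t)))
          }
        })
      }
  }

  def run(): Seq[Either[PipelineSourceError, Int]] = {
    implicit val system: ActorSystem = ActorSystem("errors-as-events-sketch")
    try {
      // Scripted results: success, failure, success, then failures forever.
      val scripted = Iterator[Try[Int]](Success(1), Failure(new RuntimeException("boom")), Success(3))
      val consumer = new Consumer[Int] {
        def fetch(): Try[Int] =
          if (scripted.hasNext) scripted.next() else Failure(new NoSuchElementException("drained"))
      }
      Await.result(Source.fromGraph(new EitherSource(consumer)).take(3).runWith(Sink.seq), 5.seconds)
    } finally system.terminate()
  }
}
```

Note that the stage keeps polling after a failure: a permanently broken consumer would produce an endless run of `Left` elements, which is one reading of the slide's question "is it enough?".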

![Let the errors be

def source: Source[I, NotUsed] =
  Source.fromGraph(new GraphStage[SourceShape[I]] {
    override def onPull(): Unit = pipelineConsumer.fetch() match {
      case Success(i) => push(out, i)
      case Failure(t) => throw PipelineSourceError
    }
  })](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-27-320.jpg)

![Let the errors be, but supervised

def source: Source[I, NotUsed] =
  Source.fromGraph(new GraphStage[SourceShape[I]] {
    override def onPull(): Unit = pipelineConsumer.fetch() match {
      case Success(i) => push(out, i)
      case Failure(t) => throw PipelineSourceError
    }
  })

// withBackoff also takes a randomFactor (jitter) between maxBackoff and maxRestarts.
def supervisedSource: Source[I, NotUsed] =
  RestartSource.withBackoff(minBackoff, maxBackoff, randomFactor, maxRestarts) { () => source }

https://doc.akka.io/docs/akka/current/stream/stream-error.html#supervision-strategies](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-28-320.jpg)
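
To make the restart behaviour concrete, the sketch below wraps a deliberately flaky source in `RestartSource.withBackoff`. It uses the `RestartSettings` form of the API (available from Akka 2.6.10), which may differ from the overload the talk used. The failing source, the backoff values and the restart limit are all assumptions chosen for illustration.

```scala
import java.util.concurrent.atomic.AtomicInteger
import akka.NotUsed
import akka.actor.ActorSystem
import akka.stream.RestartSettings
import akka.stream.scaladsl.{RestartSource, Sink, Source}
import scala.concurrent.Await
import scala.concurrent.duration._

object SupervisedSourceSketch {
  def run(): Seq[Int] = {
    implicit val system: ActorSystem = ActorSystem("supervised-source-sketch")
    try {
      val attempts = new AtomicInteger(0)
      // Hypothetical flaky source: fails on its first two materializations.
      def source: Source[Int, NotUsed] =
        if (attempts.incrementAndGet() < 3) Source.failed[Int](new RuntimeException("fetch failed"))
        else Source(List(1, 2, 3))

      val settings = RestartSettings(minBackoff = 10.millis, maxBackoff = 100.millis, randomFactor = 0.2)
        .withMaxRestarts(5, 1.second)

      // The factory is invoked again after each failure, with growing delays.
      val supervisedSource: Source[Int, NotUsed] =
        RestartSource.withBackoff(settings)(() => source)

      // withBackoff also restarts on normal completion, so bound the demo with take.
      Await.result(supervisedSource.take(3).runWith(Sink.seq), 5.seconds)
    } finally system.terminate()
  }
}
```

Because `withBackoff` restarts on completion as well as on failure, `RestartSource.onFailuresWithBackoff` may be the better fit for sources that are expected to finish.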

![Sometimes the stream needs to actually stop

// withBackoff also takes a randomFactor (jitter) between maxBackoff and maxRestarts.
val supervisedSource: Source[I, NotUsed] =
  RestartSource.withBackoff(minBackoff, maxBackoff, randomFactor, maxRestarts) { () => source }

val killSwitch: SharedKillSwitch = KillSwitches.shared("...")

val stream: RunnableGraph[Future[Done]] =
  supervisedSource
    .async
    .via(killSwitch.flow)
    .via(deserialize via divertErrors(sinkError))
    .via(dispatch via divertErrors(sinkError))
    .toMat(Sink.ignore)(Keep.right)

val runningStream: Future[Done] = stream.run()

// Exit the JVM once the stream terminates, whether it completed or failed.
runningStream onComplete { case _ => sys.exit(1) }

// On JVM shutdown, complete the stream through the kill switch.
CoordinatedShutdown(system) addJvmShutdownHook {
  killSwitch.shutdown()
}

https://doc.akka.io/docs/akka/current/stream/stream-dynamic.html#controlling-stream-completion-with-killswitch

When the source fails, we want the stream to stop:

• cancel its upstream
• complete its downstream

Akka Streams Kill Switch:

• complete the stream via shutdown()
• downstream in-flight messages are processed before returning from shutdown()](https://image.slidesharecdn.com/reactive-summit-akka-streams-final-201110195728/85/Akka-Streams-An-Adobe-data-intensive-story-29-320.jpg)
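
A small, self-contained sketch of the kill-switch mechanics described above: an endless source is routed through `killSwitch.flow`, and calling `shutdown()` completes the stream so that its materialized `Future[Done]` succeeds. The tick source and the switch name are placeholders for the example, not the talk's actual pipeline.

```scala
import akka.Done
import akka.actor.ActorSystem
import akka.stream.{KillSwitches, SharedKillSwitch}
import akka.stream.scaladsl.{Sink, Source}
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object KillSwitchSketch {
  def run(): Done = {
    implicit val system: ActorSystem = ActorSystem("kill-switch-sketch")
    try {
      val killSwitch: SharedKillSwitch = KillSwitches.shared("sketch-kill-switch")

      // Endless source: without the kill switch this stream would never complete.
      val runningStream: Future[Done] =
        Source.tick(0.millis, 10.millis, "tick")
          .via(killSwitch.flow)
          .runWith(Sink.ignore)

      // Cancels the upstream of the switch and completes its downstream.
      killSwitch.shutdown()

      Await.result(runningStream, 5.seconds)
    } finally system.terminate()
  }
}
```

A `SharedKillSwitch` can be attached to several streams through repeated use of `killSwitch.flow`, so one `shutdown()` call can stop all of them together.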