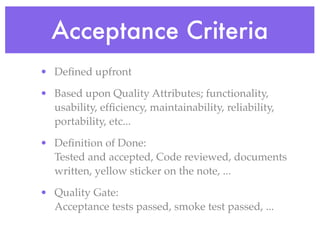

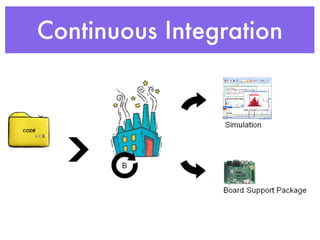

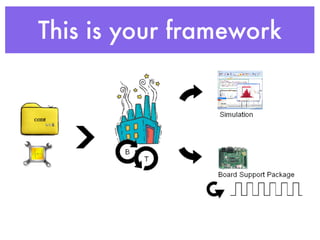

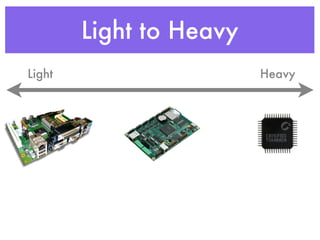

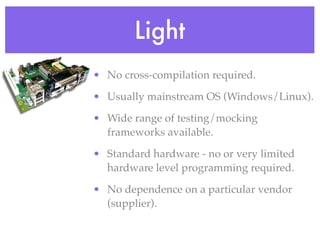

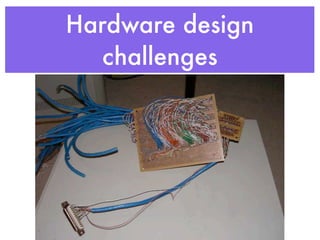

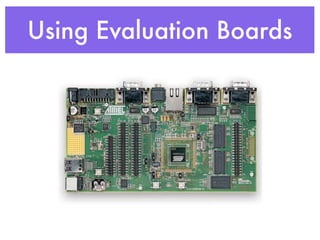

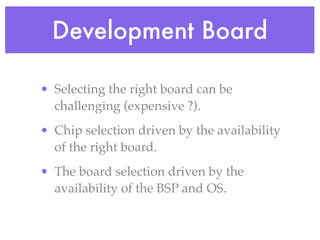

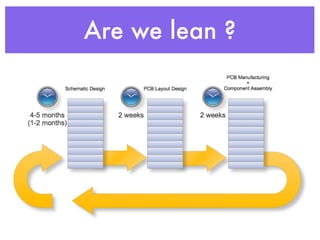

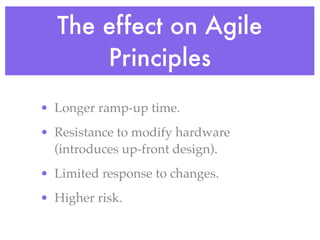

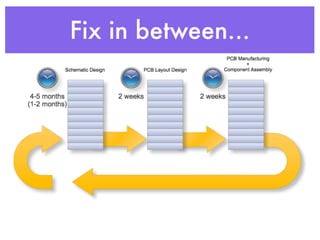

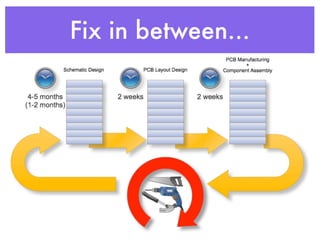

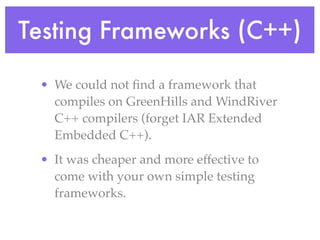

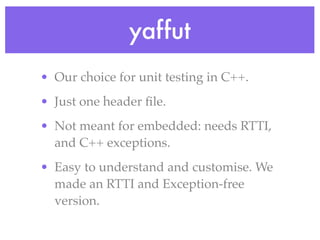

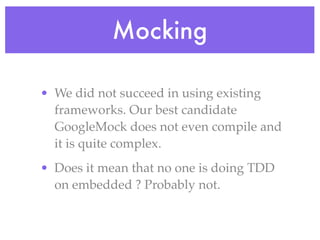

The document discusses test-driven development (TDD) for embedded systems, highlighting how hardware constraints make agile practices difficult to integrate. It emphasizes the need for a structured testing strategy, continuous integration, and effective acceptance criteria to improve software quality in an embedded context. Key insights include the need for experienced testers and the limitations imposed by hardware development, which often hinder the adoption of lean methodologies.