This document summarizes datasets for recognizing human actions in video. It groups them as generic, instructional, ego-centric, compositional, multi-view, or multi-modal, according to the kind of activity recorded and the data modalities provided. For the multi-modal datasets it also distinguishes two label types. Single-label datasets assign one action label to each video. Multi-label datasets temporally localize multiple actions within a video. Highlighted multi-modal datasets include PKU-MMD, NTU RGB+D, MMAct, and HOMAGE. These pair RGB video with additional modalities such as depth, infrared, skeleton or keypoint data, audio, and accelerometer or gyroscope readings.
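The single-label versus multi-label distinction is mostly a difference in annotation shape. A single-label dataset has one label per video. A multi-label dataset has a list of labeled time segments, and those segments may overlap. The minimal Python sketch below shows both target formats. The `Segment` class, the frame-index convention (inclusive start, exclusive end) and the helper names are hypothetical. They do not reproduce the annotation format of any dataset listed here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Segment:
    """One temporally localized action instance (hypothetical annotation format)."""
    label: int        # class index
    start_frame: int  # inclusive
    end_frame: int    # exclusive


def video_level_target(label: int, num_classes: int) -> list[int]:
    """Single-label setting: one one-hot vector for the whole video."""
    target = [0] * num_classes
    target[label] = 1
    return target


def frame_level_targets(
    segments: list[Segment], num_frames: int, num_classes: int
) -> list[list[int]]:
    """Multi-label setting: one multi-hot vector per frame.

    Overlapping segments switch on several classes for the same frame;
    frames covered by no segment stay all-zero (background).
    """
    targets = [[0] * num_classes for _ in range(num_frames)]
    for seg in segments:
        # Clip each segment to the video's frame range before marking it.
        for t in range(max(0, seg.start_frame), min(num_frames, seg.end_frame)):
            targets[t][seg.label] = 1
    return targets


if __name__ == "__main__":
    print(video_level_target(1, num_classes=3))  # [0, 1, 0]
    frames = frame_level_targets(
        [Segment(0, 0, 4), Segment(2, 2, 6)], num_frames=8, num_classes=3
    )
    for t, row in enumerate(frames):
        print(t, row)
```

Take one segment for class 0 over frames 0 to 3 and another for class 2 over frames 2 to 5. Frames 2 and 3 then carry both labels, and frames 6 and 7 carry none. Per-frame multi-hot targets are one common way to supervise temporal localization. Other setups predict segment boundaries directly.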

![2

Action Recognition Datasets

Generic

Kinetics [1]

Charades [2]

ActivityNet [3]

UCF101 [4]

Instructional

YouCook2 [5]

COIN [6]

HowTo100M [7]

[1] Carreira, Joao, et al. "Quo vadis, action recognition? A new model and the Kinetics dataset." CVPR 2017

[2] Sigurdsson, Gunnar A., et al. "Hollywood in homes: Crowdsourcing data collection for activity understanding." ECCV 2016

[3] Caba Heilbron, Fabian, et al. "ActivityNet: A large-scale video benchmark for human activity understanding." CVPR 2015

[4] Soomro, Khurram, et al. "UCF101: A dataset of 101 human actions classes from videos in the wild." arXiv 2012

[5] Zhou, Luowei, et al. "Towards automatic learning of procedures from web instructional videos." AAAI 2018

[6] Tang, Yansong, et al. "COIN: A large-scale dataset for comprehensive instructional video analysis." CVPR 2019

[7] Miech, Antoine, et al. "HowTo100M: Learning a text-video embedding by watching hundred million narrated video clips." ICCV 2019](https://image.slidesharecdn.com/actionrecognitiondatasets-220421022156/85/Action-Recognition-Datasets-pptx-2-320.jpg)

![3

Ego-centric

EPIC Kitchens [1]

Compositional

Action Genome [2]

Something-Something [3]

HOMAGE [4]

[1] Damen, Dima, et al. "Scaling egocentric vision: The EPIC-KITCHENS dataset." ECCV 2018

[2] Ji, Jingwei, et al. "Action Genome: Actions as compositions of spatio-temporal scene graphs." CVPR 2020

[3] Goyal, Raghav, et al. "The 'something something' video database for learning and evaluating visual common sense." ICCV 2017

[4] Rai, Nishant, et al. "Home Action Genome: Cooperative Compositional Action Understanding." CVPR 2021](https://image.slidesharecdn.com/actionrecognitiondatasets-220421022156/85/Action-Recognition-Datasets-pptx-3-320.jpg)

![4

Multi-view

LEMMA [1]

[1] Jia, Baoxiong, et al. "LEMMA: A multi-view dataset for learning multi-agent multi-task activities." ECCV 2020](https://image.slidesharecdn.com/actionrecognitiondatasets-220421022156/85/Action-Recognition-Datasets-pptx-4-320.jpg)

![5

Action Recognition Datasets

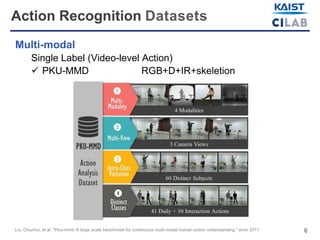

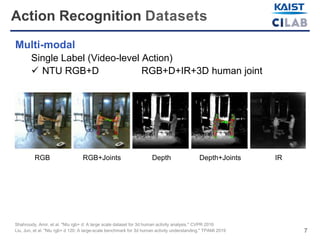

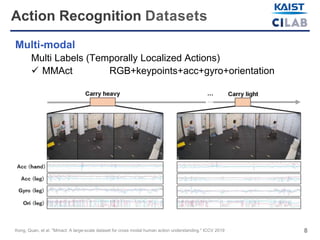

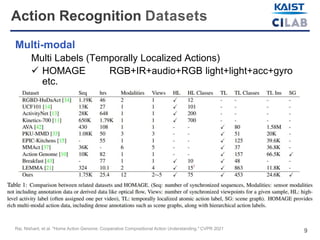

Multi-modal

Single-label (Video-level Actions)

MSR-Action3D [1]: depth map

PKU-MMD [2]: RGB+D+IR+skeleton

NTU RGB+D [3, 4]: RGB+D+IR+3D human joint

Multi-label (Temporally Localized Actions)

MMAct [5]: RGB+keypoints+acc+gyro+orientation

LEMMA [6]: RGB+D

HOMAGE [7]: RGB+IR+audio+RGB light+light+acc+gyro

etc.

[1] Li, Wanqing, et al. "Action recognition based on a bag of 3D points." CVPRW 2010

[2] Liu, Chunhui, et al. "PKU-MMD: A large scale benchmark for continuous multi-modal human action understanding." arXiv 2017

[3] Shahroudy, Amir, et al. "NTU RGB+D: A large scale dataset for 3D human activity analysis." CVPR 2016

[4] Liu, Jun, et al. "NTU RGB+D 120: A large-scale benchmark for 3D human activity understanding." TPAMI 2019

[5] Kong, Quan, et al. "MMAct: A large-scale dataset for cross modal human action understanding." ICCV 2019

[6] Jia, Baoxiong, et al. "LEMMA: A multi-view dataset for learning multi-agent multi-task activities." ECCV 2020

[7] Rai, Nishant, et al. "Home Action Genome: Cooperative Compositional Action Understanding." CVPR 2021](https://image.slidesharecdn.com/actionrecognitiondatasets-220421022156/85/Action-Recognition-Datasets-pptx-5-320.jpg)
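The multi-modal slide pairs each dataset with a label type and a set of modalities. The minimal sketch below encodes that pairing and filters it by required modalities. It assumes the modality strings exactly as written on the slide, so the catalog is not exhaustive, as the slide's "etc." signals. `CATALOG`, `ALIASES`, `parse_modalities` and `find_datasets` are hypothetical names. Mapping "depth map" to "D" is an assumed normalization, and so are the abbreviation expansions in the comments.

```python
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

# Modality strings copied from the multi-modal slide (not an exhaustive list).
# Assumed abbreviation expansions: D = depth, IR = infrared,
# acc = accelerometer, gyro = gyroscope.
CATALOG: Dict[str, Tuple[str, str]] = {
    "MSR-Action3D": ("single", "depth map"),
    "PKU-MMD": ("single", "RGB+D+IR+skeleton"),
    "NTU RGB+D": ("single", "RGB+D+IR+3D human joint"),
    "MMAct": ("multi", "RGB+keypoints+acc+gyro+orientation"),
    "LEMMA": ("multi", "RGB+D"),
    "HOMAGE": ("multi", "RGB+IR+audio+RGB light+light+acc+gyro"),
}

# Hypothetical normalization so that differently worded entries compare equal.
ALIASES: Dict[str, str] = {"depth map": "D"}


def parse_modalities(spec: str) -> FrozenSet[str]:
    """Split a slide-style spec such as 'RGB+D+IR' into normalized modality names."""
    parts = (part.strip() for part in spec.split("+"))
    return frozenset(ALIASES.get(part, part) for part in parts)


def find_datasets(required: Set[str], label_type: Optional[str] = None) -> List[str]:
    """Return, sorted by name, the datasets that provide every modality in `required`.

    `label_type` may be "single" (video-level labels) or "multi"
    (temporally localized labels); None means no restriction.
    """
    hits = []
    for name, (kind, spec) in CATALOG.items():
        if label_type is not None and kind != label_type:
            continue
        if required <= parse_modalities(spec):
            hits.append(name)
    return sorted(hits)


if __name__ == "__main__":
    print(find_datasets({"D"}))  # ['LEMMA', 'MSR-Action3D', 'NTU RGB+D', 'PKU-MMD']
    print(find_datasets({"acc", "gyro"}))  # ['HOMAGE', 'MMAct']
    print(find_datasets({"IR"}, label_type="multi"))  # ['HOMAGE']
```

Without the alias step, a query for depth would miss MSR-Action3D, because the slide words its modality differently from the other entries. This is why the sketch normalizes modality names before taking the subset test.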