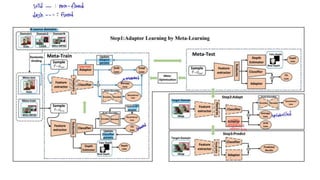

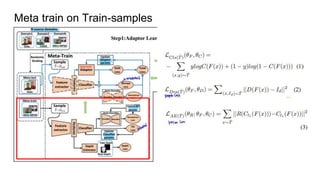

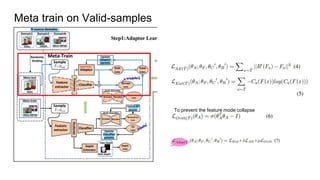

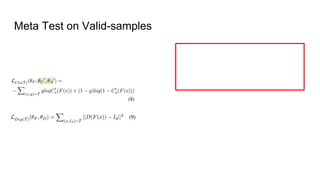

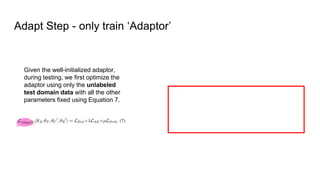

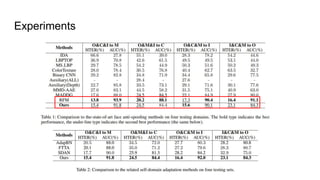

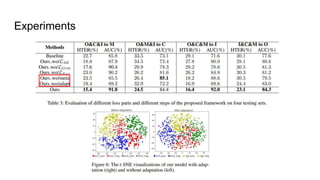

The document proposes a self-domain adaptation framework that exploits unlabeled target-domain data at inference time. For training, it introduces meta-learning-based adaptor learning, in which the adaptor is initialized by meta-learning over multiple source domains. At test time, only the adaptor is trained further on the unlabeled target data while all other parameters stay fixed, which adapts the model to the target domain. The authors conclude that the approach provides effective adaptor learning and loss functions for adaptation under unsupervised conditions.
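The test-time stage can be illustrated with a minimal sketch. It rests on several assumptions that do not come from the document. The model is a toy binary classifier whose feature extractor (the identity here) and classifier weights `W`, `C` are frozen. The adaptor is a per-dimension residual scale and shift, and zeros make it the identity map. Plain entropy minimization stands in for the paper's unsupervised losses, which are not reproduced here. All names (`logit`, `mean_entropy`, `adapt`) are hypothetical. The zero initialization is a placeholder for the meta-learned one. The sketch shows only the key mechanic: gradients flow into the adaptor alone, computed from unlabeled target samples.

```python
import math

# Frozen parameters, assumed already trained on the source domains (hypothetical values).
W = [1.0, -1.0]  # frozen classifier weights
C = 0.0          # frozen classifier bias


def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))


def binary_entropy(p: float) -> float:
    eps = 1e-12  # guards against log(0)
    return -(p * math.log(p + eps) + (1.0 - p) * math.log(1.0 - p + eps))


def logit(x, scale, shift):
    # Frozen feature extractor: the identity map in this toy example.
    z = list(x)
    # Adaptor: per-dimension residual scale and shift; all zeros = identity.
    z_adapted = [(1.0 + a) * zj + b for zj, a, b in zip(z, scale, shift)]
    return sum(w * zj for w, zj in zip(W, z_adapted)) + C


def mean_entropy(xs, scale, shift):
    return sum(binary_entropy(sigmoid(logit(x, scale, shift))) for x in xs) / len(xs)


def adapt(xs, scale, shift, lr=0.5, steps=100):
    """Gradient descent on mean entropy, updating ONLY the adaptor parameters."""
    scale, shift = list(scale), list(shift)
    n = len(xs)
    for _ in range(steps):
        g_scale = [0.0] * len(scale)
        g_shift = [0.0] * len(shift)
        for x in xs:
            s = logit(x, scale, shift)
            p = sigmoid(s)
            # For p = sigmoid(s): d(entropy)/ds = -s * p * (1 - p).
            d_s = -s * p * (1.0 - p)
            for j in range(len(W)):
                # ds/d(scale_j) = W_j * z_j, with z = x because features are the identity.
                g_scale[j] += d_s * W[j] * x[j] / n
                # ds/d(shift_j) = W_j
                g_shift[j] += d_s * W[j] / n
        scale = [a - lr * g for a, g in zip(scale, g_scale)]
        shift = [b - lr * g for b, g in zip(shift, g_shift)]
    return scale, shift


# Usage: unlabeled target samples that the frozen model scores with low confidence.
target = [(0.2, 0.1), (0.3, 0.0), (-0.1, 0.1)]
init_scale, init_shift = [0.0, 0.0], [0.0, 0.0]  # placeholder for the meta-learned init
before = mean_entropy(target, init_scale, init_shift)
scale, shift = adapt(target, init_scale, init_shift)
after = mean_entropy(target, scale, shift)
```

Because only `scale` and `shift` are updated, `W` and `C` keep their source-trained values, mirroring the fixed non-adaptor parameters described above. Entropy minimization on its own can drive all predictions toward a single class, so this toy loss should not be read as a substitute for the loss functions the document refers to.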