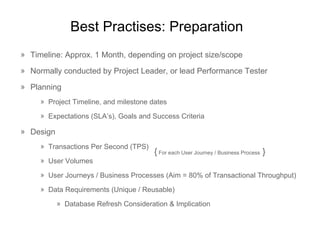

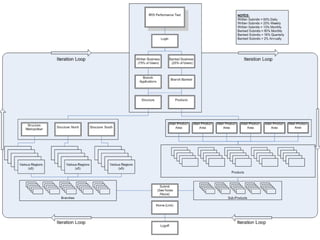

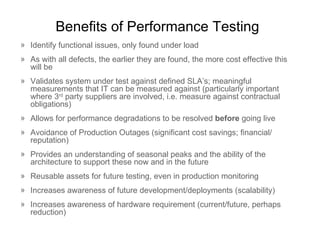

The document outlines best practices for load and performance testing, covering preparation, development, execution, analysis, and results. It recommends spending approximately one month on preparation: planning test scenarios, environments, and resources. Development should take about another month and covers creating load models, scripts, and test scenarios. Execution and analysis take a further month, during which tests are run, issues are identified, results are analyzed, and the cycle is repeated. Finally, results are summarized over 2-4 weeks: findings are reviewed, outstanding issues are identified, and future monitoring is agreed. The document also lists the benefits of performance testing, such as identifying defects early, validating that systems meet their SLAs, avoiding outages, and improving scalability and hardware awareness.
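As an illustration of the "validating systems meet SLAs" step during analysis, the following is a minimal sketch rather than anything taken from the document itself. It assumes the SLA is expressed as a latency percentile threshold (for example, "95% of requests complete within 500 ms"). The function names `percentile` and `meets_sla`, the nearest-rank method, and the sample latencies are all hypothetical choices made for the example.

```python
import math


def percentile(samples, pct):
    """Return the nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    # Nearest-rank method: 1-based rank of the smallest value that is
    # greater than or equal to pct percent of the samples.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def meets_sla(latencies_ms, pct, threshold_ms):
    """Check whether the pct-th percentile latency is within the threshold."""
    return percentile(latencies_ms, pct) <= threshold_ms


# Hypothetical response times (milliseconds) collected from one test run.
latencies_ms = [120, 135, 150, 160, 180, 210, 250, 300, 450, 900]

# Assumed SLA for illustration: 95th percentile at or below 500 ms.
print("p95 latency (ms):", percentile(latencies_ms, 95))
print("SLA met:", meets_sla(latencies_ms, 95, 500))
```

With these made-up samples the single 900 ms outlier dominates the 95th percentile, so the check fails even though most requests are fast. This is the kind of finding the analysis phase would feed back into another test iteration.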