This document describes the aims and scope of the Chapman & Hall/CRC Machine Learning & Pattern Recognition Series. The series publishes reference works, textbooks, and handbooks that reflect the latest advances and applications in machine learning and pattern recognition. Its titles cover machine learning, pattern recognition, computational intelligence, robotics, natural language processing, computer vision, and related topics. Published titles include "Machine Learning: An Algorithmic Perspective" and the second edition of the "Handbook of Natural Language Processing."

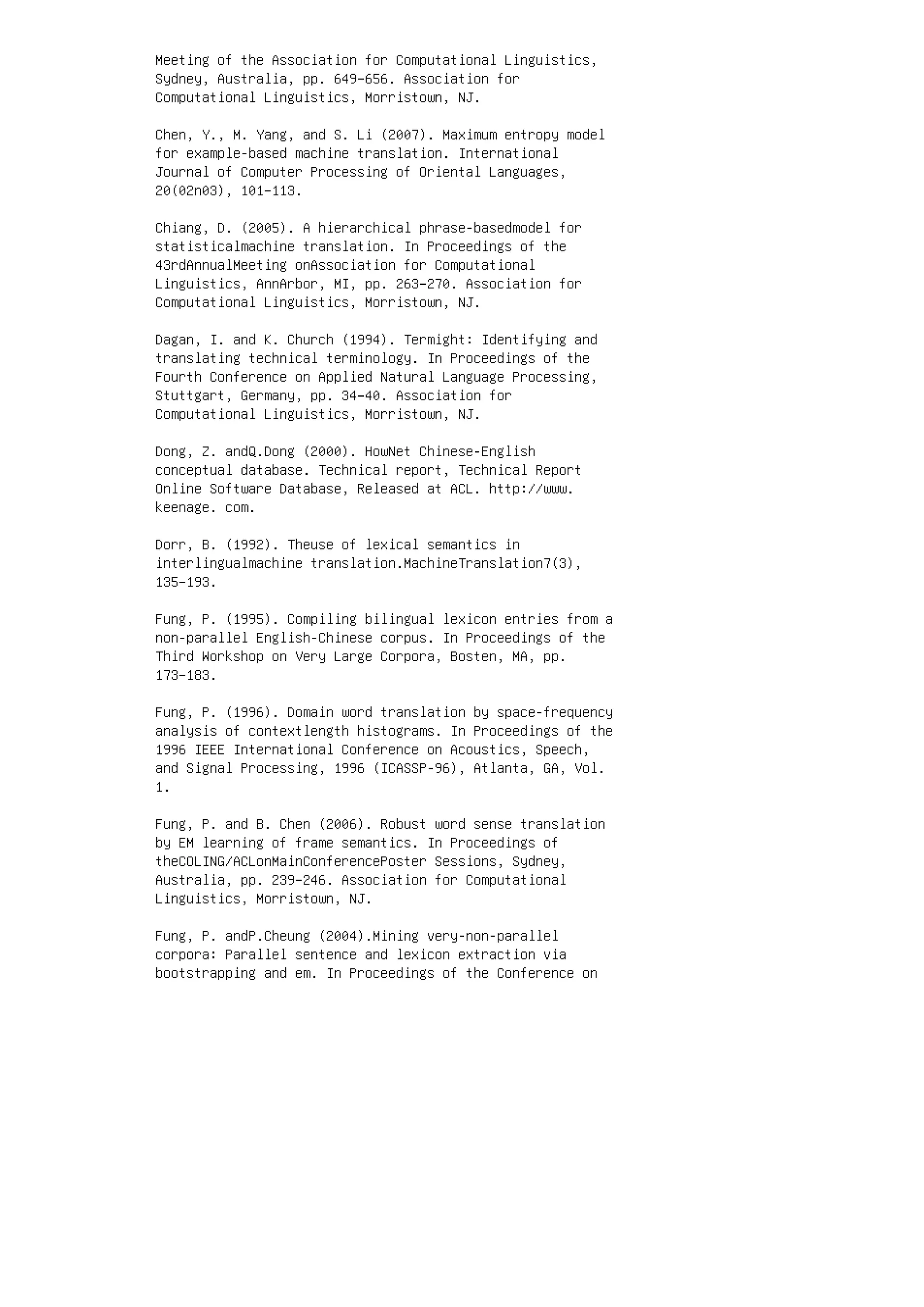

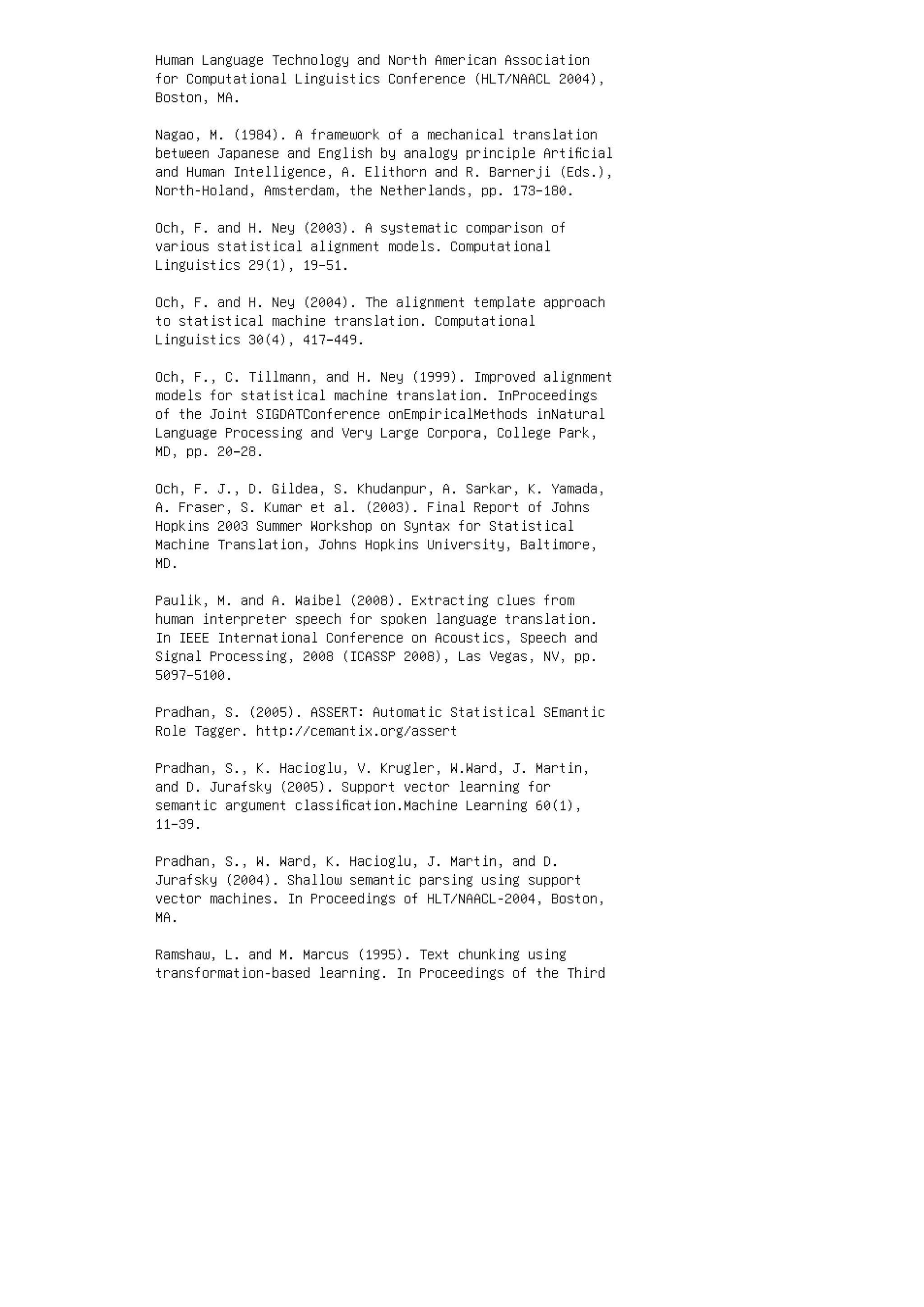

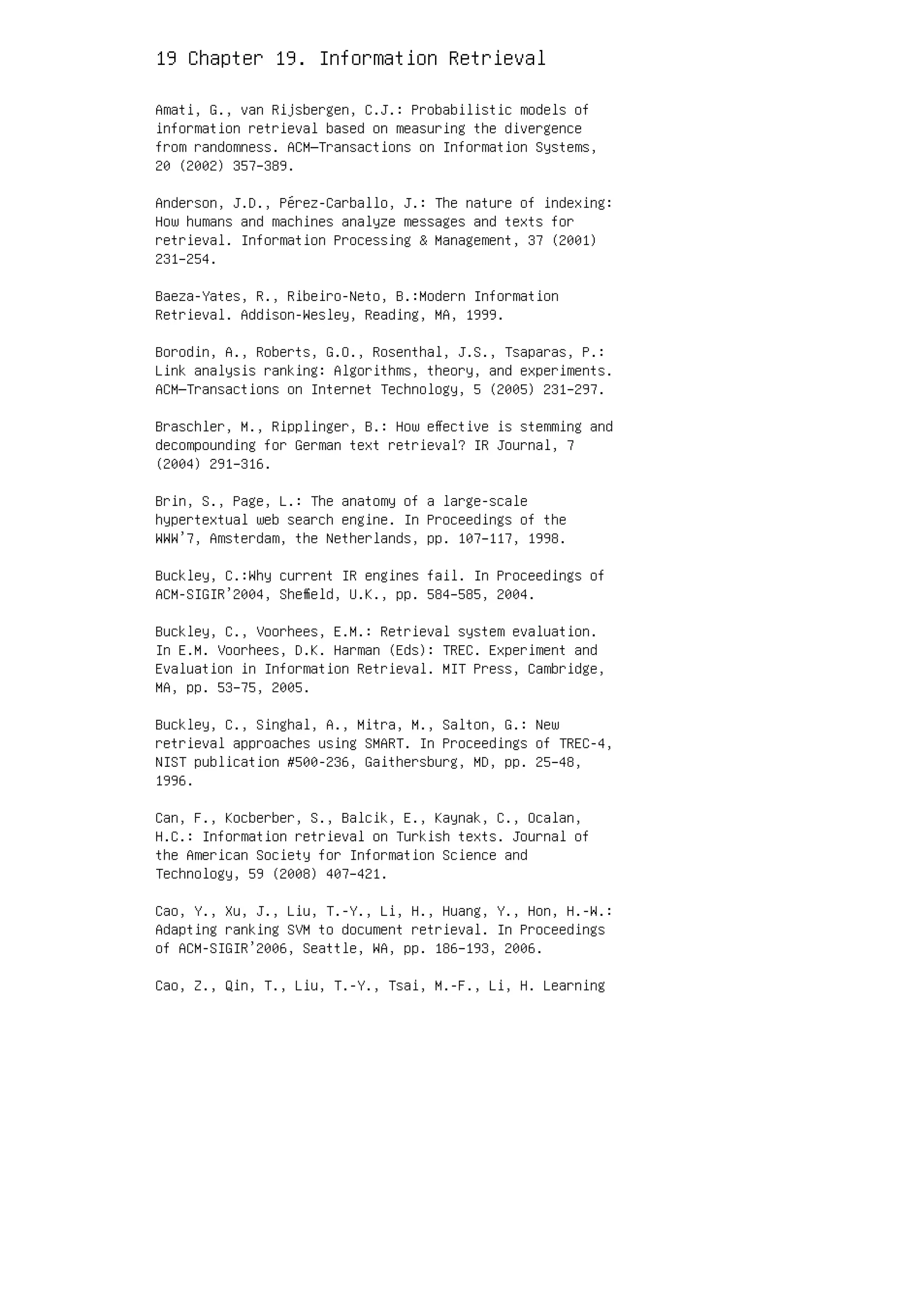

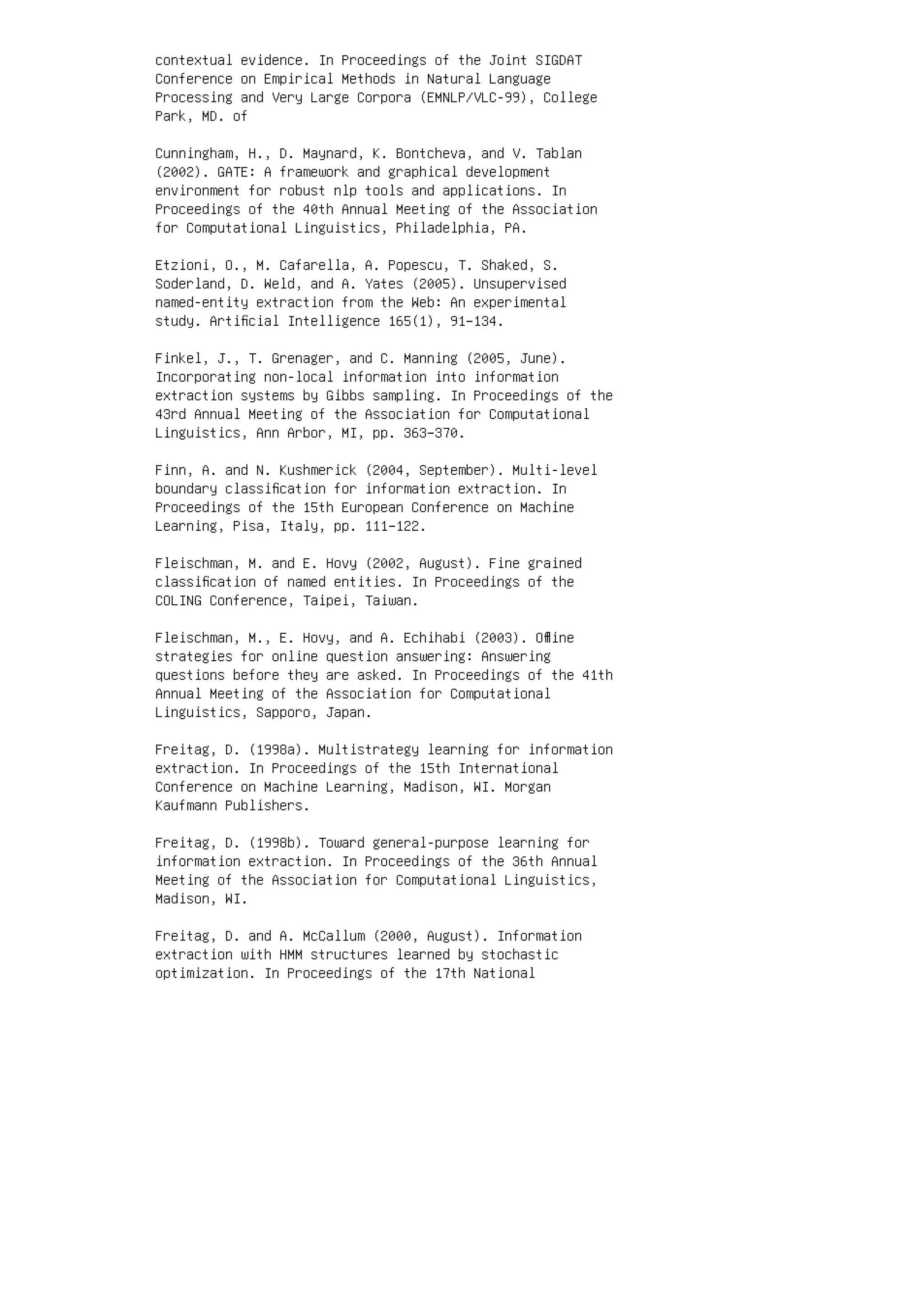

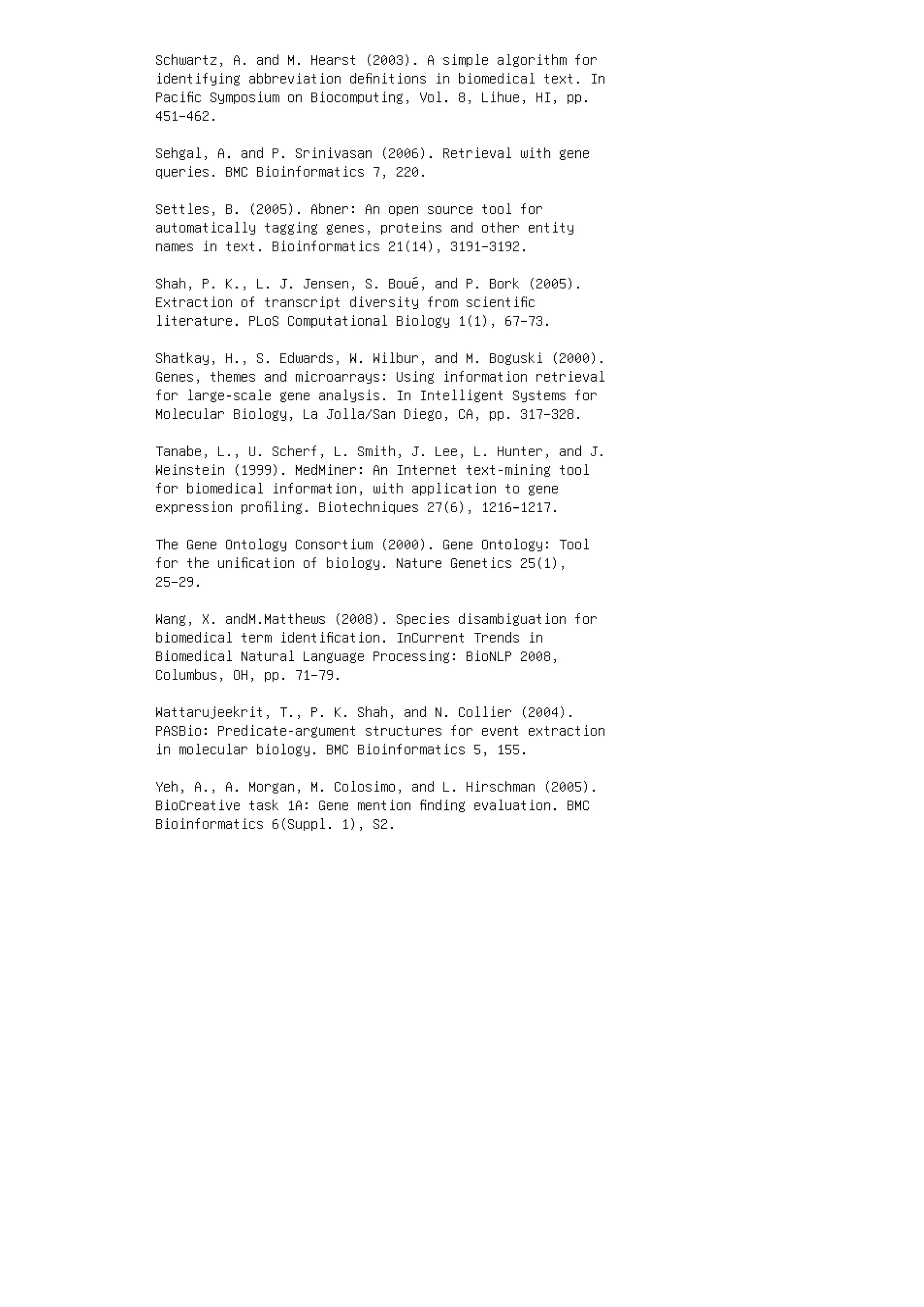

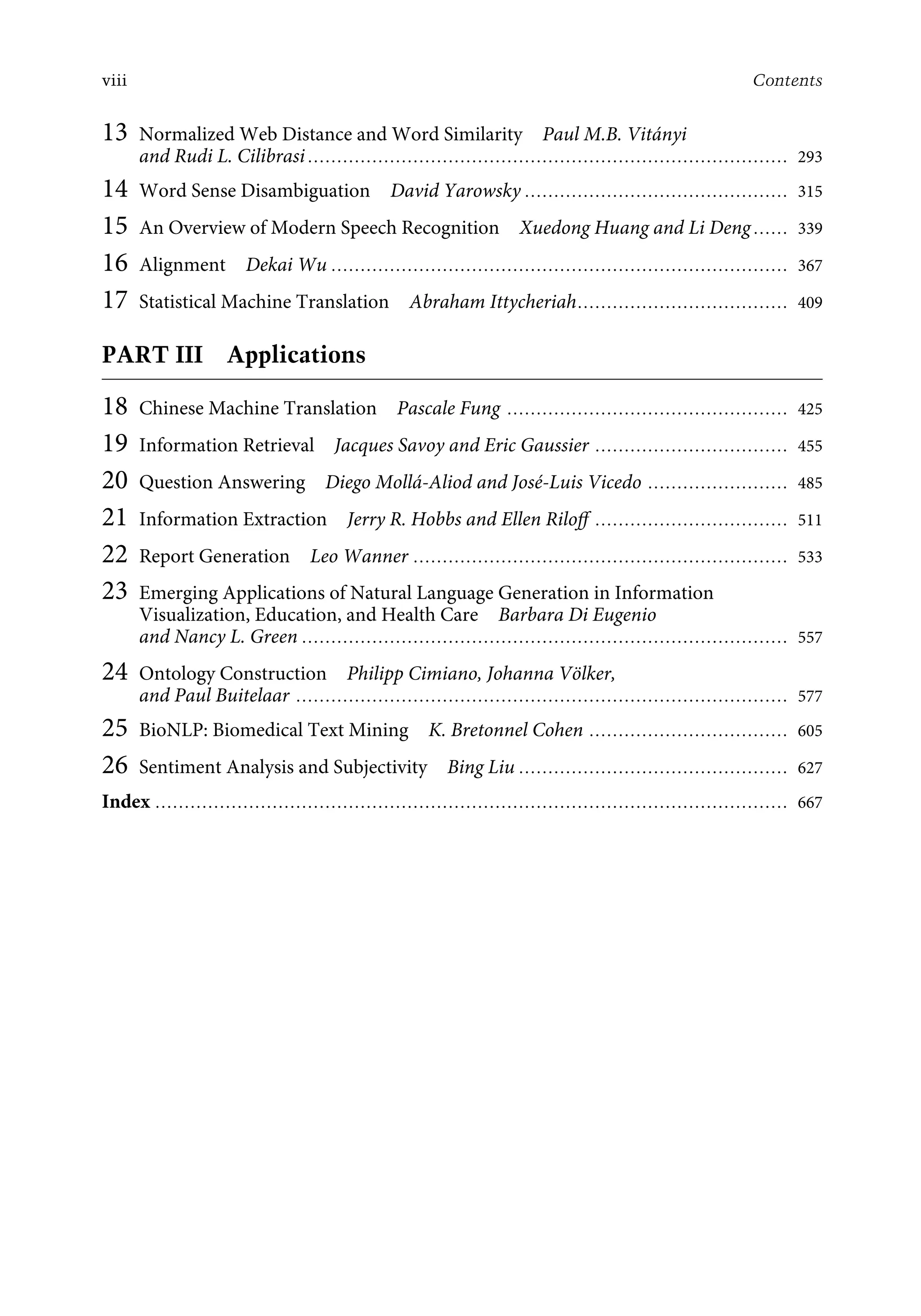

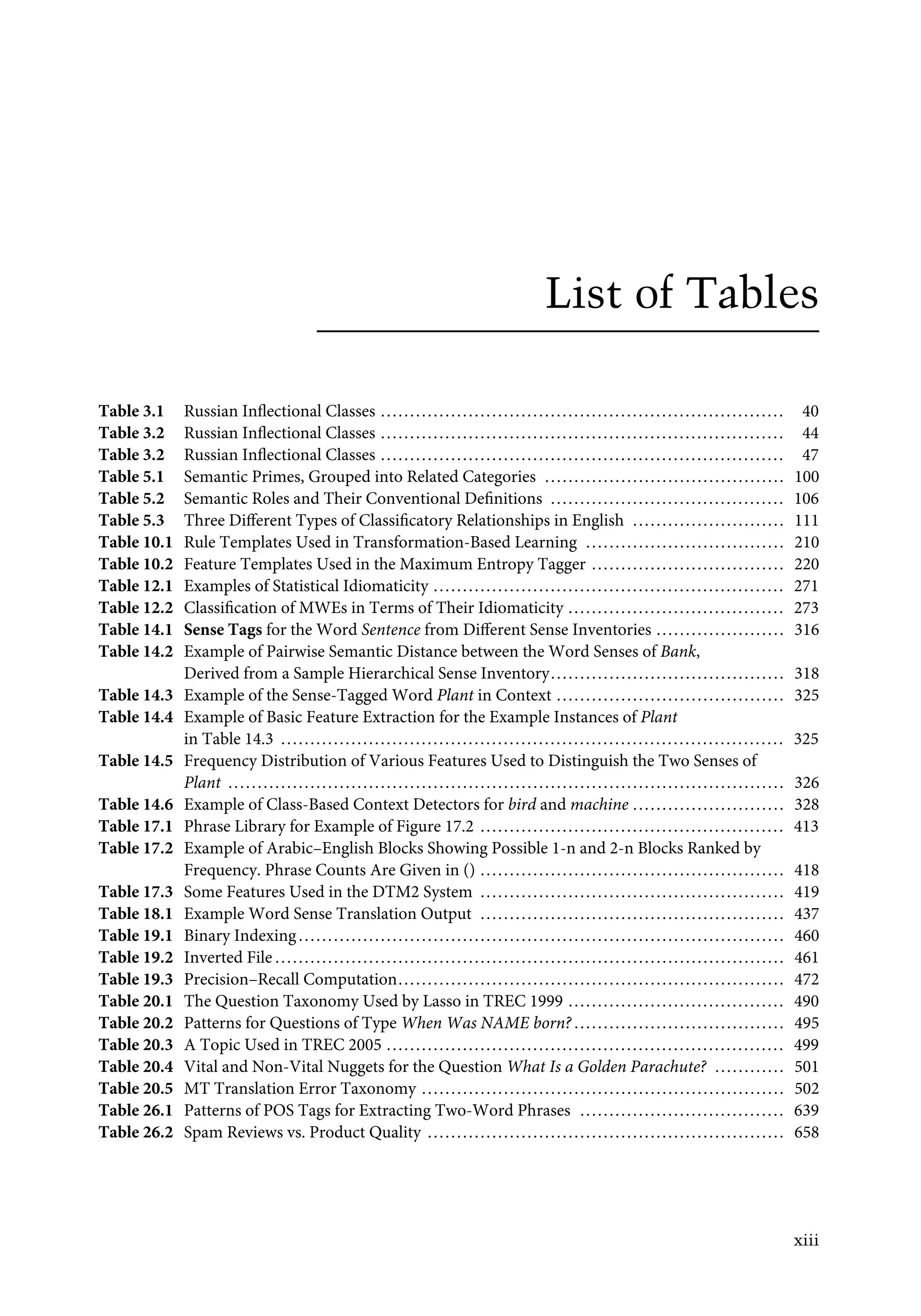

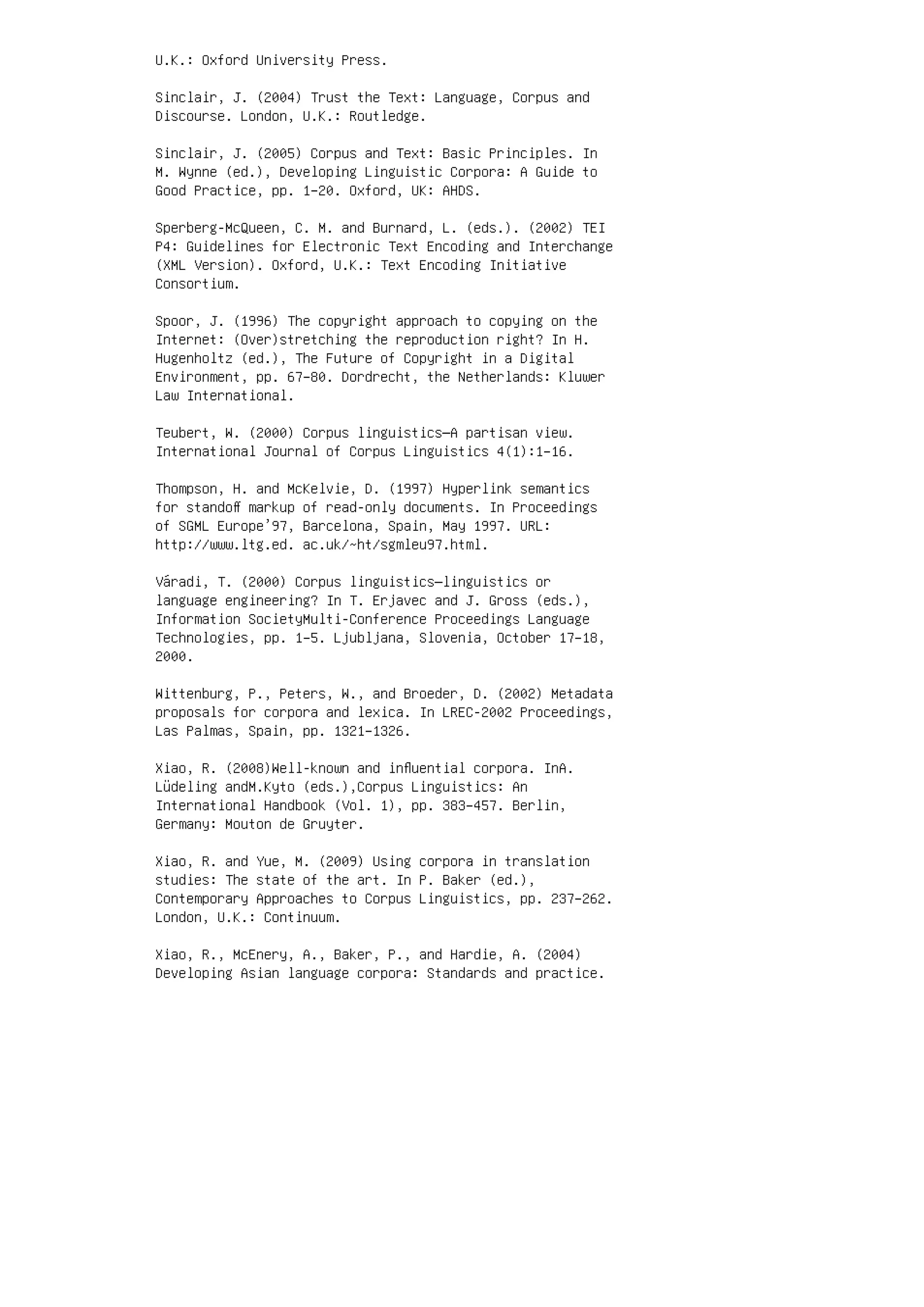

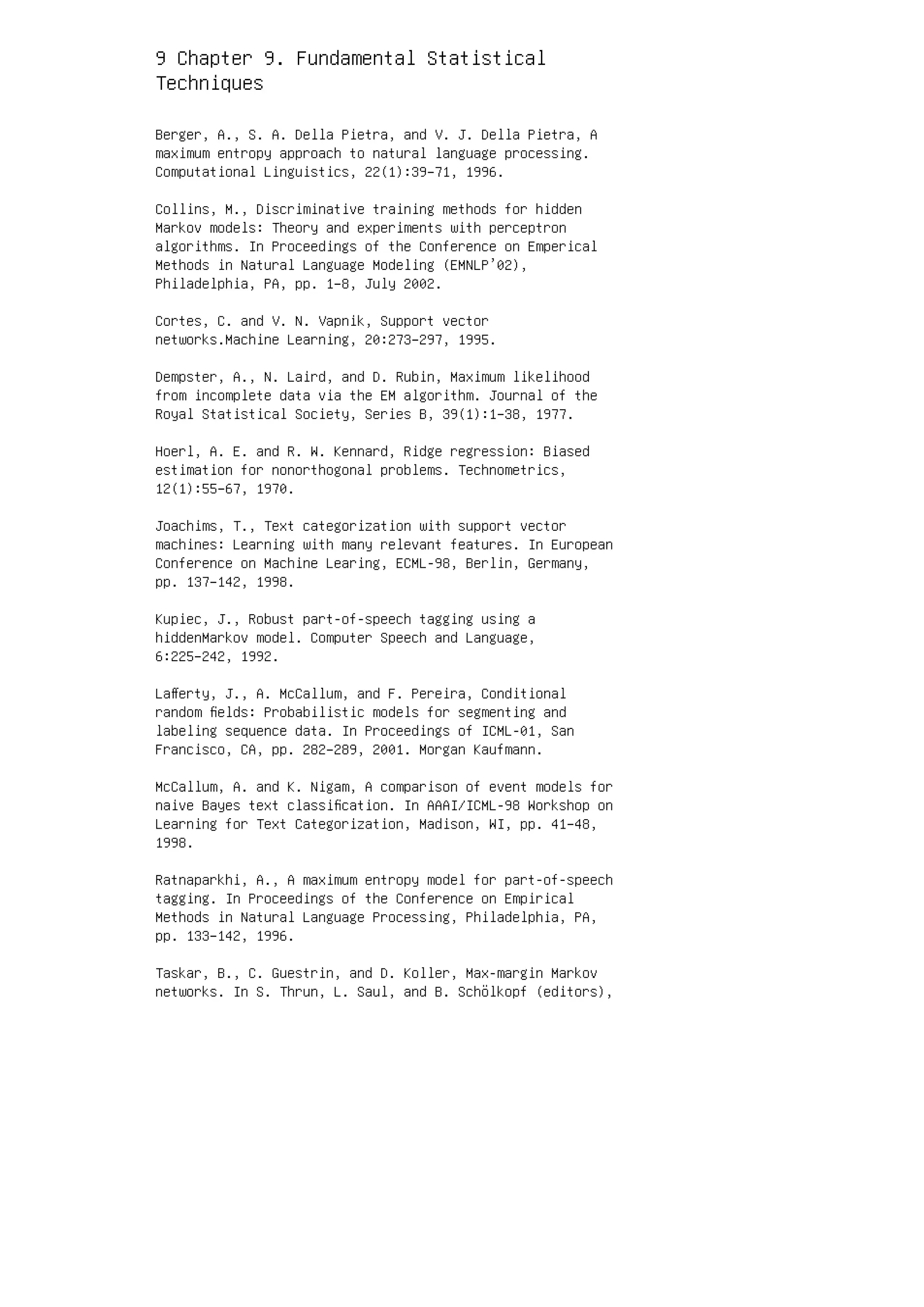

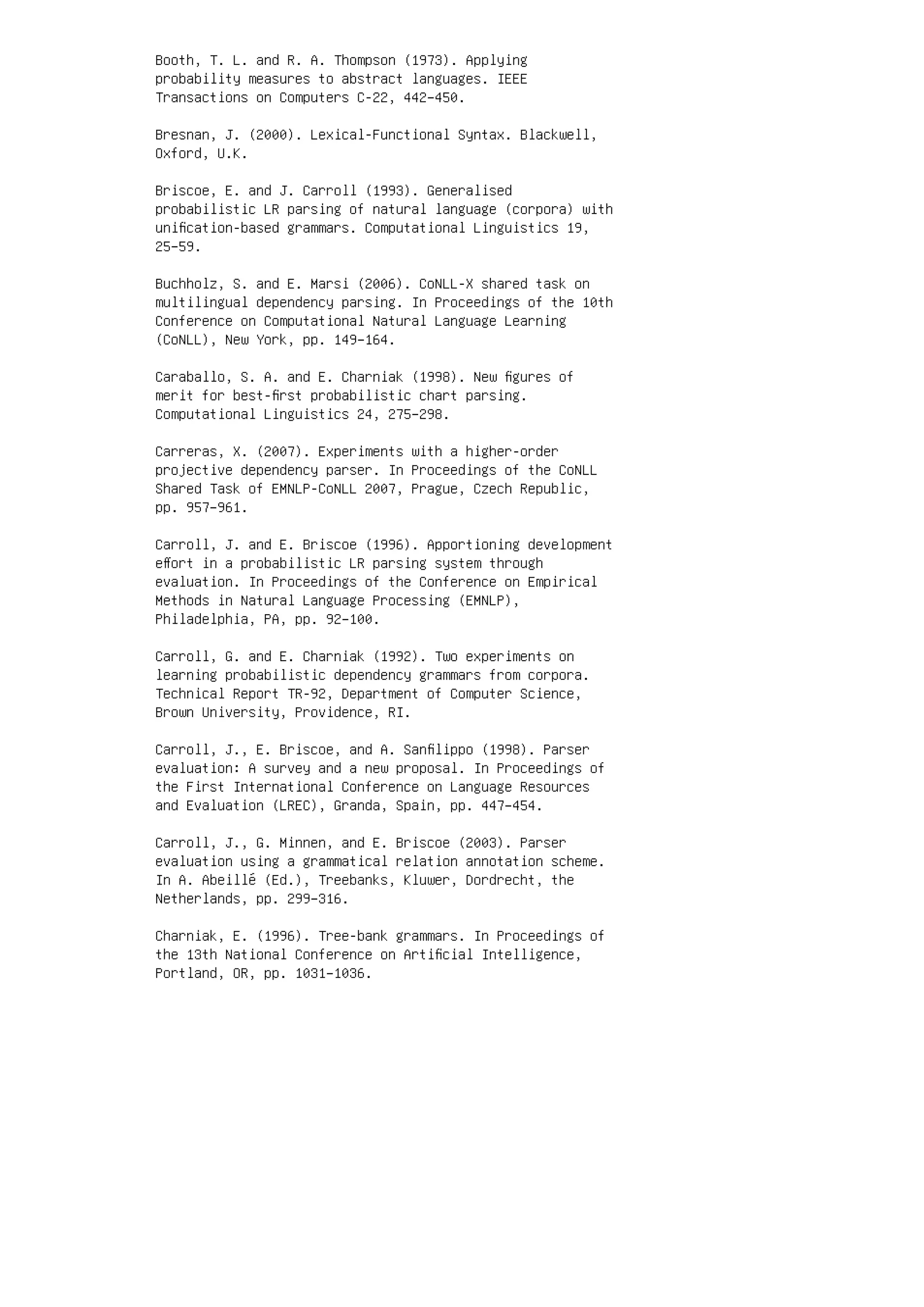

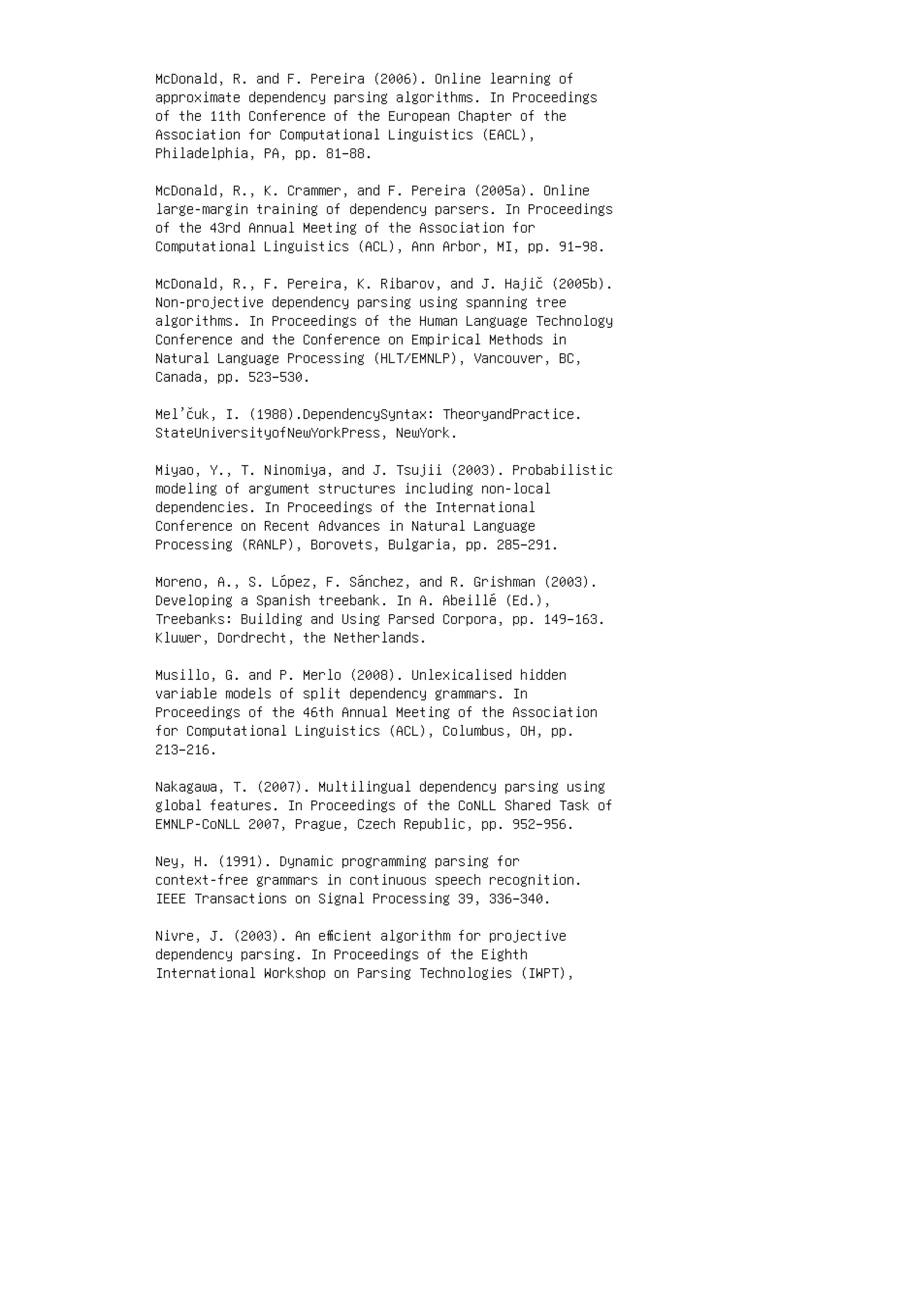

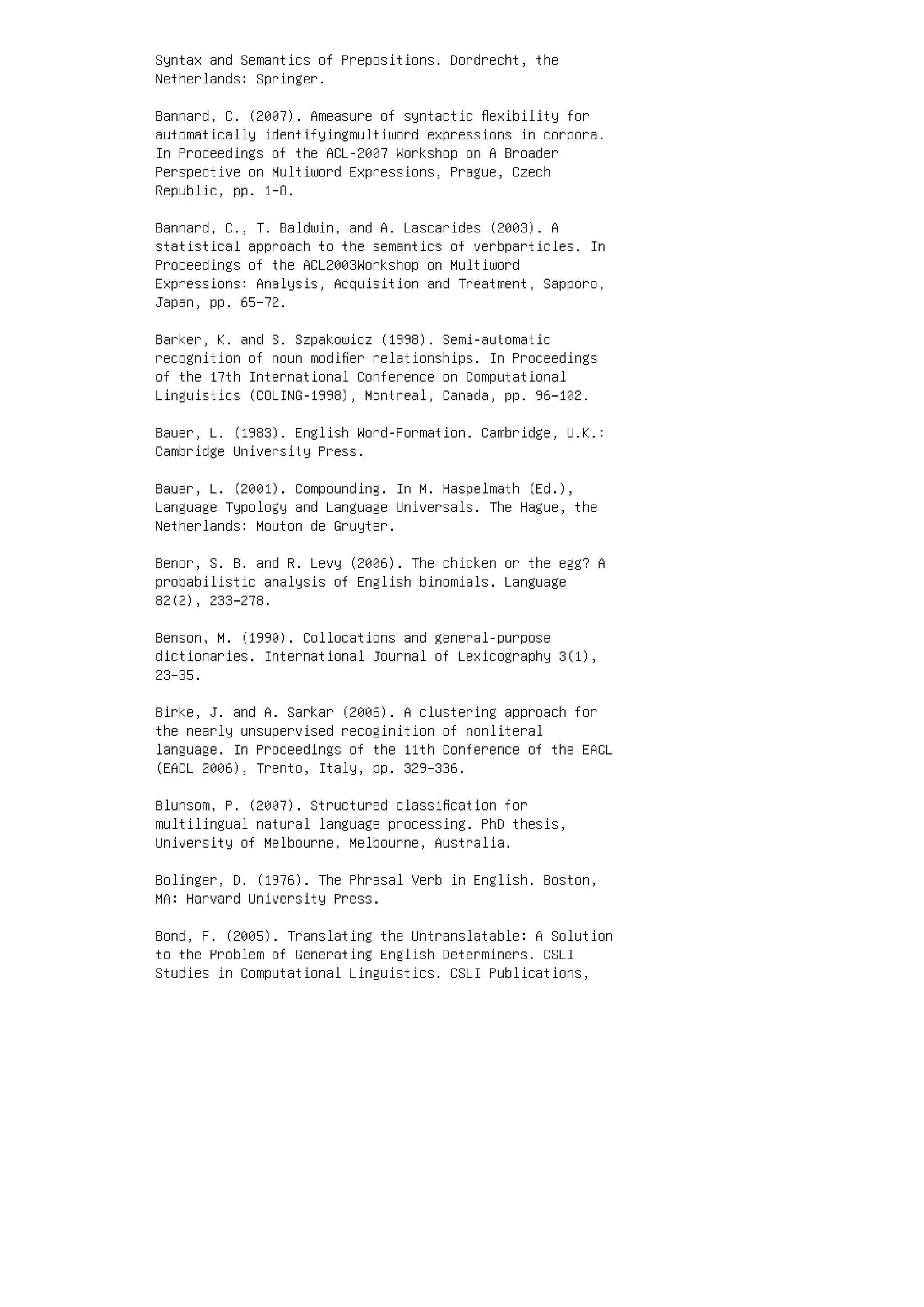

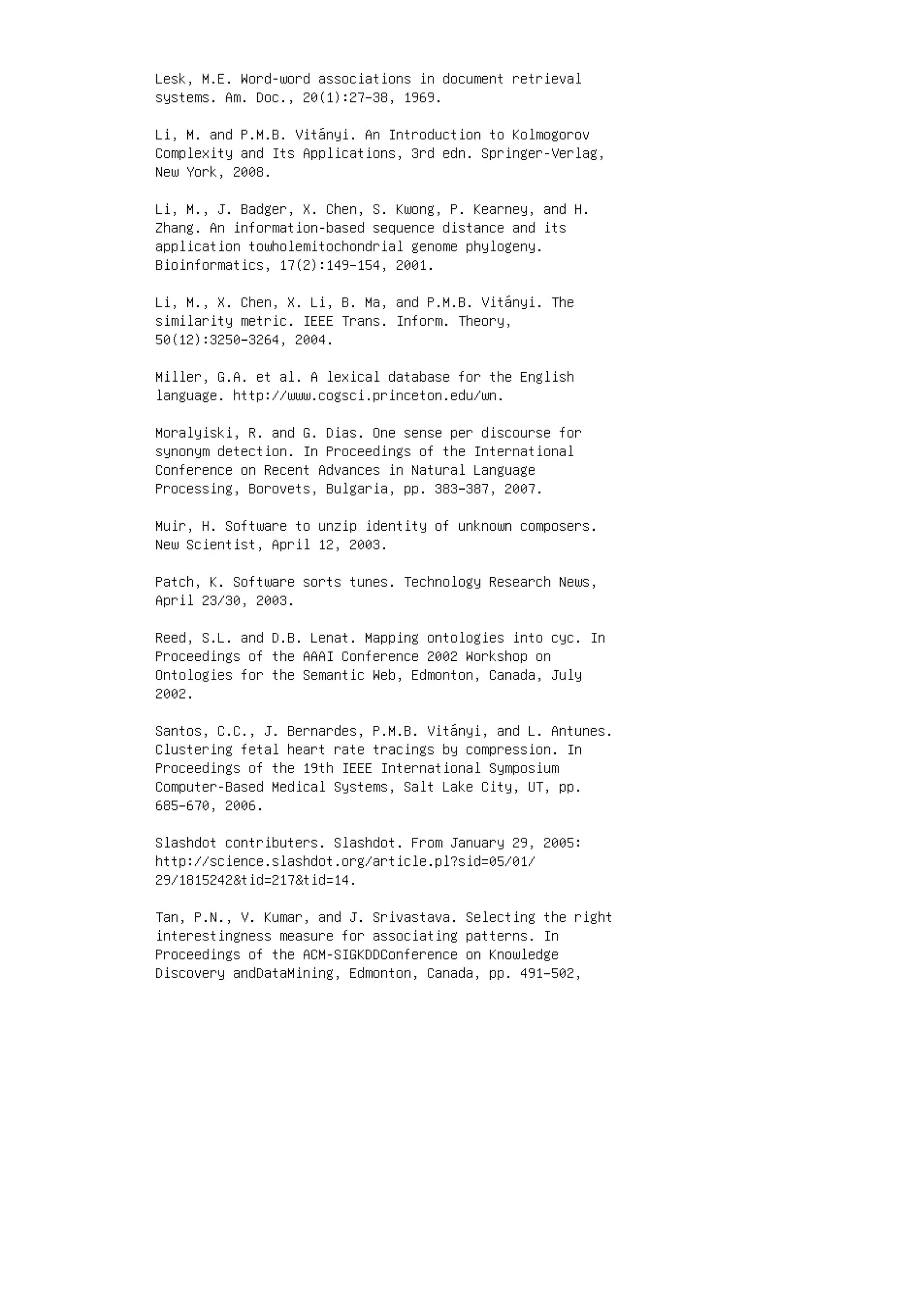

List of Figures

Figure 1.1 The stages of analysis in processing natural language

Figure 3.1 A spelling rule FST for glasses

Figure 3.2 A spelling rule FST for flies

Figure 3.3 An FST with symbol classes

Figure 3.4 Russian nouns classes as an inheritance hierarchy

Figure 4.1 Example grammar

Figure 4.2 Syntax tree of the sentence “the old man a ship”

Figure 4.3 CKY matrix after parsing the sentence “the old man a ship”

Figure 4.4 Final chart after bottom-up parsing of the sentence “the old man a ship.” The dotted edges are inferred but useless.

Figure 4.5 Final chart after top-down parsing of the sentence “the old man a ship.” The dotted edges are inferred but useless.

Figure 4.6 Example LR(0) table for the grammar in Figure 4.1

Figure 5.1 The lexical representation for the English verb build

Figure 5.2 UER diagrammatic modeling for transitive verb wake up

Figure 8.1 A scheme of annotation types (layers)

Figure 8.2 Example of a Penn treebank sentence

Figure 8.3 A simplified constituency-based tree structure for the sentence John wants to eat cakes

Figure 8.4 A simplified dependency-based tree structure for the sentence John wants to eat cakes

Figure 8.5 A sample tree from the PDT for the sentence: Česká opozice se nijak netají tím, že pokud se dostane k moci, nebude se deficitnímu rozpočtu nijak bránit. (lit.: Czech opposition Refl. in-no-way keeps-back the-fact that in-so-far-as [it] will-come into power, [it] will-not Refl. deficit budget in-no-way oppose. English translation: The Czech opposition does not keep back that if they come into power, they will not oppose the deficit budget.)

Figure 8.6 A sample French tree. (English translation: It is understood that the public functions remain open to all the citizens.)

Figure 8.7 Example from the Tiger corpus: complex syntactic and semantic dependency annotation. (English translation: It develops and prints packaging materials and labels.)

Figure 9.1 Effect of regularization

Figure 9.2 Margin and linear separating hyperplane

Figure 9.3 Multi-class linear classifier decision boundary

Figure 9.4 Graphical representation of generative model

Figure 9.5 Graphical representation of discriminative model

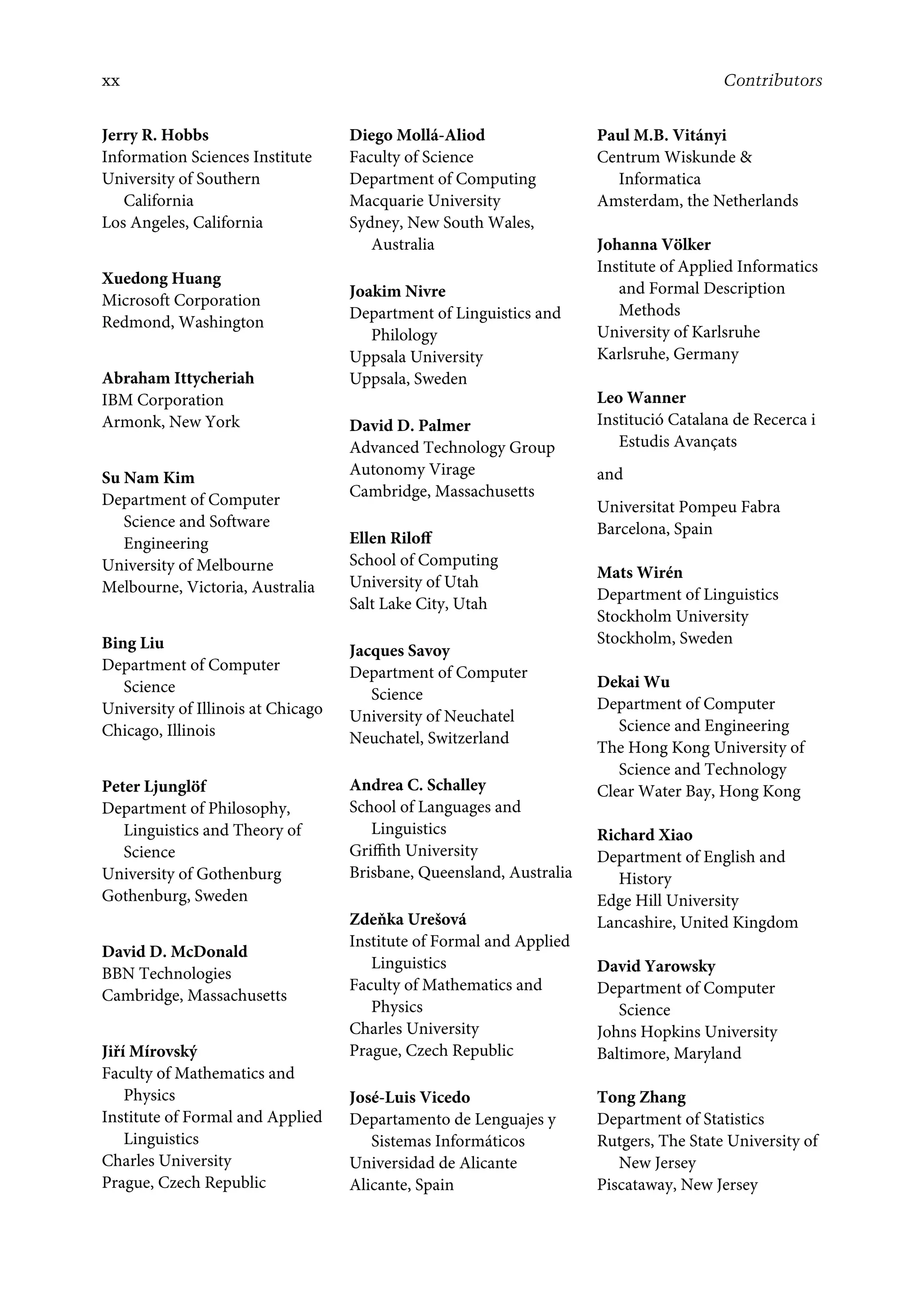
