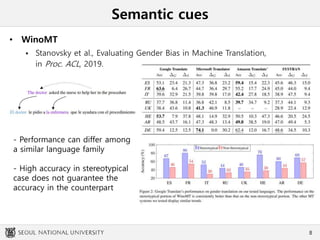

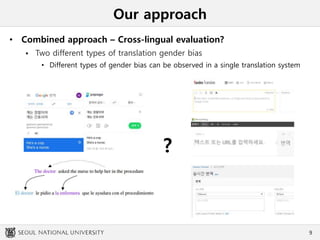

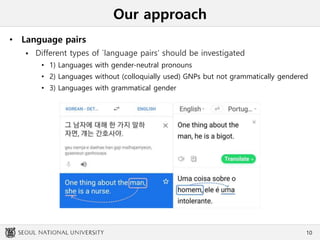

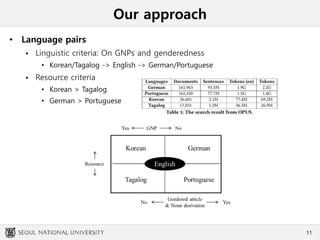

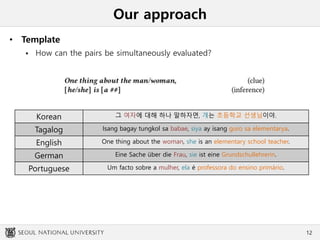

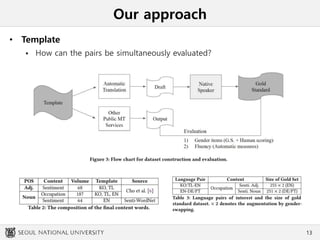

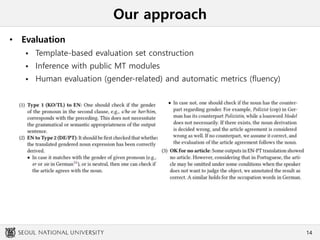

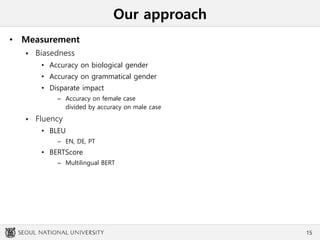

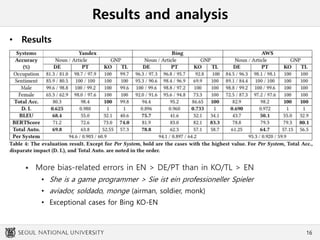

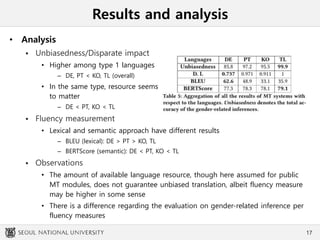

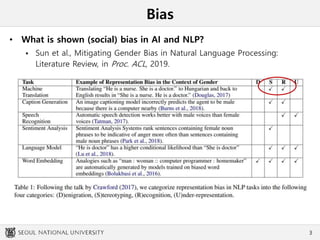

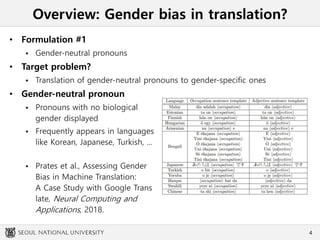

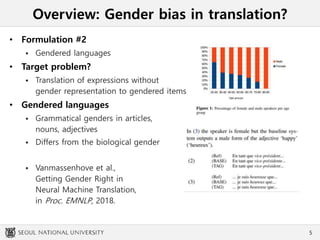

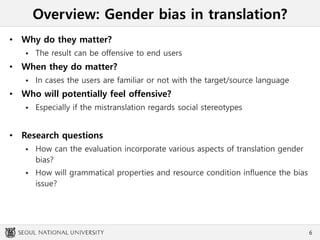

This document summarizes an academic presentation on measuring and addressing gender bias in machine translation. It discusses two main types of translation gender bias: one arising from gender-neutral pronouns and one arising from grammatical gender. The authors propose a combined cross-lingual evaluation approach that translates templates between language pairs differing in their use of gender-neutral pronouns and grammatical gender. They measure translation quality and gender bias across the language pairs using automatic metrics and human evaluation. The results suggest that translation gender bias is more common when translating into languages with grammatical gender, and that increasing the amount of training data may not fully address the social stereotypes that can amplify bias.

![Template-based attacks](https://image.slidesharecdn.com/2103facct-210526051052/85/2103-ACM-FAccT-8-320.jpg)

Template-based attacks

• 걔(s/he)는 [##]이야! ("S/he is [##]!")

Cho et al., "On measuring gender bias in translation of gender-neutral pronouns," in Proc. GeBNLP, ACL Workshop, 2019.

• Why Korean?

Displays various sentence styles

Translation service popular among the users
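As a rough illustration of the template idea on this slide, the sketch below fills the `[##]` slot of the Korean template with a few words and then tallies which gendered pronoun appears in English translations of the results. It is a minimal sketch under stated assumptions, not the authors' evaluation code: the filler words, the function names `fill_template`, `pronoun_label`, and `tally`, and the sample translations are all hypothetical, and no translation service is called. The fillers were chosen to end in a consonant so that the copula `이야` in the template stays grammatical.

```python
import re
from collections import Counter

# Template from the slide; "[##]" marks the slot to fill.
TEMPLATE = "걔는 [##]이야!"

# Hypothetical filler words (not taken from the talk), with English glosses.
FILLERS = {"선생님": "teacher", "군인": "soldier", "학생": "student"}

FEMALE = {"she", "her", "hers"}
MALE = {"he", "him", "his"}


def fill_template(template: str, word: str, slot: str = "[##]") -> str:
    """Substitute one filler word into the template slot."""
    return template.replace(slot, word)


def pronoun_label(translation: str) -> str:
    """Label an English translation by the gendered pronouns it contains."""
    tokens = set(re.findall(r"[a-z]+", translation.lower()))
    has_female = bool(tokens & FEMALE)
    has_male = bool(tokens & MALE)
    if has_female and not has_male:
        return "female"
    if has_male and not has_female:
        return "male"
    return "other"  # neutral, mixed, or no pronoun at all


def tally(translations: list[str]) -> Counter:
    """Count how often each label occurs across a set of translations."""
    return Counter(pronoun_label(t) for t in translations)


if __name__ == "__main__":
    sources = [fill_template(TEMPLATE, w) for w in FILLERS]
    print(sources)
    # Made-up outputs standing in for a real system's translations.
    sample_outputs = ["She's a teacher!", "He is a soldier!", "They are a student!"]
    print(tally(sample_outputs))
```

In practice the `sample_outputs` list would be replaced by the translations a system returns for each filled template, and the share of each label could then be compared across filler words or target languages.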