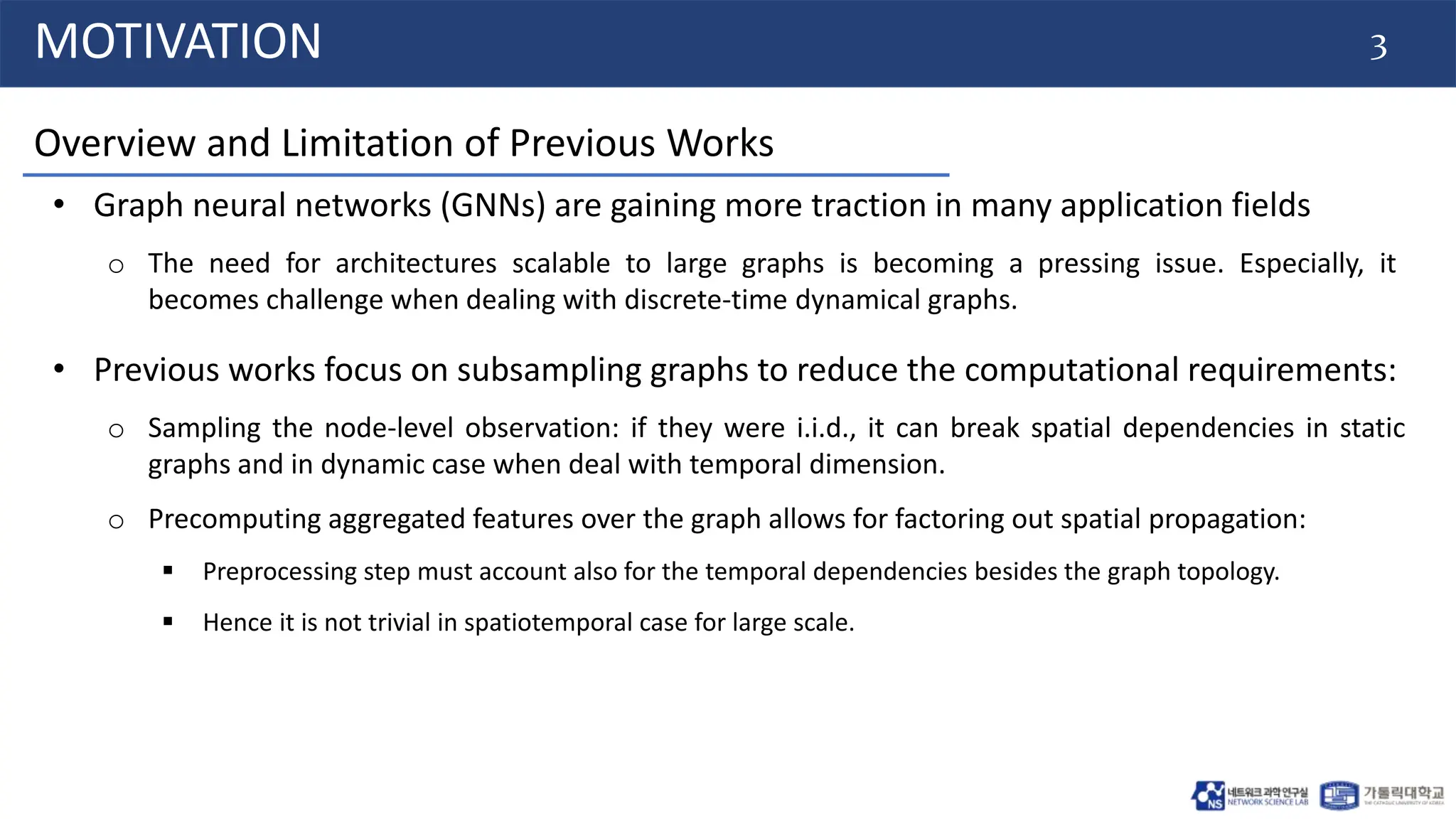

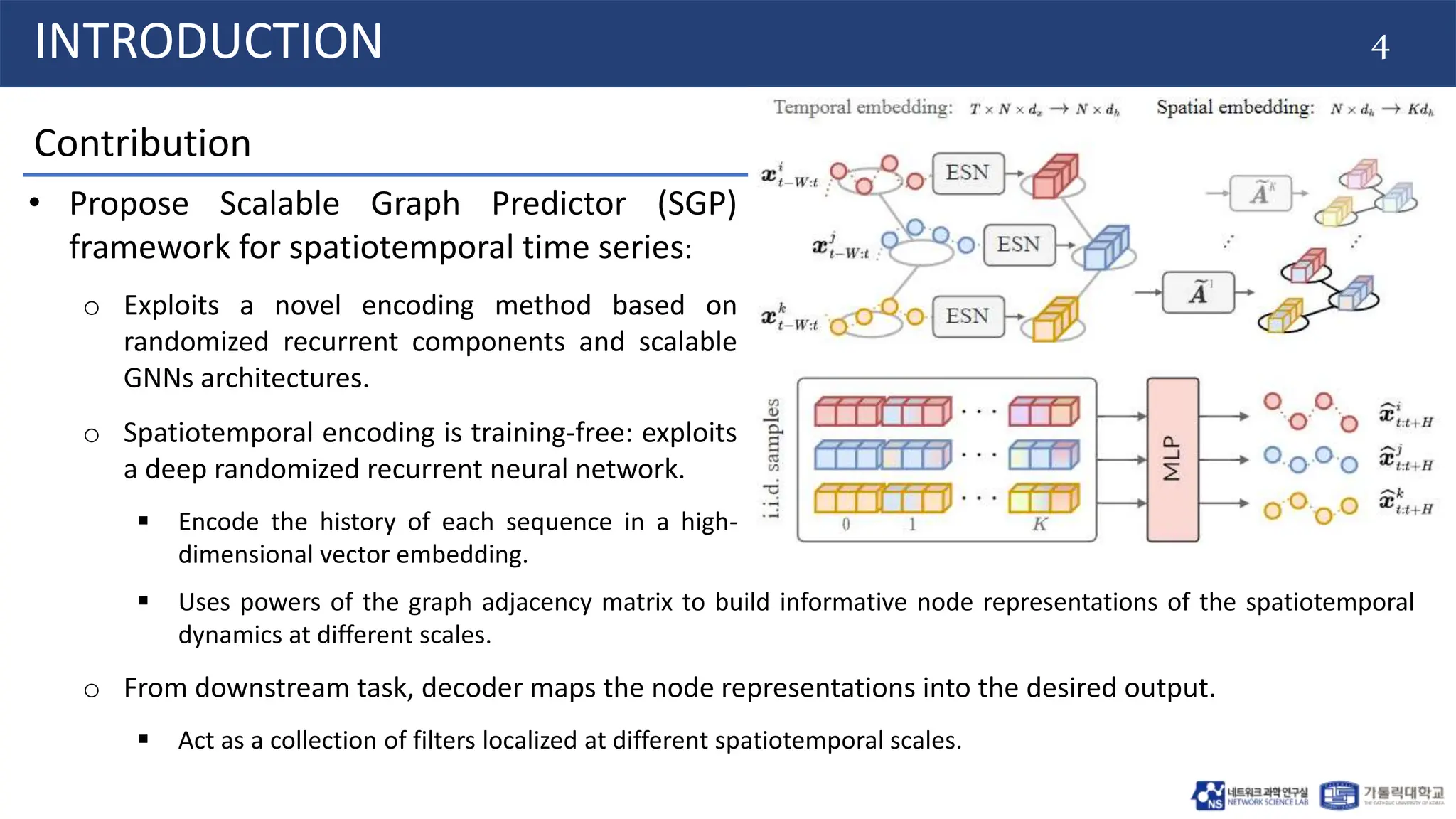

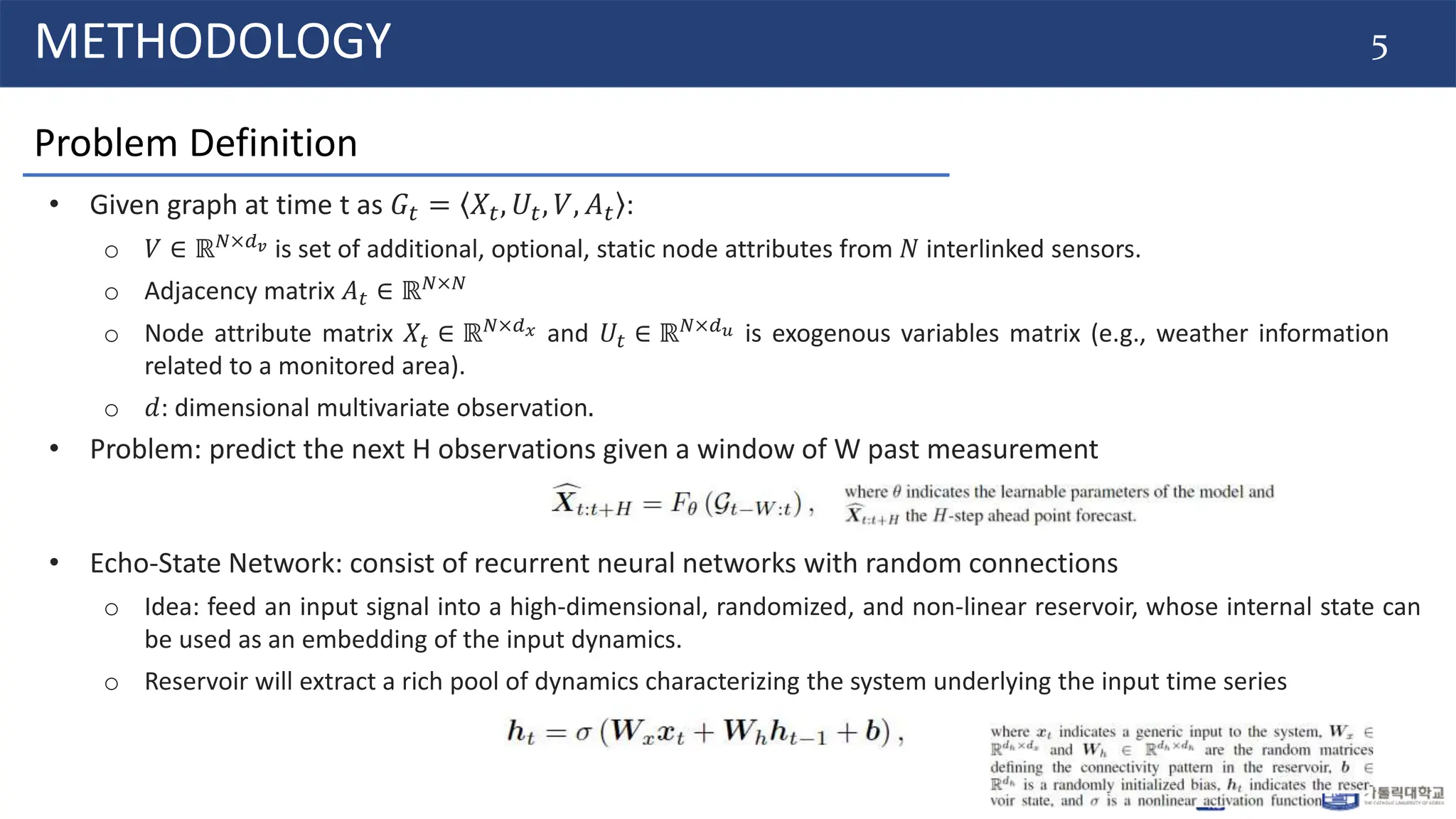

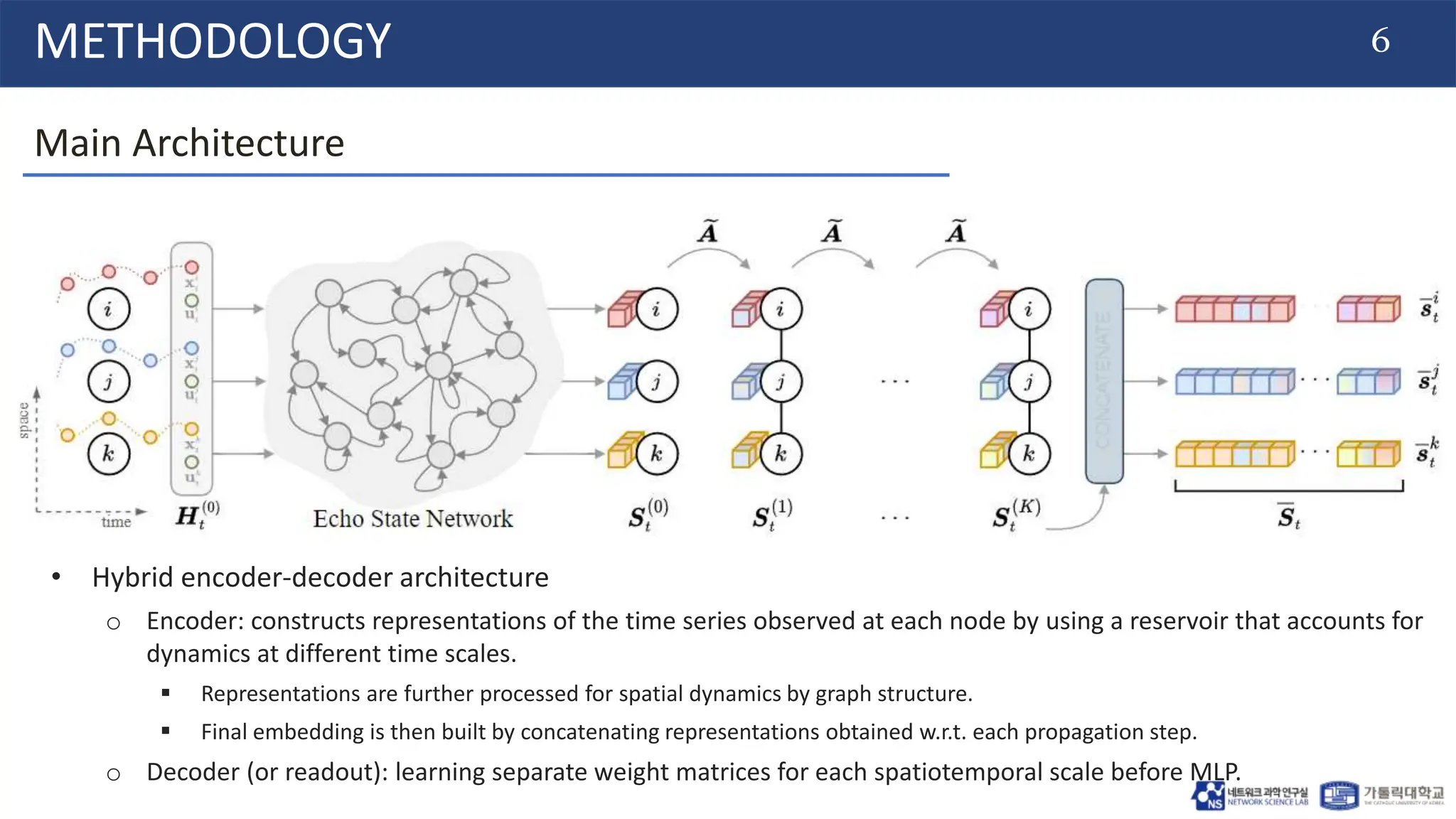

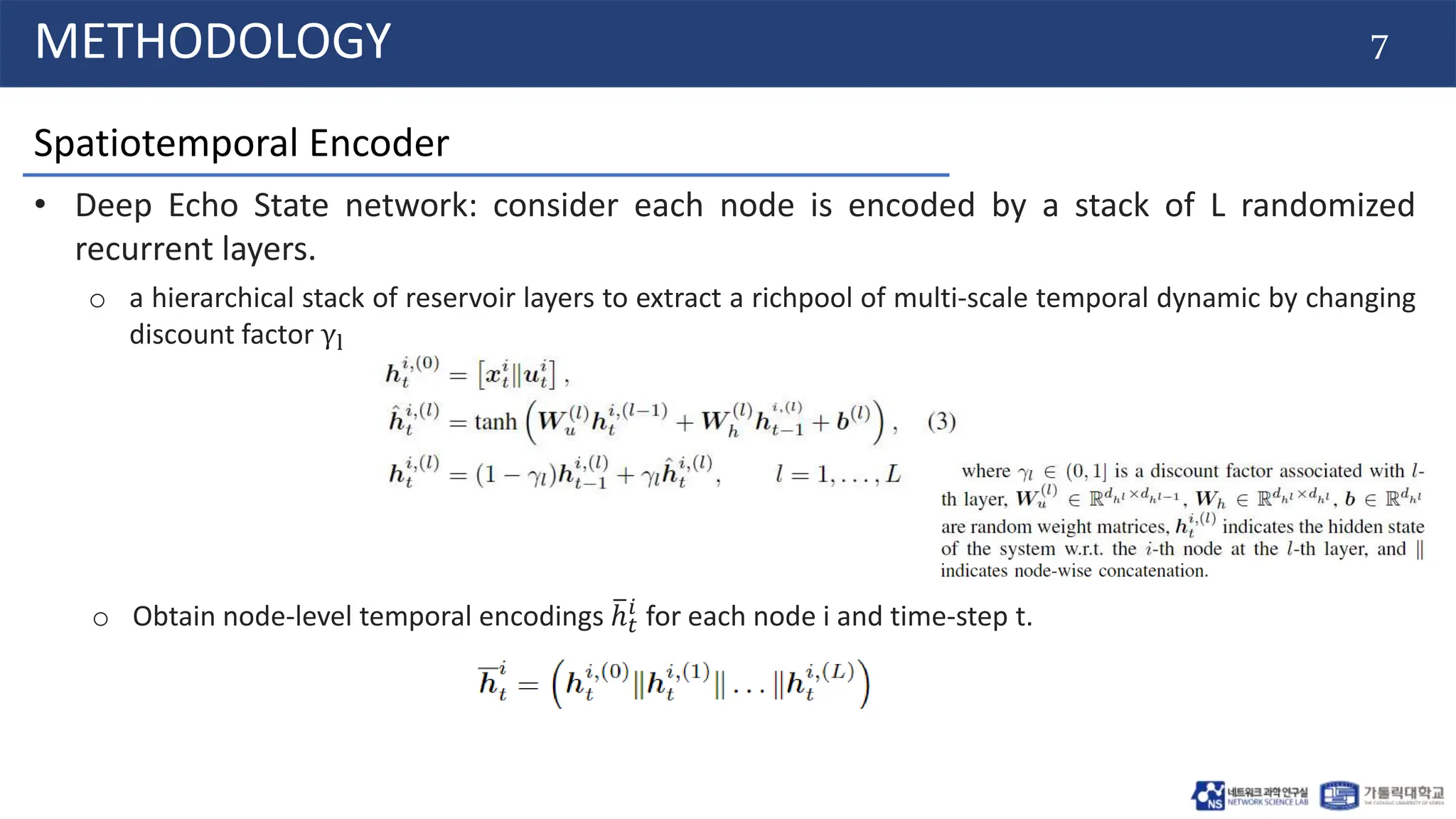

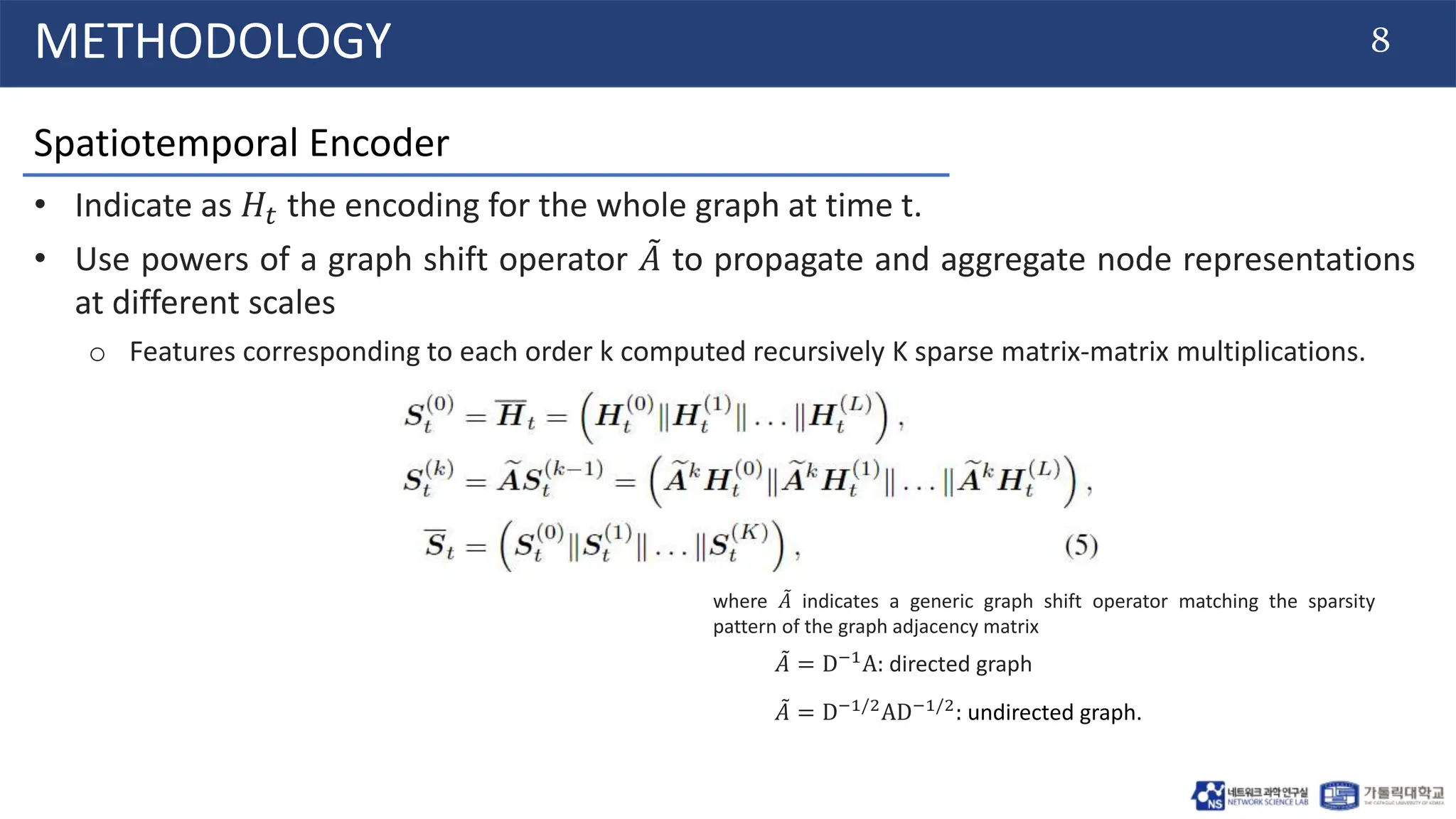

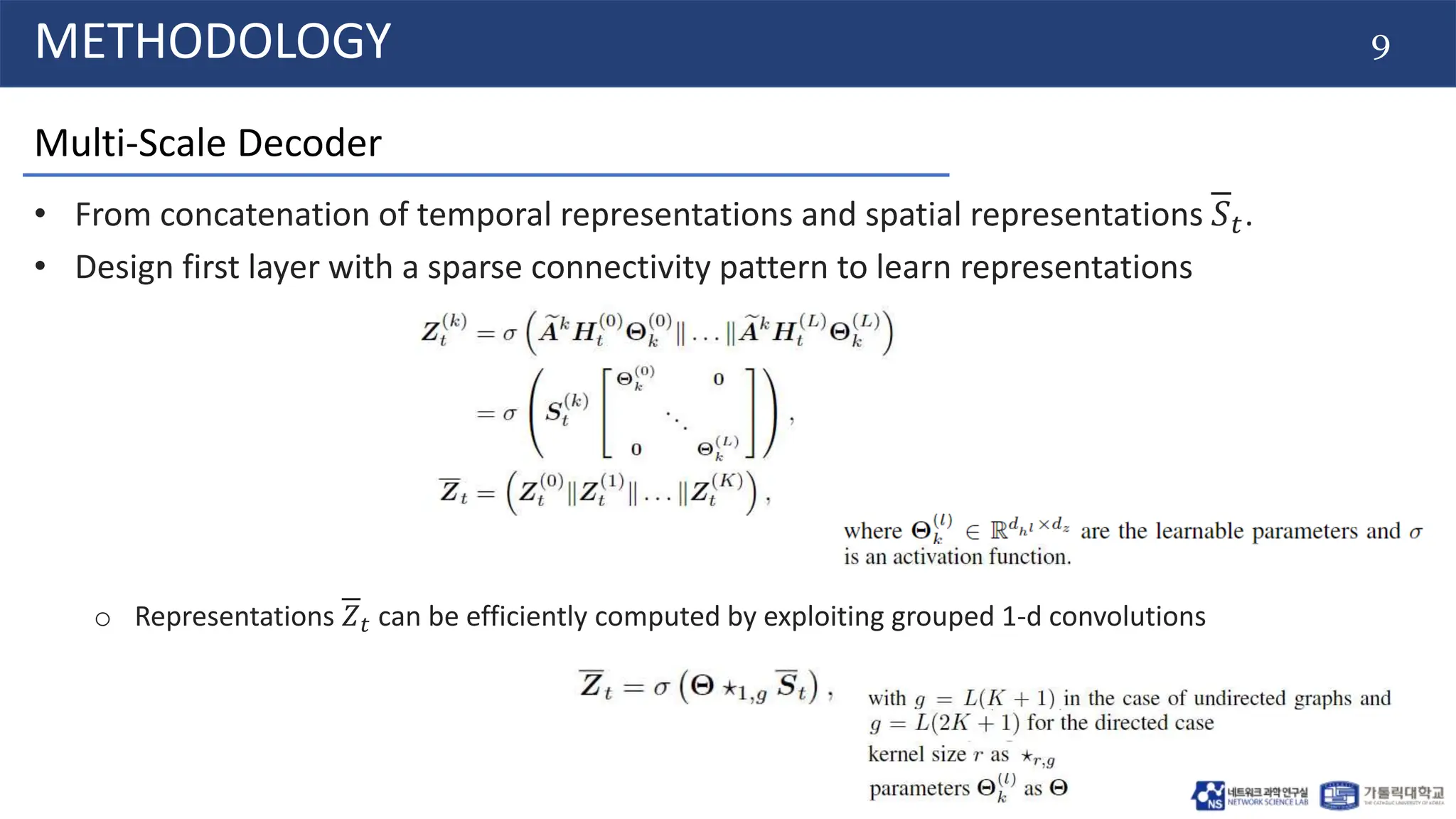

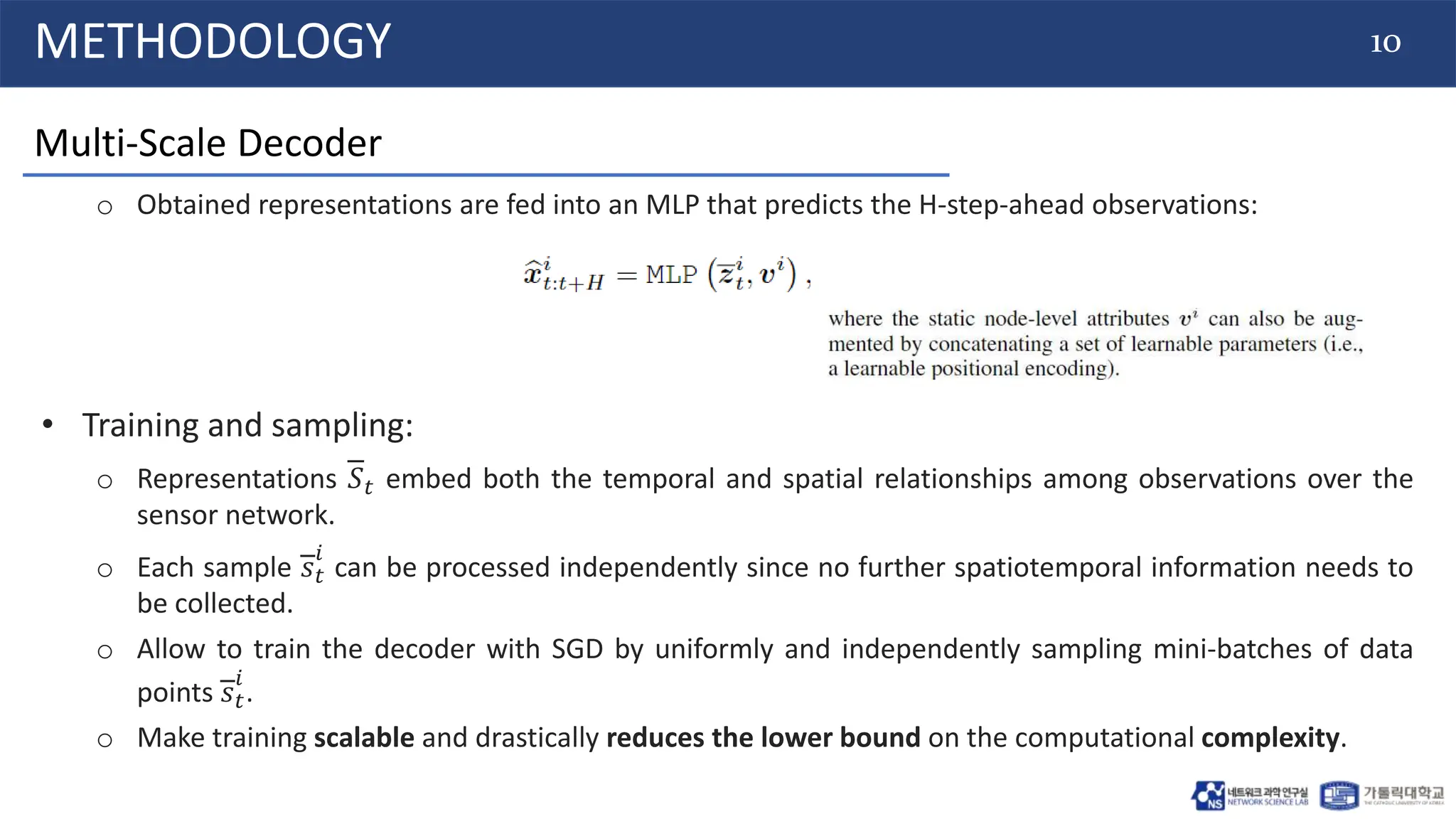

The document introduces the Scalable Graph Predictor (SGP), a framework for spatiotemporal time series forecasting with graph neural networks (GNNs). It addresses two challenges that arise when predicting future observations from historical data: capturing spatial dependencies among sensors and keeping the computation efficient. The proposed architecture reduces memory usage, enables real-time prediction, and is intended to scale to larger sensor networks. It is evaluated on several datasets.
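The slides contain no code. The following is a minimal sketch of why precomputed representations reduce memory. It assumes the general recipe behind SGP: a fixed, untrained random recurrent encoder (an echo state network) summarizes each node's history, and a K-hop propagation over a row-normalized adjacency matrix mixes in neighbor information. Both steps run once before training, so a trainable readout can then be fitted on mini-batches of individual node rows rather than on whole graph sequences. The function names (`reservoir_states`, `propagate`) and all hyperparameters are hypothetical. This simplified version keeps only the final reservoir state and is not the authors' implementation.

```python
import numpy as np

def reservoir_states(x, n_units=32, spectral_radius=0.9, leak=0.9, seed=0):
    """Encode every node's history with a fixed random recurrent network
    (an echo state network); nothing here is trained.
    x: array of shape (T, N, F) -> returns final states of shape (N, n_units)."""
    rng = np.random.default_rng(seed)
    _, n_nodes, n_feat = x.shape
    w_in = rng.uniform(-1.0, 1.0, size=(n_feat, n_units))
    w_rec = rng.uniform(-1.0, 1.0, size=(n_units, n_units))
    # Rescale recurrent weights so their spectral radius equals `spectral_radius`.
    w_rec *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w_rec)))
    h = np.zeros((n_nodes, n_units))
    for x_t in x:  # iterate over time steps; weights are shared across nodes
        h = (1.0 - leak) * h + leak * np.tanh(x_t @ w_in + h @ w_rec)
    return h

def propagate(h, adj, k_hops=2):
    """Return [h, A h, ..., A^k h] concatenated along the feature axis,
    where A is the row-normalized adjacency (rows with no neighbors stay zero)."""
    deg = adj.sum(axis=1, keepdims=True)
    a_norm = np.divide(adj, deg, out=np.zeros_like(adj, dtype=float), where=deg > 0)
    feats = [h]
    for _ in range(k_hops):
        feats.append(a_norm @ feats[-1])
    return np.concatenate(feats, axis=1)

# Toy example (hypothetical data): 24 time steps, 5 nodes, 1 channel, random graph.
rng = np.random.default_rng(1)
x = rng.normal(size=(24, 5, 1))
adj = (rng.uniform(size=(5, 5)) > 0.5).astype(float)

z = propagate(reservoir_states(x), adj, k_hops=2)  # computed once, before training
print(z.shape)  # (5, 96)

# Training can then draw mini-batches of node rows independently of the graph.
batch = z[rng.choice(5, size=3, replace=False)]
```

Because the encoder weights are fixed, `z` can be stored and sampled row by row, so only the readout model has to be trained. Replacing the random encoder with a trained RNN would remove this property, since gradients would then have to flow through the full sequence and graph.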

![11

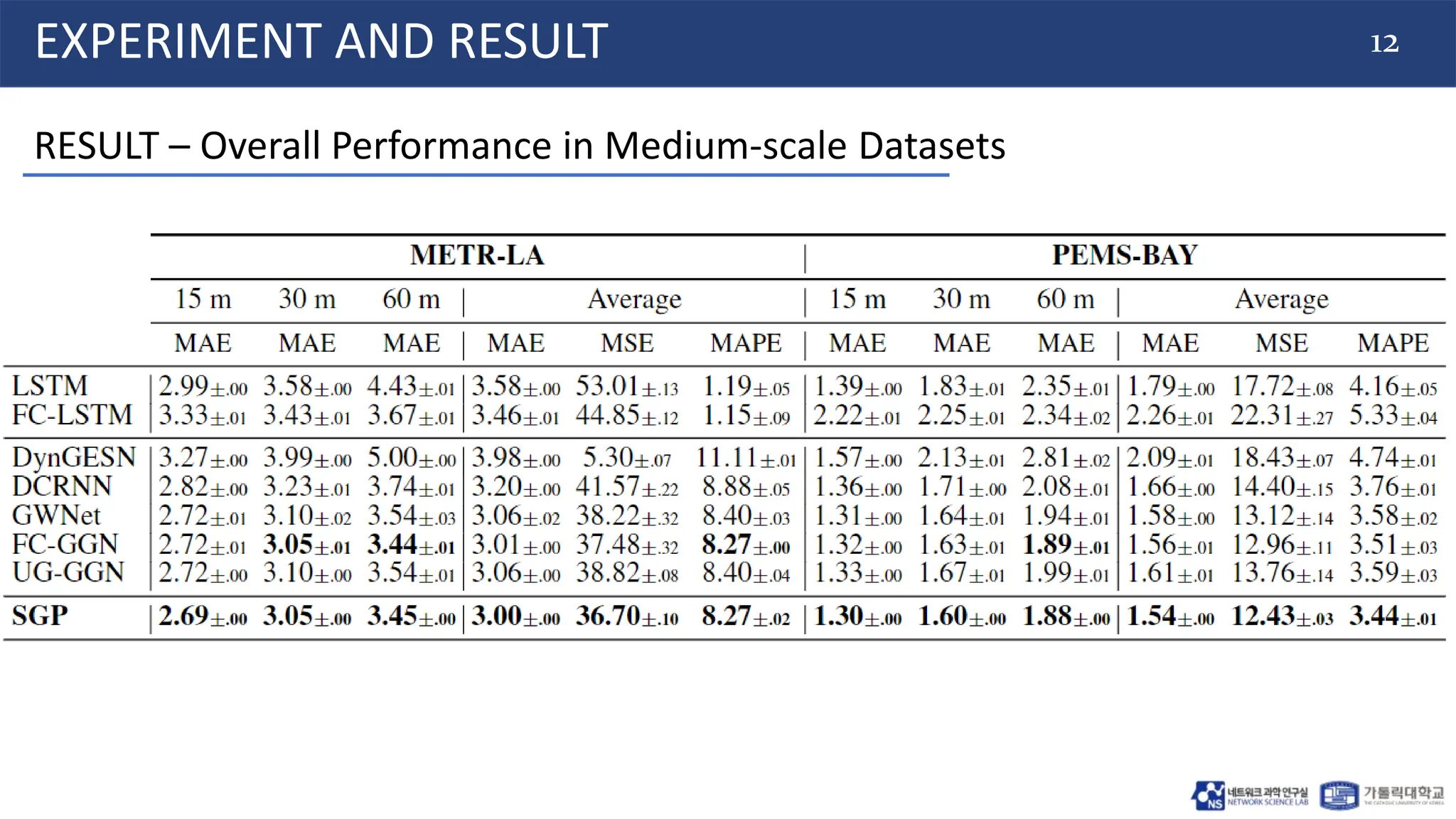

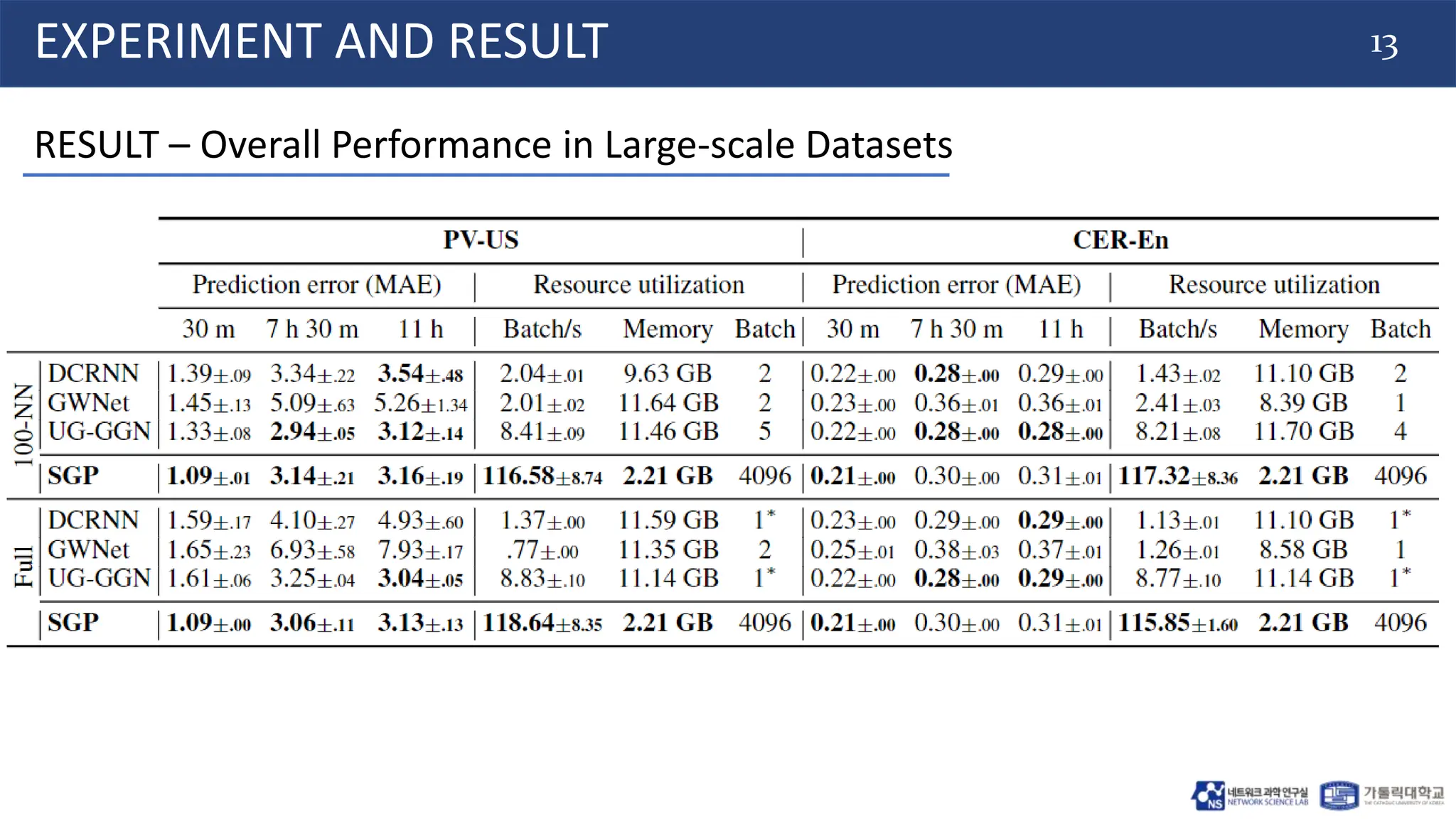

EXPERIMENT AND RESULT

EXPERIMENT SETTINGs

• Dataset:

o Medium scale: METR-LA, PEMS-BAY (traffic dataset).

o Large scale: PV-US, CER-En (Energy production dataset).

• Baselines:

o Deep Learning: LSTM, and FC-LSTM.

o Graph methods: DCRNN[1], Graph WaveNet(GWNet)[2], GatedGN (GGN)[3], and DynGESN[4].

[1] Li, Y., Yu, R., Shahabi, C., & Liu, Y. (2017). Diffusion convolutional recurrent neural network: Data-driven traffic forecasting. arXiv preprint arXiv:1707.01926..

[2] Wu, Z., Pan, S., Long, G., Jiang, J., & Zhang, C. (2019). Graph wavenet for deep spatial-temporal graph modeling. arXiv preprint arXiv:1906.00121.

[3] Gao, J., & Ribeiro, B. (2022, June). On the equivalence between temporal and static equivariant graph representations. In International Conference on Machine Learning (pp. 7052-7076). PMLR.

[4] Micheli, A., & Tortorella, D. (2022). Discrete-time dynamic graph echo state networks. Neurocomputing, 496, 85-95.

• Measurement:

o MAE, MSE, MAPE.](https://image.slidesharecdn.com/20240628labseminarhuyscalablestgnn-240628124039-93589631/75/20240628_LabSeminar_Huy-ScalableSTGNN-pptx-11-2048.jpg)
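The three evaluation metrics listed in the experiment settings can be computed as in this NumPy sketch. The function names are hypothetical. Skipping near-zero targets in MAPE is an assumption made here to avoid division by zero; benchmark protocols (for example, masking missing readings in the traffic datasets) may handle this differently.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

def mse(y_true, y_pred):
    """Mean squared error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean((y_true - y_pred) ** 2))

def mape(y_true, y_pred, eps=1e-8):
    """Mean absolute percentage error, in percent.
    Targets with |y_true| <= eps are skipped (assumption: avoids division by zero)."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    mask = np.abs(y_true) > eps
    return float(np.mean(np.abs((y_true[mask] - y_pred[mask]) / y_true[mask])) * 100)

y_true = [1.0, 2.0, 4.0]
y_pred = [1.0, 3.0, 2.0]
print(mae(y_true, y_pred))   # 1.0
print(mse(y_true, y_pred))   # 5/3, about 1.667
print(mape(y_true, y_pred))  # about 33.33
```

MAE and MSE are reported in the units of the data (MSE in squared units), while MAPE is scale-free. This is why MAPE is useful for comparing results across datasets with very different magnitudes.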

![[20240628_LabSeminar_Huy]ScalableSTGNN.pptx](https://image.slidesharecdn.com/20240628labseminarhuyscalablestgnn-240628124039-93589631/75/20240628_LabSeminar_Huy-ScalableSTGNN-pptx-15-2048.jpg)

![[20240628_LabSeminar_Huy]ScalableSTGNN.pptx](https://image.slidesharecdn.com/20240628labseminarhuyscalablestgnn-240628124039-93589631/75/20240628_LabSeminar_Huy-ScalableSTGNN-pptx-16-2048.jpg)