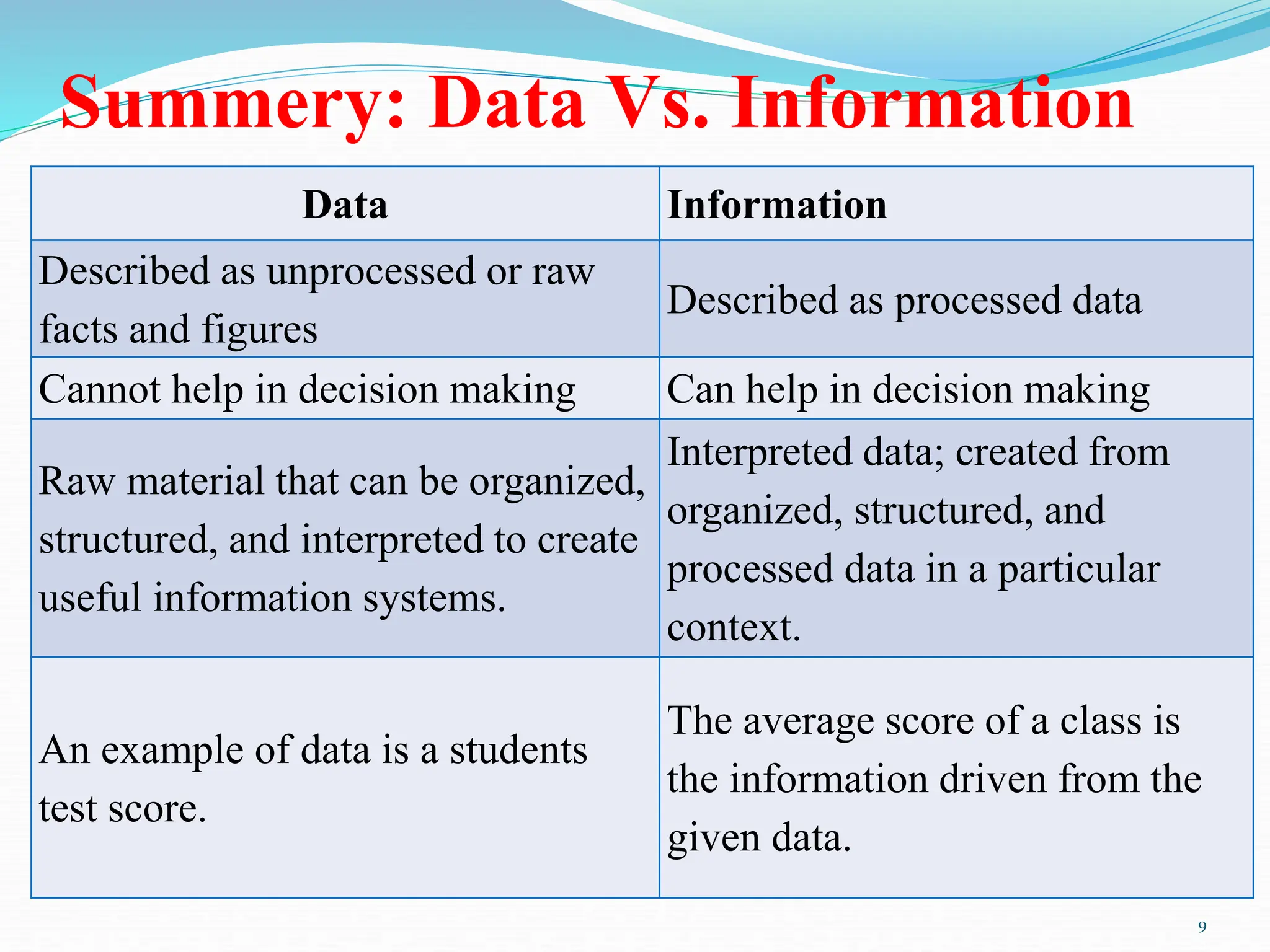

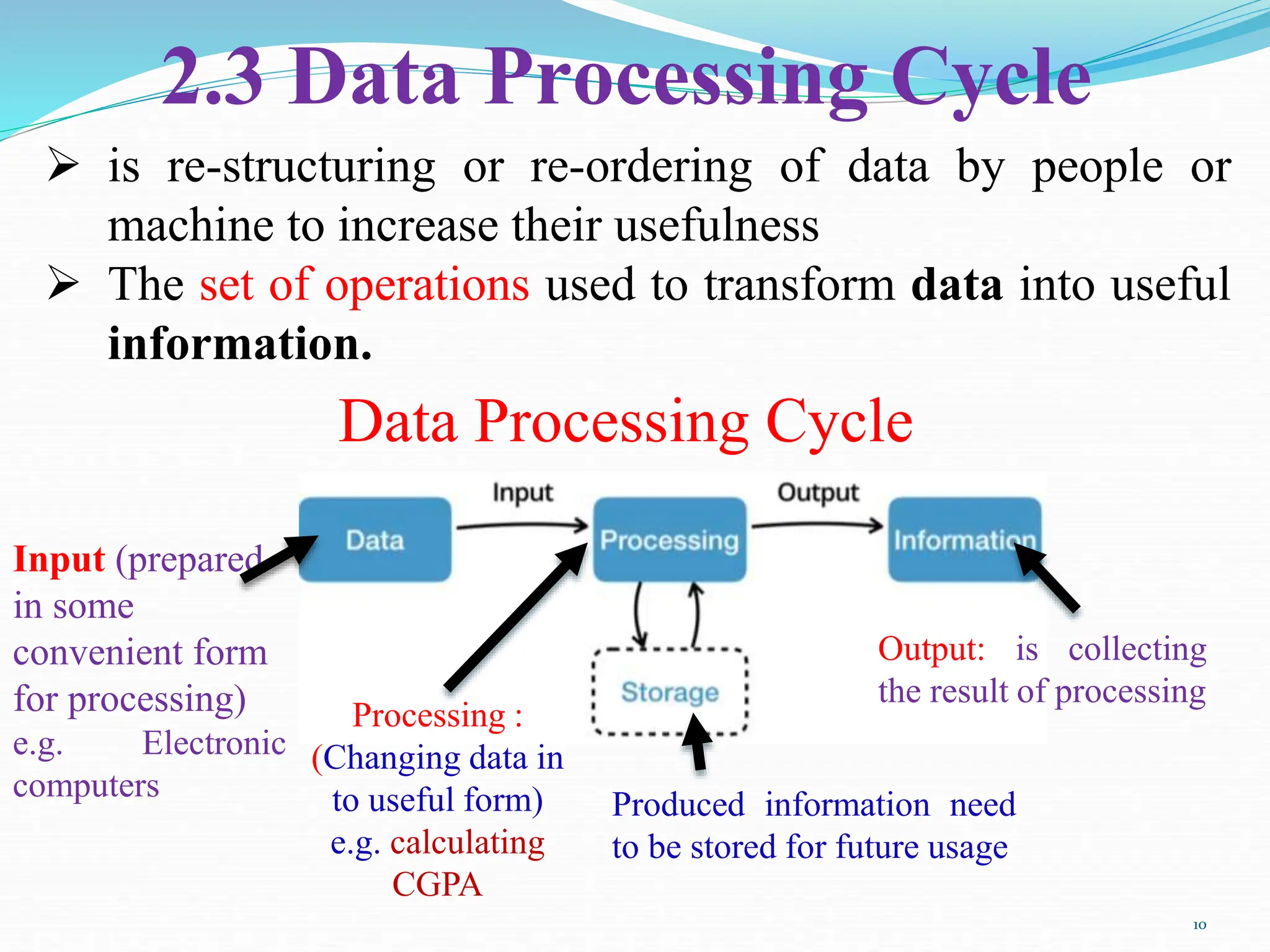

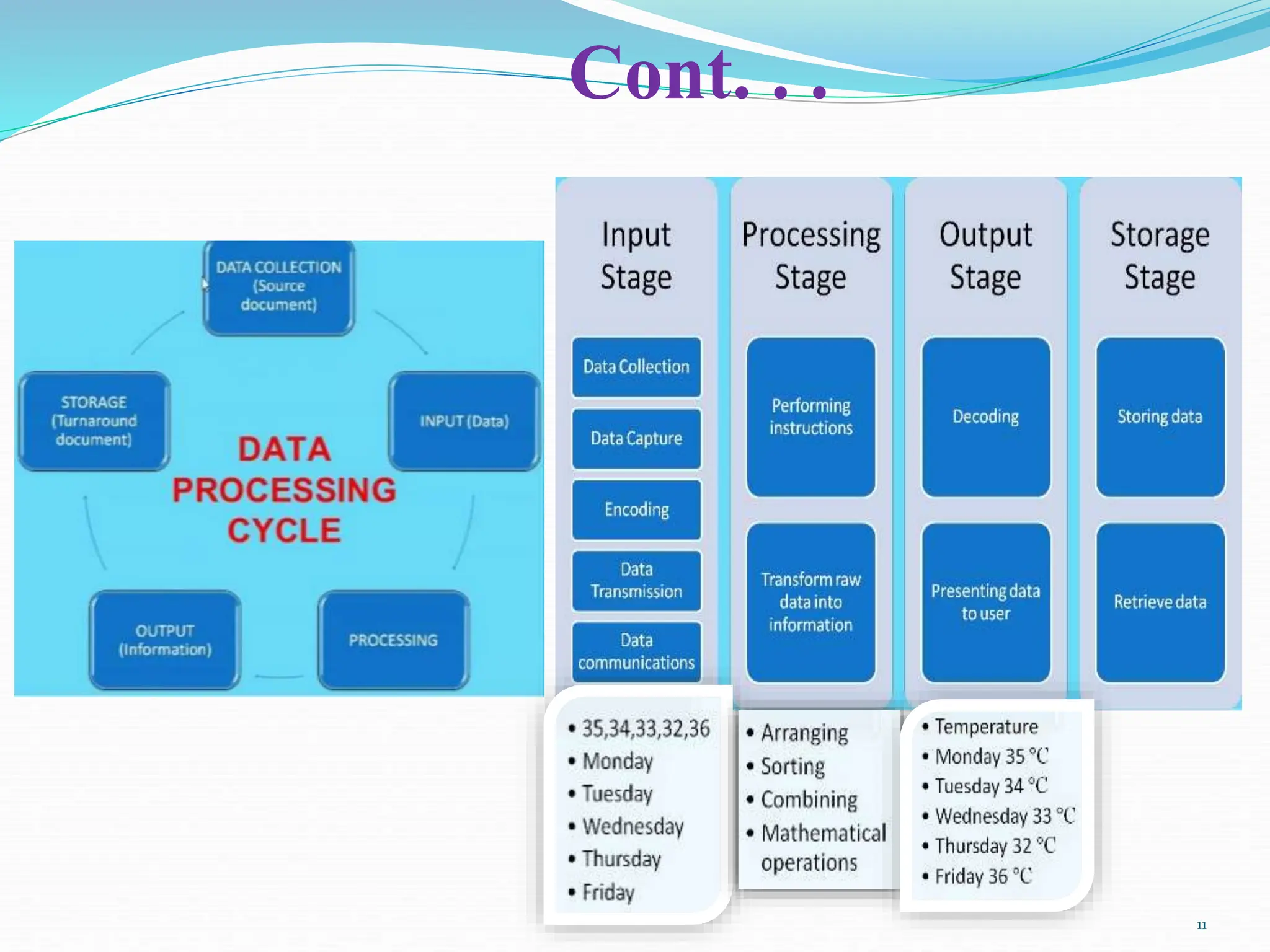

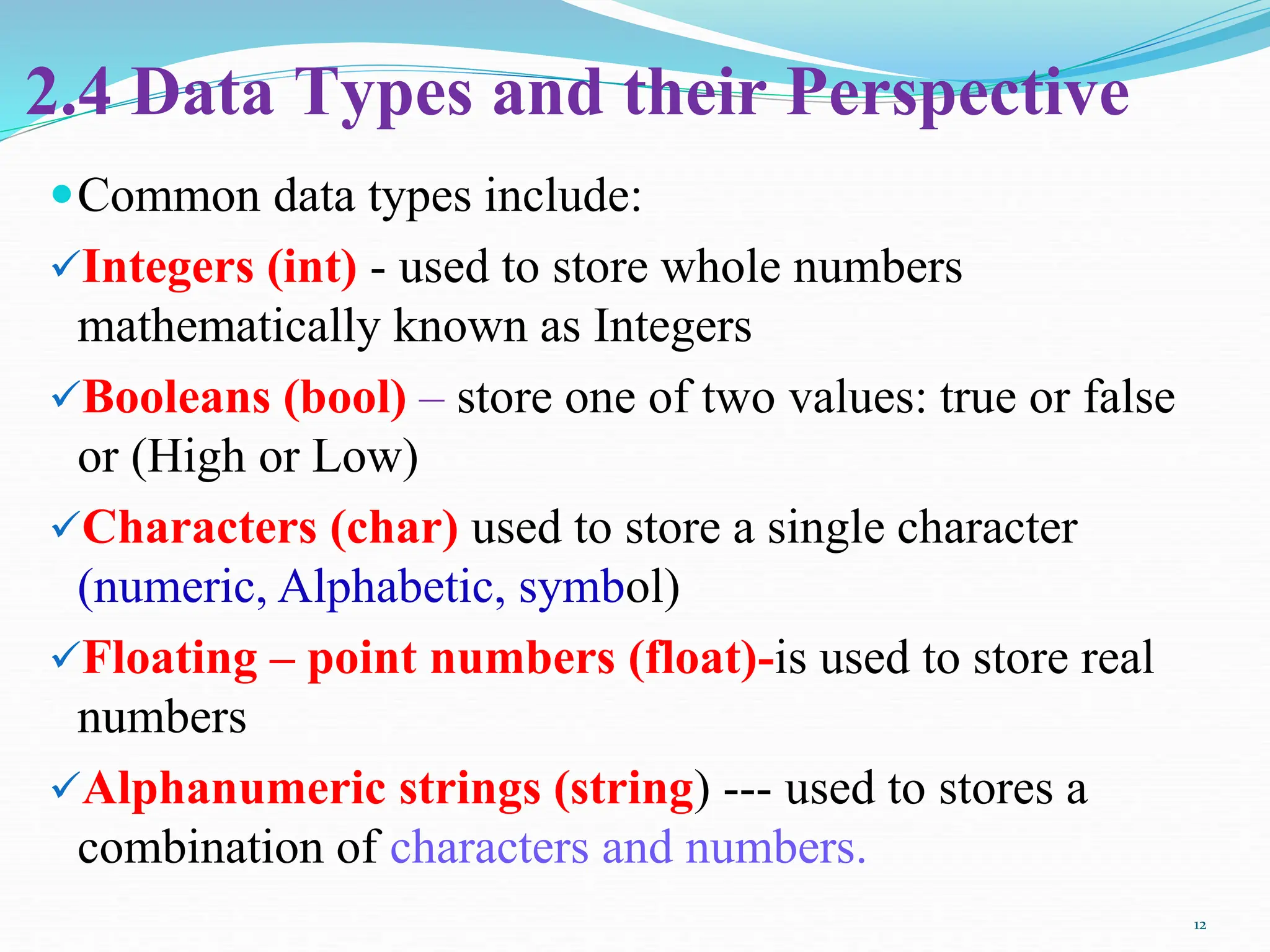

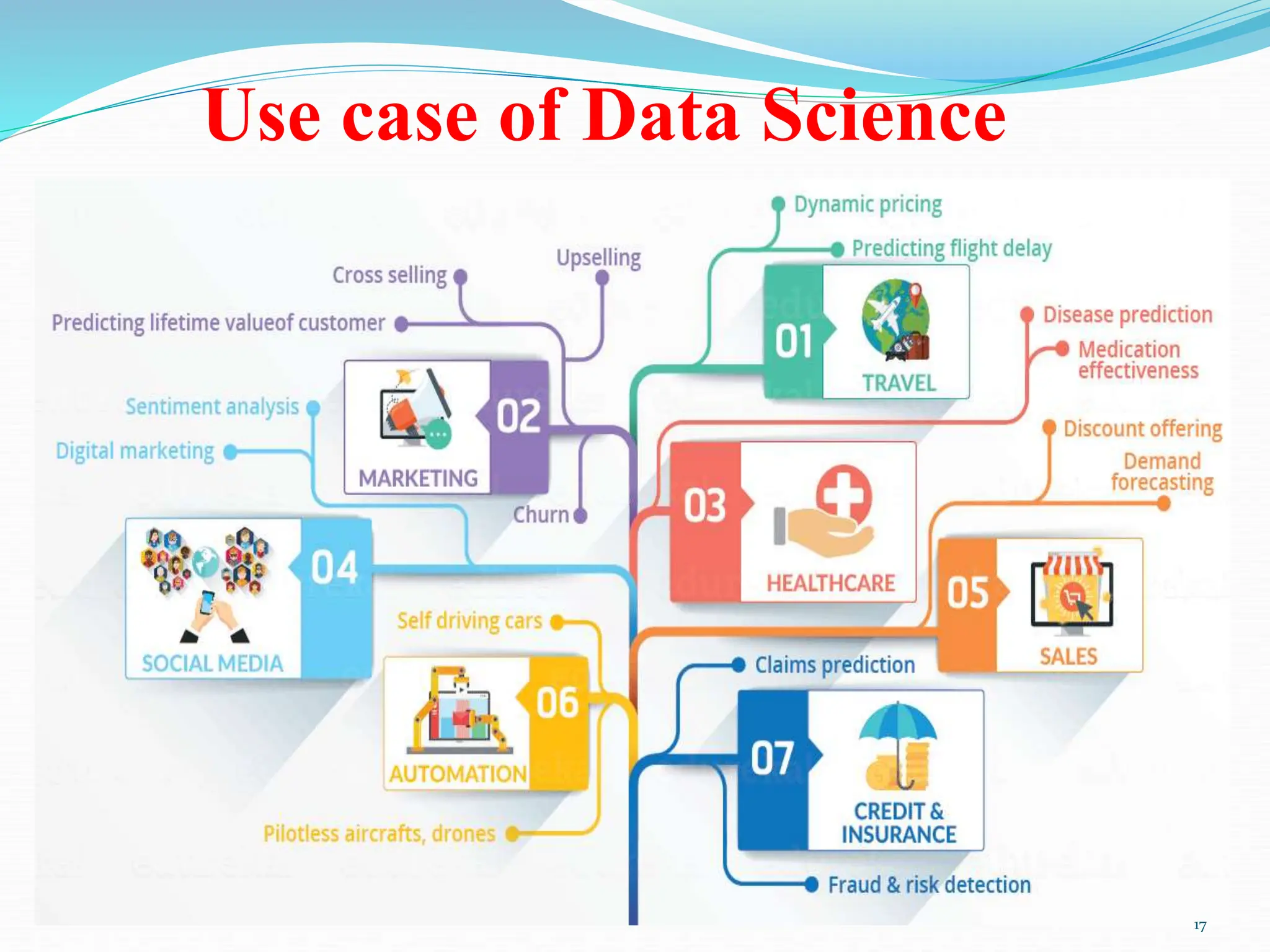

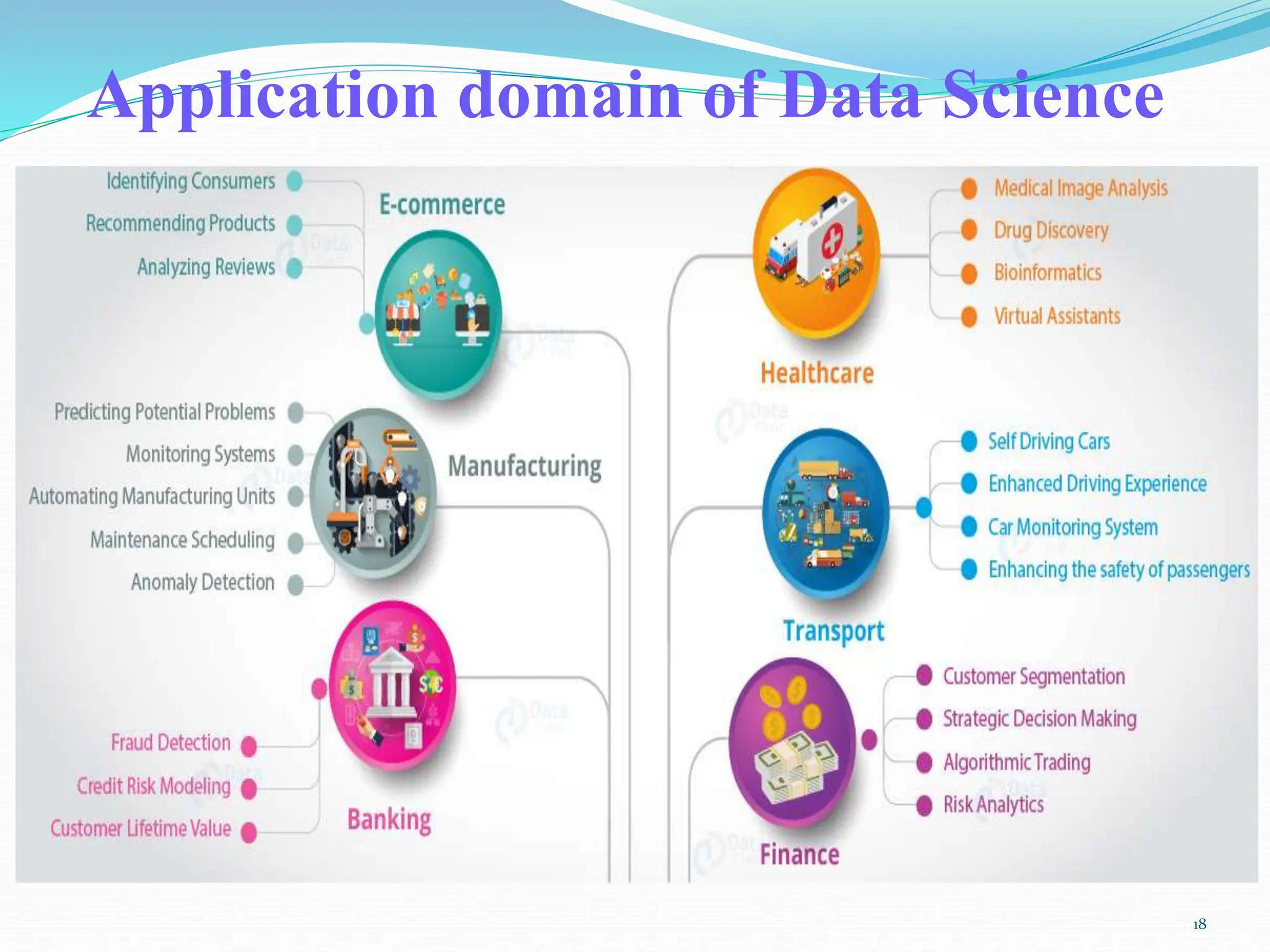

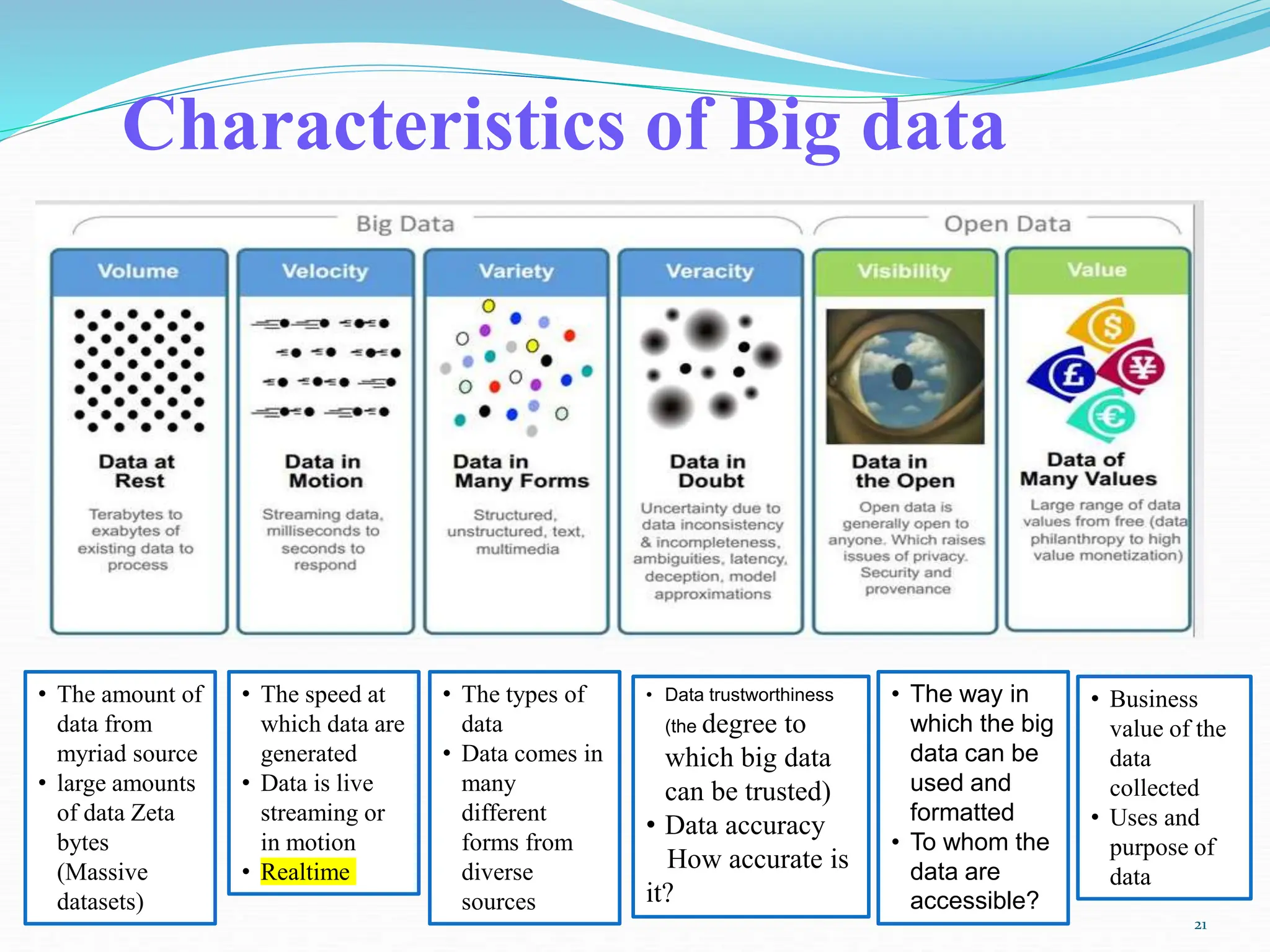

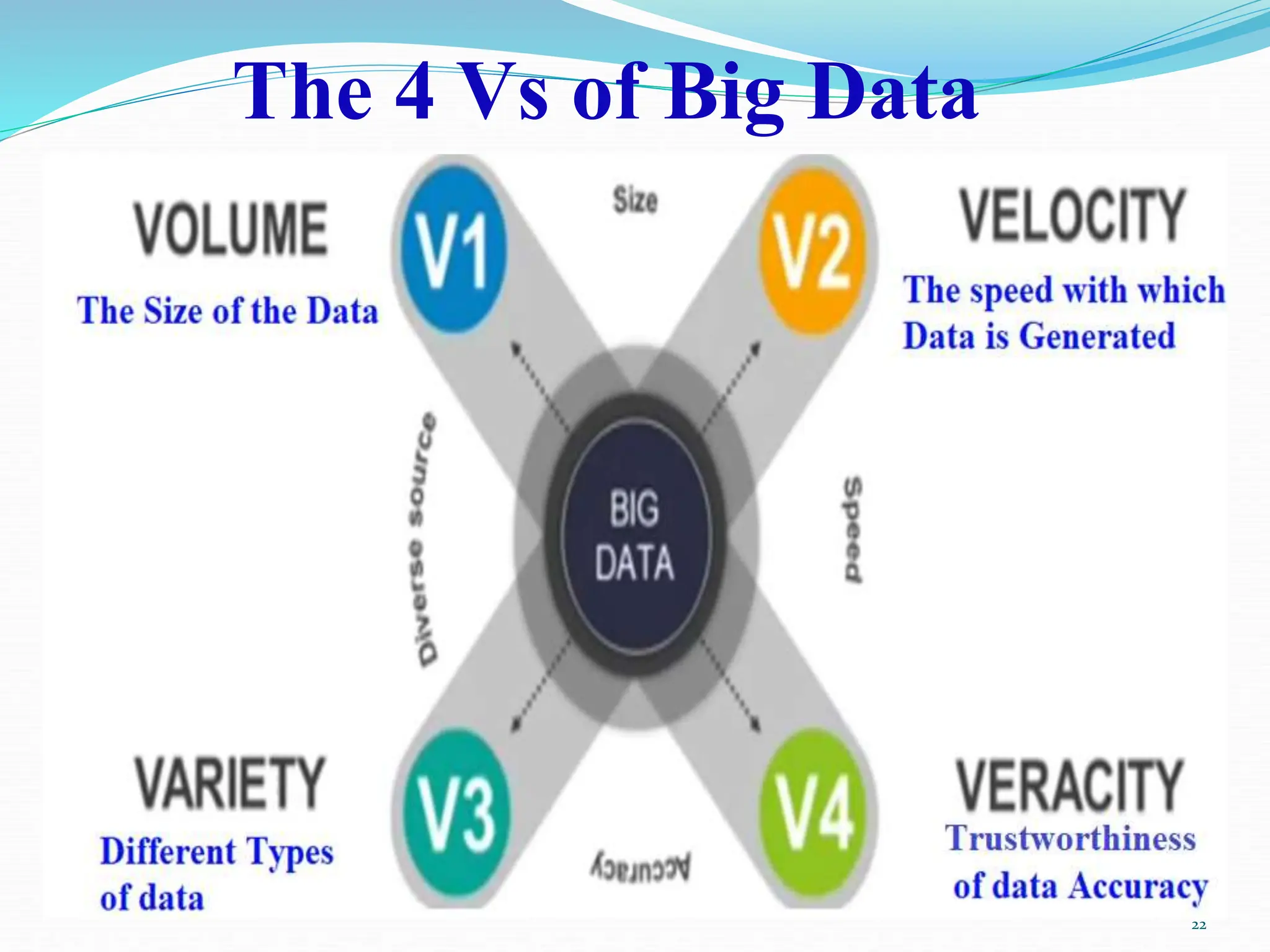

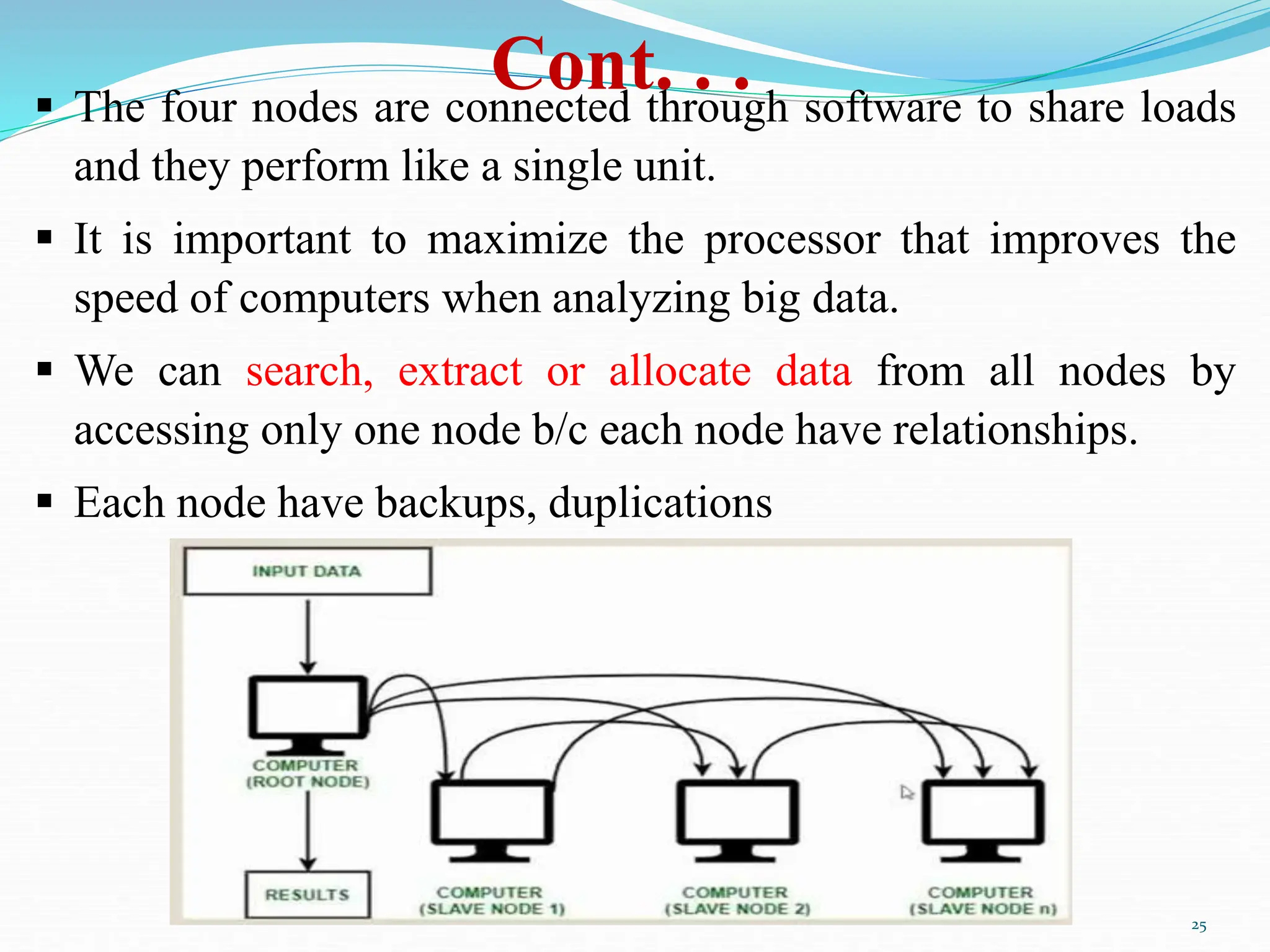

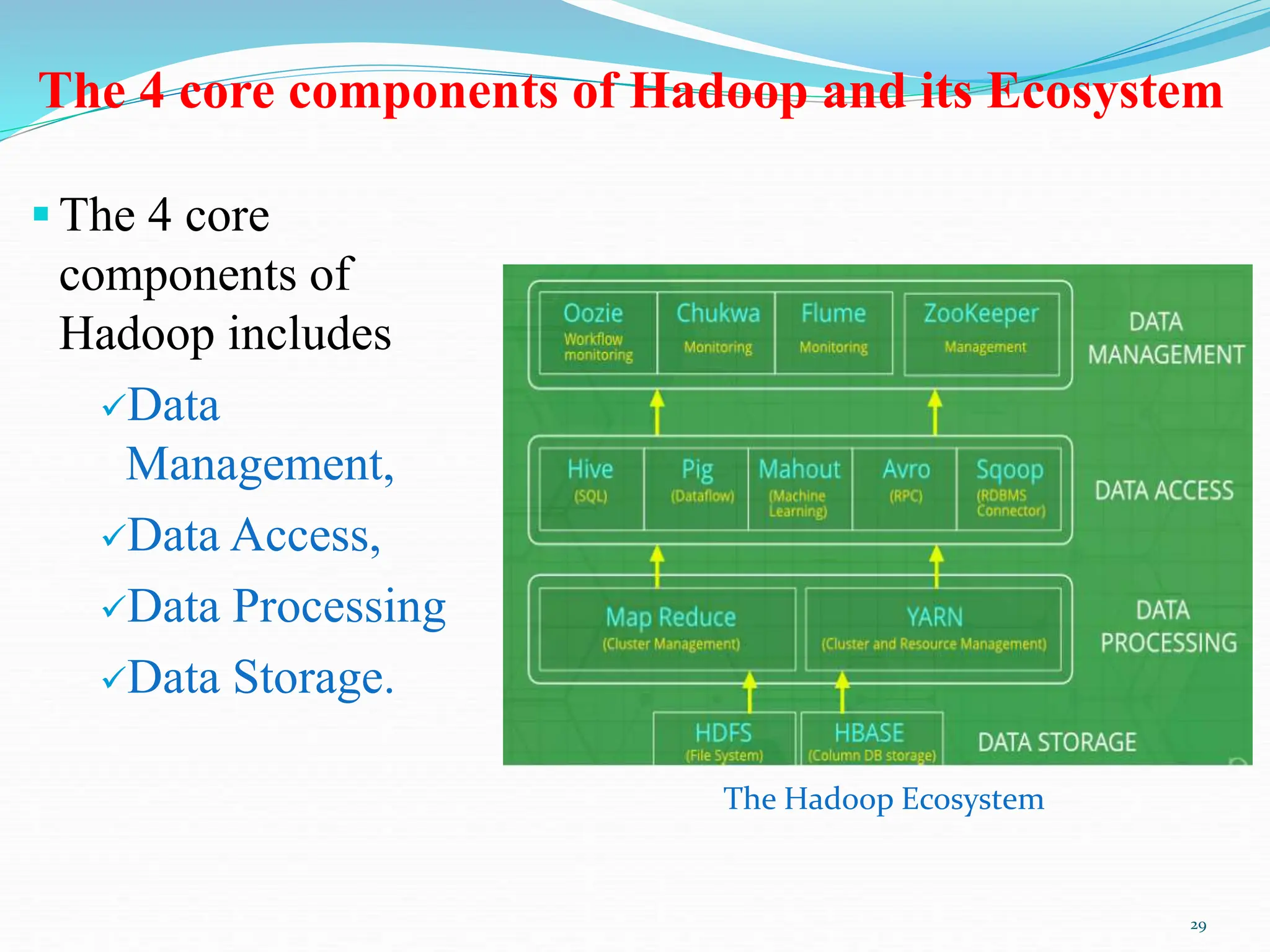

Chapter two gives an overview of data science: its definition, its importance, and the roles of data scientists. It covers the data processing cycle, data types, and the data value chain, with emphasis on big data and clustered computing. The chapter also presents Hadoop and its ecosystem as essential tools for managing and analyzing big data.