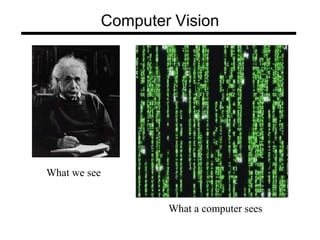

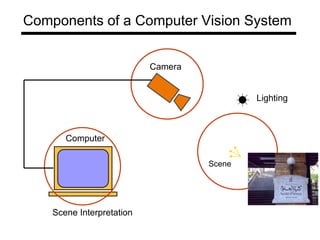

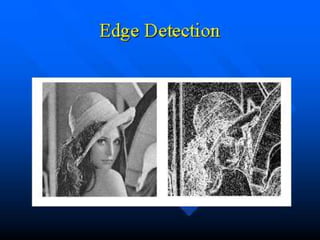

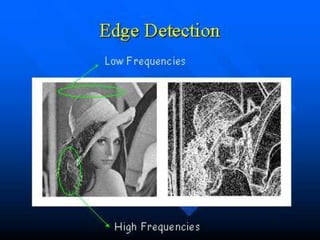

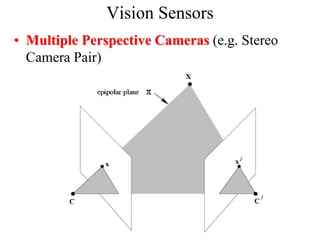

Computer vision systems use digital image processing and analysis techniques to interpret images. Approaches include edge detection, segmentation, and shape and texture description, alongside techniques for image enhancement, restoration, and compression. Human vision is very good at tasks such as recognition and navigation, whereas computer vision remains limited and is challenged by changes in lighting, occlusion, and ambiguity.

![Image

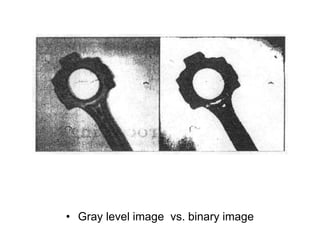

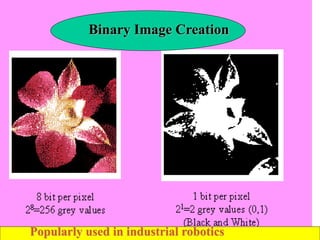

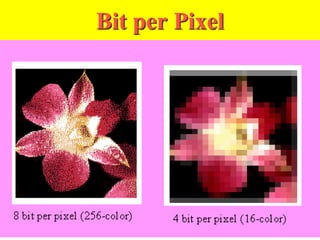

• Image : a two-dimensional array of pixels

• The indices [i, j] of pixels : integer values that specify

the rows and columns in pixel values](https://image.slidesharecdn.com/10833762-240131170631-26cd7415/85/10833762-ppt-4-320.jpg)
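
The definition above can be illustrated with a minimal sketch. It assumes a grayscale image stored as a plain Python list of rows, with `i` as the row index and `j` as the column index, both starting at 0. The pixel values and the helper name `pixel` are hypothetical and chosen only for illustration.

```python
# A tiny 3x4 grayscale image stored as a list of rows.
# The intensity values are made up for illustration.
image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
]

rows = len(image)     # number of rows (valid i: 0 .. rows - 1)
cols = len(image[0])  # number of columns (valid j: 0 .. cols - 1)


def pixel(img, i, j):
    """Return the pixel value at row i, column j (hypothetical helper)."""
    return img[i][j]


print(rows, cols)           # image dimensions
print(pixel(image, 1, 2))   # value at row 1, column 2
```

Here `image[i][j]` selects row `i` first and then column `j`, matching the [i, j] convention on the slide. Array libraries typically use the same row-then-column order.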