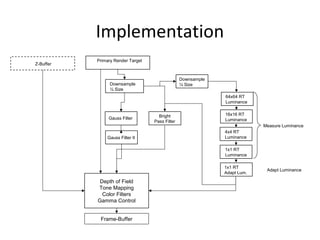

The document presents a comprehensive guide on post-processing techniques in rendering, covering topics such as gamma control, high-dynamic range rendering (HDR), color filters, and various visual effects like motion blur and light streaks. It delves into the mathematics and implementations of these effects, highlighting the importance of techniques like tone mapping and depth of field to achieve desired visual outcomes. Additionally, it discusses the challenges in rendering pipelines and optimization methods to enhance image quality while managing computational resources.

![Gamma control

• Problem: most color manipulation methods applied in a renderer become physically non-linear

• RGB color operations do not look right, and the errors add up [Brown]:

• dynamic lighting

• several light sources

• shadowing

• texture filtering

• alpha blending

• advanced color filters](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-4-320.jpg)

![Gamma control

• Gamma is a compression method, because 8 bits per channel is not much precision to represent color

– Non-linear transfer function to compress color

– Compresses into a roughly perceptually uniform space

-> called sRGB space [Stokes]

• Used everywhere by default for render targets like the framebuffer and textures](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-5-320.jpg)

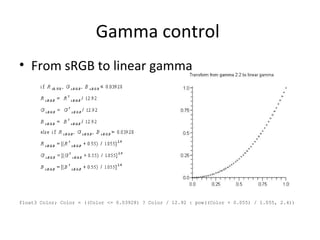

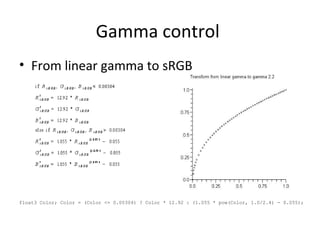

![Gamma Control

• Converting to gamma 1.0 [Stokes]

Color = (Color <= 0.03928) ? Color / 12.92 : pow((Color + 0.055) / 1.055, 2.4);

• Converting to gamma 2.2

Color = (Color <= 0.00304) ? Color * 12.92 : (1.055 * pow(Color, 1.0/2.4) - 0.055);

• Hardware can convert textures and the end result… but some hardware uses linear approximations here

• Vertex colors still need to be converted “by hand”](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-9-320.jpg)
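
The two conversions above can be checked on the CPU. The following is a minimal Python sketch, not shader code, that reuses the thresholds and constants quoted on the slide. The function names and the `blend_in_linear_space` helper are hypothetical and only illustrate why blending should happen at gamma 1.0.

```python
def srgb_to_linear(c: float) -> float:
    """Convert one gamma 2.2 (sRGB) channel value in 0..1 to gamma 1.0."""
    if c <= 0.03928:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Convert one gamma 1.0 (linear) channel value in 0..1 back to gamma 2.2."""
    if c <= 0.00304:
        return c * 12.92
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def blend_in_linear_space(a: float, b: float, t: float) -> float:
    """Blend two sRGB-encoded values: decode, interpolate, re-encode."""
    la, lb = srgb_to_linear(a), srgb_to_linear(b)
    return linear_to_srgb(la + (lb - la) * t)
```

Blending black and white half-and-half this way gives an encoded value noticeably above 0.5, which is the difference a naive blend in gamma 2.2 space would miss.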

![Color Filters: Saturation

• Remove color from image ~= convert to luminance [ITU1990]

• Y of XYZ color space represents luminance

float Lum = dot(Color,float3(0.2126, 0.7152, 0.0722));

Color = lerp(Lum.xxx, Color, Sat);](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-11-320.jpg)
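
As a rough CPU-side illustration of the saturation filter, the sketch below mirrors the two shader lines in Python. The luminance weights come from the slide; `adjust_saturation` and `lerp` are hypothetical helper names.

```python
REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)  # weights quoted on the slide


def luminance(rgb):
    """Dot product of a linear RGB triple with the Rec. 709 weights."""
    return sum(w * c for w, c in zip(REC709_WEIGHTS, rgb))


def lerp(a, b, t):
    """Linear interpolation, same argument order as HLSL lerp."""
    return a + (b - a) * t


def adjust_saturation(rgb, sat):
    """sat = 0 gives greyscale, sat = 1 the original color, sat > 1 boosts it."""
    lum = luminance(rgb)
    return tuple(lerp(lum, c, sat) for c in rgb)
```

Because the interpolation is not clamped, values of `sat` above 1 extrapolate away from grey and can leave the 0..1 range.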

![HDRR

• Ansel Adams' Zone System [Reinhard]](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-16-320.jpg)

![HDRR

• Data with higher range than 0..1

• Storing High-Dynamic Range Data in Textures

– RGBE - 32-bit per pixel

– DXGI_FORMAT_R9G9B9E5_SHAREDEXP - 32-bit per pixel

– DXT1 + quarter L16 – 8-bit per pixel

– DXT1: storing common scale + exponent for each of the color channels in a texture by utilizing unused space in the DXT header – 4-bit per-pixel

– -> Challenge: gamma control -> calc. exp. without gamma

– DX11 has a HDR texture but without gamma control

• Keeping High-Dynamic Range Data in Render Targets

– 10:10:10:2 (DX9: MS, blending, no filtering)

– 7e3 format XBOX 360: configure value range & precision with color exp. bias [Tchou]

– 16:16:16:16 (DX9: some cards: MS+blend others filter+blend)

– DX10: 11:11:10 (MS, source blending, filtering)](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-17-320.jpg)

![HDRR

• To convert RGB to Luminance [ITU1990]

• RGB->CIE XYZ->CIE Yxy

• CIE Yxy->CIE XYZ->RGB](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-21-320.jpg)
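
The conversion chain can be sketched in Python as follows. The slide's own matrices are only present as an image, so the coefficients below are an assumption: the commonly published four-digit Rec. 709 / sRGB (D65) matrices for linear RGB. All function names are hypothetical.

```python
# Assumed linear RGB (Rec. 709 primaries, D65) <-> CIE XYZ matrices.
RGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)
XYZ_TO_RGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def mat_mul(m, v):
    """Multiply a 3x3 matrix (tuple of rows) with a 3-vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def rgb_to_yxy(rgb):
    """RGB -> CIE XYZ -> CIE Yxy (luminance Y plus chromaticity x, y)."""
    X, Y, Z = mat_mul(RGB_TO_XYZ, rgb)
    total = X + Y + Z
    if total == 0.0:  # black has no defined chromaticity
        return (0.0, 0.0, 0.0)
    return (Y, X / total, Y / total)


def yxy_to_rgb(Yxy):
    """CIE Yxy -> CIE XYZ -> RGB."""
    Y, x, y = Yxy
    if y == 0.0:
        return (0.0, 0.0, 0.0)
    X = Y * x / y
    Z = Y * (1.0 - x - y) / y
    return mat_mul(XYZ_TO_RGB, (X, Y, Z))
```

Working in Yxy lets a tone mapping operator rescale only the luminance `Y` and leave the chromaticity `x, y` untouched before converting back.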

![HDRR

• Simple Tone Mapping Operator [Reinhard]

• Scaling with MiddleGrey

• Map range to 0..1](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-22-320.jpg)
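
The equations on this slide are only available as an image. The sketch below therefore assumes the simple global operator as it is usually stated from [Reinhard]: scale the pixel luminance by `middle_grey / average_luminance`, then compress with `L / (1 + L)`. The default of 0.18 for `middle_grey`, the `delta` guard, and the function names are assumptions for illustration.

```python
import math


def log_average_luminance(luminances, delta=1e-4):
    """Geometric mean of the scene luminance; delta avoids log(0)."""
    total = sum(math.log(delta + lum) for lum in luminances)
    return math.exp(total / len(luminances))


def reinhard_tone_map(lum, avg_lum, middle_grey=0.18):
    """Scale with MiddleGrey, then map the unbounded range into 0..1."""
    scaled = middle_grey / avg_lum * lum
    return scaled / (1.0 + scaled)
```

A pixel at exactly the average luminance ends up at `0.18 / 1.18`, and arbitrarily bright pixels approach but never reach 1.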

![HDRR

• Exponential decay function [Pattanaik]

• Frame-rate independent

• Adapted luminance chases average luminance

– Stable lighting conditions -> the same

• tau interpolates between adaptation rates of cones and rods

• 0.2 for rods / 0.4 for cones](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-25-320.jpg)
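
The adaptation equation itself is not reproduced in the slide text. As a hedged sketch, the Python below assumes the common exponential-decay form `adapted += (average - adapted) * (1 - exp(-dt * tau))`, with `tau` treated as a rate. The function name and parameter names are hypothetical.

```python
import math


def adapt_luminance(adapted, average, dt, tau):
    """Move the adapted luminance toward the average luminance.

    dt is the frame time in seconds; tau is the assumed adaptation rate.
    """
    return adapted + (average - adapted) * (1.0 - math.exp(-dt * tau))
```

With this form, two steps of `dt / 2` land on the same value as one step of `dt`, which is what makes the update frame-rate independent. Under stable lighting (`adapted == average`) the value does not change.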

![HDRR

• Luminance History function [Tchou]

• Even out fast luminance changes (flashes etc.)

• Keeps track of the luminance of the last 16 frames

• If the last 16 values are all >= or all < the current adapted luminance

-> run light adaptation

• If some of the 16 values are going in different directions

-> no light adaptation

• Runs only once per frame -> cheap](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-26-320.jpg)
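
One way to read the rule above is sketched below in Python. The class name, the use of a `deque`, and the decision to skip adaptation until 16 samples exist are assumptions for illustration; they are not taken from [Tchou].

```python
from collections import deque


class LuminanceHistory:
    """Ring buffer holding the average luminance of the last N frames."""

    def __init__(self, size=16):
        self.values = deque(maxlen=size)

    def push(self, average_luminance):
        """Record this frame's average luminance (called once per frame)."""
        self.values.append(average_luminance)

    def should_adapt(self, adapted_luminance):
        """Adapt only if every stored value lies on the same side."""
        if len(self.values) < self.values.maxlen:
            return False  # assumption: wait until the history is full
        all_above = all(v >= adapted_luminance for v in self.values)
        all_below = all(v < adapted_luminance for v in self.values)
        return all_above or all_below
```

A single bright flash inside an otherwise dark sequence leaves the history pointing in two directions, so adaptation is suppressed until the flash has scrolled out of the buffer.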

![HDRR

• Scotopic View

• Contrast is lower

• Visual acuity is lower

• Blue shift

• Convert RGB to CIE XYZ

• Scotopic Tone Mapping Operator [Shirley]

• Multiply with a grey-bluish color](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-31-320.jpg)

![Depth of Field

• Range of acceptable sharpness == Depth of Field, see [Scheuermann] and [Gillham]

• Define a near and far blur plane

• Everything in front of the near blur plane and everything behind the far blur plane is blurred](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-34-320.jpg)

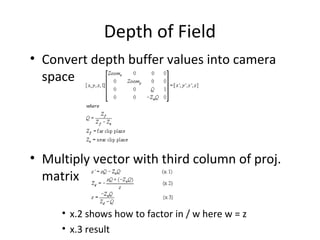

![Depth of Field

• Applying Depth of Field

• Convert to Camera Z == pixel distance from camera

float PixelCameraZ = (-NearClip * Q) / (Depth - Q);

• Focus + Depth of Field Range [DOFRM]

lerp(OriginalImage, BlurredImage, saturate(Range * abs(Focus - PixelCameraZ)));

-> Auto-Focus effect possible

• Color leaking: change draw order or ignore it](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-36-320.jpg)
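
The two shader lines can be exercised on the CPU with the Python sketch below. `Q` is not defined in the slide text; the sketch assumes the usual Direct3D-style projection term `Q = FarClip / (FarClip - NearClip)`. The function names are hypothetical.

```python
def pixel_camera_z(depth, near_clip, far_clip):
    """Recover camera-space Z from a 0..1 depth buffer value."""
    q = far_clip / (far_clip - near_clip)  # assumed definition of Q
    return (-near_clip * q) / (depth - q)


def saturate(x):
    """Clamp to 0..1, like the HLSL intrinsic."""
    return max(0.0, min(1.0, x))


def dof_blend_factor(focus, range_scale, camera_z):
    """0 keeps the sharp image, 1 uses the blurred image."""
    return saturate(range_scale * abs(focus - camera_z))


def apply_dof(original, blurred, focus, range_scale, camera_z):
    """Per-channel lerp between the original and the blurred pixel."""
    t = dof_blend_factor(focus, range_scale, camera_z)
    return tuple(o + (b - o) * t for o, b in zip(original, blurred))
```

For the auto-focus effect mentioned above, `focus` could be set each frame to the camera Z sampled at the screen centre; that is one possible approach rather than something the slide specifies.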

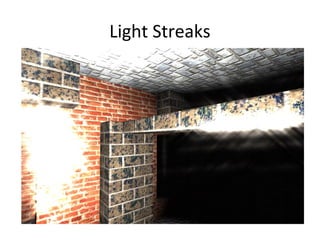

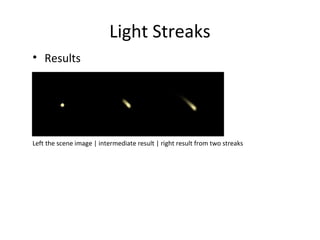

![Light Streaks

• Light scattering through a lens, eye lens or aperture

-> cracks, scratches, grooves, dust and lens imperfections cause light to scatter and diffract

• Painting light streaks would be done with a brush by pressing a bit harder on the common point and releasing the pressure while moving the brush away from this point

-> Light streak filter “smears” a bright spot into several directions [Kawase][Oat]](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-38-320.jpg)

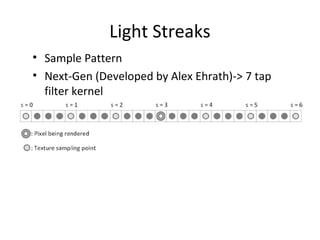

![Light Streaks

• Sample Pattern

• Masaki Kawase [Kawase] -> 4-tap == XBOX](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-39-320.jpg)

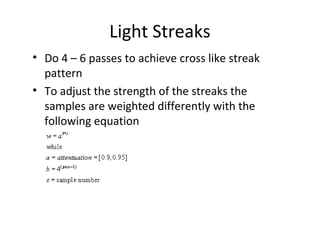

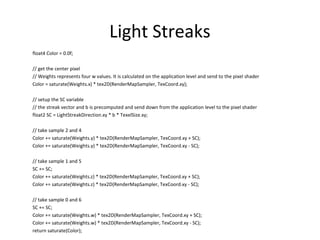

![Light Streaks

• Direction for the streak is coming from orientation vector [Oat] like this

• b holds the power value of four or seven – shown on previous slide

• TxSize holds the texel size (1.0f / SizeOfTexture)](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-43-320.jpg)

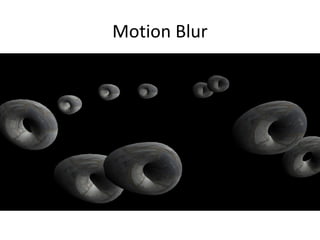

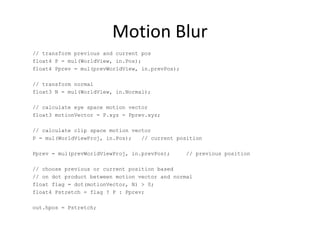

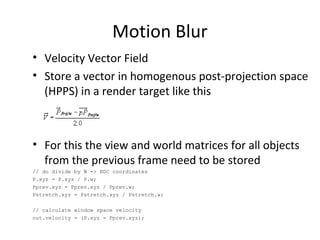

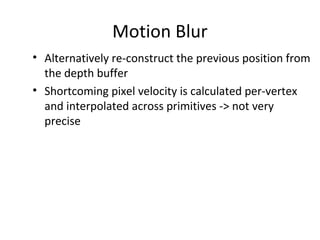

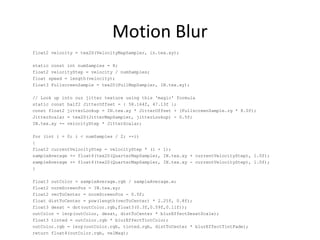

![Motion Blur

• Geometry Stretching [Wloka]

• Create a motion vector in view or world-space Vview

• Compare this vector to the normal in view or world-space

– If similar in direction -> the current position value is chosen to transform the vertex

– Otherwise the previous position value](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-48-320.jpg)
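
The per-vertex selection can be sketched in Python as below. It assumes "similar in direction" means a positive dot product between the motion vector and the normal; the function names and argument layout are hypothetical.

```python
def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def stretched_position(current_pos, previous_pos, normal):
    """Pick the current or previous position for one vertex.

    Vertices facing the direction of travel use the current position,
    the rest keep the previous one, which stretches the mesh along
    its motion vector.
    """
    motion = tuple(c - p for c, p in zip(current_pos, previous_pos))
    if dot(motion, normal) > 0.0:
        return current_pos
    return previous_pos
```

In a real vertex shader both positions and the normal would first be brought into the same space (view or world), as the slide notes.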

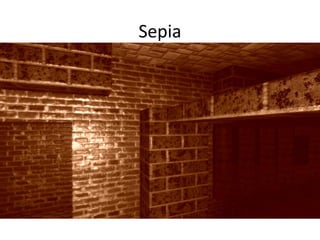

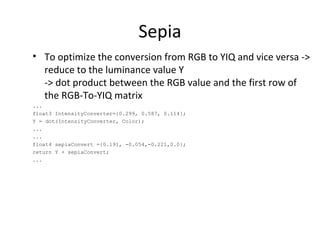

![Sepia

• Different from saturation control

• Marwan Ansari [Ansari] developed an efficient way to convert to Sepia

• Basis is the YIQ color space

• Converting from RGB to YIQ with the matrix M

• Converting from YIQ to RGB is M’s inverse](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-53-320.jpg)
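
The matrix M is only present as an image, so the Python sketch below assumes commonly published three-digit YIQ coefficients. It also assumes the sepia step replaces I with 0.2 and Q with 0.0 before converting back, which is how this technique is usually described; treat both as assumptions rather than the slide's exact numbers.

```python
# Assumed first row of the RGB -> YIQ matrix M (the luma row).
Y_ROW = (0.299, 0.587, 0.114)

# Assumed inverse matrix, YIQ -> RGB.
YIQ_TO_RGB = (
    (1.0, 0.956, 0.621),
    (1.0, -0.272, -0.647),
    (1.0, -1.106, 1.703),
)

SEPIA_I = 0.2  # assumed chroma replacement
SEPIA_Q = 0.0


def sepia(rgb):
    """Keep Y, overwrite I and Q with constants, convert back to RGB."""
    y = sum(w * c for w, c in zip(Y_ROW, rgb))
    yiq = (y, SEPIA_I, SEPIA_Q)
    return tuple(sum(row[k] * yiq[k] for k in range(3)) for row in YIQ_TO_RGB)
```

Because I and Q are constants, only the Y row of M is needed on the way in, and the conversion back collapses to adding a fixed offset per channel to Y. That is where the efficiency comes from.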

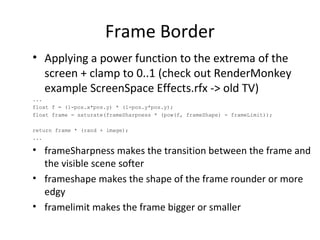

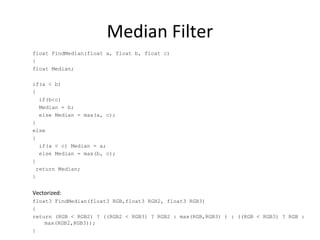

![Median Filter

• Used to remove “salt and pepper noise” from a picture [Mitchell]

• Can be used to reduce color quality -> cheap camera with MPEG compression

• Picks the middle value](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-60-320.jpg)
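
A minimal single-channel 3x3 median filter in Python shows what "picks the middle value" means. The window size, the clamp-to-edge border handling, and the function names are assumptions for illustration; a pixel shader version would typically sort the samples with min/max operations instead.

```python
def median3x3(image, x, y):
    """Median of the 3x3 neighbourhood around (x, y), clamped at borders."""
    height, width = len(image), len(image[0])
    window = []
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            sy = min(max(y + dy, 0), height - 1)
            sx = min(max(x + dx, 0), width - 1)
            window.append(image[sy][sx])
    window.sort()
    return window[4]  # middle of nine sorted values


def median_filter(image):
    """Apply the 3x3 median to every pixel of a list-of-rows image."""
    return [
        [median3x3(image, x, y) for x in range(len(image[0]))]
        for y in range(len(image))
    ]
```

An isolated outlier never reaches the middle of the sorted window, so single "salt" or "pepper" pixels disappear while larger features survive.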

![References

[Ansari] Marwan Y. Ansari, "Fast Sepia Tone Conversion", Game Programming Gems 4, Charles River Media, pp 461 - 465, ISBN 1-58450-295-9

[Baker] Steve Baker, "Learning to Love your Z-Buffer", http://sjbaker.org/steve/omniv/love_your_z_buffer.html

[Berger] R.W. Berger, “Why Do Images Appear Darker on Some Displays?”, http://www.bberger.net/rwb/gamma.html

[Brown] Simon Brown, “Gamma-Correct Rendering”, http://www.sjbrown.co.uk/?article=gamma

[Carucci] Francesco Carucci, "Simulating blending operations on floating point render targets", ShaderX2 - Shader Programming Tips and Tricks with DirectX 9, Wordware Inc., pp 172 - 177, ISBN 1-55622-988-7

[DOFRM] Depth of Field Effect in RenderMonkey 1.62

[Gillham] David Gillham, "Real-Time Depth-of-Field Implemented with a Post-Processing only Technique", ShaderX5: Advanced Rendering, Charles River Media / Thomson, pp 163 - 175, ISBN 1-58450-499-4

[Green] Simon Green, "Stupid OpenGL Shader Tricks", http://developer.nvidia.com/docs/IO/8230/GDC2003_OpenGLShaderTricks.pdf

[ITU1990] ITU (International Telecommunication Union), Geneva. ITU-R Recommendation BT.709, Basic Parameter Values for the HDTV Standard for the Studio and for International Programme Exchange, 1990 (Formerly CCIR Rec. 709).

[Kawase] Masaki Kawase, "Frame Buffer Postprocessing Effects in DOUBLE-S.T.E.A.L (Wreckless)", http://www.daionet.gr.jp/~masa/

[Kawase2] Masumi Nagaya, Masaki Kawase, Interview on WRECKLESS: The Yakuza Missions (Japanese Version Title: "DOUBLE-S.T.E.A.L"), http://spin.s2c.ne.jp/dsteal/wreckless.html

[Krawczyk] Grzegorz Krawczyk, Karol Myszkowski, Hans-Peter Seidel, "Perceptual Effects in Real-time Tone Mapping", Proc. of Spring Conference on Computer Graphics, 2005

[Mitchell] Jason L. Mitchell, Marwan Y. Ansari, Evan Hart, "Advanced Image Processing with DirectX 9 Pixel Shaders", ShaderX2 - Shader Programming Tips and Tricks with DirectX 9, Wordware Inc., pp 439 - 464, ISBN 1-55622-988-7

[Oat] Chris Oat, "A Steerable Streak Filter", ShaderX3: Advanced Rendering With DirectX And OpenGL, Charles River Media, pp 341 - 348, ISBN 1-58450-357-2

[Pattanaik] Sumanta N. Pattanaik, Jack Tumblin, Hector Yee, Donald P. Greenberg, "Time Dependent Visual Adaptation For Fast Realistic Image Display", SIGGRAPH 2000, pp 47-54

[Persson] Emil Persson, "HDR Texturing" in the ATI March 2006 SDK.

[Poynton] Charles Poynton, "The rehabilitation of gamma", http://www.poynton.com/PDFs/Rehabilitation_of_gamma.pdf

[Reinhard] Erik Reinhard, Michael Stark, Peter Shirley, James Ferwerda, "Photographic Tone Reproduction for Digital Images", http://www.cs.utah.edu/~reinhard/cdrom/

[Scheuermann] Thorsten Scheuermann and Natalya Tatarchuk, ShaderX3: Advanced Rendering With DirectX And OpenGL, Charles River Media, pp 363 - 377, ISBN 1-58450-357-2

[Seetzen] Helge Seetzen, Wolfgang Heidrich, Wolfgang Stuerzlinger, Greg Ward, Lorne Whitehead, Matthew Trentacoste, Abhijeet Ghosh, Andrejs Vorozcovs, "High Dynamic Range Display Systems", SIGGRAPH 2004, pp 760 - 768

[Shimizu] Clement Shimizu, Amit Shesh, Baoquan Chen, "Hardware Accelerated Motion Blur Generation", EUROGRAPHICS 2003 22:3

[Shirley] Peter Shirley et al., "Fundamentals of Computer Graphics", Second Edition, A.K. Peters pp. 543 - 544, 2005, ISBN 1-56881-269-8

[Spencer] Greg Spencer, Peter Shirley, Kurt Zimmerman, Donald P. Greenberg, "Physically-Based Glare Effects for Digital Images", SIGGRAPH 1995, pp. 325 - 334.

[Stokes] Michael Stokes (Hewlett-Packard), Matthew Anderson (Microsoft), Srinivasan Chandrasekar (Microsoft), Ricardo Motta (Hewlett-Packard), “A Standard Default Color Space for the Internet - sRGB”, http://www.w3.org/Graphics/Color/sRGB.html

[Tchou] Chris Tchou, HDR the Bungie Way, http://msdn2.microsoft.com/en-us/directx/aa937787.aspx

[W3CContrast] W3C Working Draft, 26 April 2000, Technique, Checkpoint 2.2 - Ensure that foreground and background color combinations provide sufficient contrast when viewed by someone having color deficits or when viewed on a black and white screen, http://www.w3.org/TR/AERT#color-contrast

[Waliszewski] Arkadiusz Waliszewski, "Floating-point Cube maps", ShaderX2 - Shader Programming Tips and Tricks with DirectX 9, Wordware Inc., pp 319 - 323, ISBN 1-55622-988-7](https://image.slidesharecdn.com/05postfx-150519143223-lva1-app6891/85/Paris-Master-Class-2011-05-Post-Processing-Pipeline-64-320.jpg)