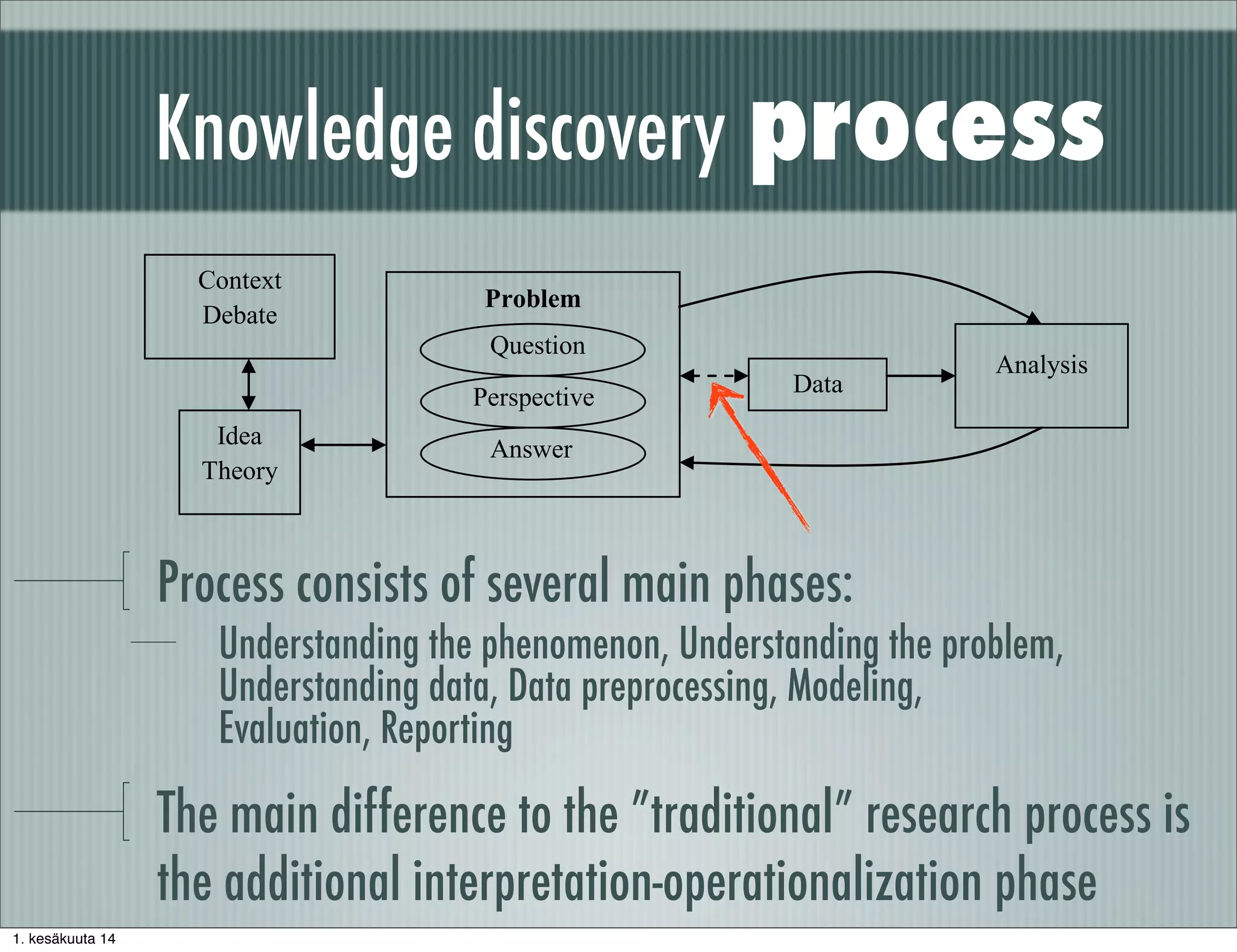

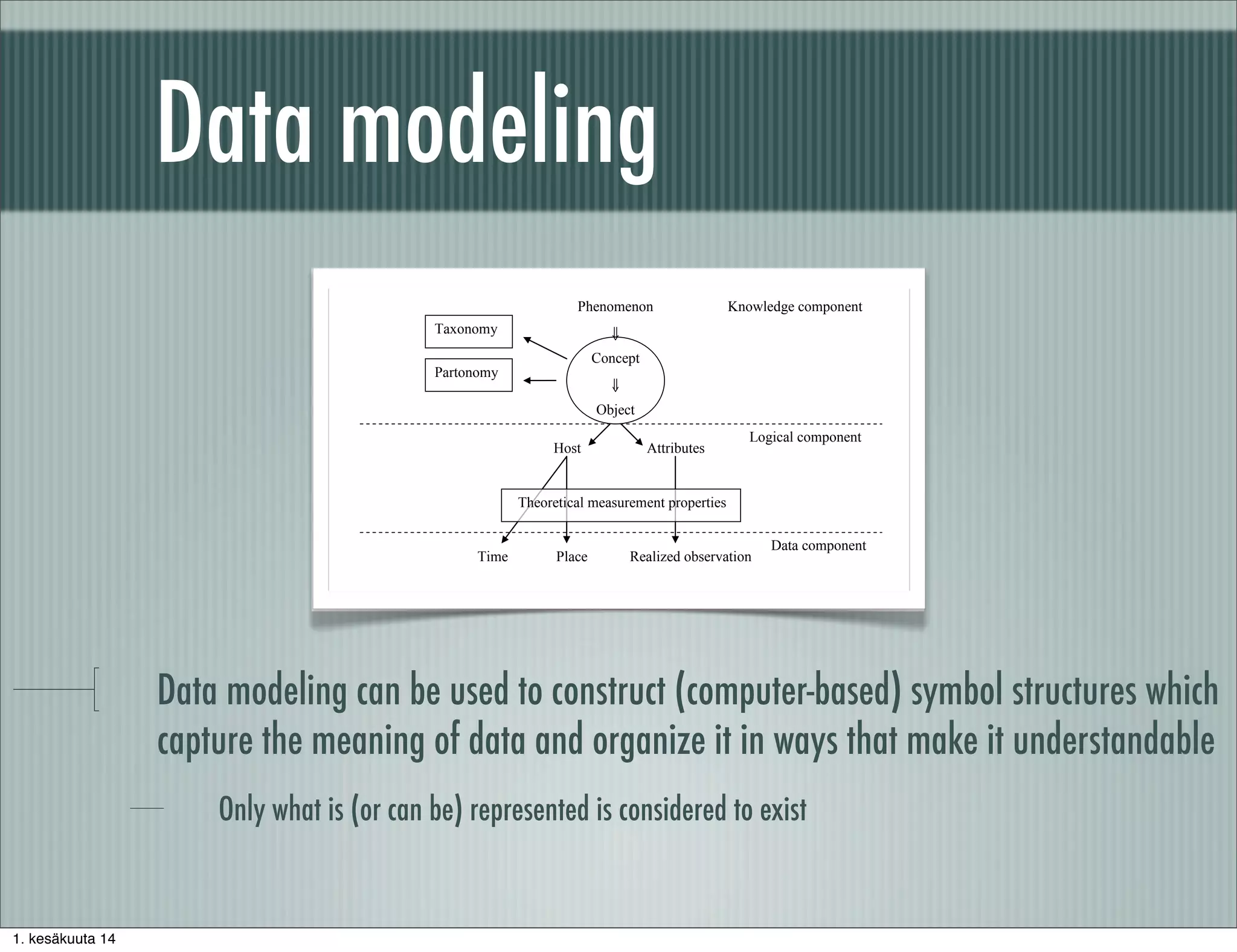

The document discusses methodological principles for working with big data. It notes that big data often consists of secondary data that was not collected with a specific research question in mind, so it requires additional background information and preprocessing before analysis. Using big data effectively requires skills in measurement, data modeling, and statistical computing, as well as knowledge of the relevant subject-matter theory. Data preprocessing is an important part of the analysis process: it involves correcting deficiencies in the data, reducing uninteresting data, and intelligently enriching the data with background knowledge.
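
The three preprocessing steps named above (correcting deficiencies, reducing uninteresting data, enriching with background knowledge) can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration and does not come from the document: the station records, the `status` flag used to mark uninteresting rows, the median fill for missing values, and the `station_region` lookup standing in for background knowledge.

```python
from statistics import median

# Hypothetical secondary data: records collected for some other purpose.
raw_records = [
    {"station": "A", "temp_c": 21.5, "status": "ok"},
    {"station": "B", "temp_c": None, "status": "ok"},    # missing measurement
    {"station": "A", "temp_c": 22.1, "status": "test"},  # calibration run
    {"station": "C", "temp_c": 19.8, "status": "ok"},
]

# Hypothetical background knowledge that the raw data does not contain.
station_region = {"A": "north", "B": "south", "C": "north"}


def preprocess(records, background):
    """Apply the three steps in order: correct, reduce, enrich."""
    # 1. Correct deficiencies: fill missing temperatures with the median
    #    of the observed values (one simple choice among many).
    observed = [r["temp_c"] for r in records if r["temp_c"] is not None]
    fill_value = median(observed)
    corrected = [
        {**r, "temp_c": fill_value if r["temp_c"] is None else r["temp_c"]}
        for r in records
    ]

    # 2. Reduce uninteresting data: drop records flagged as test runs.
    reduced = [r for r in corrected if r["status"] == "ok"]

    # 3. Enrich: attach the region from the background lookup table.
    return [
        {**r, "region": background.get(r["station"], "unknown")}
        for r in reduced
    ]


clean = preprocess(raw_records, station_region)
for row in clean:
    print(row)
```

The order of the steps is itself a modeling decision. Here the fill value is computed before the test records are dropped, so those records still influence it; reducing first would give a different result, which is one reason preprocessing calls for subject-matter knowledge rather than a fixed recipe.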