The document discusses several aspects of database design, including:

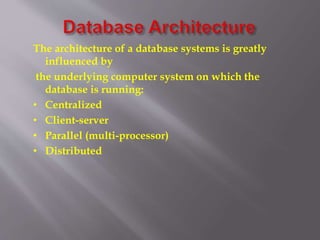

- Logical design, which involves deciding on the database schema and the relation schemas that make it up.

- Physical design, which involves deciding on the physical layout of the database.

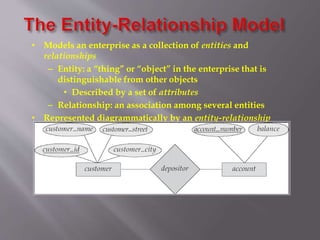

- Entity-relationship modeling, which models an enterprise as a collection of entities and relationships.

- Extensions to the relational model that add object orientation and complex data types.
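
To make the first three items concrete, here is a minimal sketch using Python's standard `sqlite3` module. The `department` and `instructor` tables, their columns, and the `build_university_db` helper are hypothetical names chosen for illustration and do not come from the document. The two entity sets and the "instructor belongs to department" relationship stand in for an entity-relationship model, the `CREATE TABLE` statements for the logical design, and the index for one possible physical design decision.

```python
import sqlite3


def build_university_db() -> sqlite3.Connection:
    """Create an in-memory database for a tiny, hypothetical university schema."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is enabled.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        -- Logical design: one relation schema per entity set.
        CREATE TABLE department (
            dept_name TEXT PRIMARY KEY,
            building  TEXT
        );
        -- The many-to-one relationship from instructor to department
        -- is represented by a foreign key (an illustrative choice).
        CREATE TABLE instructor (
            id        INTEGER PRIMARY KEY,
            name      TEXT NOT NULL,
            dept_name TEXT REFERENCES department(dept_name)
        );
        -- Physical design: an index changes how the data is accessed,
        -- not what the schema means.
        CREATE INDEX idx_instructor_dept ON instructor(dept_name);
        """
    )
    return conn


if __name__ == "__main__":
    conn = build_university_db()
    conn.execute("INSERT INTO department VALUES ('Physics', 'Watson')")
    conn.execute("INSERT INTO instructor VALUES (1, 'Ada', 'Physics')")
    query = (
        "SELECT i.name, d.building "
        "FROM instructor AS i JOIN department AS d USING (dept_name)"
    )
    for row in conn.execute(query):
        print(row)
```

Dropping or adding the index leaves every query result unchanged, which is the sense in which physical design is separate from logical design.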