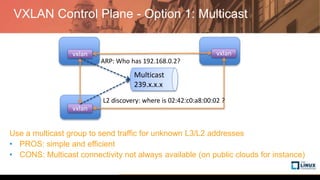

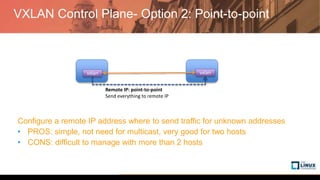

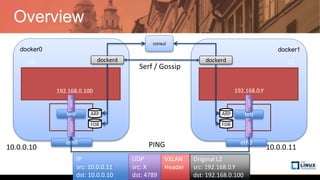

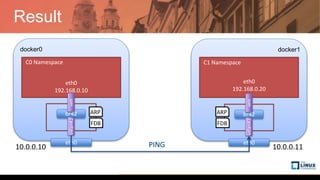

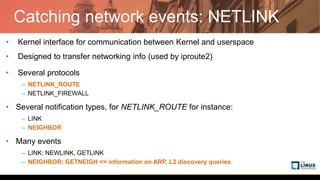

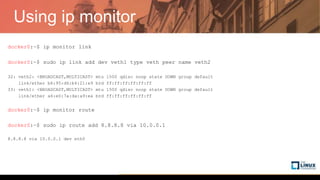

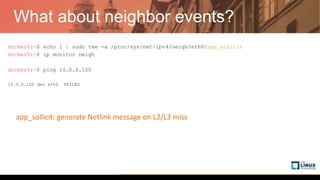

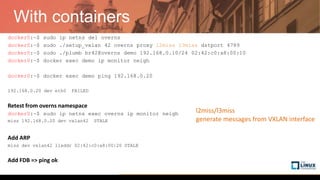

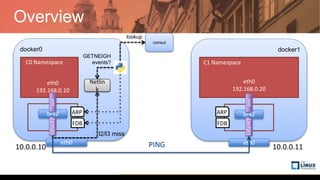

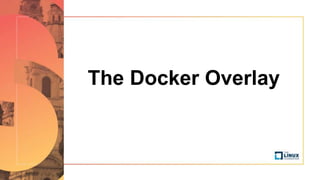

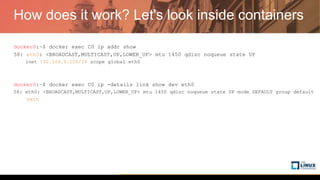

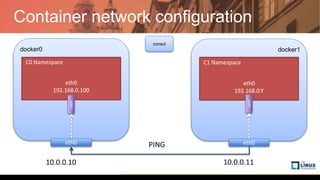

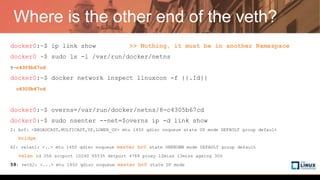

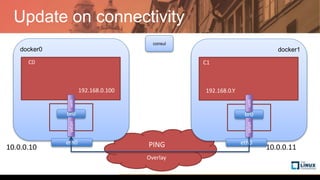

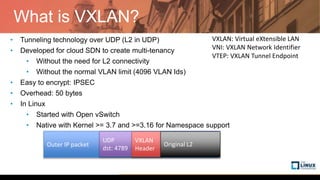

The document gives a detailed overview of creating and managing Docker overlay networks, which are built on VXLAN tunneling. It walks through setting up an overlay network with Docker commands, inspecting the resulting configuration, and verifying connectivity between containers across several network setups. It also discusses options for distributing VXLAN endpoint information and for handling network events.

![Let's have a look

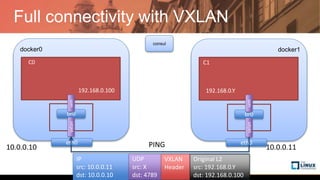

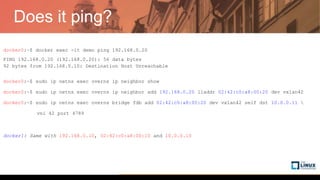

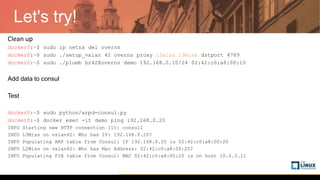

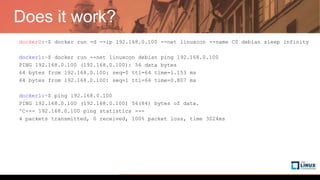

docker0:~$ sudo tcpdump -nn -i eth0 "port 4789"

docker1:~$ docker run -it --rm --net linuxcon debian ping 192.168.0.100

PING 192.168.0.100 (192.168.0.100): 56 data bytes

64 bytes from 192.168.0.100: seq=0 ttl=64 time=1.153 ms

64 bytes from 192.168.0.100: seq=1 ttl=64 time=0.807 ms

docker0:~$

13:35:12.796941 IP 10.0.0.11.60916 > 10.0.0.10.4789: VXLAN, flags [I] (0x08), vni 256

IP 192.168.0.2 > 192.168.0.100: ICMP echo request, id 1, seq 0, length 64

13:35:12.797035 IP 10.0.0.10.54953 > 10.0.0.11.4789: VXLAN, flags [I] (0x08), vni 256

IP 192.168.0.100 > 192.168.0.2: ICMP echo reply, id 1, seq 0, length 64](https://image.slidesharecdn.com/linuxcon-eu-2017-171025130617/85/Deep-Dive-in-Docker-Overlay-Networks-14-320.jpg)
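
The capture above shows each ICMP packet wrapped in a UDP datagram to port 4789 with `flags [I] (0x08)` and `vni 256`. As a rough illustration of what tcpdump is decoding there, the sketch below packs and unpacks the 8-byte VXLAN header as laid out in RFC 7348 (one flags byte, 24 reserved bits, a 24-bit VNI, 8 reserved bits). The function names `build_vxlan_header` and `parse_vxlan_header` are hypothetical helpers written for this example; they are not part of Docker or any library, and the sketch covers only the header, not the UDP/IP encapsulation around it.

```python
import struct

VXLAN_UDP_PORT = 4789        # UDP port used in the tcpdump filter above
VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def build_vxlan_header(vni: int) -> bytes:
    """Pack an 8-byte VXLAN header (hypothetical helper for illustration)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags in the top byte, 24 reserved bits set to zero.
    # Word 2: VNI in the top 24 bits, 8 reserved bits set to zero.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vxlan_header(data: bytes) -> dict:
    """Unpack the first 8 bytes of a UDP payload as a VXLAN header."""
    if len(data) < 8:
        raise ValueError("VXLAN header is 8 bytes long")
    word1, word2 = struct.unpack("!II", data[:8])
    flags = word1 >> 24
    return {
        "flags": flags,
        "vni_valid": bool(flags & VXLAN_FLAG_VNI_VALID),
        "vni": word2 >> 8,
    }


if __name__ == "__main__":
    header = build_vxlan_header(256)  # VNI 256, as seen in the capture
    print(header.hex())
    print(parse_vxlan_header(header))
```

In a real packet, the bytes that follow this header are the complete inner Ethernet frame, which is why tcpdump can print the container-level `192.168.0.2 > 192.168.0.100` ICMP exchange underneath each outer `10.0.0.x` line.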