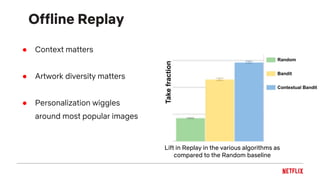

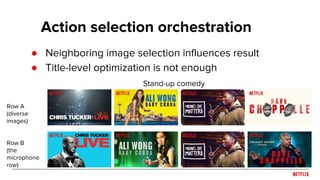

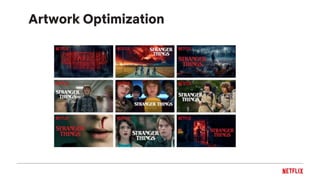

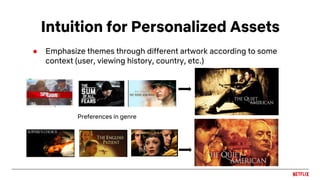

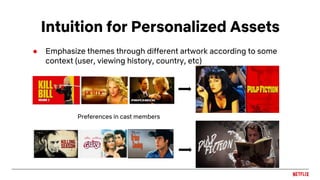

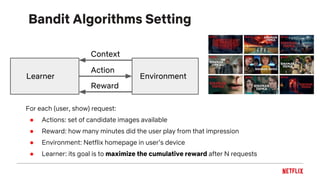

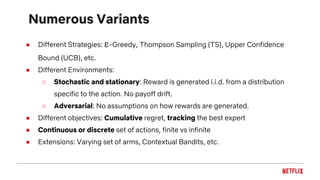

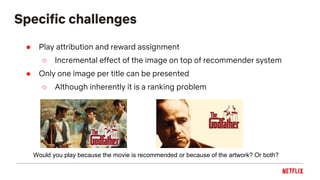

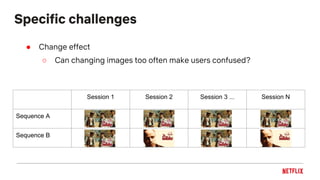

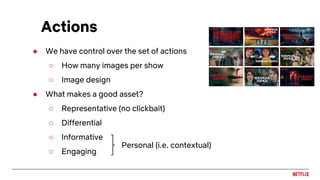

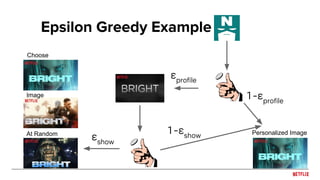

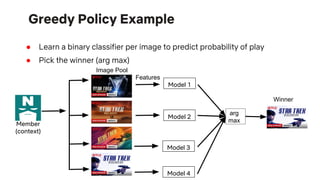

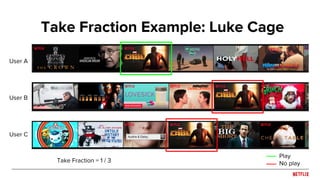

This deck describes how Netflix personalizes the artwork shown for each title to help its 130 million members worldwide decide what to watch. It explains how algorithms such as bandits learn which image works best for a given member from signals like viewing history and location. It also covers the main challenges: attributing a play to a specific image, assigning rewards, and keeping artwork diverse and representative of the title so that members stay interested.

![Unbiased offline evaluation from explore data. Offline metric: Replay [Li et al., 2010]. Six users (User 1 to User 6) with rows for Random Assignment, Play?, and Model Assignment. Offline Take Fraction = 2/3.](https://image.slidesharecdn.com/fernandoamatnetflixartworks-181019081420/85/Artworks-personalization-on-Netflix-17-320.jpg)
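
The replay metric on the slide above can be sketched in a few lines of Python. This is a minimal illustration, not Netflix's implementation: the function name `replay_take_fraction`, the log layout, and the six-user data are all hypothetical, with the data chosen only so that the result matches the 2/3 shown on the slide. The idea, following Li et al. (2010), is to keep only the logged impressions where the randomly assigned image happens to equal the image the model would have chosen, and to compute the fraction of plays among those matches.

```python
from typing import Callable, Hashable, Iterable, Optional, Tuple

# One logged impression from the explore (random assignment) data:
# (user id, image that was randomly shown, 1 if the user played else 0).
LoggedEvent = Tuple[Hashable, str, int]


def replay_take_fraction(
    logged_events: Iterable[LoggedEvent],
    policy: Callable[[Hashable], str],
) -> Optional[float]:
    """Replay estimate of take fraction for `policy` on randomly assigned logs.

    Only impressions where the policy's choice equals the logged random
    assignment are counted. Returns None when there are no matches.
    """
    matches = 0
    plays = 0
    for user, shown_image, played in logged_events:
        if policy(user) == shown_image:
            matches += 1
            plays += played
    if matches == 0:
        return None
    return plays / matches


# Hypothetical explore log for six users (illustrative values only).
logged = [
    ("user1", "A", 1),
    ("user2", "B", 0),
    ("user3", "C", 1),
    ("user4", "A", 0),
    ("user5", "B", 1),
    ("user6", "C", 0),
]

# Hypothetical model assignments: the image the model would show each user.
model_choice = {
    "user1": "A",
    "user2": "A",
    "user3": "B",
    "user4": "A",
    "user5": "B",
    "user6": "A",
}

# Users 1, 4, and 5 match the random assignment; users 1 and 5 played.
offline_take_fraction = replay_take_fraction(logged, model_choice.get)
print(offline_take_fraction)
```

Because the logged images were assigned uniformly at random, the matched subset is an unbiased sample of what the model would have shown, which is why the estimate needs no importance weights. The cost is that unmatched impressions are discarded, so the estimate becomes noisy when few impressions match.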