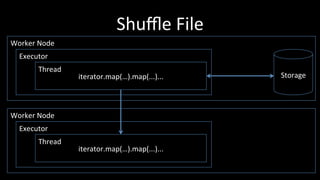

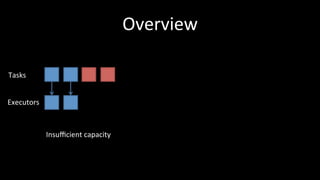

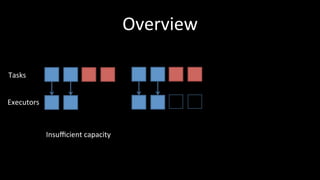

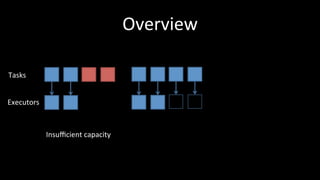

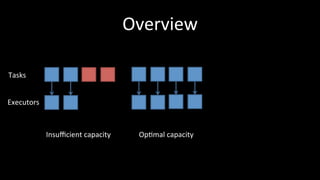

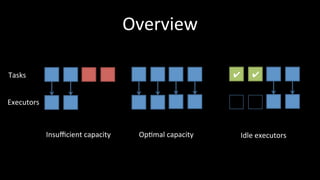

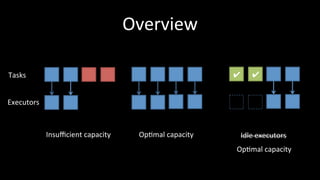

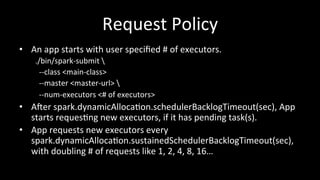

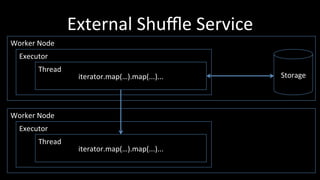

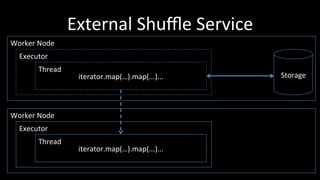

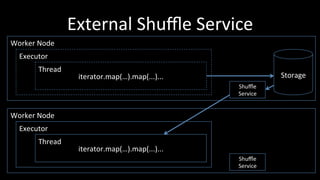

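Dynamic resource allocation in Apache Spark lets an application grow and shrink its set of executors to match its workload. When an application has pending tasks waiting to be scheduled, Spark requests additional executors so the backlog can be worked off in parallel. When an executor has sat idle for a configured period, Spark removes it and releases its resources to other applications on the cluster. The allocation policy decides when to request or remove executors based on factors such as how long tasks have been pending and how long an executor has been idle. Dynamic allocation also relies on an external shuffle service, which serves shuffle files independently of the executors that wrote them. This lets an executor be removed without losing shuffle output that later stages still need to read.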
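The request and removal policies can be illustrated with a small simulation. This is a simplified, hypothetical model, not Spark's own scheduler code. It assumes that executors are requested in rounds whose size doubles (1, 2, 4, ...), which is how Spark ramps up while a task backlog persists past `spark.dynamicAllocation.schedulerBacklogTimeout` and `spark.dynamicAllocation.sustainedSchedulerBacklogTimeout`. It also assumes that any executor idle for at least `spark.dynamicAllocation.executorIdleTimeout` is removed, bounded by `minExecutors` and `maxExecutors`. The function names and the `tasks_per_executor` parameter (roughly `spark.executor.cores / spark.task.cpus`) are illustrative only.

```python
import math
from typing import Dict, List


def max_executors_needed(pending_tasks: int, running_tasks: int,
                         tasks_per_executor: int) -> int:
    """Hypothetical helper: executors needed to run all pending and running tasks at once."""
    return math.ceil((pending_tasks + running_tasks) / tasks_per_executor)


def ramp_up(current: int, needed: int, max_executors: int) -> List[int]:
    """Hypothetical helper: executor target after each backlog round.

    The request size starts at 1 and doubles every round (1, 2, 4, ...),
    capped at what is still needed and at max_executors.
    """
    cap = min(needed, max_executors)
    targets = []
    to_add = 1
    while current < cap:
        current = min(cap, current + to_add)
        targets.append(current)
        to_add *= 2
    return targets


def executors_to_remove(idle_since: Dict[str, float], now: float,
                        idle_timeout: float, current: int,
                        min_executors: int) -> List[str]:
    """Hypothetical helper: IDs of executors idle for at least idle_timeout seconds,
    never shrinking the application below min_executors."""
    expired = sorted(e for e, t in idle_since.items() if now - t >= idle_timeout)
    removable = max(0, current - min_executors)
    return expired[:removable]


if __name__ == "__main__":
    # 60 tasks at 4 tasks per executor -> 15 executors would be needed.
    needed = max_executors_needed(pending_tasks=50, running_tasks=10,
                                  tasks_per_executor=4)
    print(needed)  # 15
    # Starting from 2 executors, ramp toward 15 but stop at maxExecutors = 10.
    print(ramp_up(current=2, needed=needed, max_executors=10))  # [3, 5, 9, 10]
    # Idle start times in seconds; at t=120 with a 60 s timeout, two have expired.
    idle = {"exec-1": 0.0, "exec-2": 30.0, "exec-3": 100.0}
    print(executors_to_remove(idle, now=120.0, idle_timeout=60.0,
                              current=10, min_executors=2))  # ['exec-1', 'exec-2']
```

The exponential ramp-up reflects a design trade-off. Starting with small requests avoids over-allocating for a brief burst of tasks, and doubling lets a sustained backlog reach the needed executor count within a few rounds.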