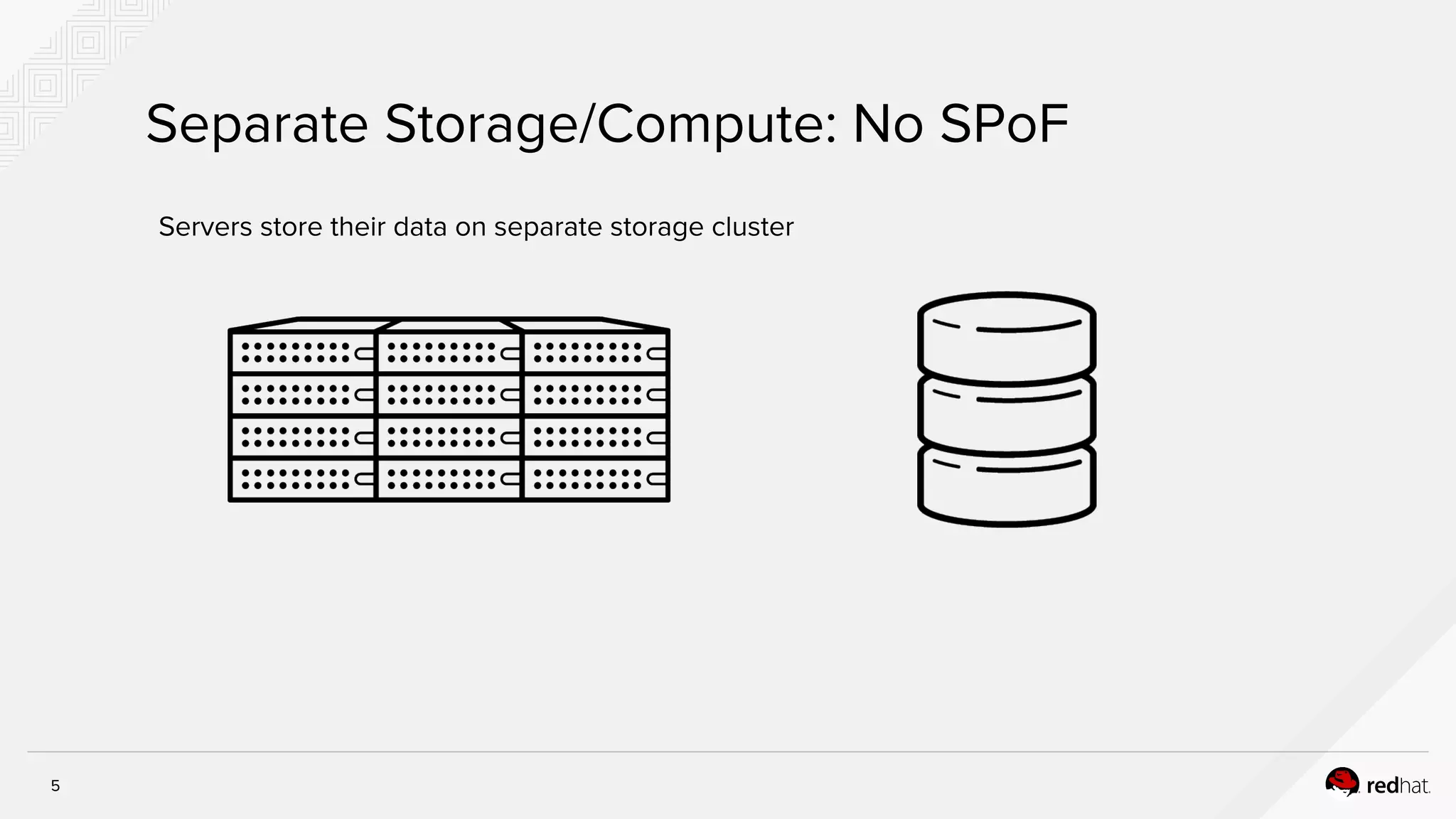

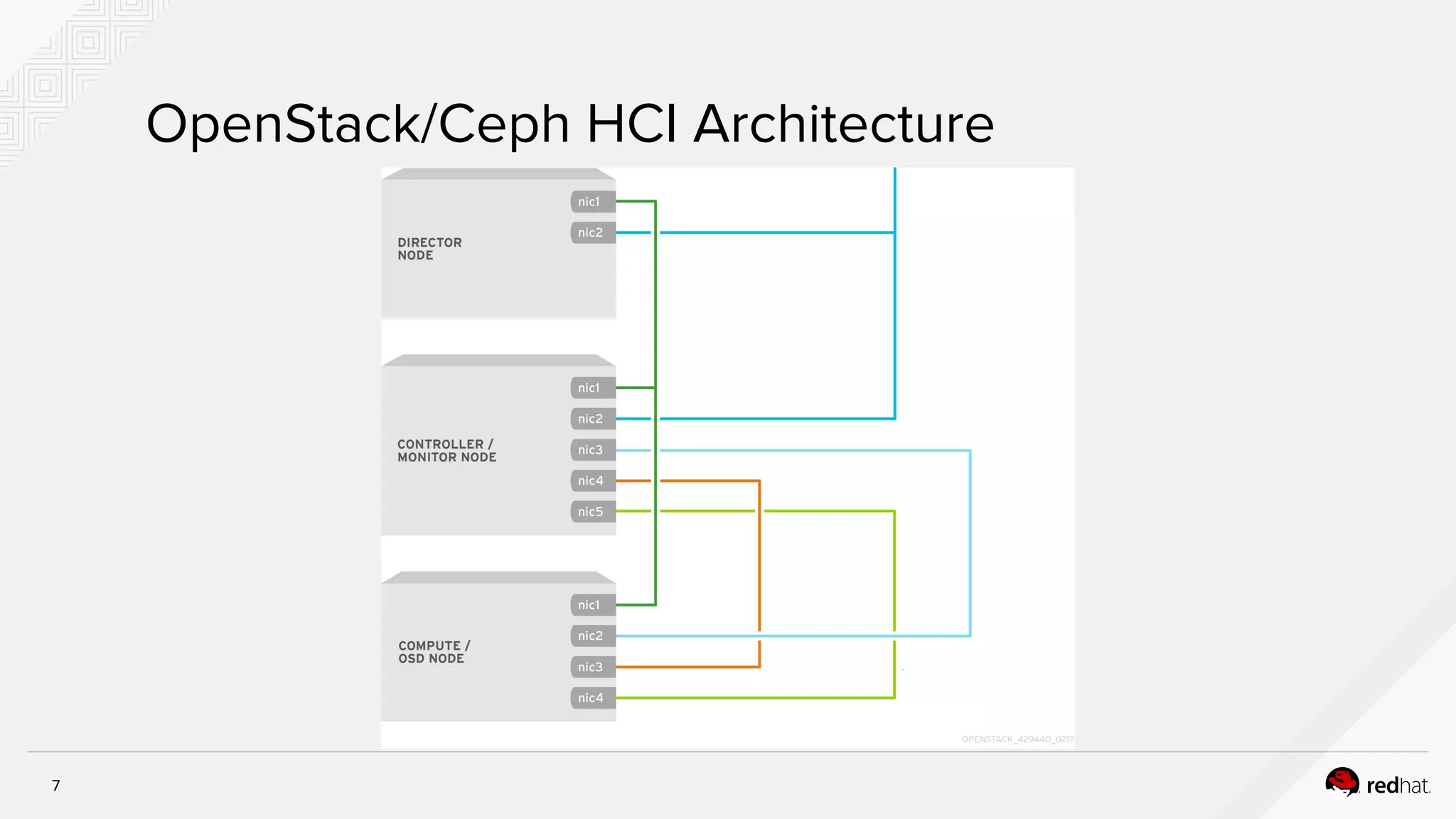

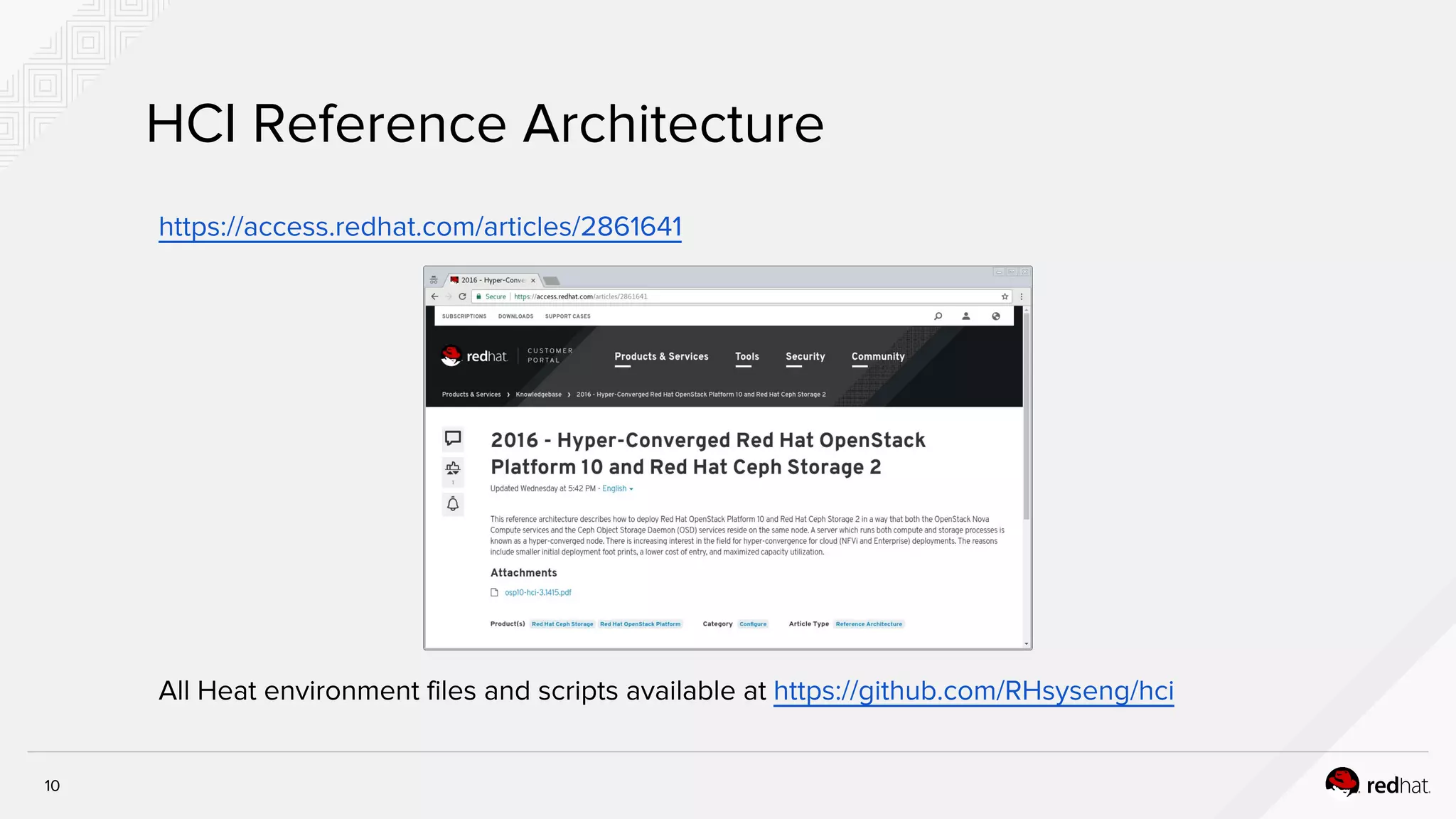

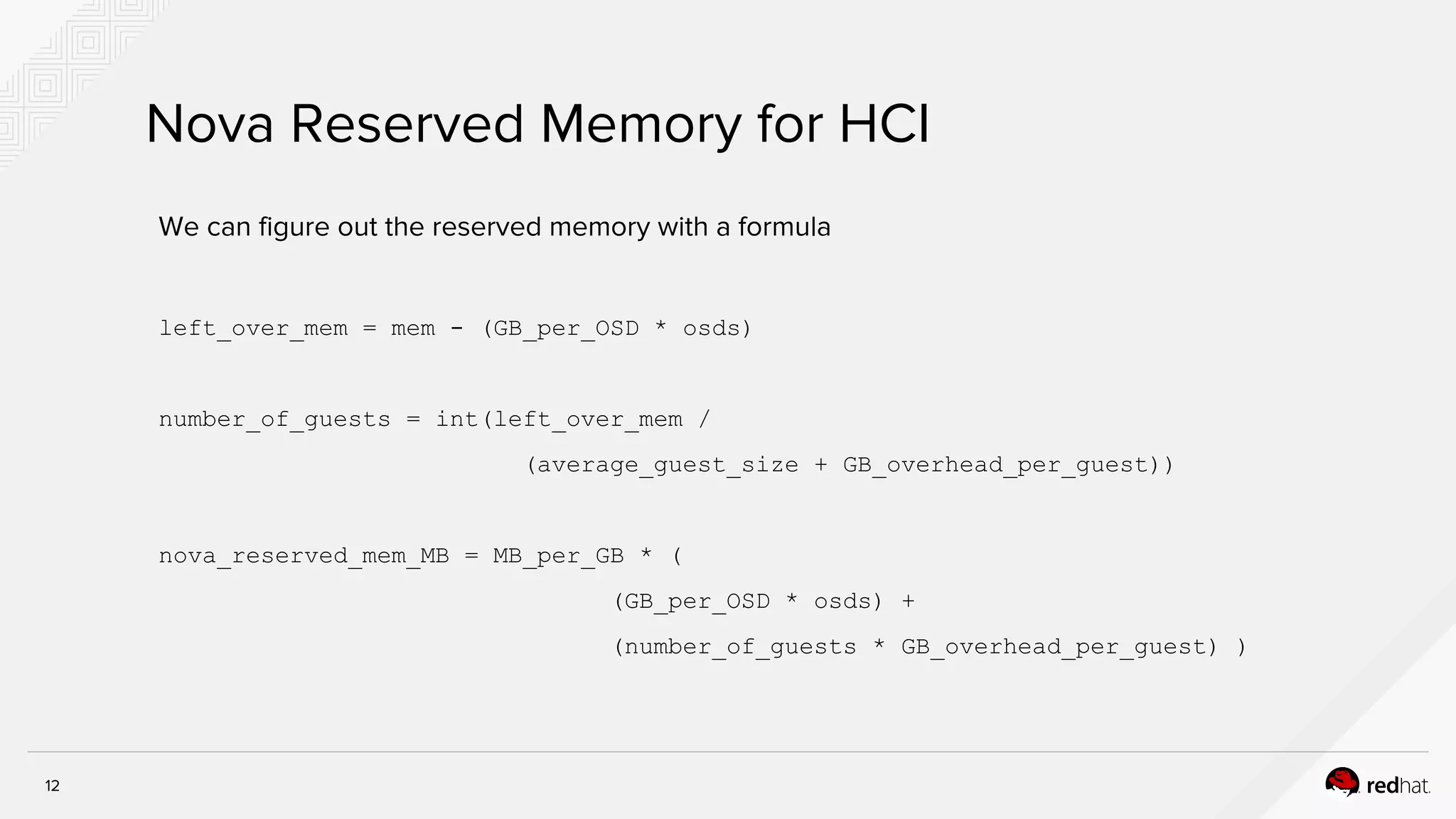

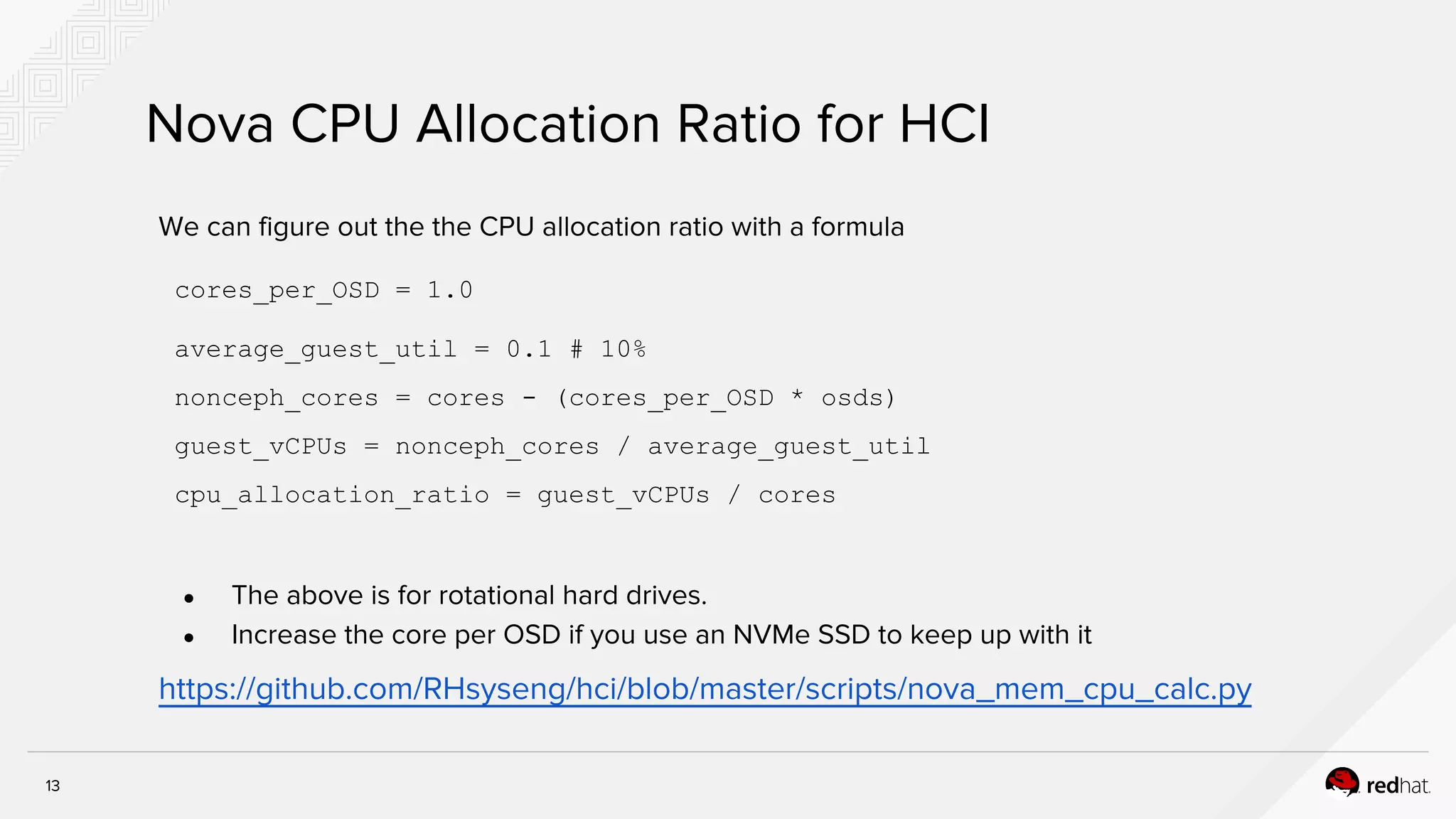

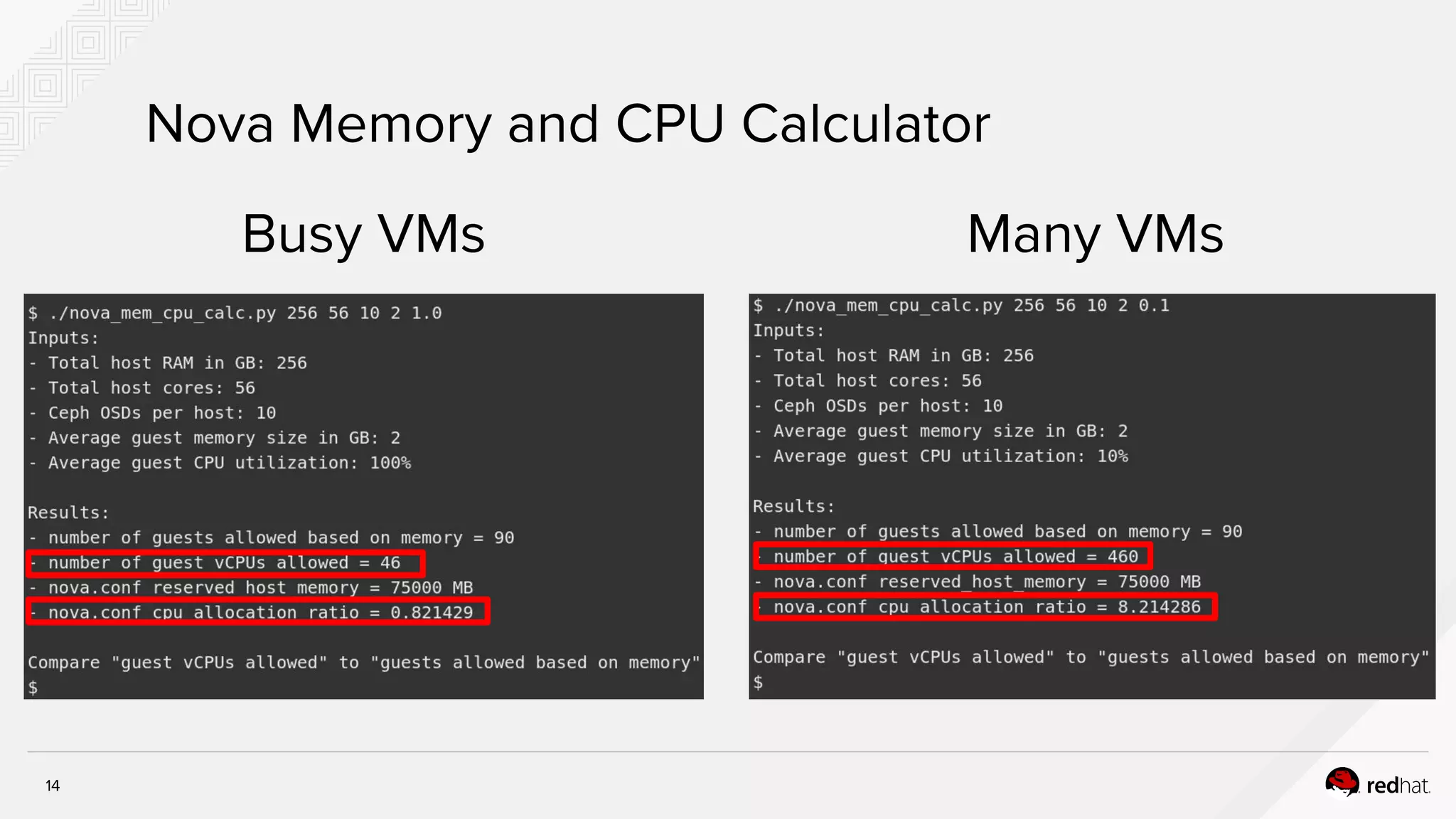

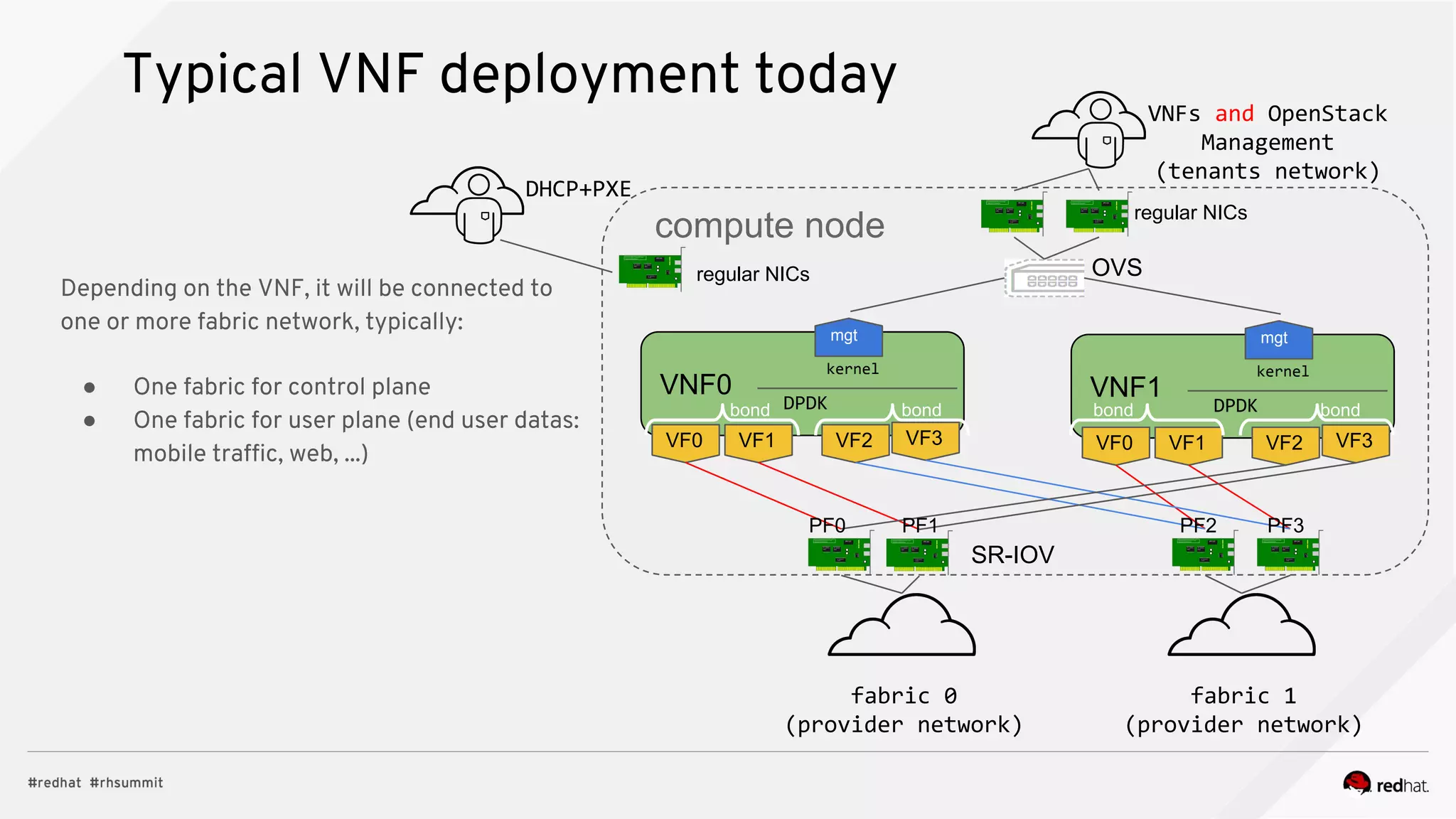

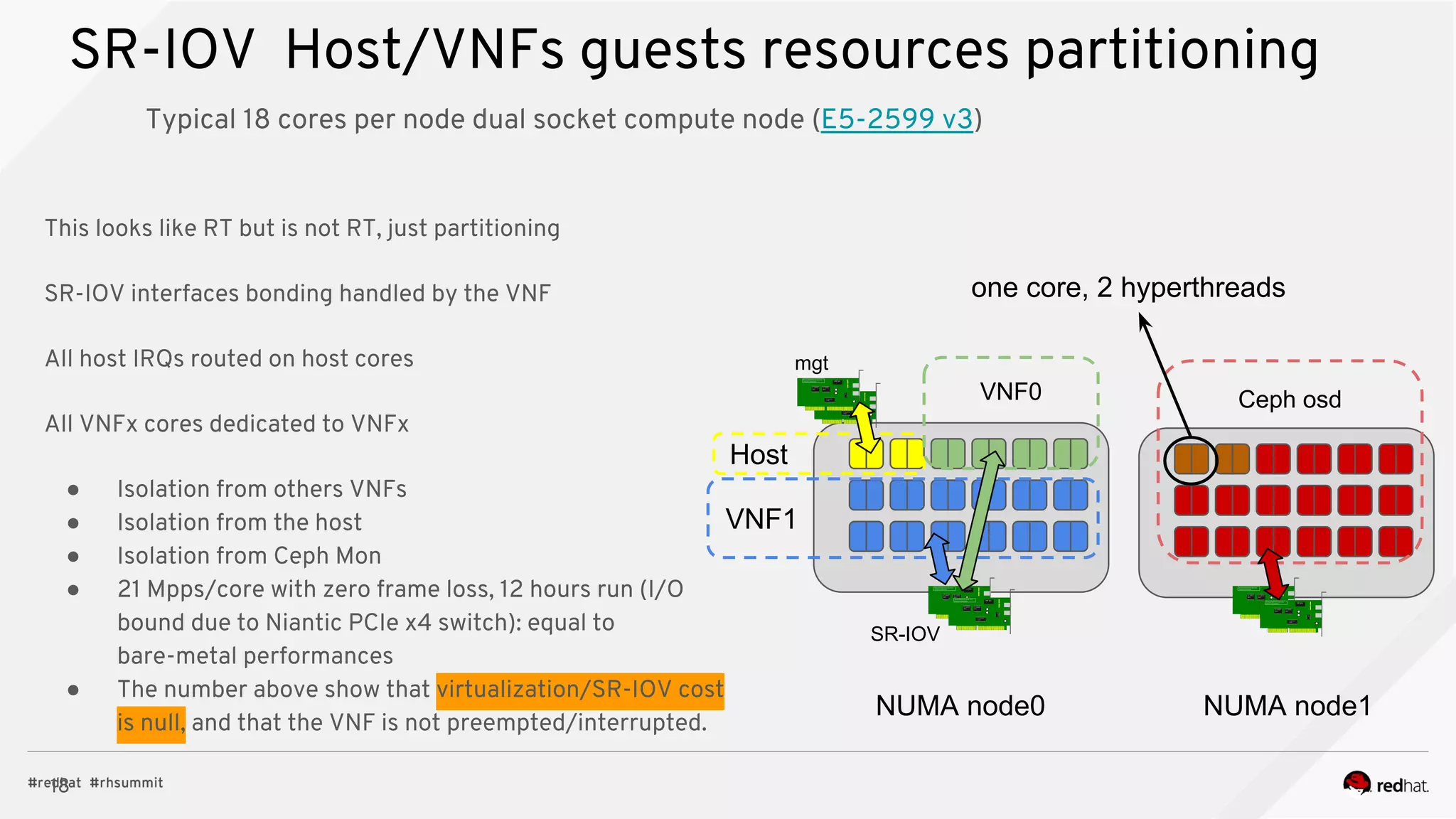

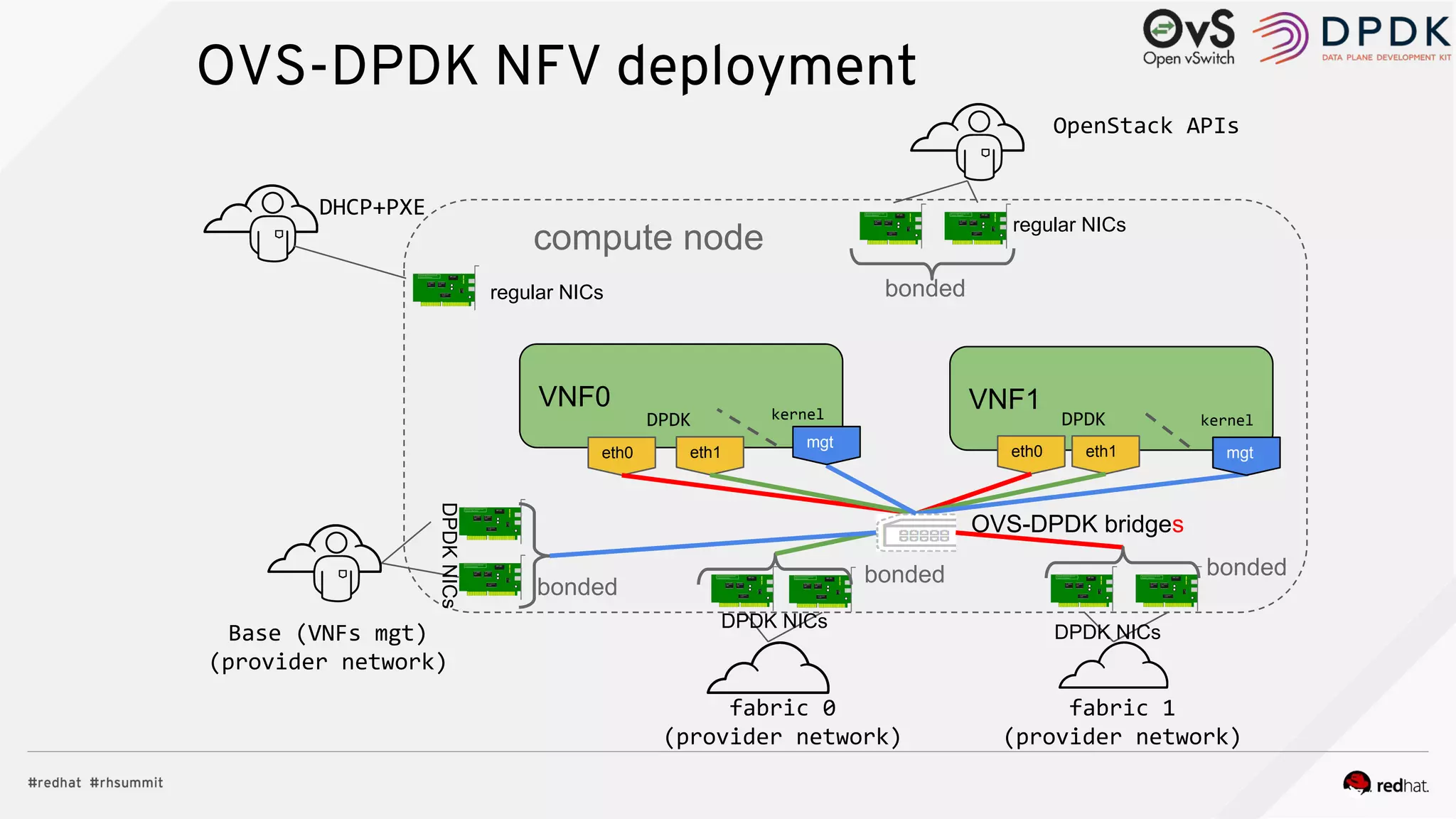

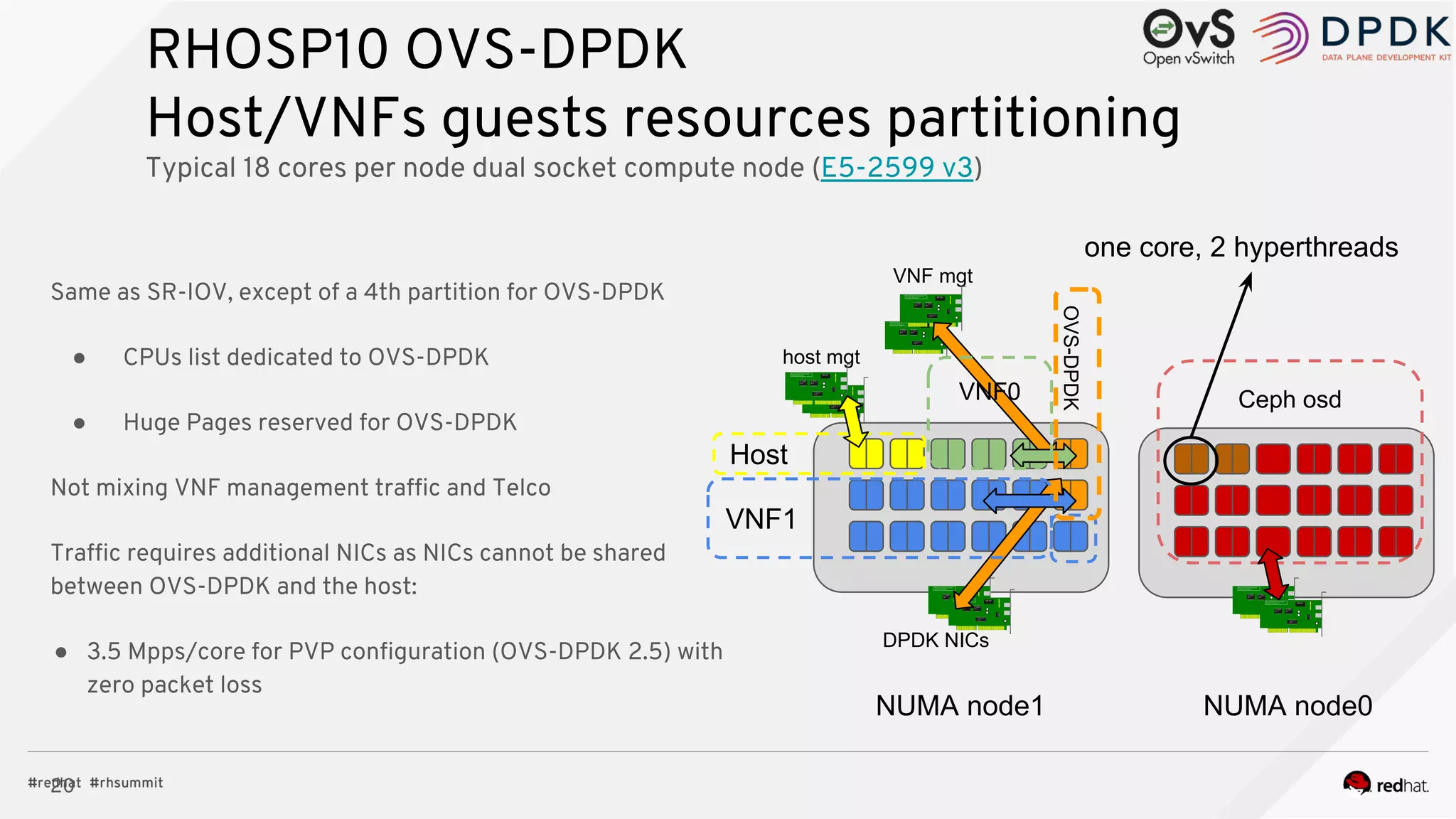

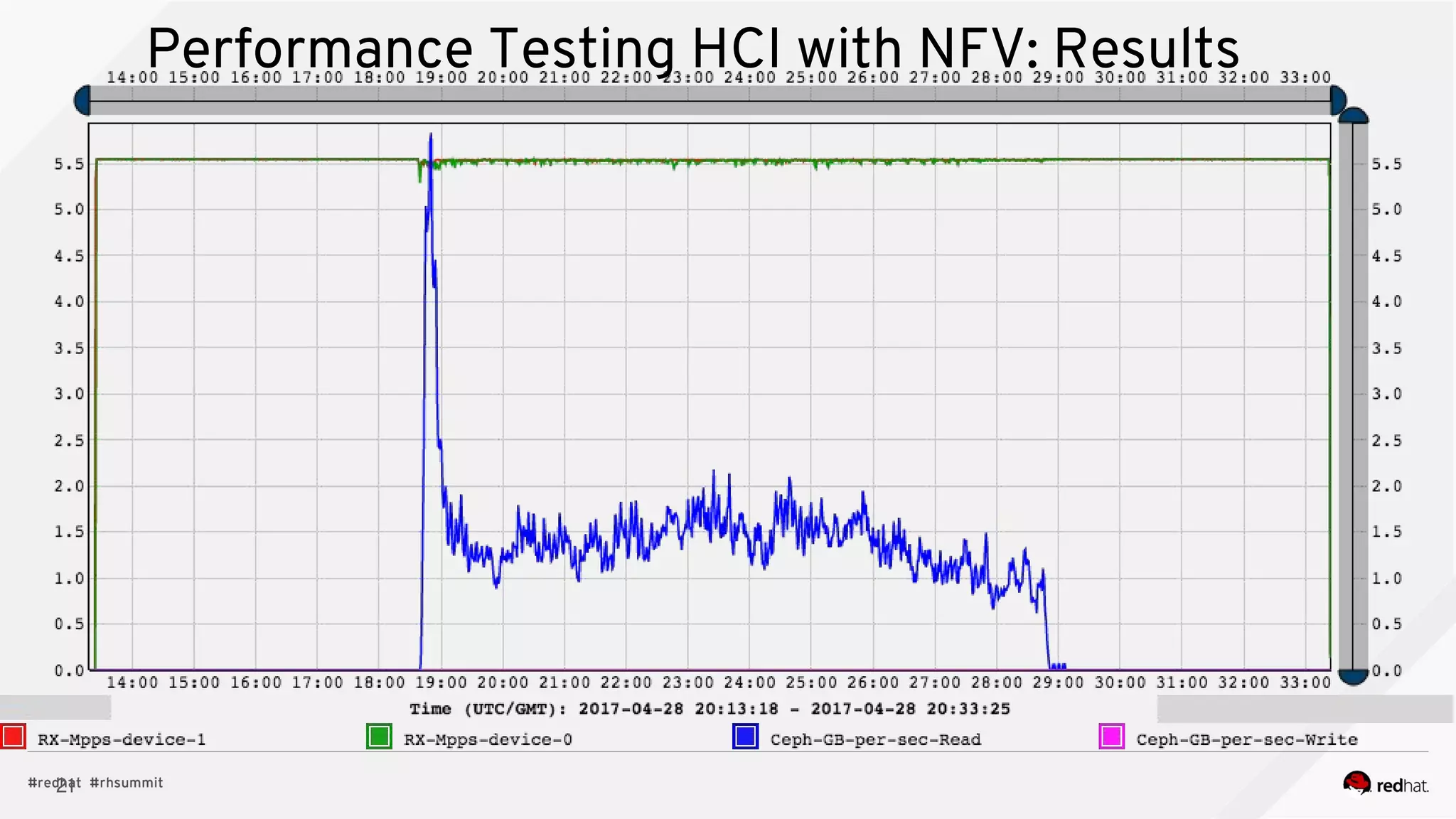

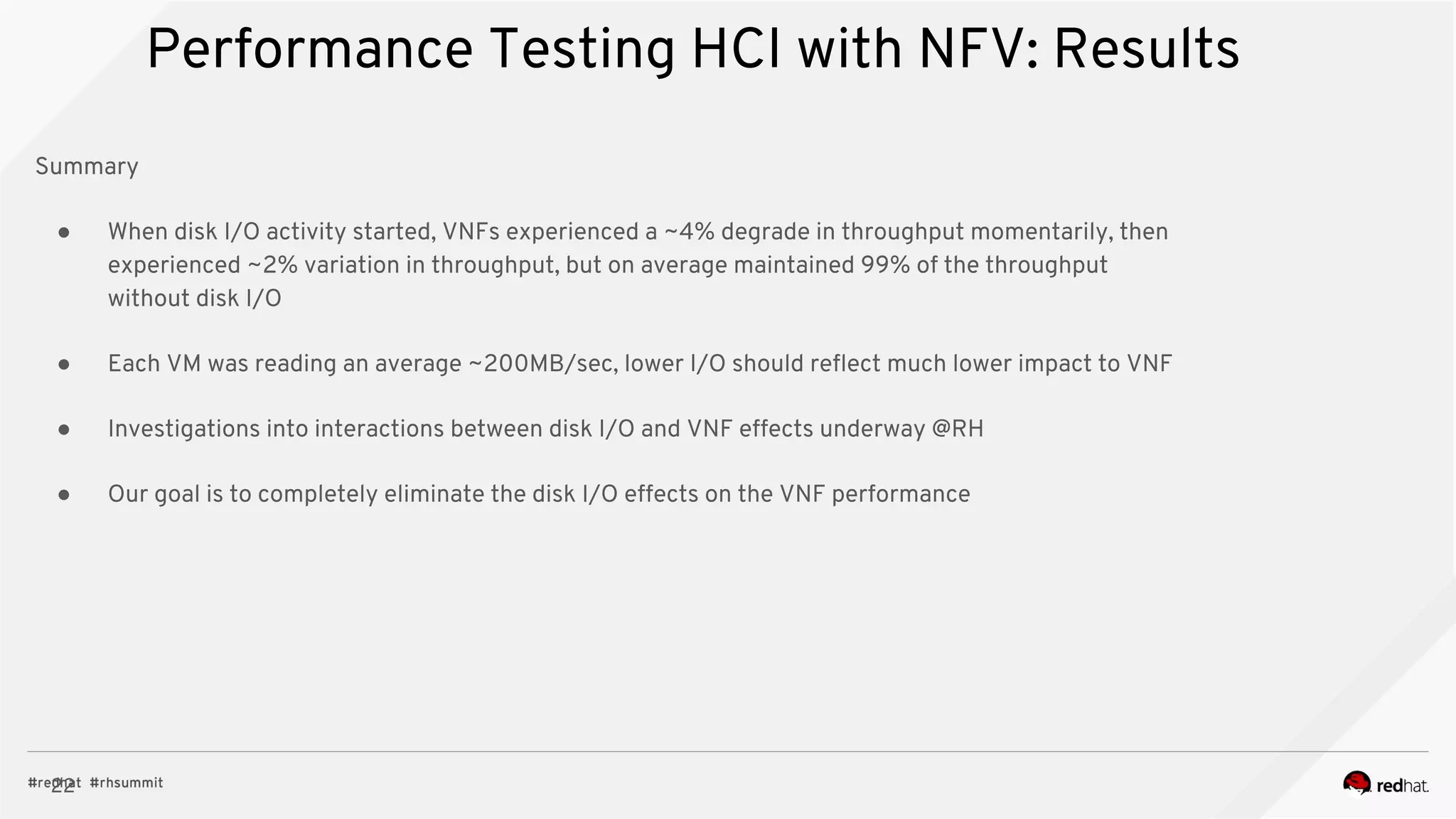

The document discusses hyper-converged infrastructure (HCI) and its integration with OpenStack and Ceph for improved resource management and efficiency. It highlights the advantages of HCI, including a reduced footprint, better hardware standardization, and isolation for network functions virtualization (NFV). Performance testing results indicate that virtual network functions (VNFs) maintained high throughput with minimal impact from disk I/O activity.