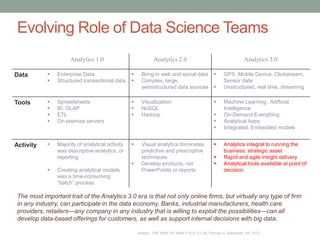

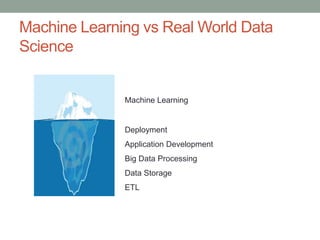

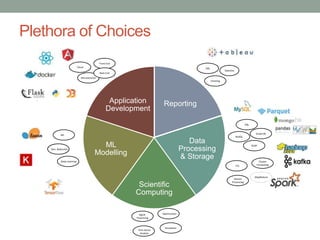

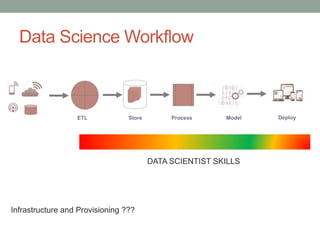

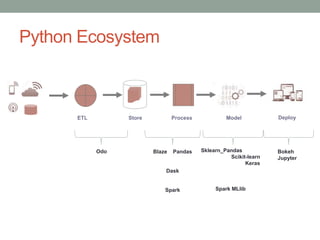

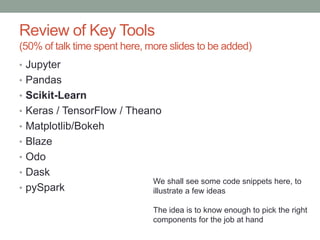

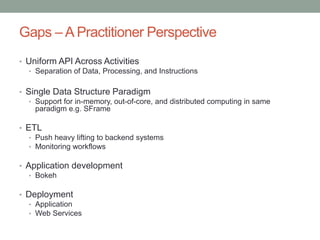

This document provides an overview of the Python ecosystem for data science. It describes how tools in the ecosystem support data science tasks such as reporting, data processing, scientific computing, machine learning modeling, and application development. It outlines common workflows for small, medium, and big data use cases. It also reviews popular Python tools, identifies strengths of the current ecosystem, and discusses some of its gaps from a practitioner's perspective.