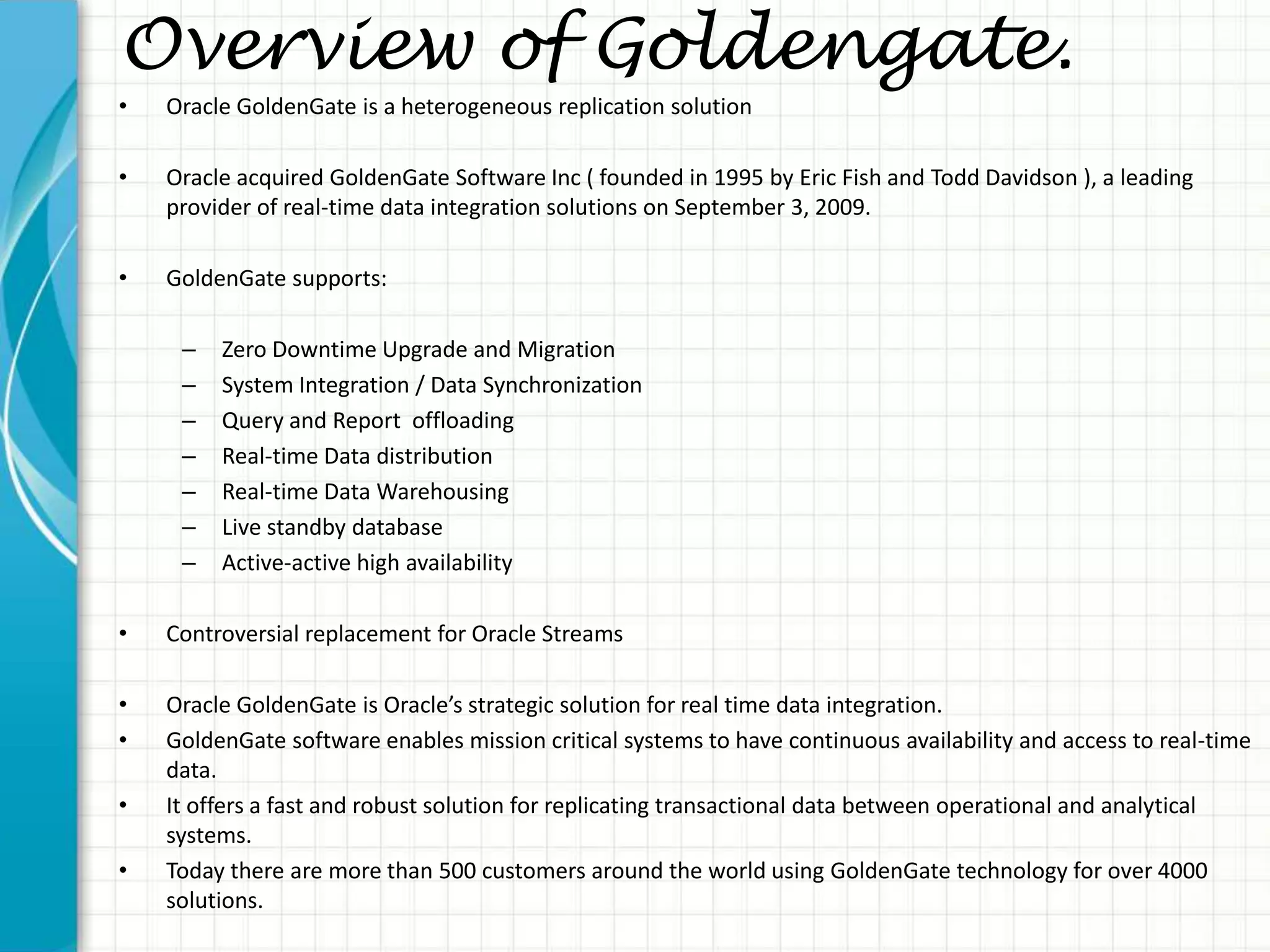

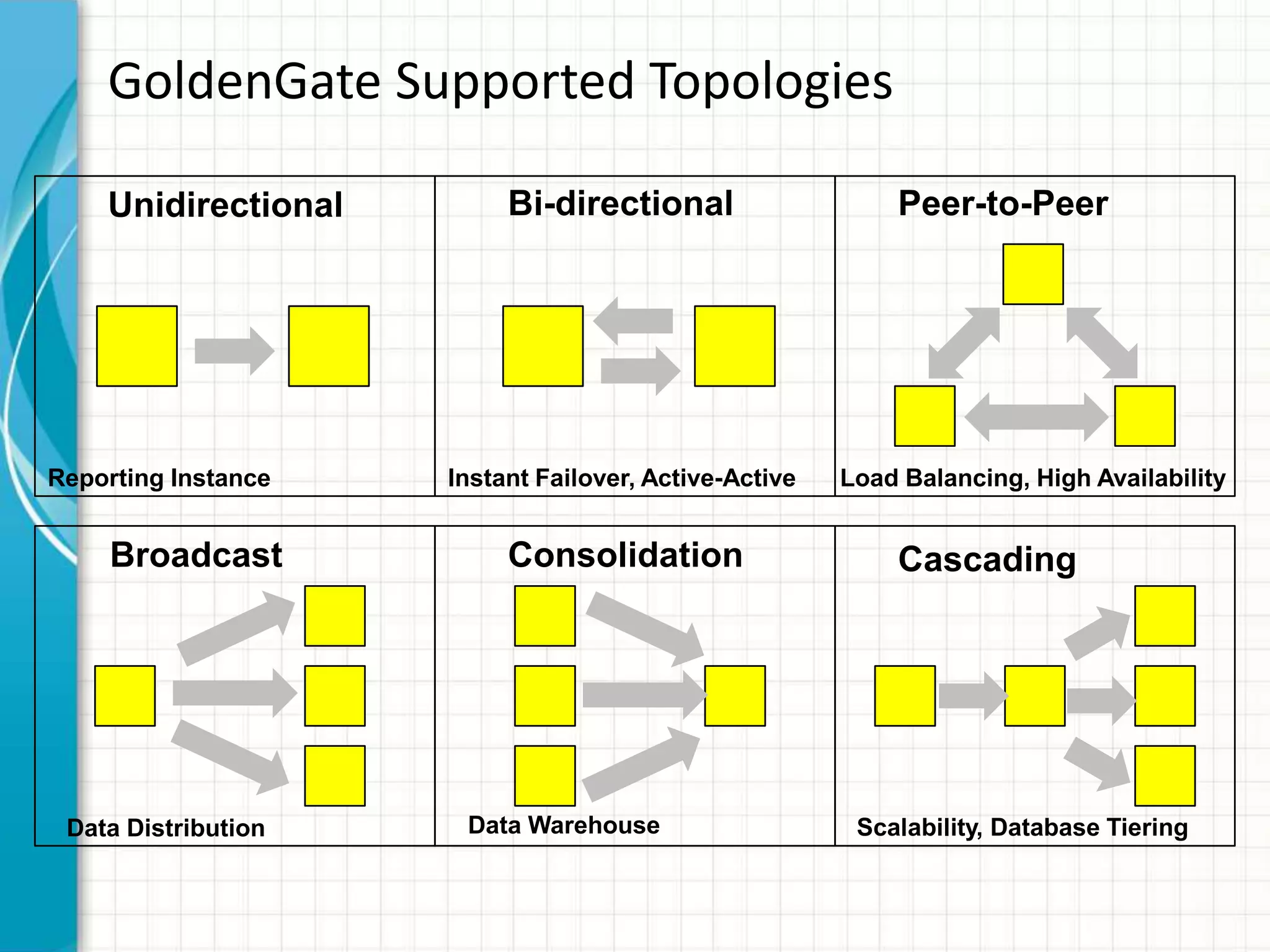

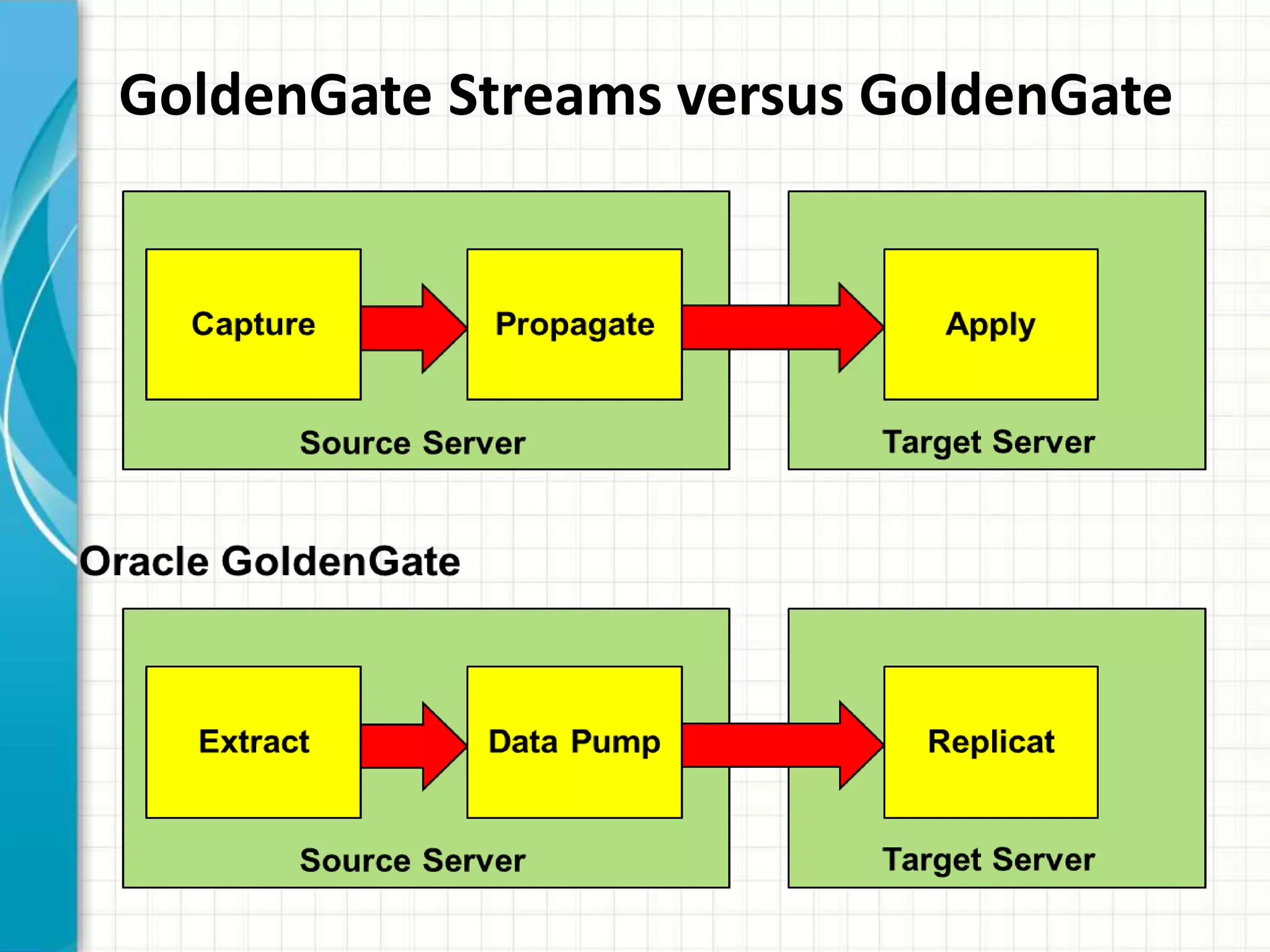

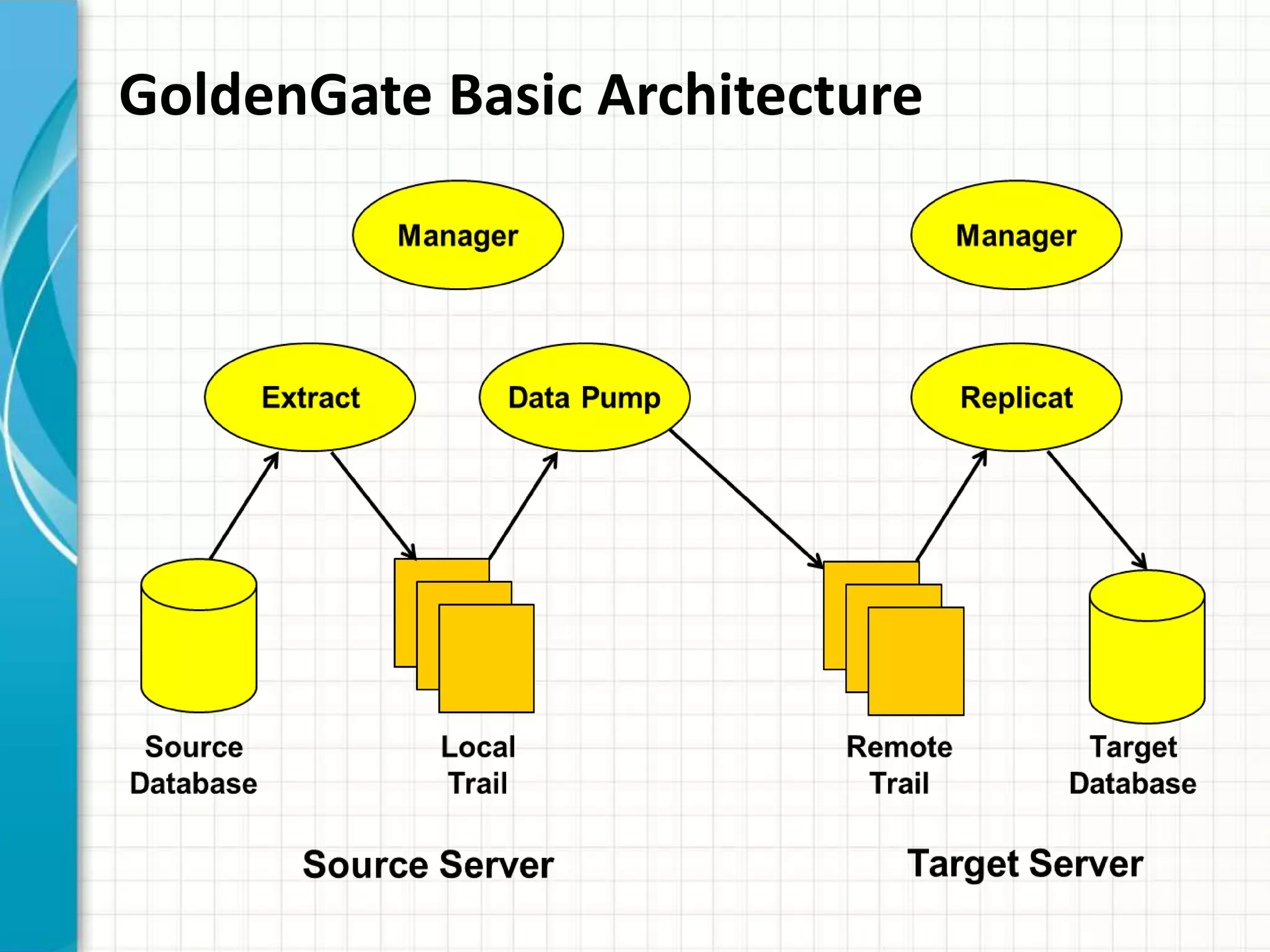

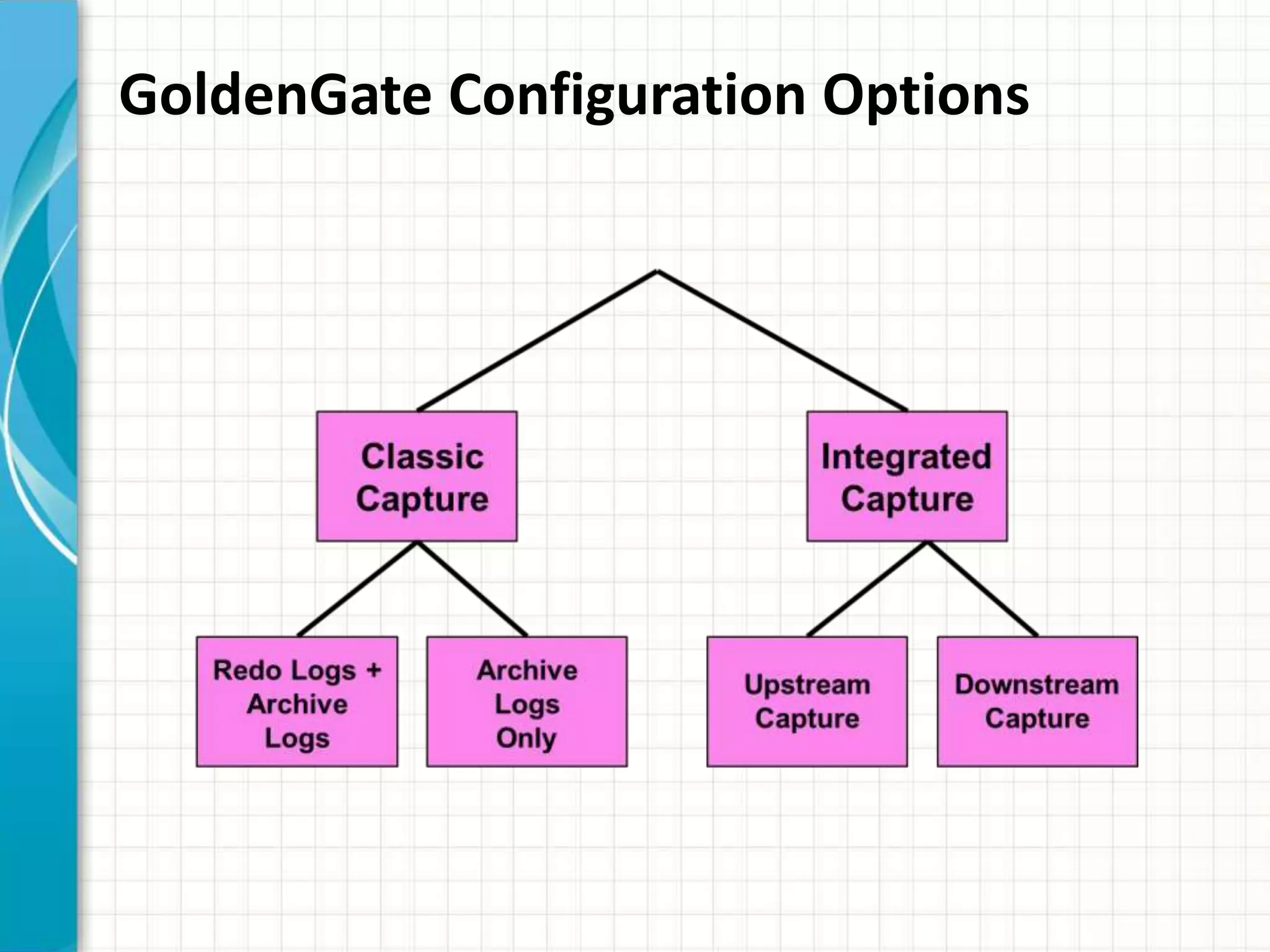

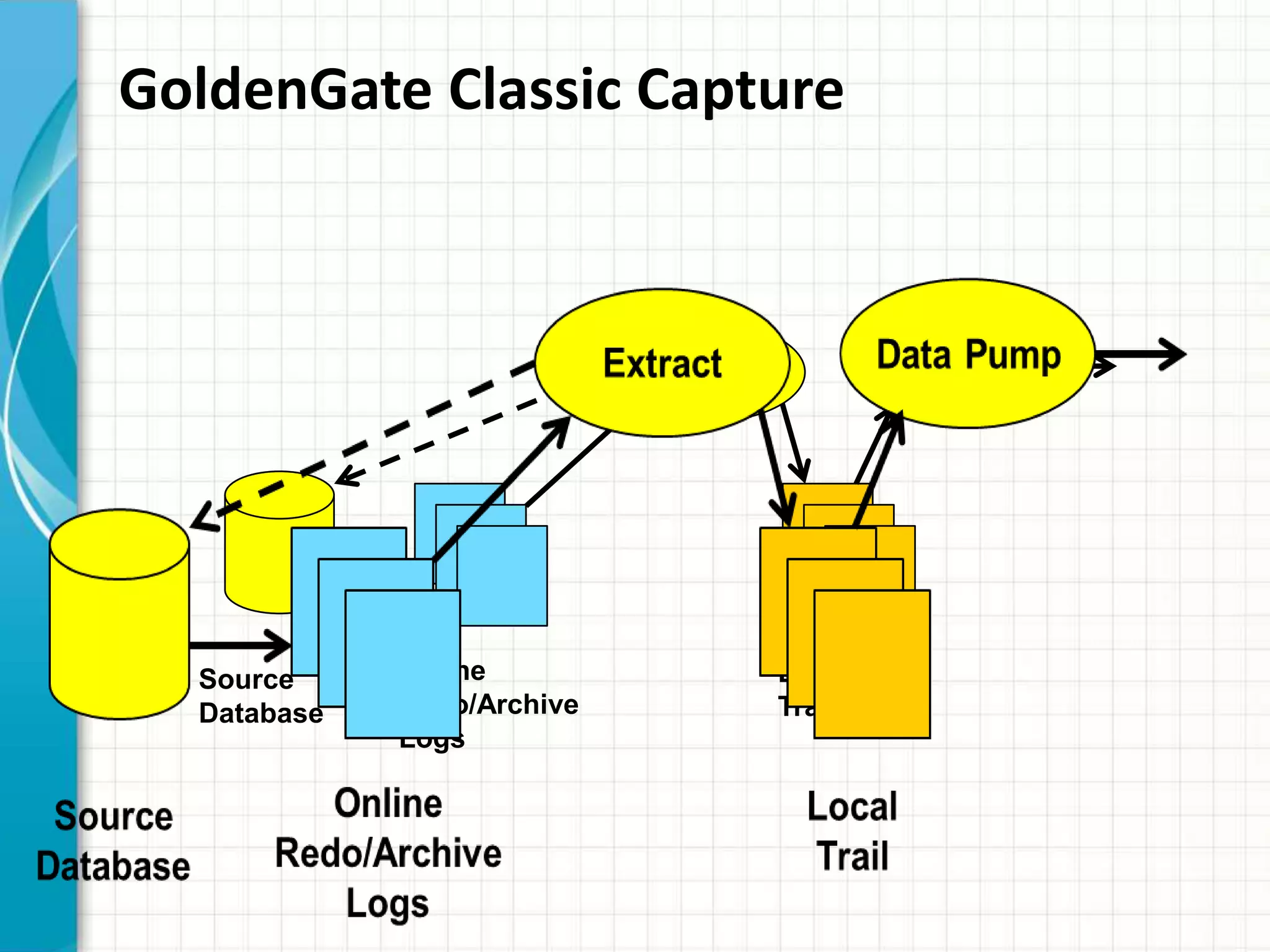

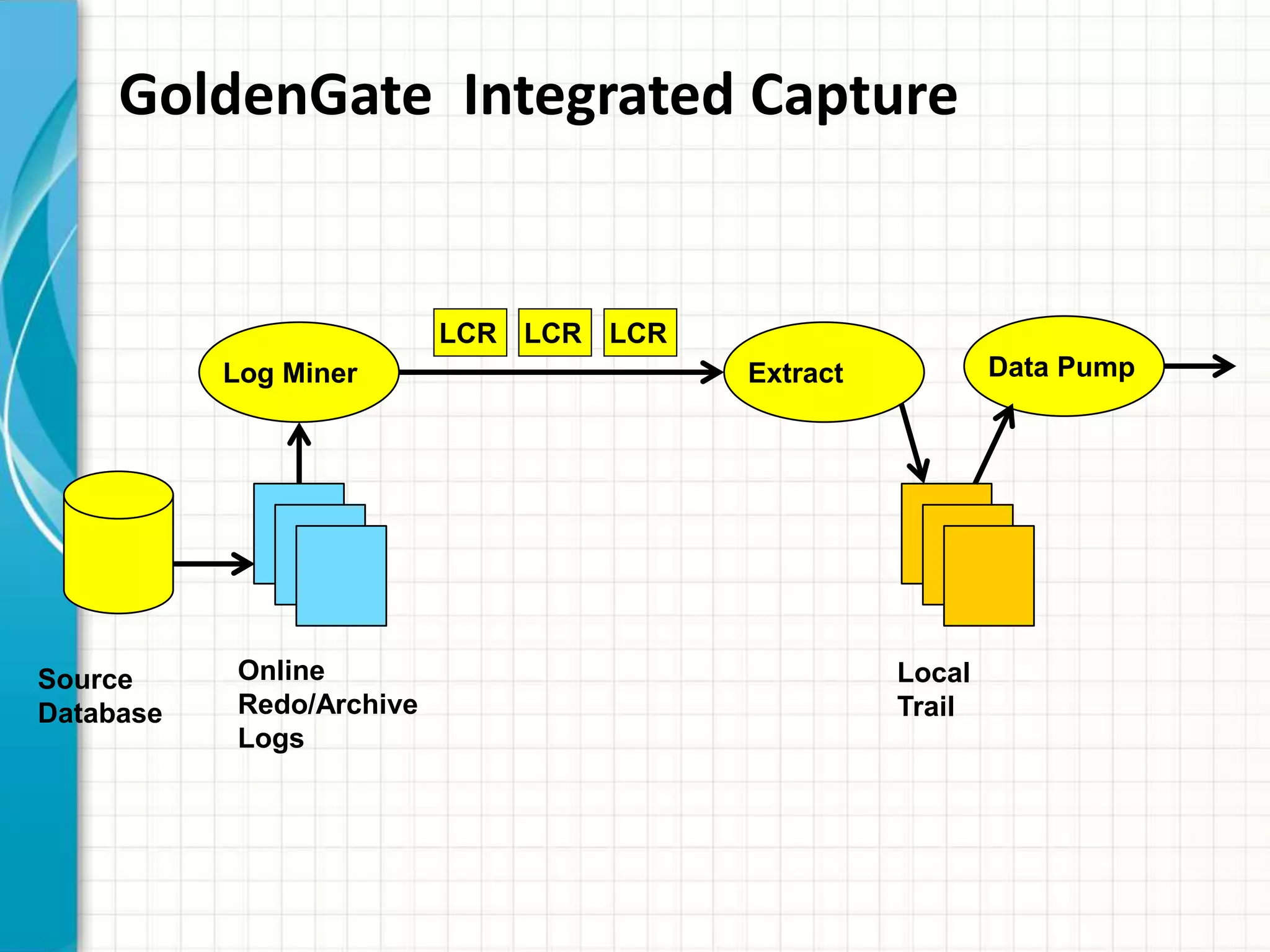

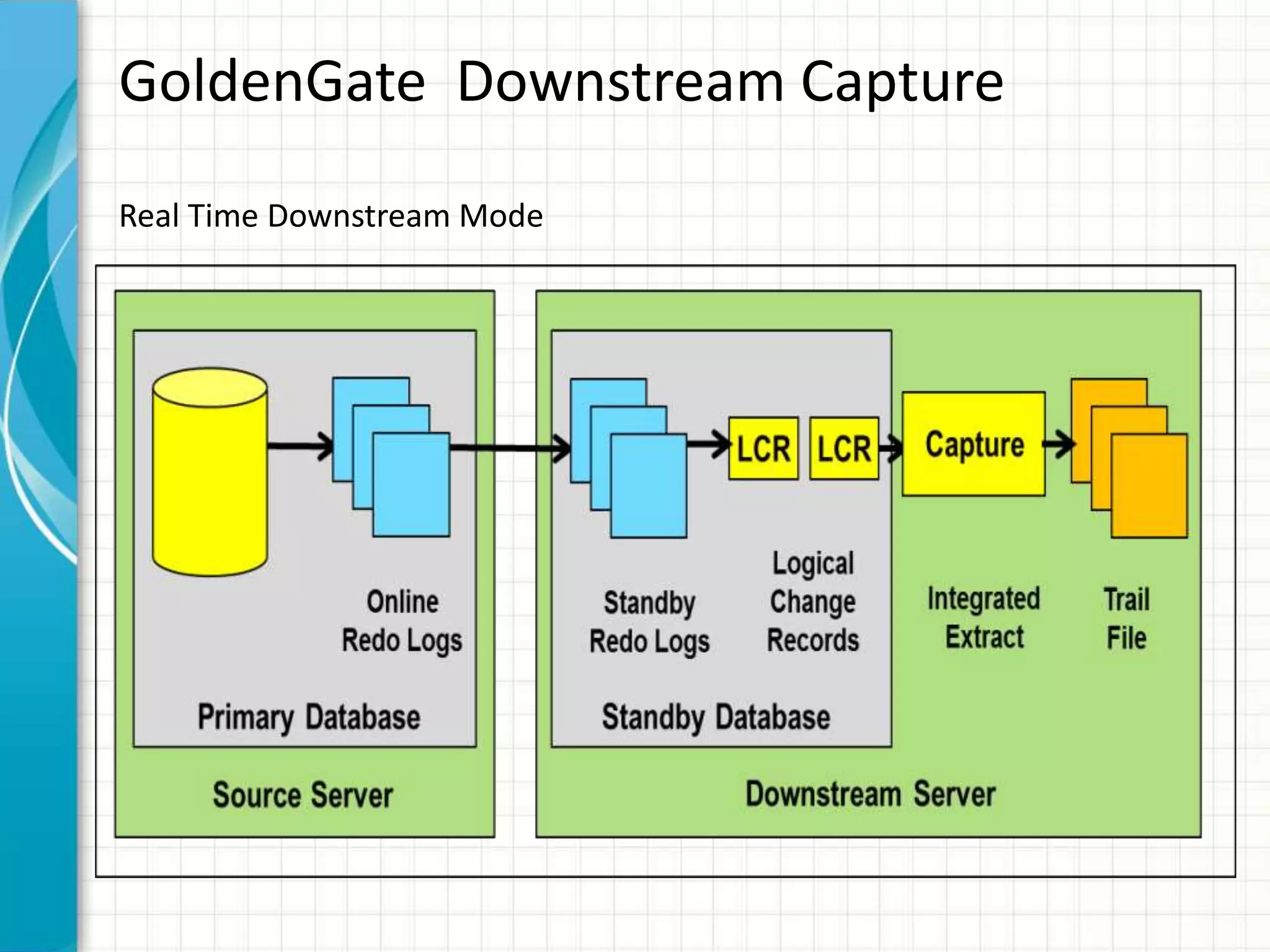

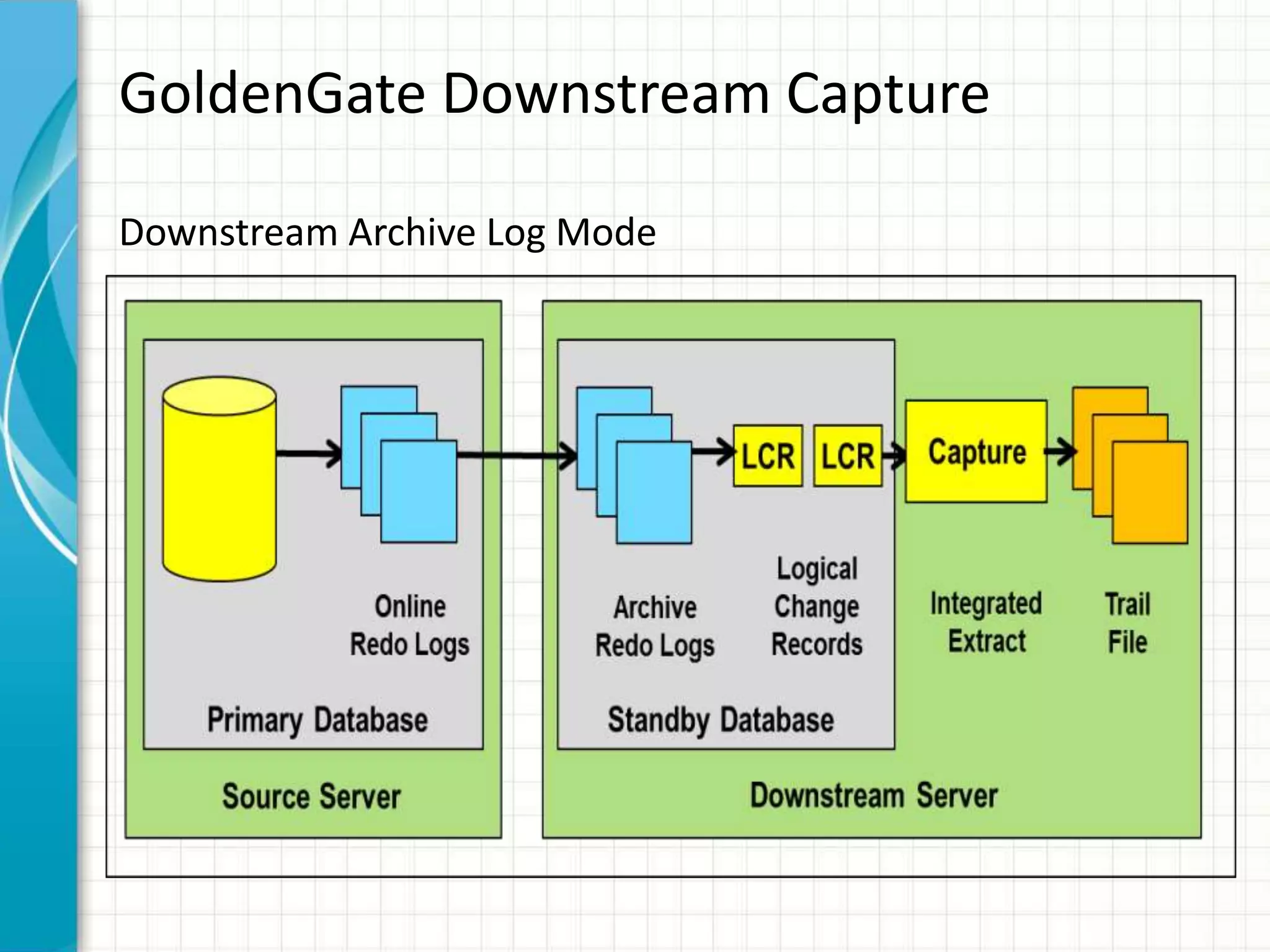

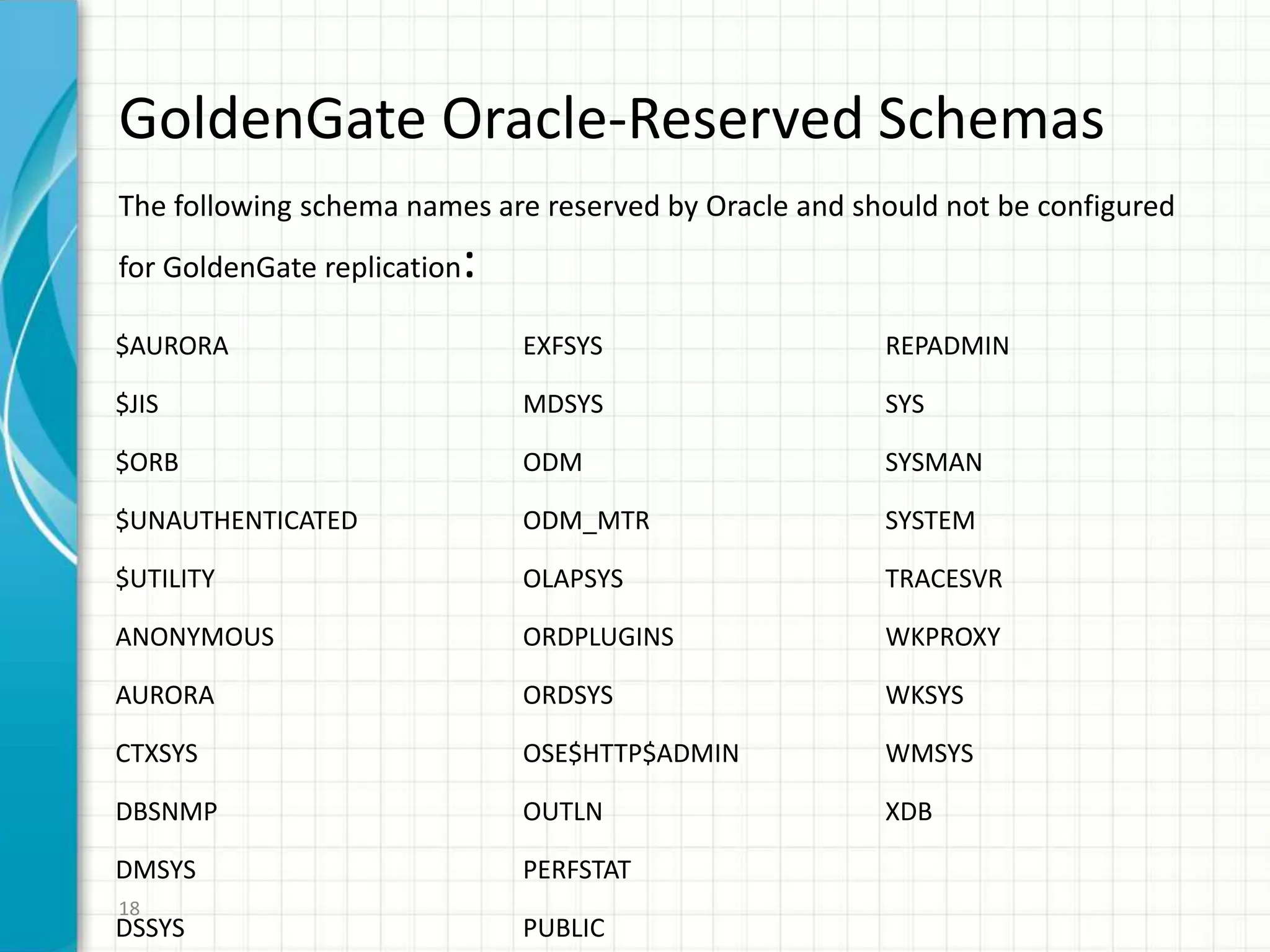

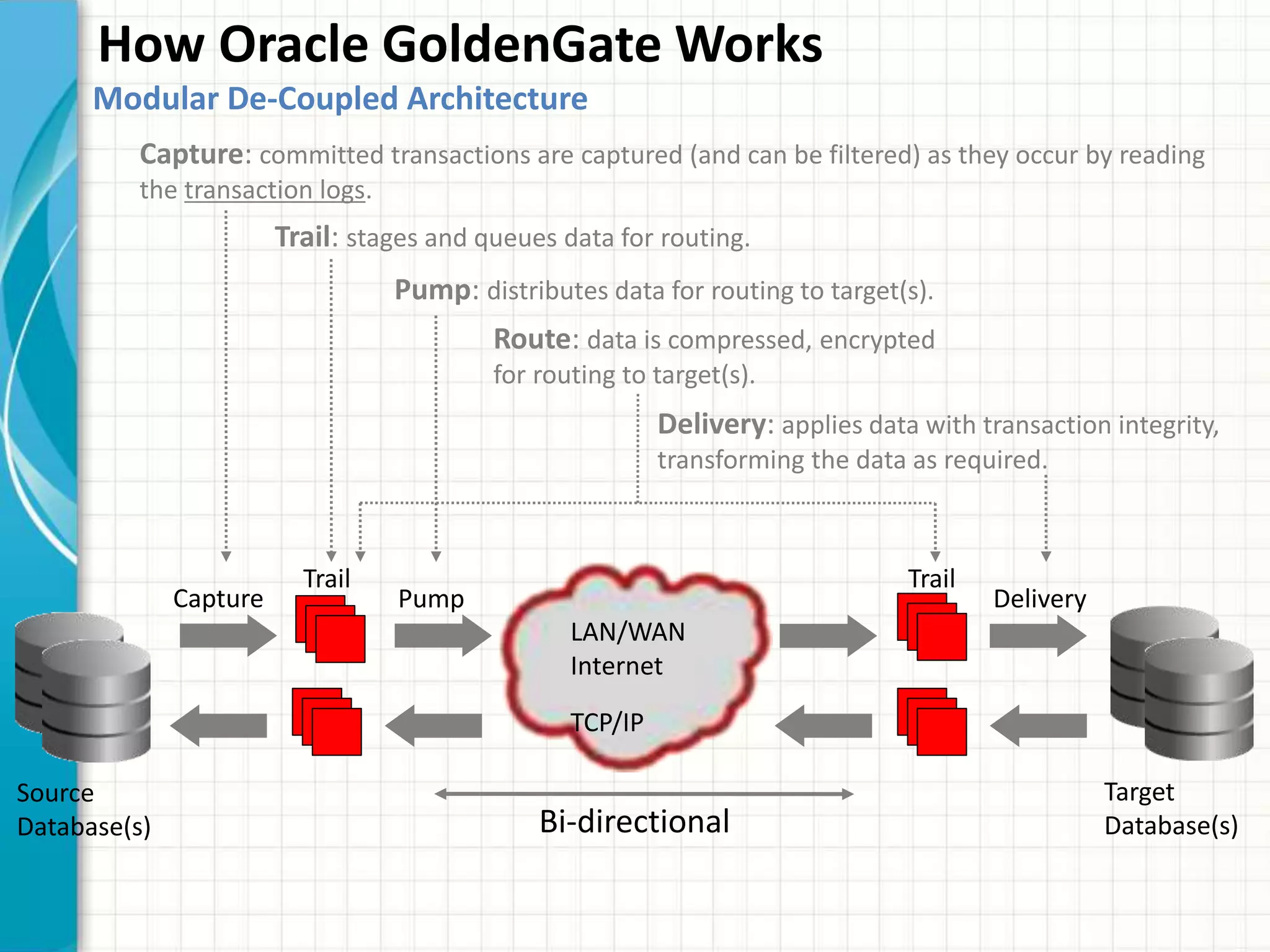

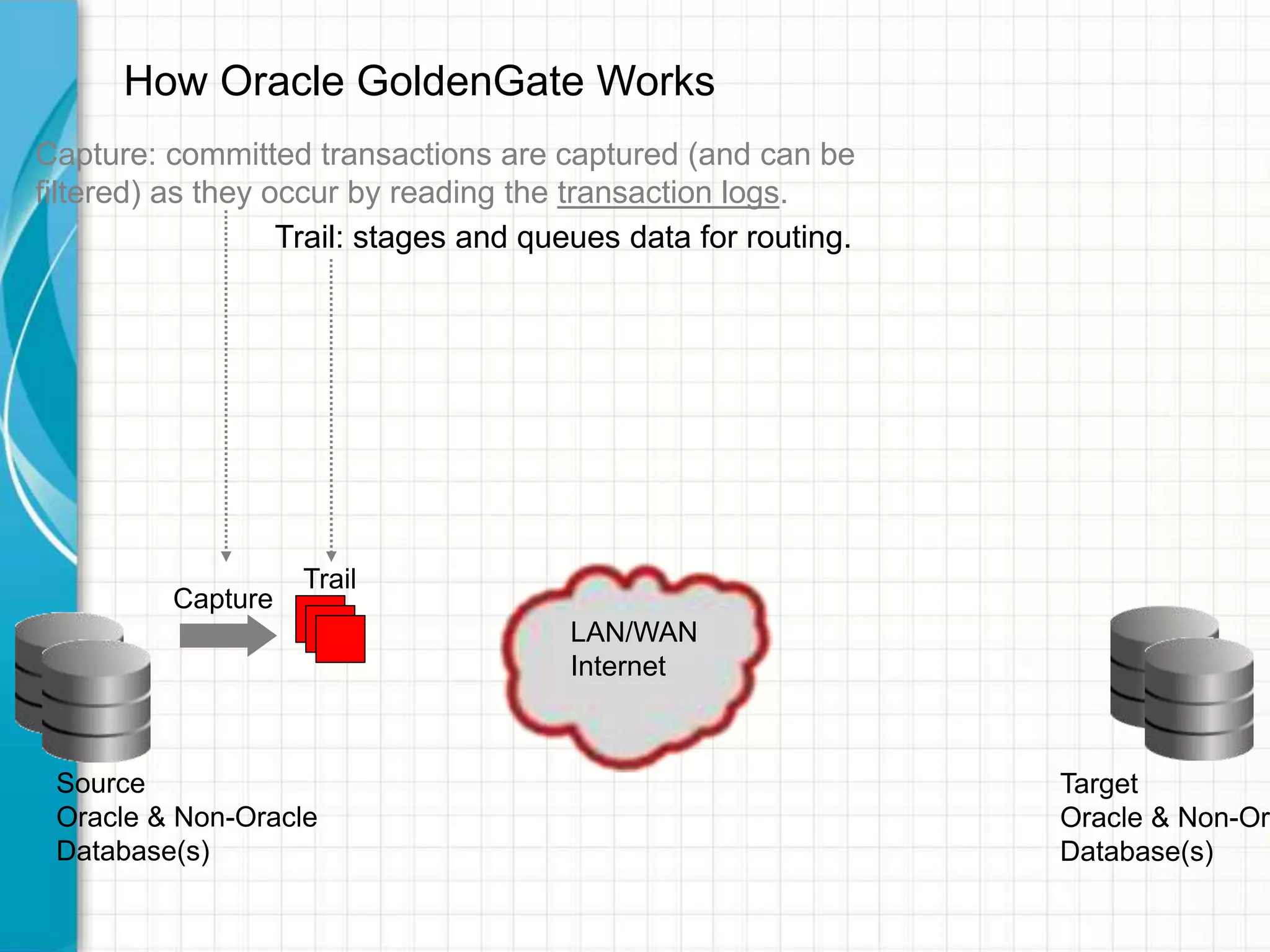

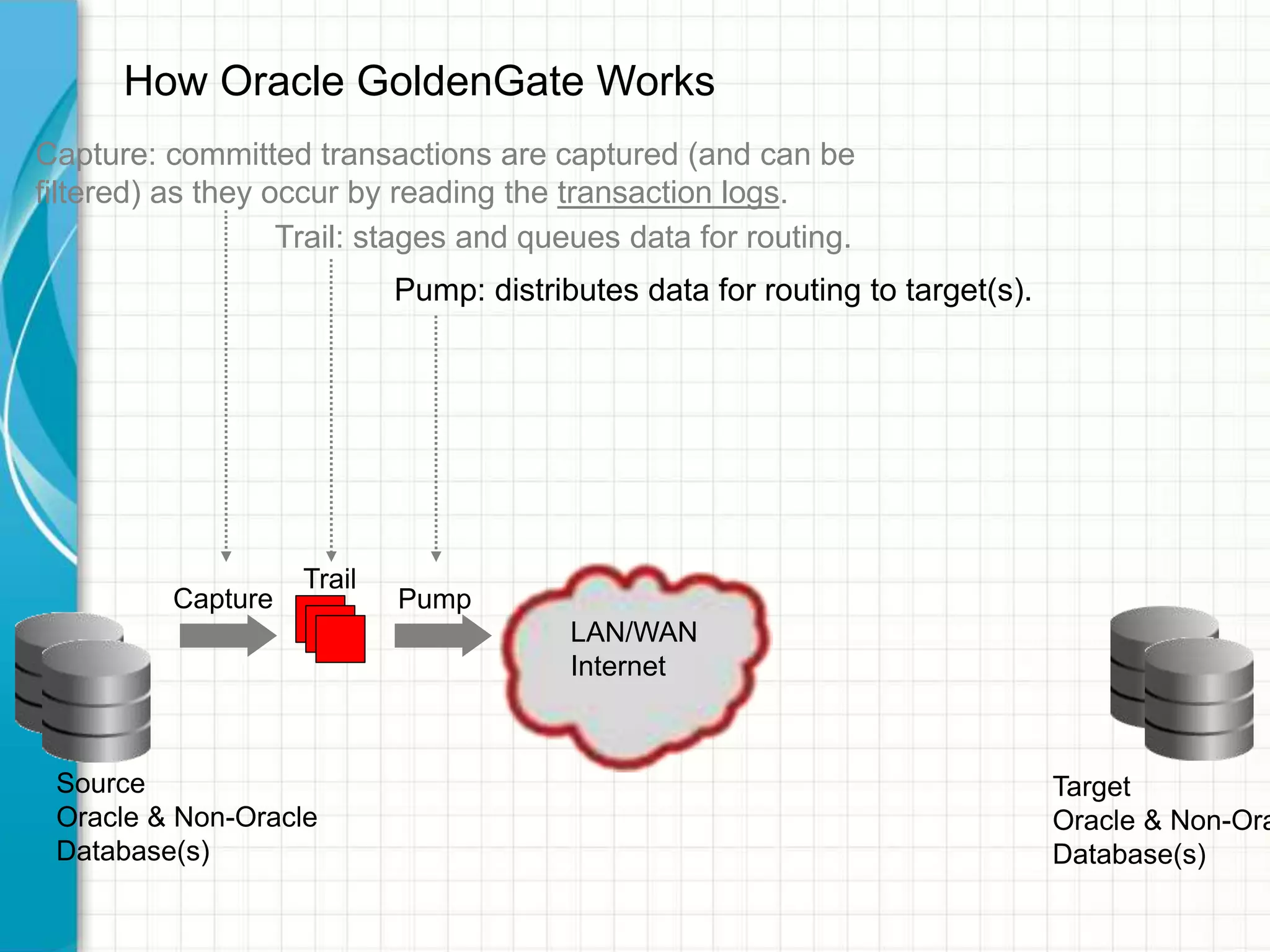

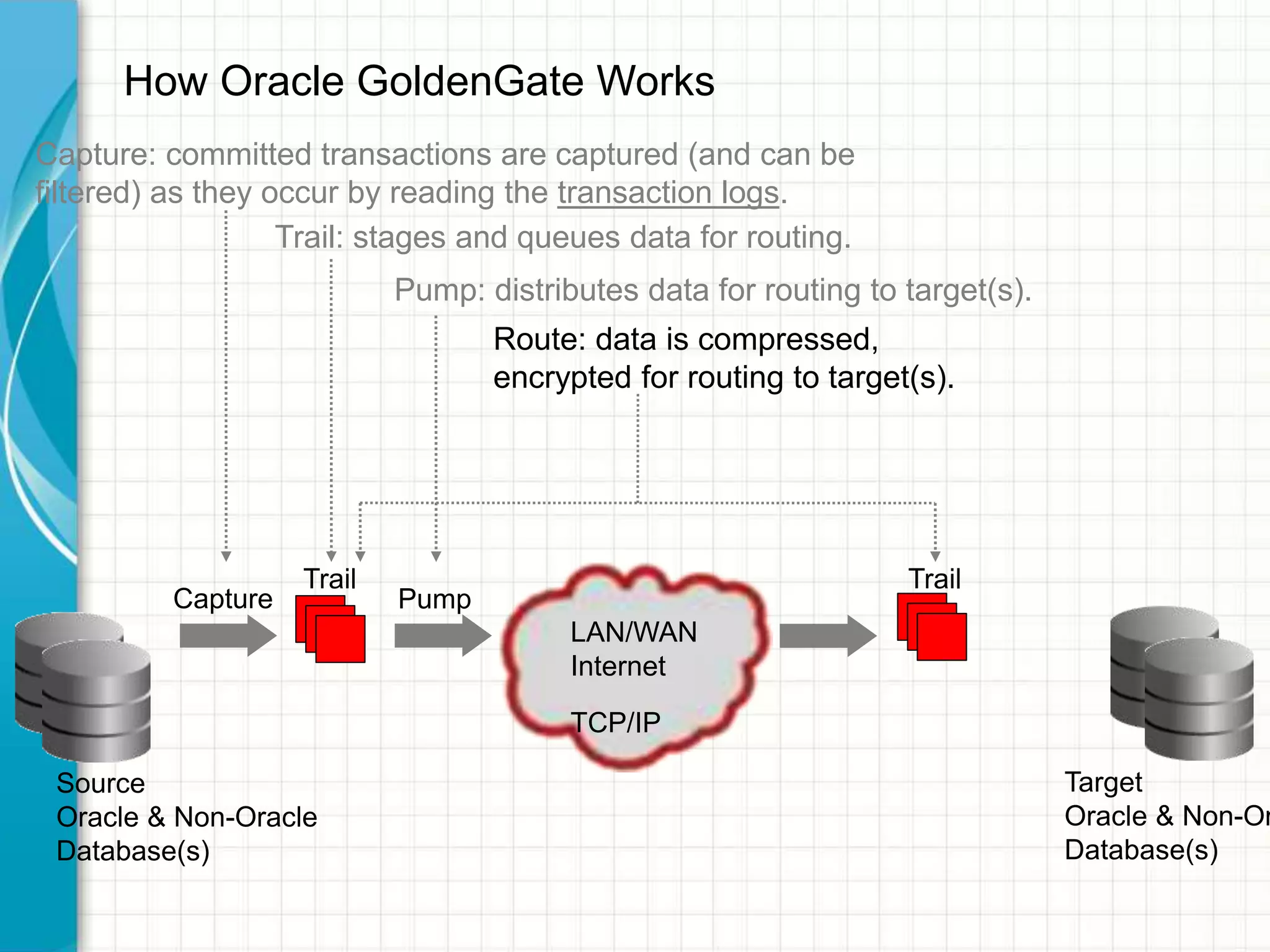

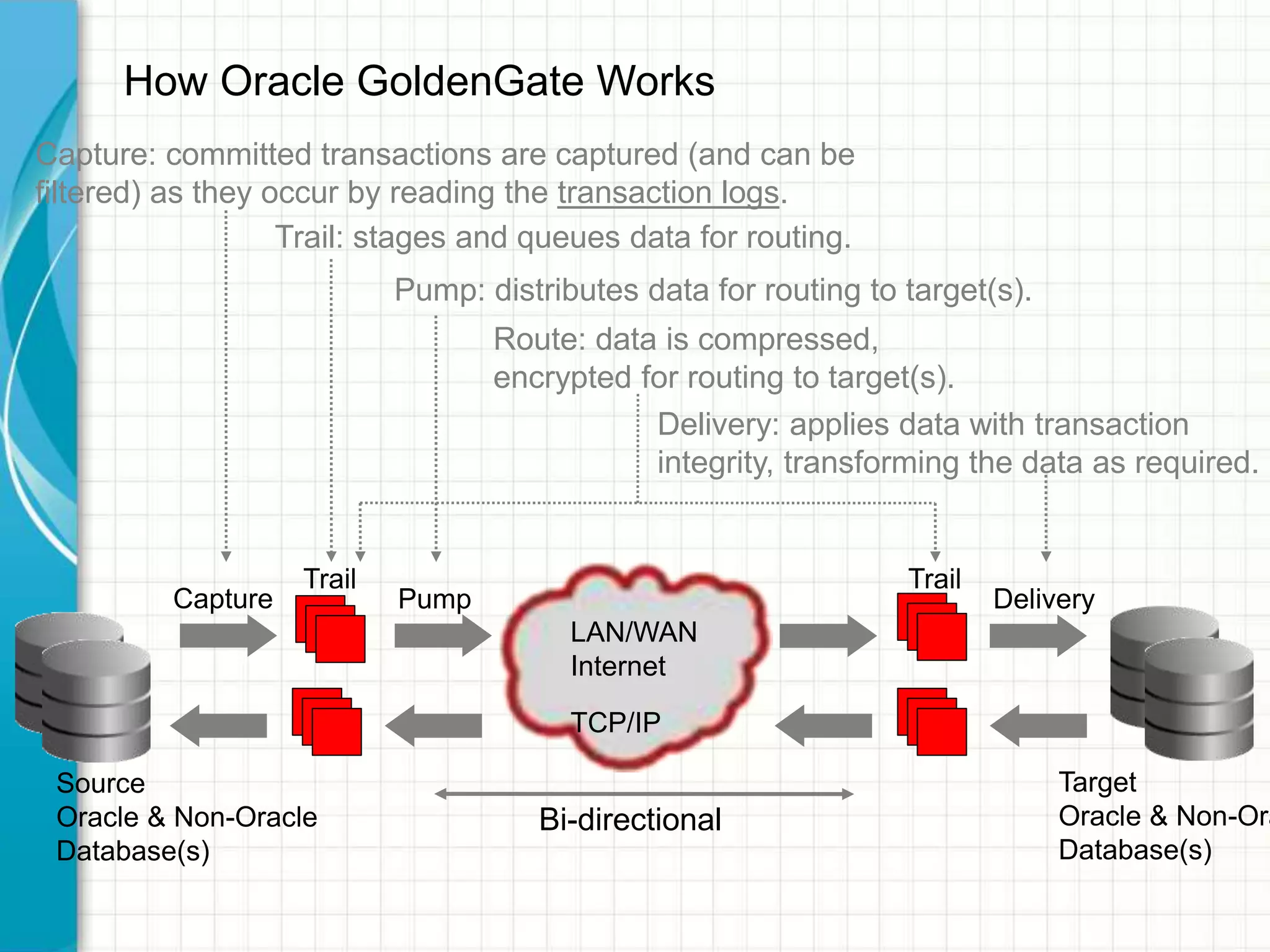

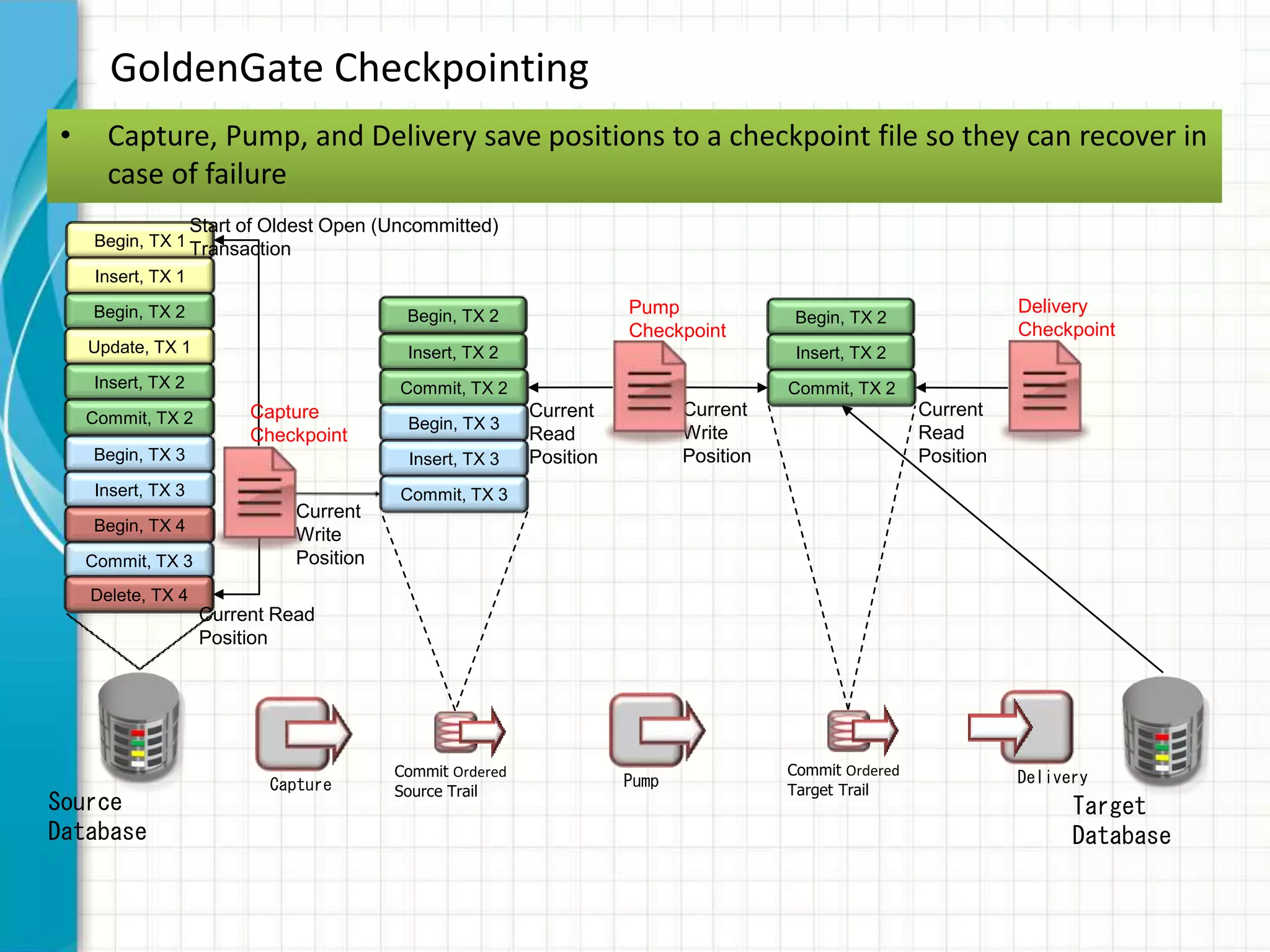

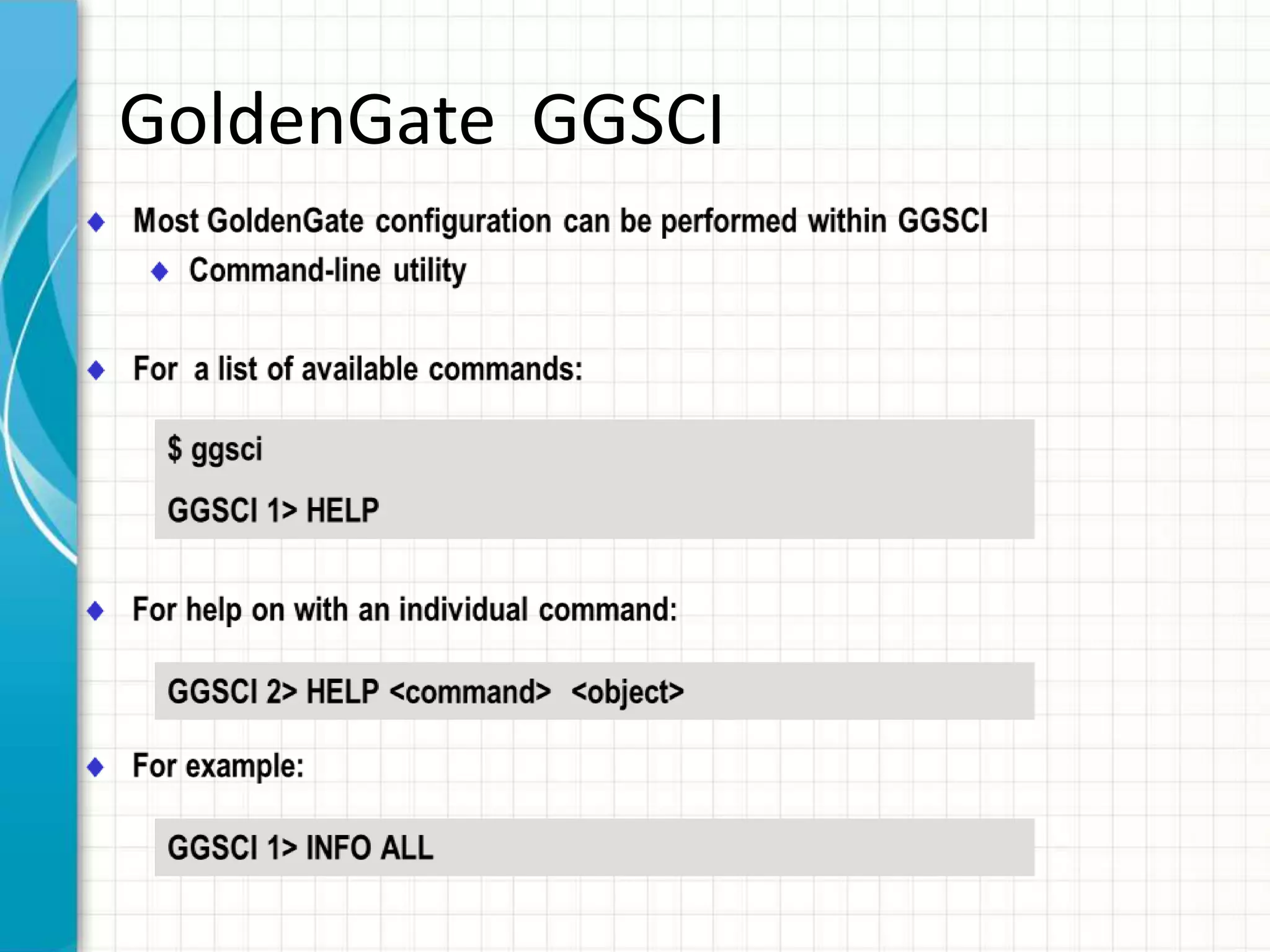

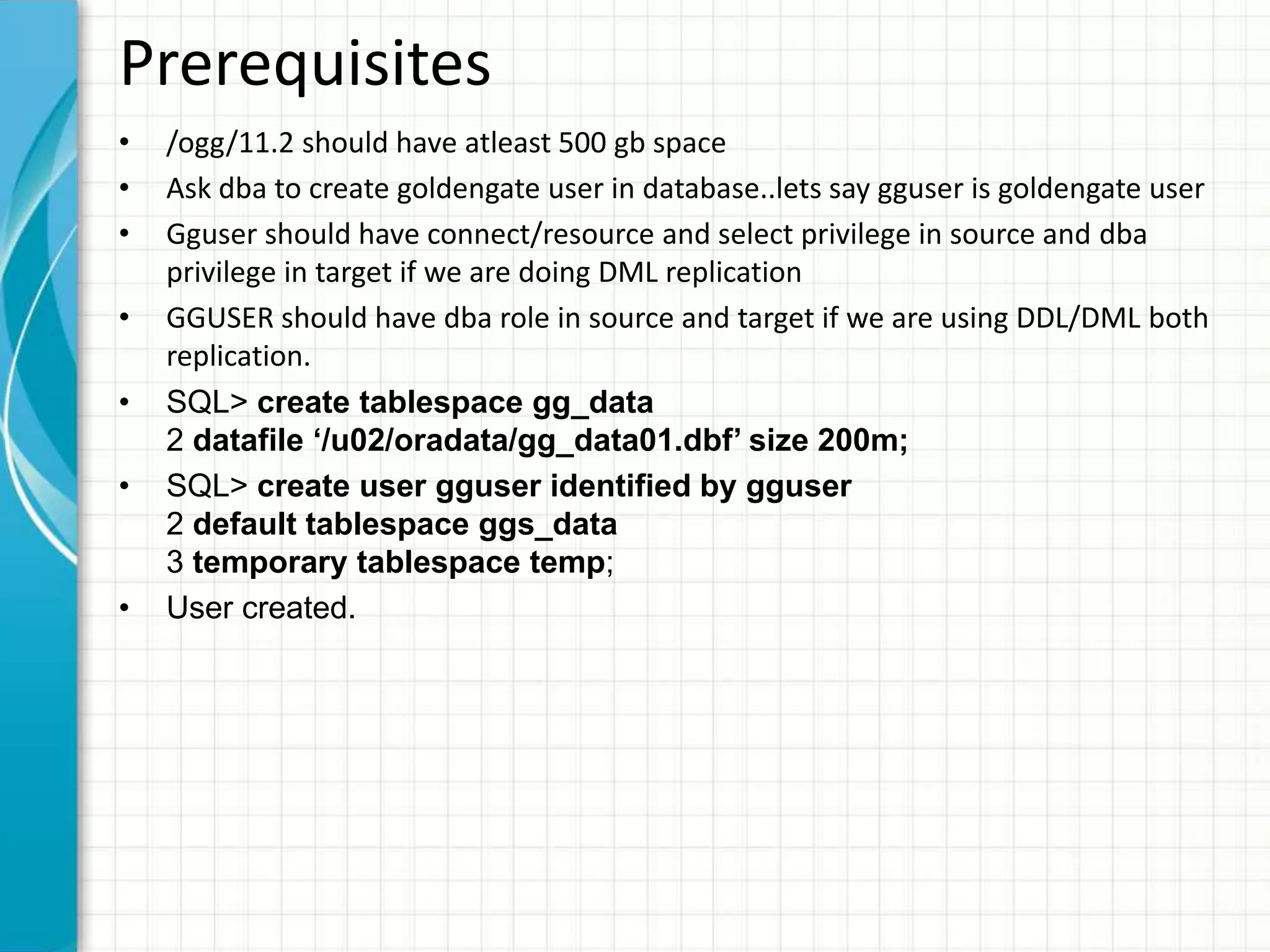

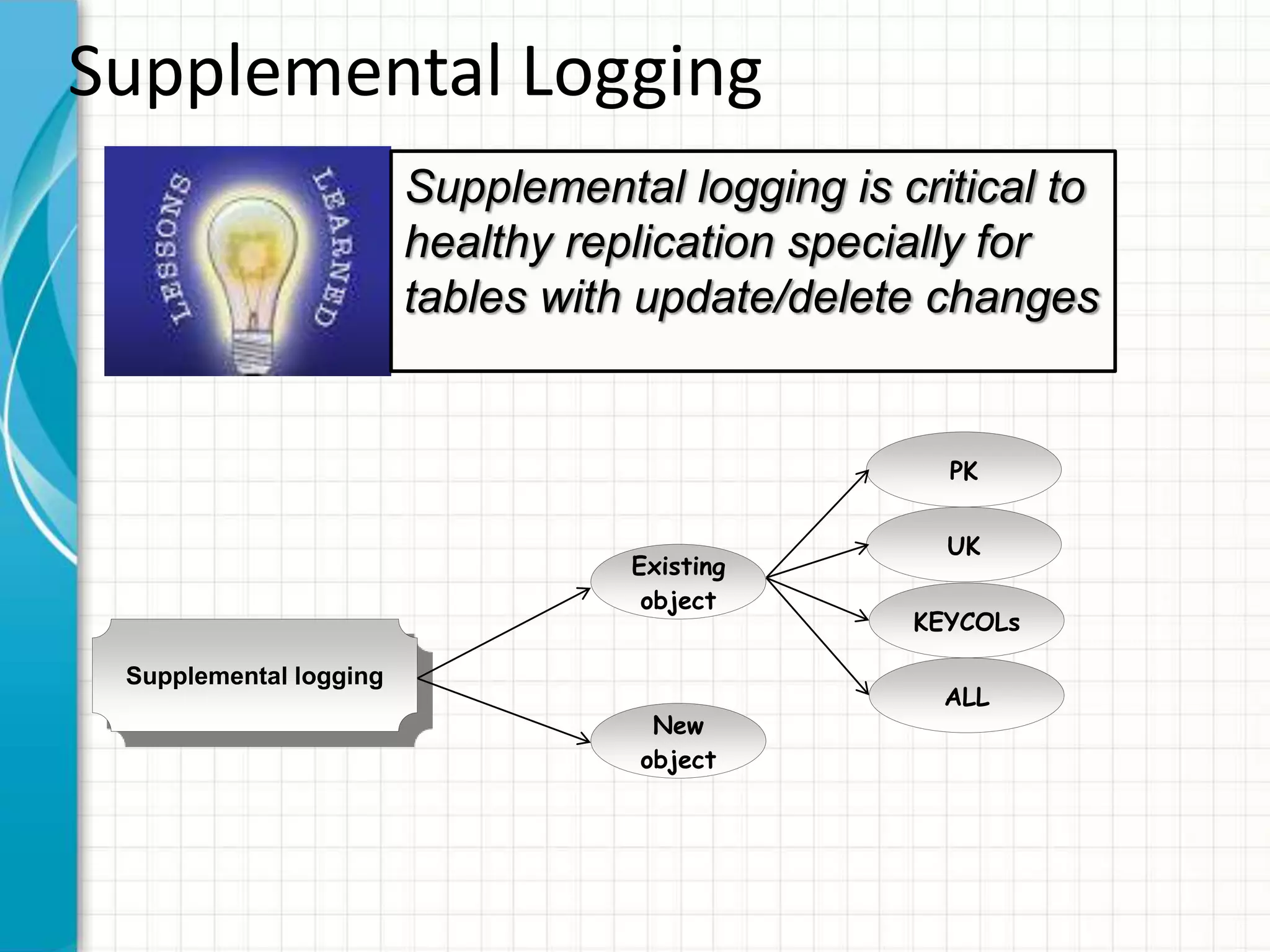

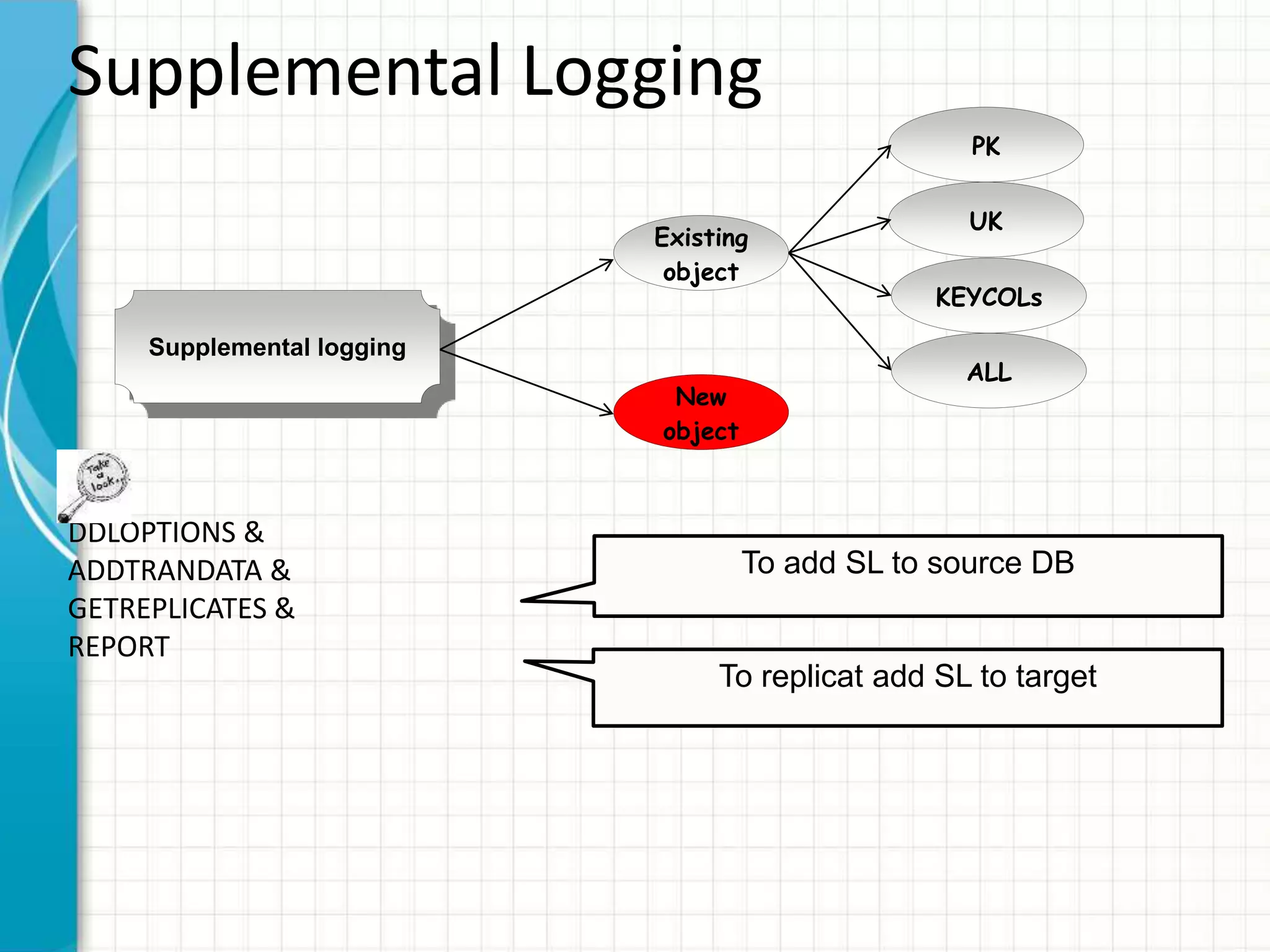

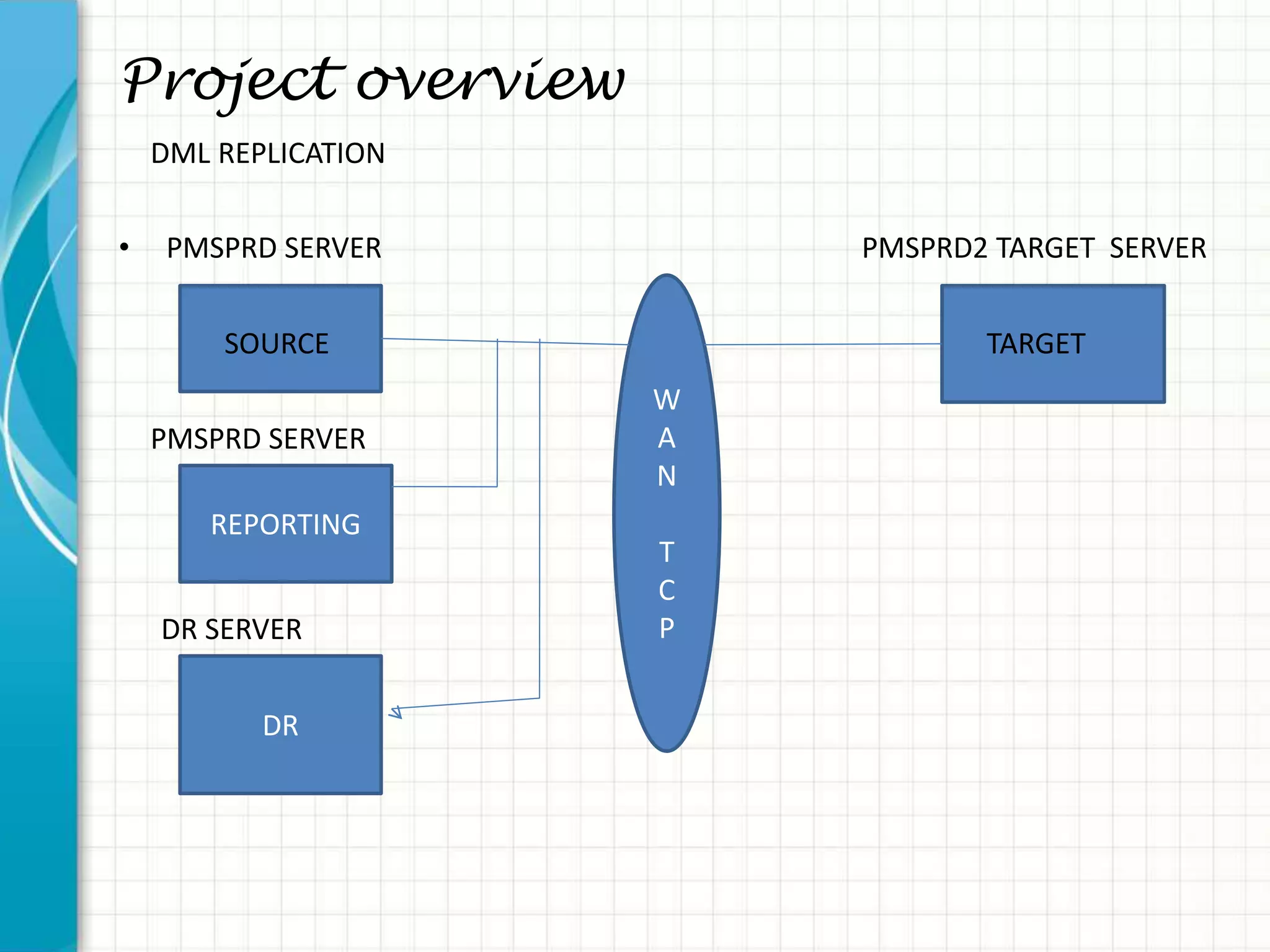

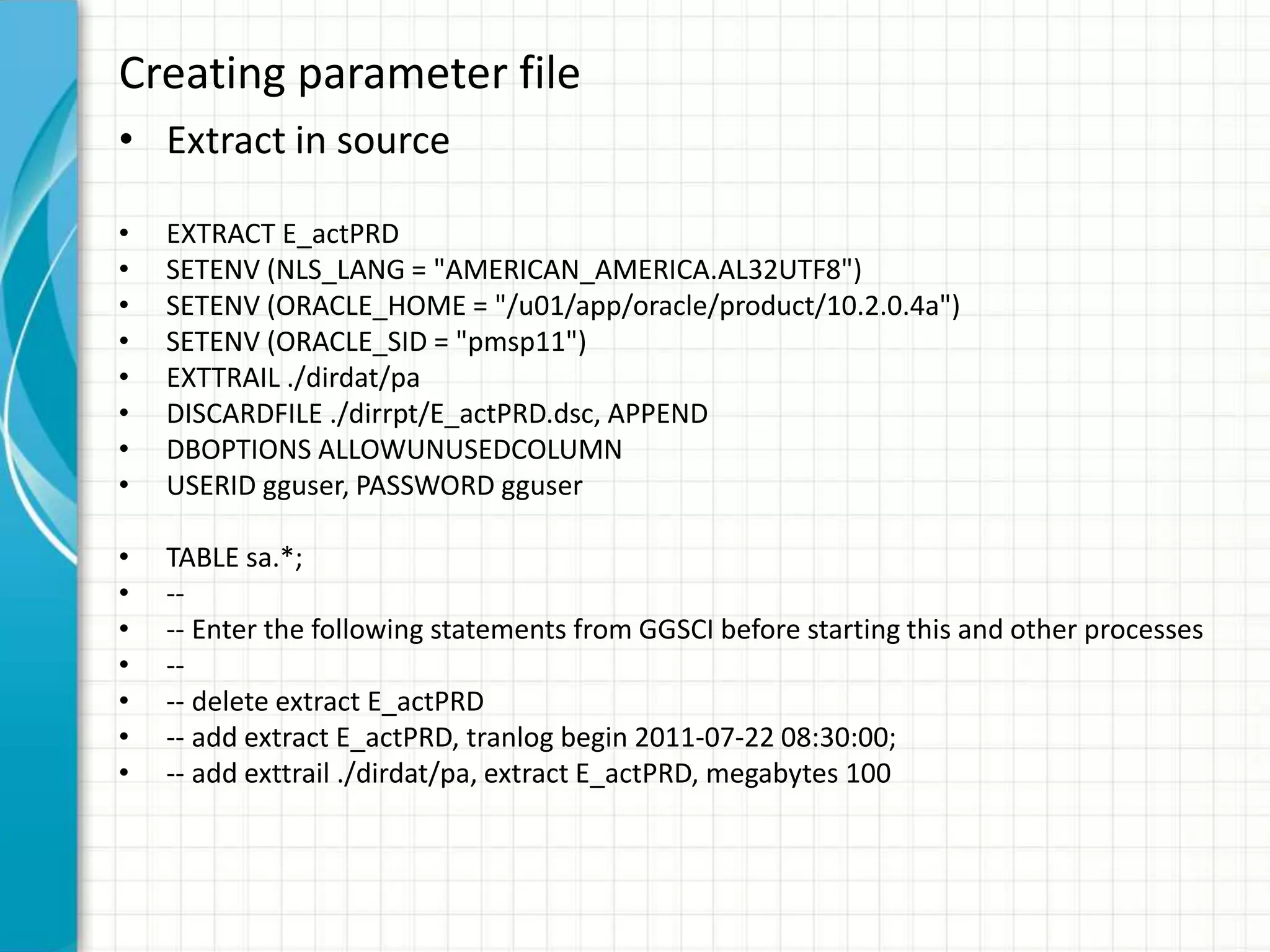

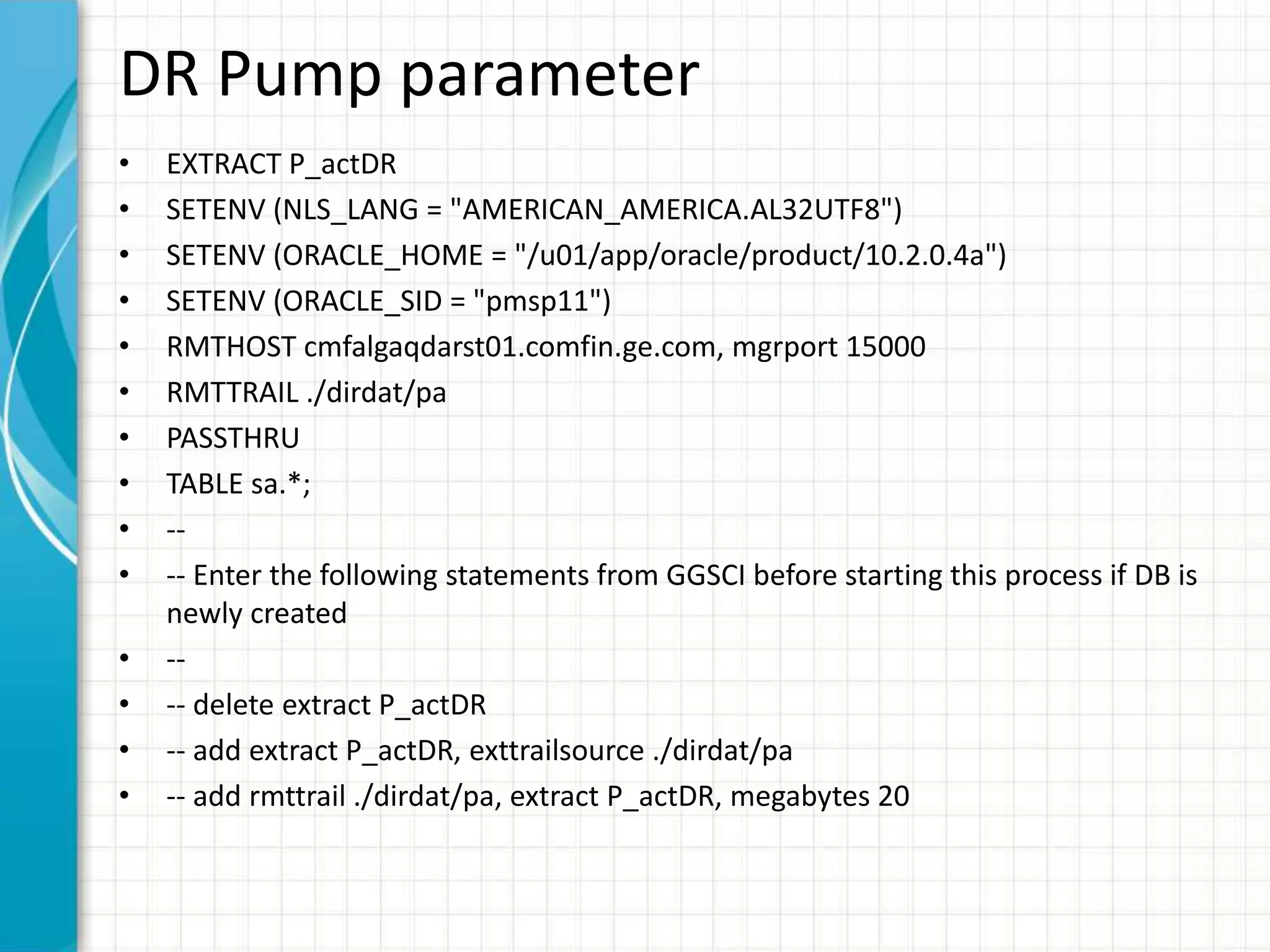

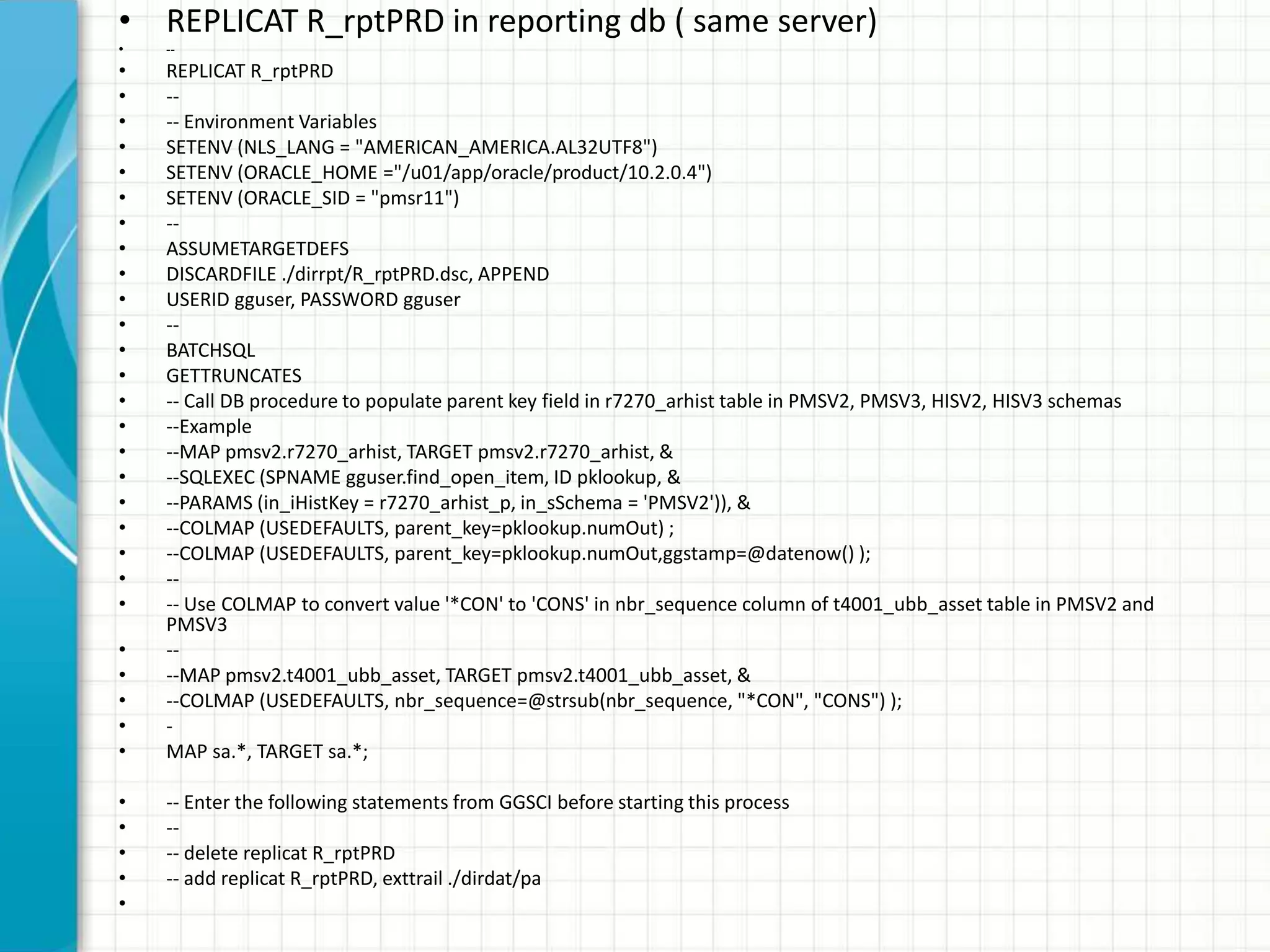

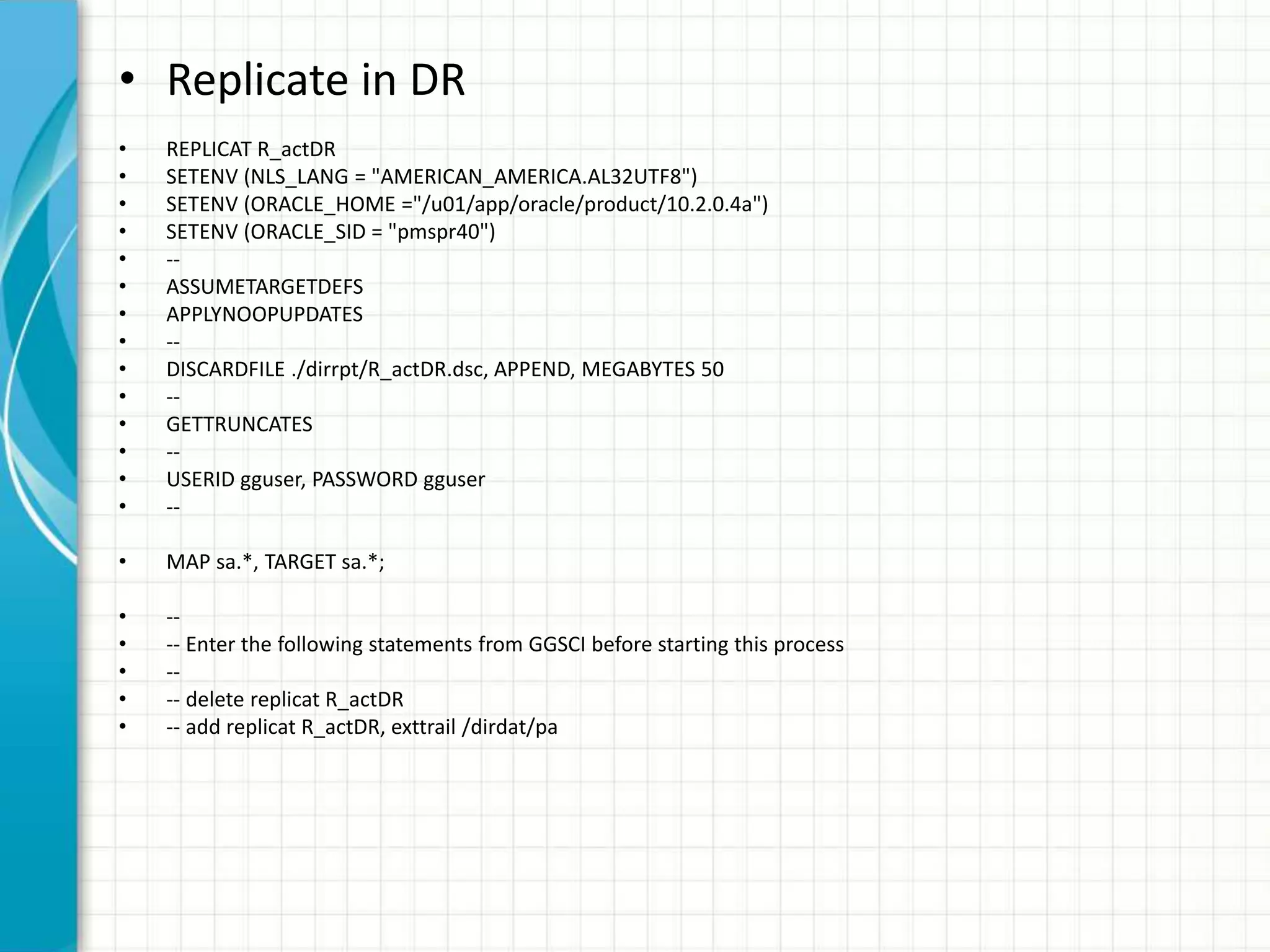

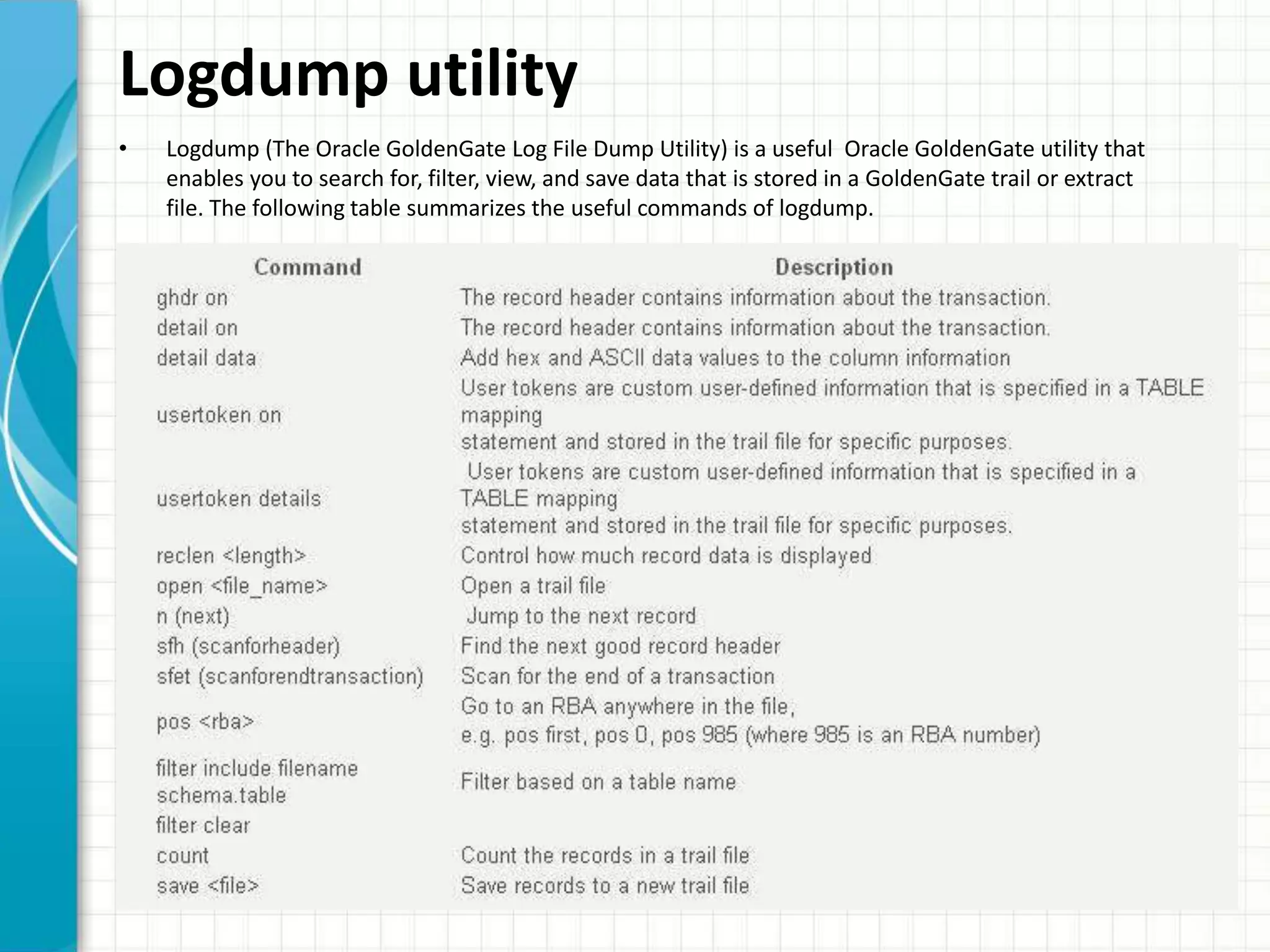

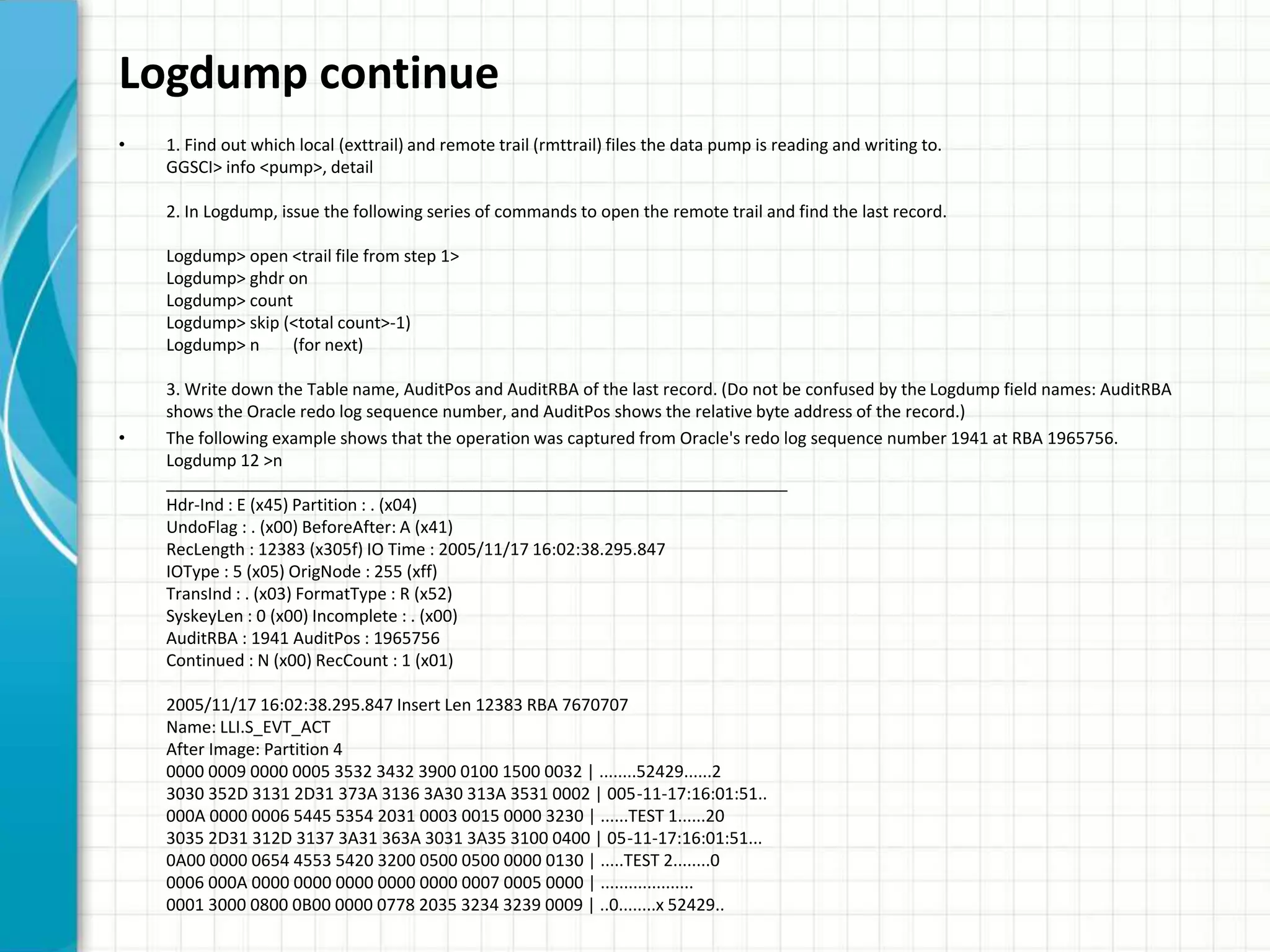

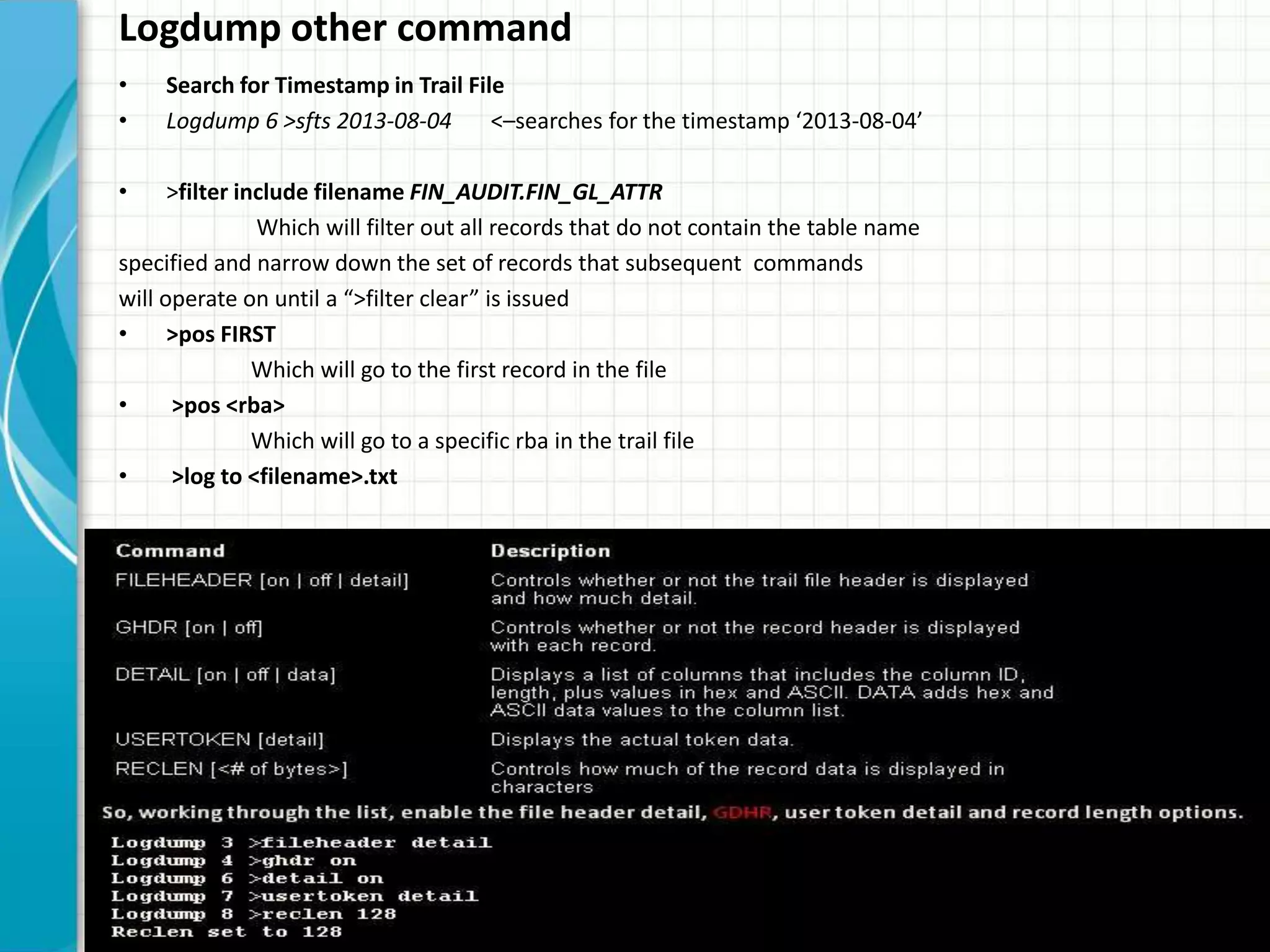

The document provides an overview of Oracle GoldenGate, a heterogeneous replication solution that supports real-time data integration and availability. Key topics include installation prerequisites, configuration options, supported databases, and replication of both DML and DDL. It also covers the core processes Extract, Replicat, and data pump, and details the checkpointing mechanisms and supplemental logging requirements for successful replication.