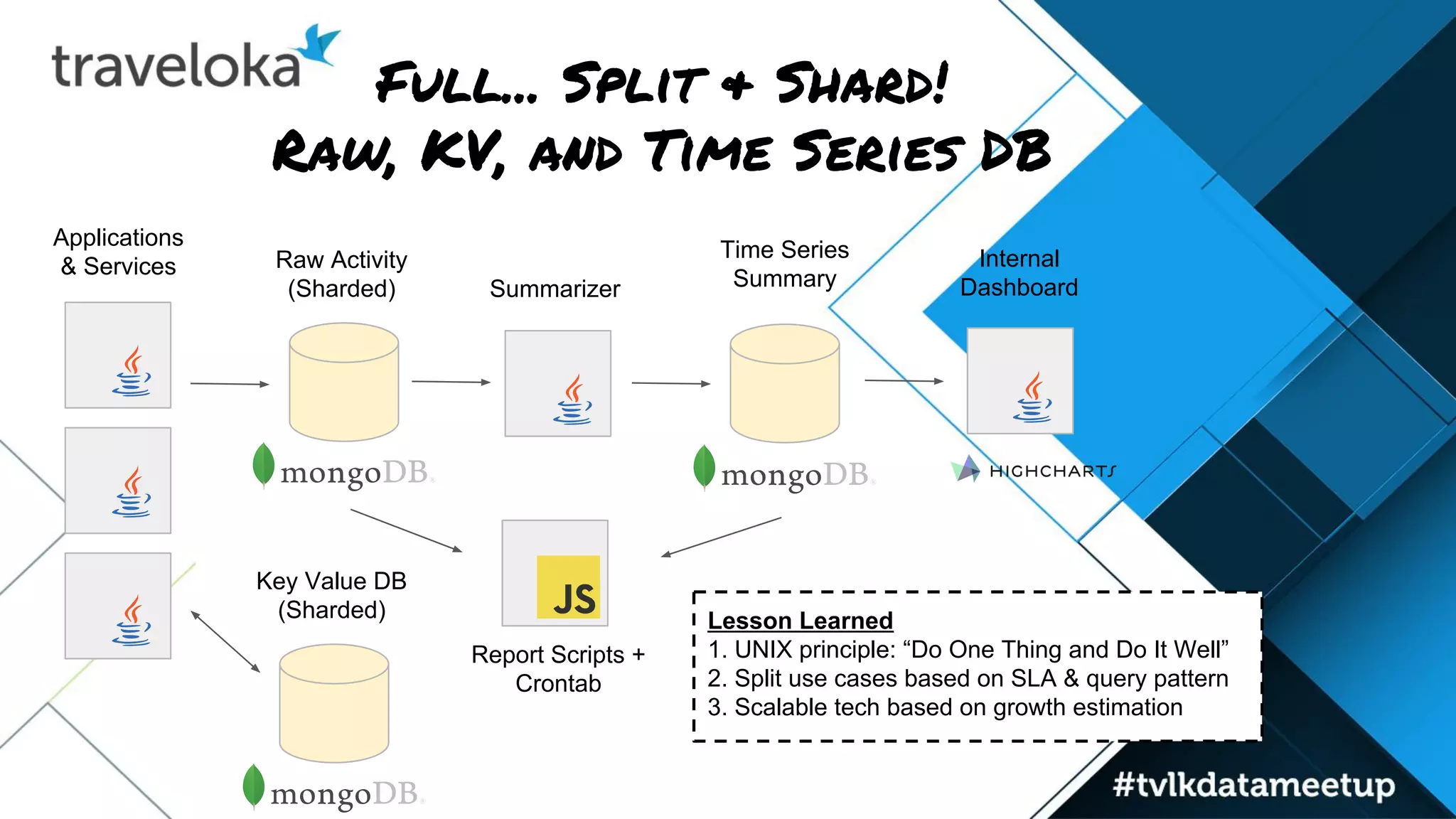

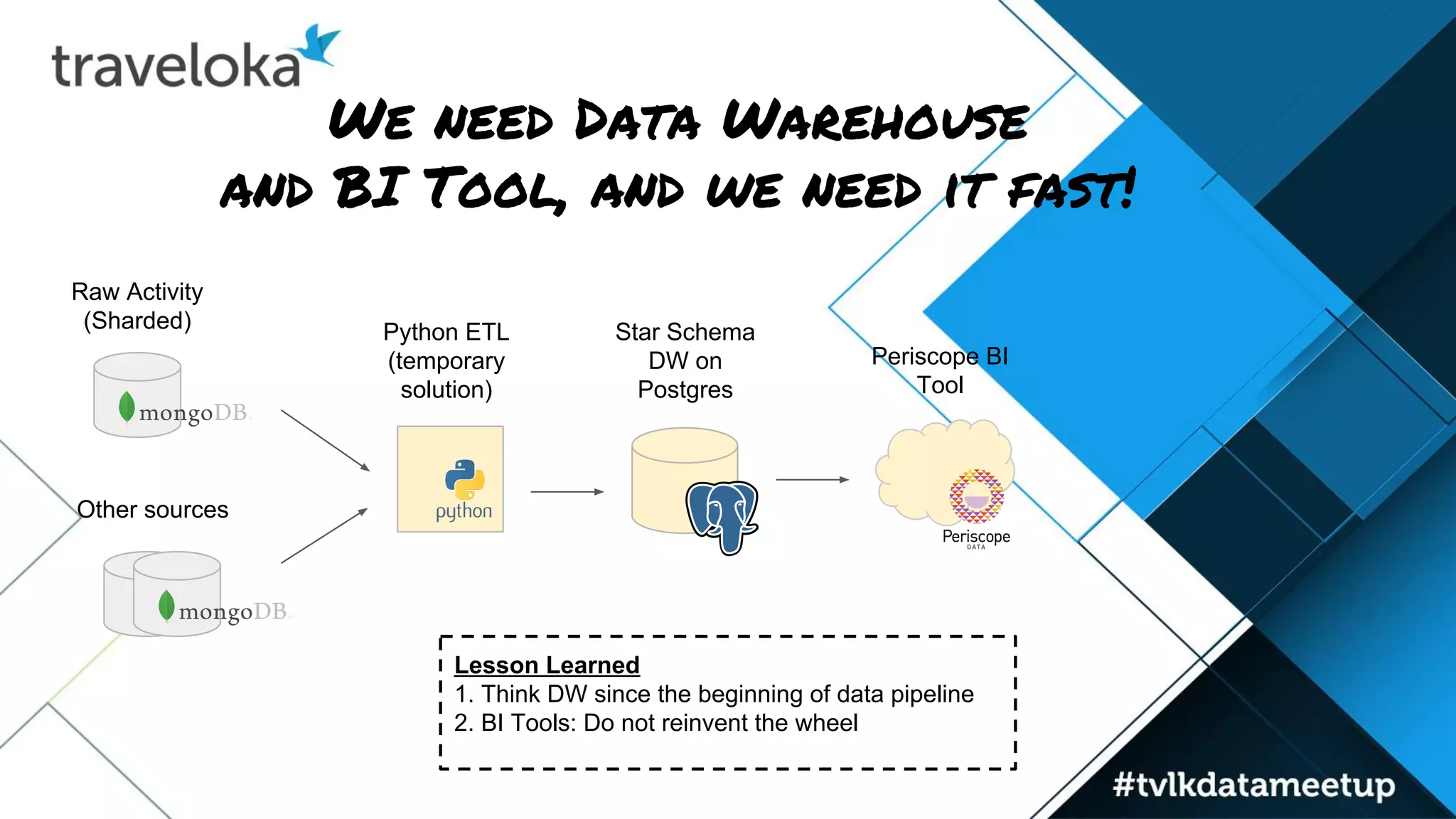

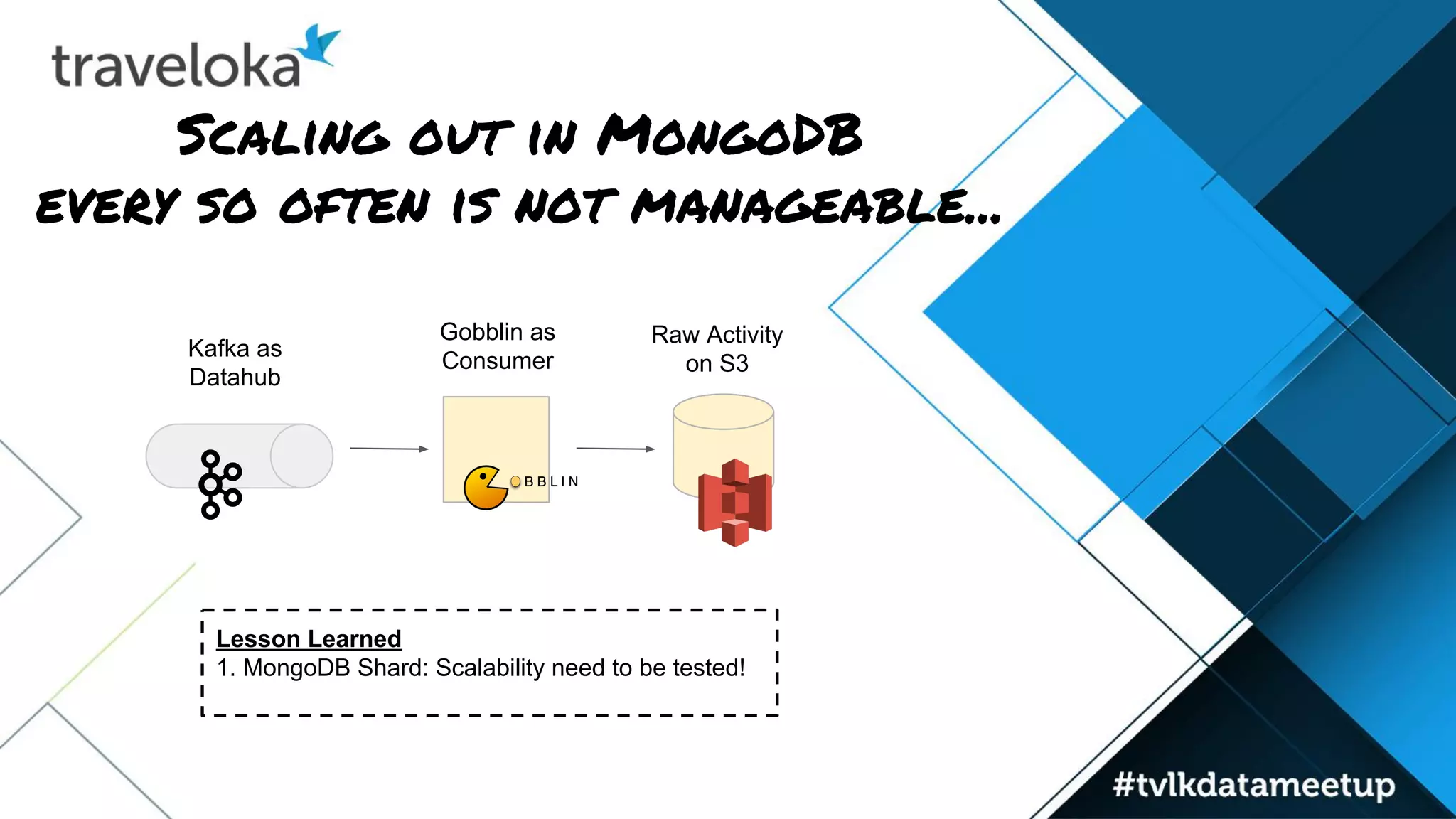

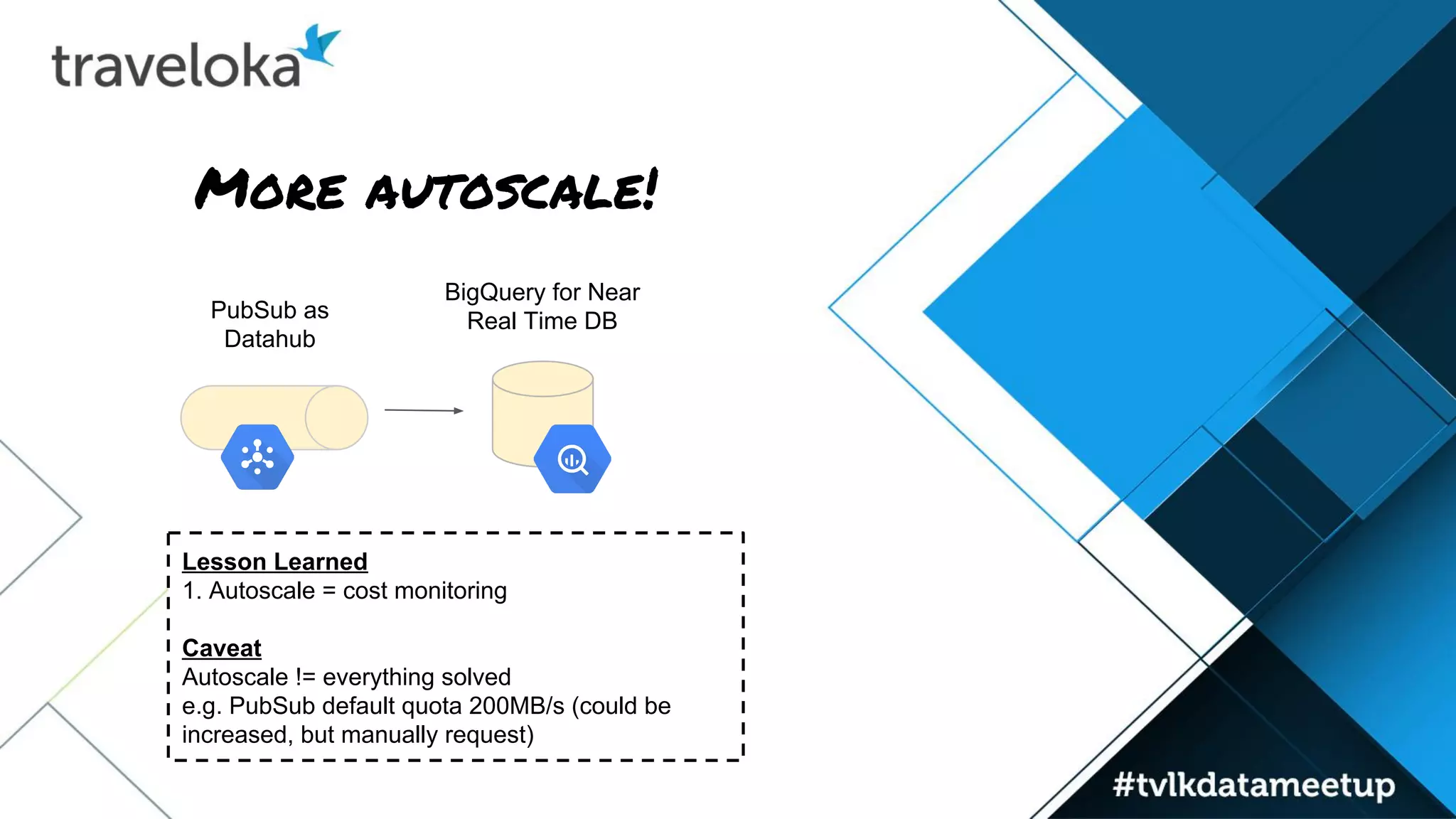

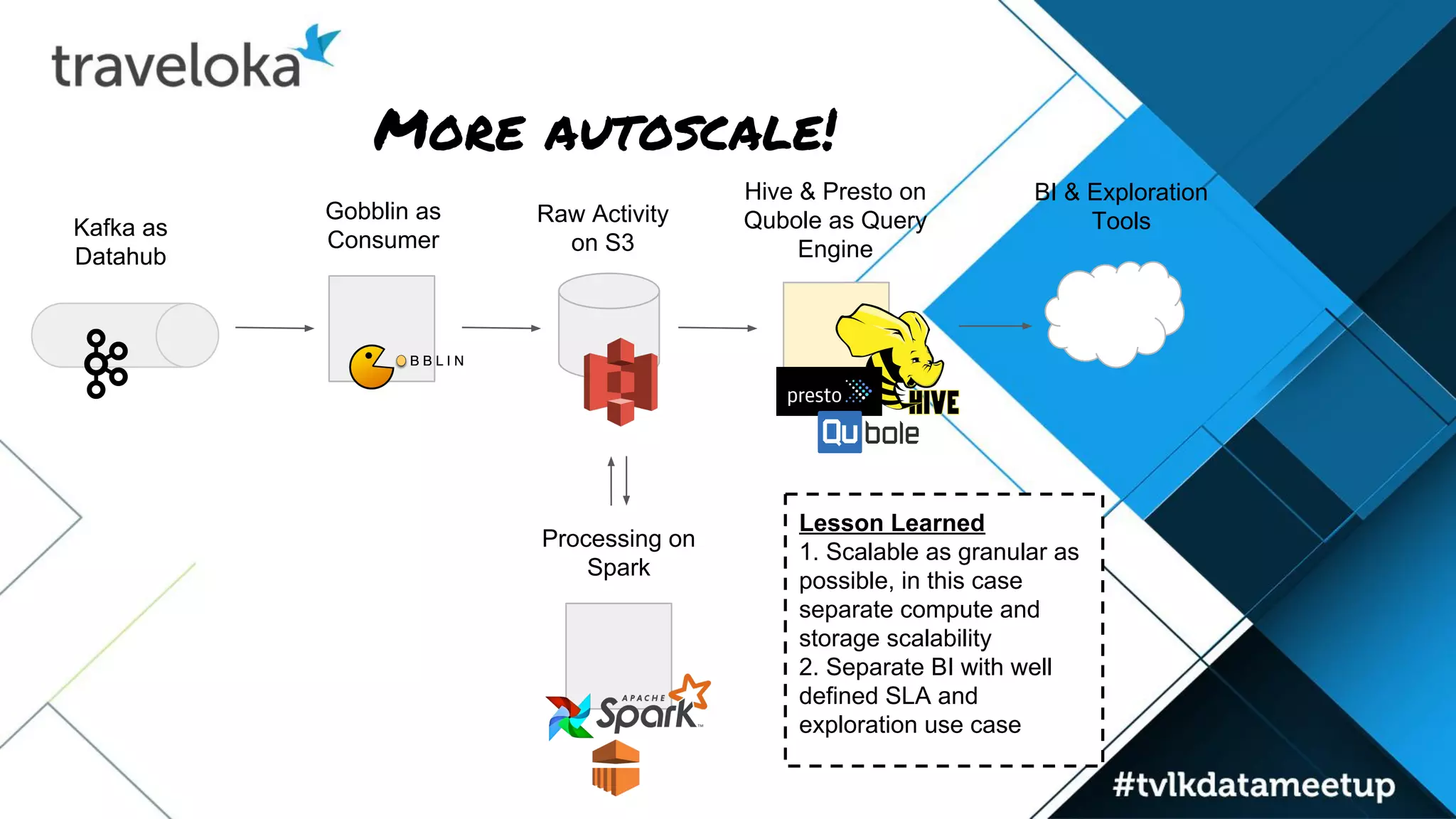

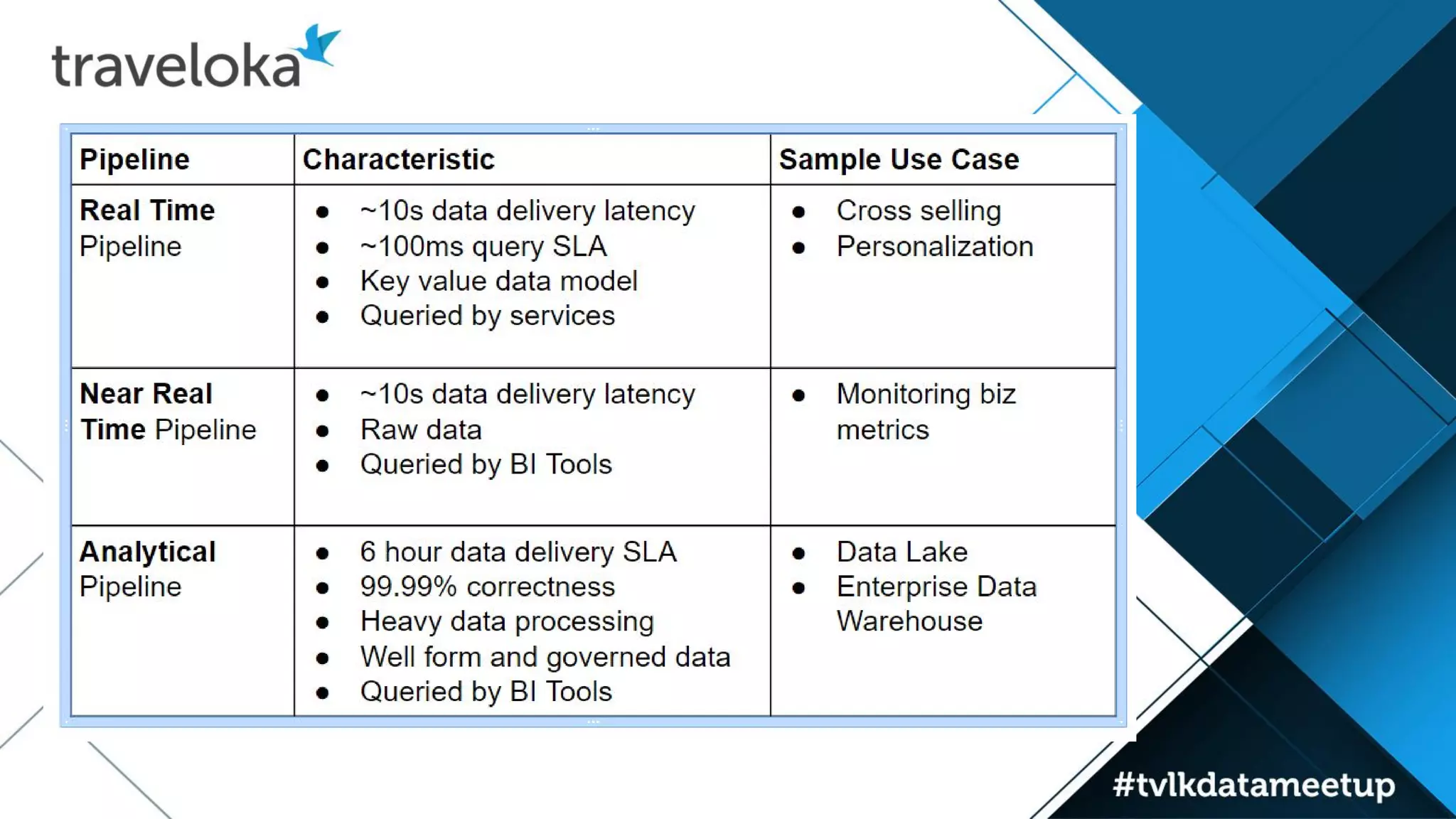

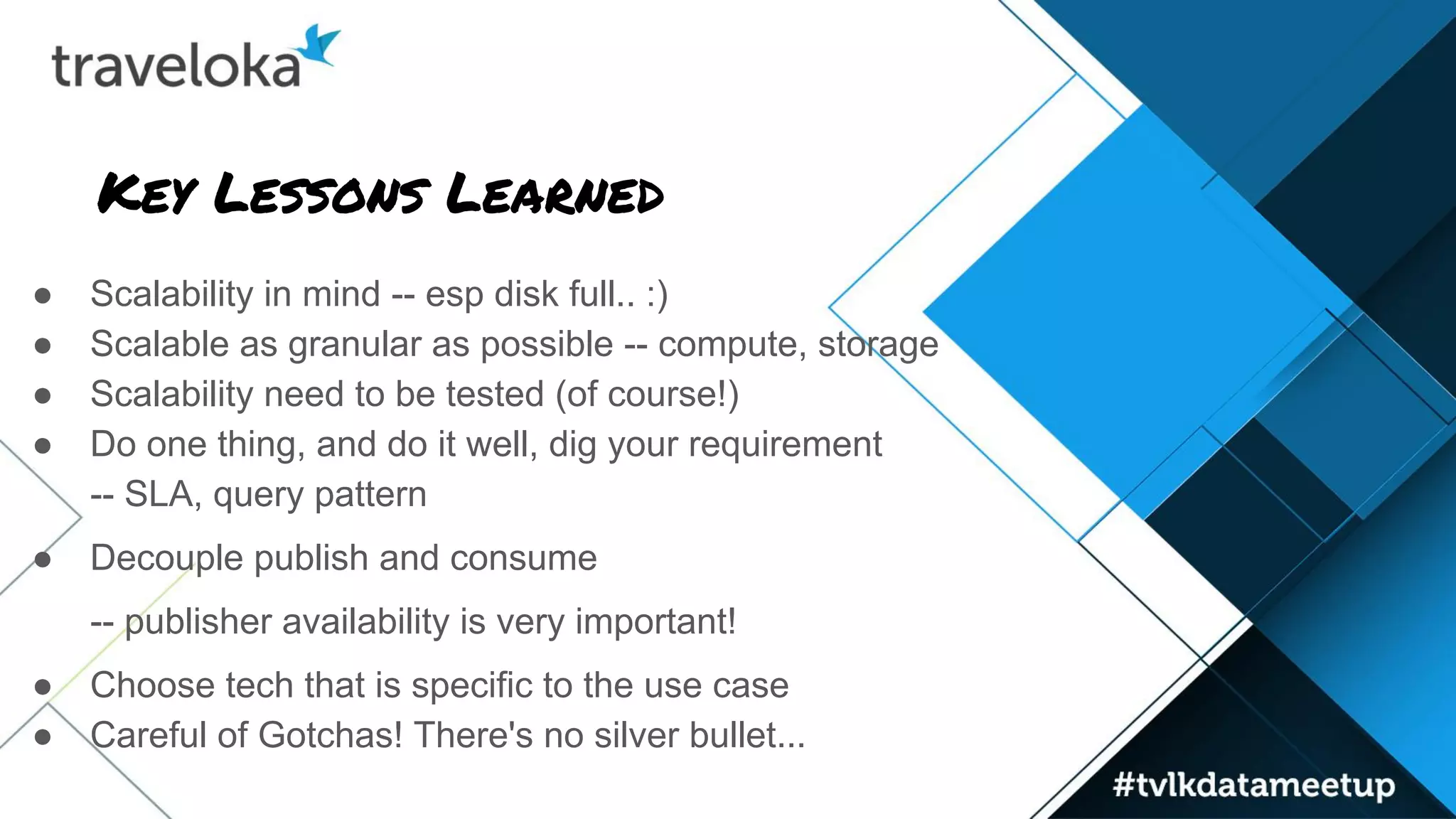

The document details Traveloka's experience building a scalable data pipeline and the lessons learned along the way. It emphasizes that technology and architecture must be chosen carefully to meet scalability and performance requirements. Key lessons include planning the data warehouse early, decoupling data publishing from data consumption, and enabling autoscaling while keeping costs under control. The roadmap for future improvements focuses on simplifying the data architecture so that data analysts have a single entry point to the data, without sacrificing reliability or speed.